A Method of Inter-frame Prediction in Hybrid Video Coding Standard

An inter-frame prediction method, applicable to digital video signal modification, electrical components, image communication, and related fields, that addresses problems such as poor model prediction and improves inter-frame prediction performance.

Examples

Specific Embodiment 1

[0039] Embodiment 1: The inter-frame prediction method in the hybrid video coding standard described in this embodiment is used to describe the deformation motion present in a video sequence, and it can be used in merge mode, skip mode, or inter mode. The method is implemented as follows:

[0040] Step 1: Obtain the motion information of several adjacent coded blocks around the current coding block. The size of the current coding block is W*H, where W is its width and H is its height. The motion information includes a reference index and a motion vector. These surrounding blocks are referred to below as adjacent coded blocks;

[0041] Step 2: Obtain the reference index of each division unit in the current coding block from the reference indices of the adjacent coded blocks obtained in Step 1;

[0042] Step 3: Process the motion vectors of the adjacent coded blocks according to the reference ...
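The data flow of Steps 1 and 2 can be sketched roughly as follows. This is an illustration only: the type `MotionInfo`, the function `derive_ref_index`, and in particular the majority-vote rule are assumptions, since the text shown here does not state how the reference index of a division unit is derived from the adjacent coded blocks.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class MotionInfo:
    """Motion information of one adjacent coded block (Step 1)."""
    ref_idx: int             # reference index
    mv: Tuple[float, float]  # motion vector (horizontal, vertical)


def derive_ref_index(neighbors: List[Optional[MotionInfo]]) -> Optional[int]:
    """Hypothetical Step 2 rule: most common reference index among neighbors.

    Ties resolve to the smallest index. Returns None when no neighbor
    carries motion information (None entries mark such neighbors).
    """
    available = [n.ref_idx for n in neighbors if n is not None]
    if not available:
        return None
    counts = Counter(available)
    best = max(counts.values())
    return min(idx for idx, count in counts.items() if count == best)
```

A neighbor without motion information (for example, an intra-coded block) is represented as `None` here; a real codec would likely apply the derived index per division unit rather than once per block.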

Specific Embodiment 2

[0043] Embodiment 2: The inter-frame prediction method in the hybrid video coding standard described in this embodiment is characterized as follows:

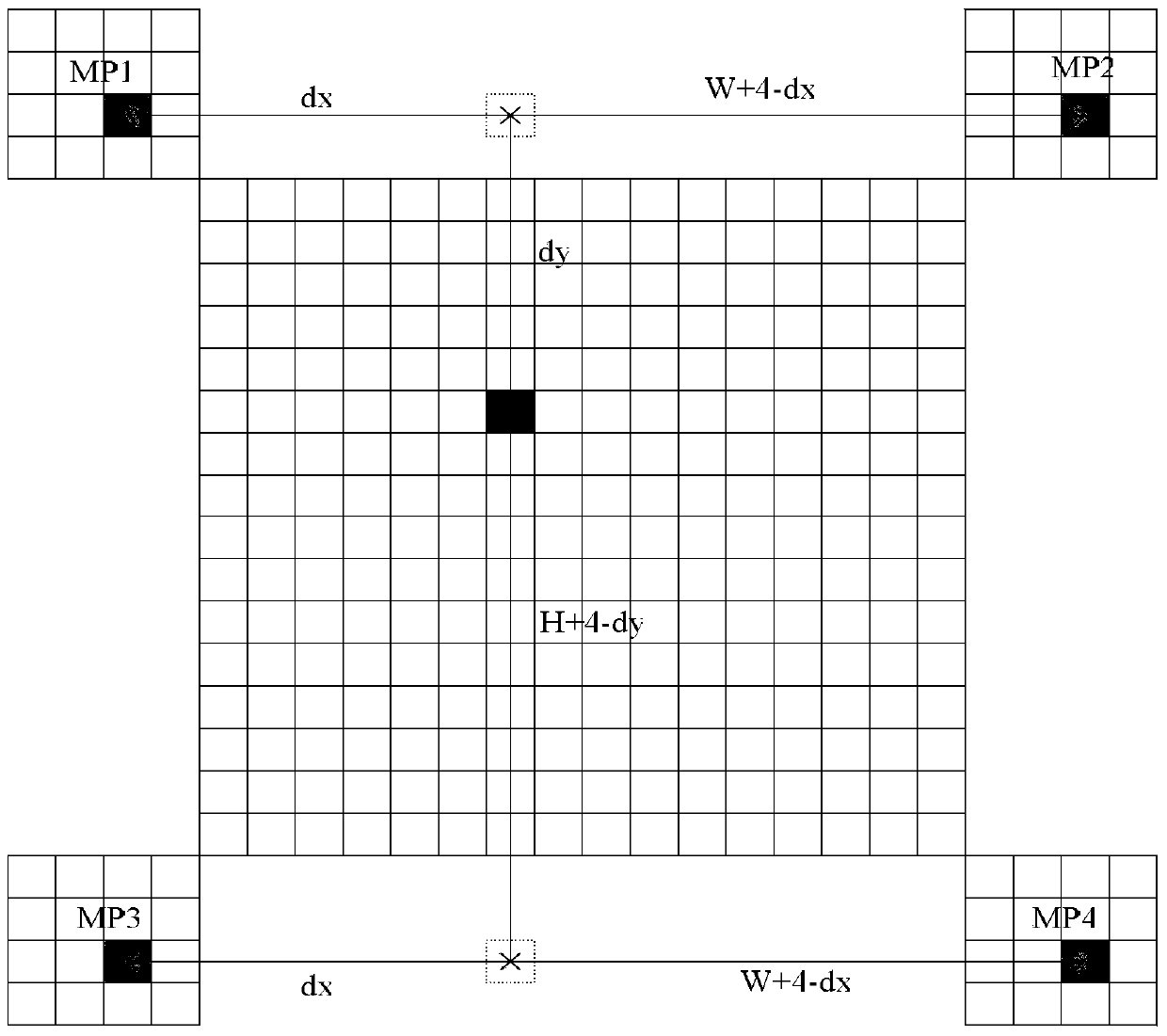

[0044] In Step 1, the adjacent coded blocks are the adjacent blocks at the four corner positions of the current coding block, or the adjacent blocks at the four corner positions and at the center point of the current coding block. The adjacent coded blocks are spatial neighboring blocks located in the current frame or temporal neighboring blocks located in a temporal reference frame;

[0045] A larger number of adjacent blocks may also be selected to obtain the motion information of the current coding block, and adjacent blocks at positions other than the four corners and the center point are also supported;

[0046] For example, the four adjacent coded blocks at the four corners are selected from the upper-left, upper-right, lower-left, and lower-right corners of the current...
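As a rough illustration of the four corner positions, the sketch below returns one sample position diagonally outside each corner of a W*H block. The function name `corner_neighbor_positions`, the coordinate convention (origin at the top-left of the frame, `x0`/`y0` as the block's top-left sample), and the choice of diagonal positions are all assumptions; the text shown here does not give exact coordinates.

```python
from typing import Dict, Tuple


def corner_neighbor_positions(x0: int, y0: int, w: int, h: int) -> Dict[str, Tuple[int, int]]:
    """Return one assumed sample position just outside each block corner.

    (x0, y0) is the top-left sample of the current coding block,
    w and h are its width and height in samples.
    """
    return {
        "upper_left": (x0 - 1, y0 - 1),
        "upper_right": (x0 + w, y0 - 1),
        "lower_left": (x0 - 1, y0 + h),
        "lower_right": (x0 + w, y0 + h),
    }
```

Positions below or to the right of the current block are usually not yet coded in the current frame, which is one plausible reason the embodiment also allows temporal neighboring blocks from a reference frame.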

Specific Embodiment 3

[0054] Embodiment 3: In the inter-frame prediction method in the hybrid video coding standard described in this embodiment, the motion vector of each division unit in the current coding block is obtained in Step 3 as follows:

[0055] The motion vector is calculated with a bilinear interpolation model, as follows. Select four adjacent blocks from the adjacent blocks of Step 1; motion information must exist for all four, and the motion information of at least one selected block must differ from that of the other selected blocks. Using the method described in Embodiment 2, obtain the target reference index of each division unit in the current block from the reference indices of the selected adjacent blocks. Using the method described in Embodiment 2, preprocess the motion vectors of the selected adjacent blocks, and then it...
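A minimal sketch of a bilinear motion-vector field is given below, under several assumptions not confirmed by the text shown here: the four motion vectors are treated as anchored at the block corners, each division unit is `unit` x `unit` samples and is evaluated at its center, and the names `corners_usable` and `bilinear_mv_field` are hypothetical. The preprocessing of the neighbor motion vectors is not shown because that part of the description is elided, and the usability check compares motion vectors only, not full motion information.

```python
from typing import List, Optional, Tuple

MV = Tuple[float, float]


def corners_usable(mvs: List[Optional[MV]]) -> bool:
    """All four motion vectors must exist and must not all be identical."""
    if len(mvs) != 4 or any(mv is None for mv in mvs):
        return False
    return len(set(mvs)) > 1


def bilinear_mv_field(tl: MV, tr: MV, bl: MV, br: MV,
                      w: int, h: int, unit: int = 4) -> List[List[MV]]:
    """Interpolate one motion vector per division unit of a w x h block.

    tl, tr, bl, br are the (already preprocessed) motion vectors assumed
    at the upper-left, upper-right, lower-left, and lower-right corners.
    """
    field: List[List[MV]] = []
    for y in range(0, h, unit):
        v = (y + unit / 2) / h          # vertical weight of the unit center
        row: List[MV] = []
        for x in range(0, w, unit):
            u = (x + unit / 2) / w      # horizontal weight of the unit center
            row.append(tuple(
                (1 - u) * (1 - v) * tl[k] + u * (1 - v) * tr[k]
                + (1 - u) * v * bl[k] + u * v * br[k]
                for k in (0, 1)
            ))
        field.append(row)
    return field
```

When all four corner motion vectors are equal, the interpolated field is constant and the model reduces to ordinary translational prediction, which is consistent with the requirement that at least one selected block differ from the others.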
