Single-view video depth acquisition method based on scene classification and geometric annotation

A single-view video depth acquisition method using geometric annotation, applied in the field of single-view video depth acquisition, which achieves good results with low noise and a moderate amount of computation


Examples

Embodiment 1

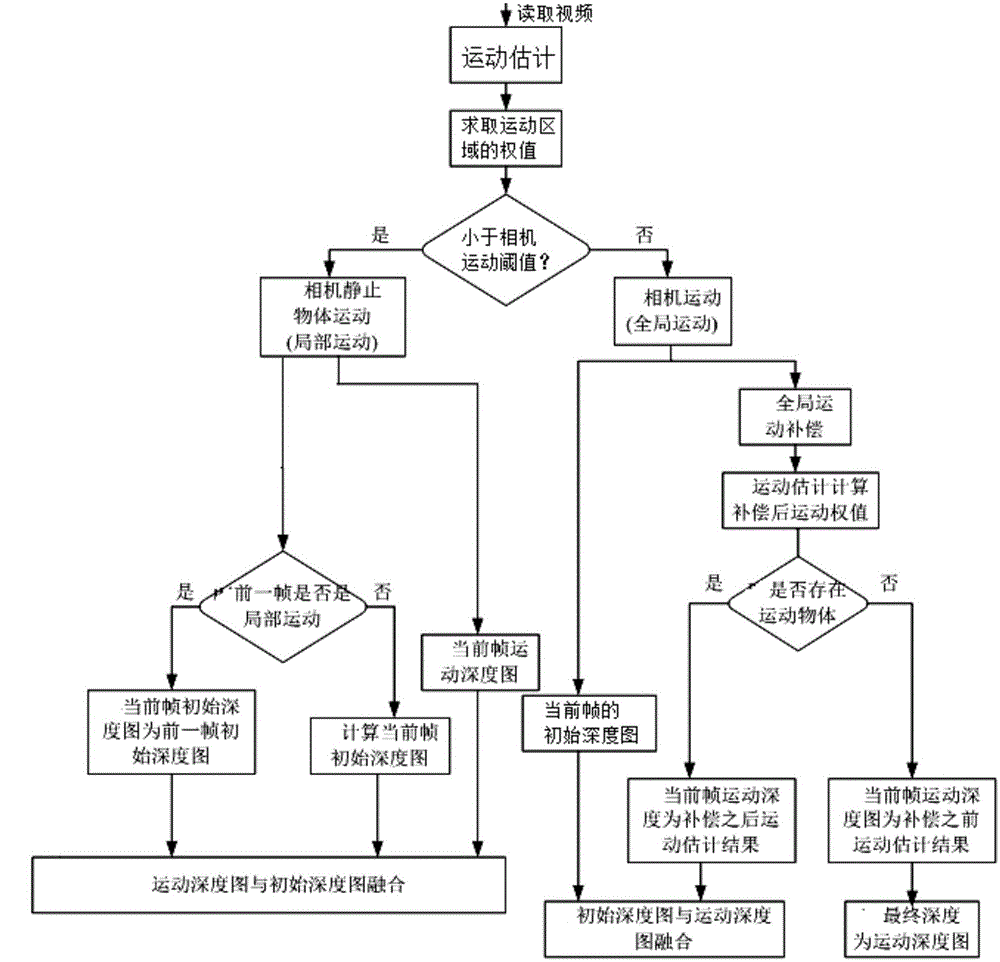

[0065] A single-view video depth acquisition method based on scene classification and geometric annotation comprises the following specific steps:

[0066] (1) Read the video sequence and use the optical flow method to perform motion estimation on adjacent frame images in the video sequence to obtain the optical flow motion vector result. According to this result, judge whether the current frame image belongs to a static-camera, moving-object scene or to a camera-motion scene, where the camera-motion scene includes a moving-camera, static-object scene and a moving-camera, moving-object scene;

[0067] (2) Determine whether the initial depth map of the current frame image needs to be estimated. If so, go to step (3); otherwise, the initial depth map of the current frame image defaults to the initial depth map of the previous frame image, and the method proceeds directly to step (4);

[0068] (3) Obtain the initial depth map of the cur...
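Step (1) does not state, in this excerpt, how the optical flow result decides the scene class. The following is a minimal Python sketch under an assumed rule: a dense flow field per adjacent frame pair is already available (it could come from any dense optical flow method, for example OpenCV's `cv2.calcOpticalFlowFarneback`), and a frame is treated as a static-camera, moving-object scene when only a minority of its pixels move. The function name, the labels, and both thresholds are hypothetical, not taken from the patent.

```python
import numpy as np

# Hypothetical labels for the two scene classes named in step (1).
STATIC_CAMERA_MOVING_OBJECT = "static_camera_moving_object"
CAMERA_MOTION = "camera_motion"


def classify_scene(flow, mag_thresh=0.5, moving_frac_thresh=0.5):
    """Classify one frame from a dense optical flow field.

    flow: array of shape (H, W, 2) with per-pixel (dx, dy) motion vectors.
    mag_thresh and moving_frac_thresh are hypothetical tuning parameters,
    not values taken from the patent.
    """
    flow = np.asarray(flow, dtype=float)
    # Per-pixel motion magnitude sqrt(dx^2 + dy^2).
    magnitude = np.hypot(flow[..., 0], flow[..., 1])
    # Fraction of pixels whose motion exceeds the magnitude threshold.
    moving_fraction = float(np.mean(magnitude > mag_thresh))
    # With a still camera the background stays put and only object pixels
    # move; when the camera moves, most of the frame shows apparent motion.
    if moving_fraction < moving_frac_thresh:
        return STATIC_CAMERA_MOVING_OBJECT
    return CAMERA_MOTION


# Usage: a mostly still frame with one moving pixel vs. a uniform pan.
still = np.zeros((4, 4, 2))
still[0, 0] = (3.0, 0.0)
pan = np.full((4, 4, 2), (2.0, 0.0))
print(classify_scene(still))  # static_camera_moving_object
print(classify_scene(pan))    # camera_motion
```

The moving-pixel fraction is used here because a still camera usually leaves the background, which covers most of the frame, without motion; a practical system would likely need tuned thresholds or a more robust estimate of background motion.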
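Step (2) can be read as a caching decision: a new initial depth map is estimated only when required, and otherwise the previous frame's initial depth map is reused. The sketch below assumes a hypothetical re-estimation rule (the first frame, a change of scene class, or every `refresh_every` frames), since this excerpt does not give the actual criterion; `estimate_fn` is a placeholder standing in for step (3).

```python
from typing import Callable, Hashable, List, Sequence, TypeVar

Frame = TypeVar("Frame")
Depth = TypeVar("Depth")


def initial_depth_maps(
    frames: Sequence[Frame],
    scene_labels: Sequence[Hashable],
    estimate_fn: Callable[[Frame], Depth],
    refresh_every: int = 10,
) -> List[Depth]:
    """Return one initial depth map per frame, re-estimating only when needed.

    The re-estimation rule (first frame, scene class change, or every
    `refresh_every` frames) is a hypothetical stand-in for the patent's
    unstated criterion.
    """
    if refresh_every < 1:
        raise ValueError("refresh_every must be at least 1")
    depths: List[Depth] = []
    prev_depth = None
    prev_label = None
    for i, (frame, label) in enumerate(zip(frames, scene_labels)):
        needs_estimate = i == 0 or label != prev_label or i % refresh_every == 0
        if needs_estimate:
            depth = estimate_fn(frame)  # step (3): estimate a fresh initial depth map
        else:
            depth = prev_depth  # default to the previous frame's initial depth map
        depths.append(depth)
        prev_depth, prev_label = depth, label
    return depths


# Usage with a stand-in estimator that records which frames it was called on.
calls = []


def fake_estimate(frame):
    calls.append(frame)
    return f"depth_of_{frame}"


maps = initial_depth_maps([0, 1, 2, 3], ["A", "A", "B", "B"], fake_estimate)
print(maps)   # ['depth_of_0', 'depth_of_0', 'depth_of_2', 'depth_of_2']
print(calls)  # [0, 2]
```

Reusing the previous map saves the cost of step (3) on frames where the scene is unlikely to have changed, which is consistent with the moderate computation the method aims for.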

Embodiment 2

[0071] A single-view video depth acquisition method based on scene classification and geometric annotation comprises the following specific steps:

[0072] (1) Read the highway video downloaded from the ChangeDetection website and use the optical flow method to estimate the motion of adjacent frame images in the video sequence to obtain the optical flow motion vector result. According to this result, judge whether the 8th frame image belongs to a static-camera, moving-object scene or to a camera-motion scene, where the camera-motion scene includes a moving-camera, static-object scene and a moving-camera, moving-object scene. The specific steps include:

[0073] a. Read the highway video (Figure 2 is a screenshot of the highway video) and obtain all of its frame images. Compute the optical flow motion vector results between adjacent frame images, then gather the optical flow motion vector results of the 7 frames preceding the 8th frame image, and th...
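Step a gathers the optical flow results of the 7 frames preceding the 8th frame, but the excerpt is cut off before saying how they are combined. One plausible reading, sketched here purely as an assumption, is to average the per-pixel flow magnitude over that window before classifying the frame. The function name and the indexing convention (flow field `k` runs from frame `k` to frame `k + 1`, 0-based) are hypothetical.

```python
import numpy as np


def gather_preceding_flow(flows, target_index, window=7):
    """Average per-pixel flow magnitude over the flow fields before a frame.

    flows[k] is assumed to be the (H, W, 2) flow from frame k to frame k + 1
    (0-based), so the `window` fields ending at frame `target_index` are
    flows[target_index - window : target_index].
    """
    start = target_index - window
    if start < 0 or target_index > len(flows):
        raise ValueError("not enough preceding flow fields")
    selected = flows[start:target_index]
    # Per-pixel magnitude sqrt(dx^2 + dy^2) of each selected field.
    magnitudes = [np.hypot(f[..., 0], f[..., 1]) for f in selected]
    # Averaging over time damps single-frame flow noise.
    return np.mean(np.stack(magnitudes), axis=0)


# Usage: the 8th frame has 0-based index 7, so its 7 preceding flow fields are
# flows[0:7]; here flow k is a uniform horizontal shift of k pixels.
flows = [np.full((2, 2, 2), (float(k), 0.0)) for k in range(8)]
print(gather_preceding_flow(flows, target_index=7))  # every entry is 3.0
```

Averaging over several frames rather than using a single flow field makes the subsequent scene decision less sensitive to a single noisy optical flow estimate.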
