Automatic automobile driving method and device

The invention relates to automatic driving and automobile technology, applied in transportation and packaging, vehicle position/route/height control, motor vehicles, and related fields. It addresses the problems that existing automatic driving system perception and driving methods cannot learn from the experience of excellent human drivers, that vehicle control subsystems may fail, and that anthropomorphic (human-like) autonomous driving cannot be achieved.


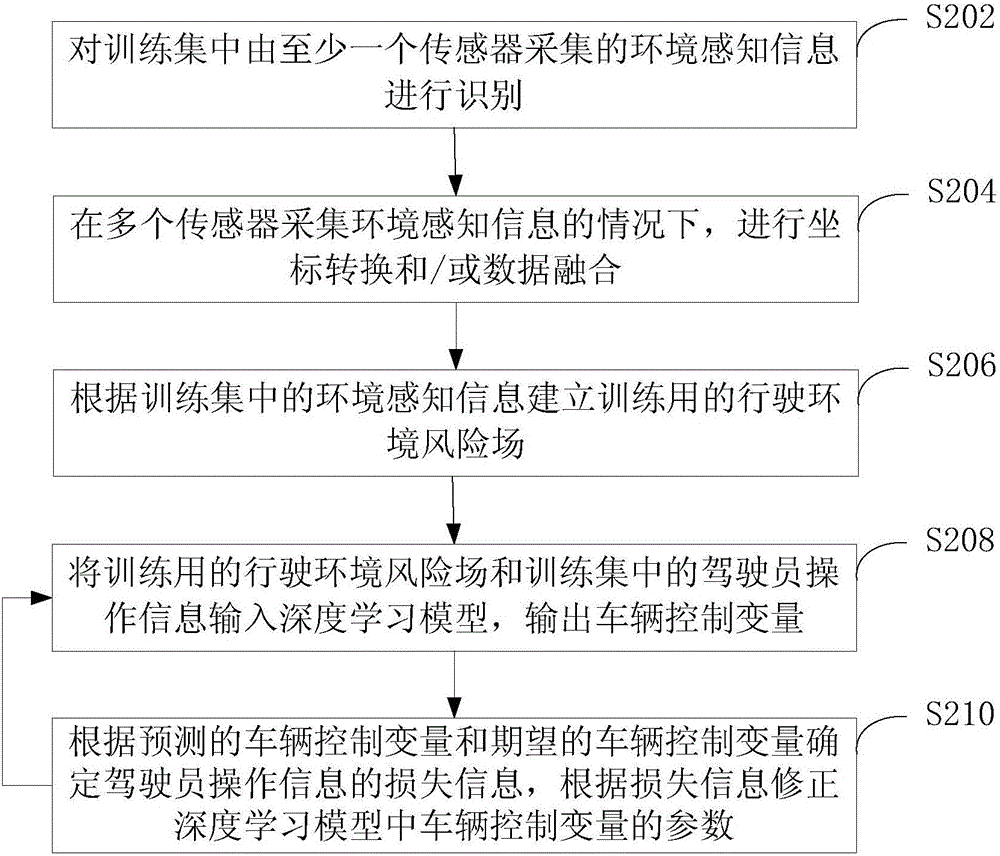

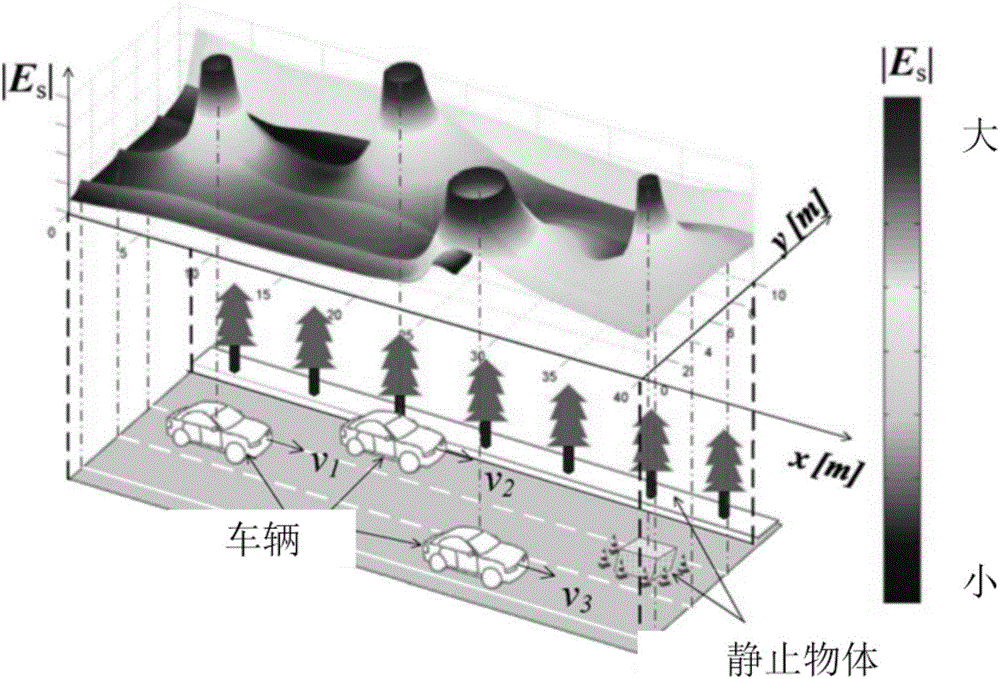

[0034] The invention proposes a method for automatic driving of automobiles. The method uses the collected environmental perception information to establish a driving environment risk field, and trains a deep learning model on the driving environment risk field together with the driver's operations. This enables automatic driving of the vehicle while reducing the training difficulty of the automatic driving model ("model" for short).
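The source does not define how the driving environment risk field is computed. As an illustration only, the sketch below assumes a common potential-field formulation: each perceived obstacle contributes a Gaussian-shaped risk that decays with distance, and the field is sampled on a regular grid around the vehicle. The `Obstacle` class, the `risk_field` function, and the `sigma`, `weight`, and `resolution` parameters are hypothetical and are not taken from the patent.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Obstacle:
    """Hypothetical perceived object: position in metres and a risk weight."""
    x: float
    y: float
    weight: float = 1.0


def risk_field(obstacles: List[Obstacle],
               x_range: Tuple[float, float],
               y_range: Tuple[float, float],
               resolution: float = 1.0,
               sigma: float = 2.0) -> List[List[float]]:
    """Return a 2-D grid (list of rows indexed by y, then x) of risk values.

    Assumed model: each obstacle adds weight * exp(-d^2 / (2 * sigma^2)),
    where d is the distance from the grid point to the obstacle.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    nx = int(round((x_max - x_min) / resolution)) + 1
    ny = int(round((y_max - y_min) / resolution)) + 1
    grid = []
    for j in range(ny):
        y = y_min + j * resolution
        row = []
        for i in range(nx):
            x = x_min + i * resolution
            value = 0.0
            for ob in obstacles:
                d2 = (x - ob.x) ** 2 + (y - ob.y) ** 2
                value += ob.weight * math.exp(-d2 / (2.0 * sigma ** 2))
            row.append(value)
        grid.append(row)
    return grid
```

For example, `risk_field([Obstacle(10.0, 0.0, 2.0)], (0.0, 20.0), (-4.0, 4.0))` returns 9 rows of 21 values, peaking at the obstacle's position. A grid like this could serve as an image-like input to a deep learning model, which is one plausible reason to summarize perception data as a risk field rather than as raw sensor output.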

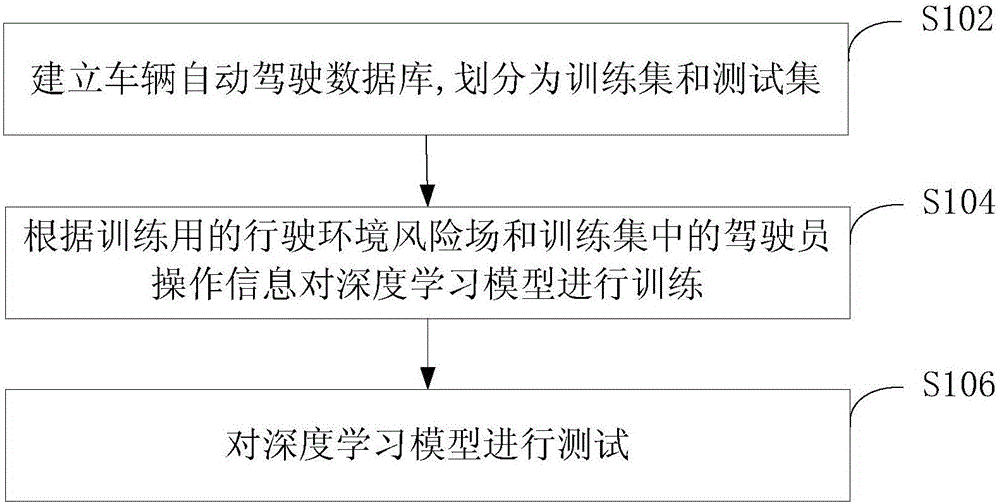

[0035] Figure 1 is a schematic flow chart of an embodiment of the automobile automatic driving method of the present invention. As shown in Figure 1, the method includes the following steps:

[0036] Step S102: establish a vehicle automatic driving database from the collected environment perception information and driver operation information, and divide the database into a training set and a test set to form samples. The training set is used to train the mod...
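Step S102 can be illustrated with a minimal sketch, assuming each database record pairs perception features with the driver's operation at the same moment. The `Sample` fields (`steering`, `throttle`, `brake`), the `split_database` helper, and the default 80/20 ratio are hypothetical choices; the source does not state the record format or the split ratio.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Sample:
    """Hypothetical database record: perception features plus driver operation."""
    perception: Sequence[float]  # e.g. flattened risk-field values
    steering: float              # driver steering command
    throttle: float              # driver accelerator command
    brake: float                 # driver brake command


def split_database(samples: List[Sample],
                   train_ratio: float = 0.8,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle the records and split them into a training set and a test set."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be between 0 and 1")
    shuffled = list(samples)               # copy so the database order is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = int(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]
```

With 10 records and the default ratio, `split_database(db)` yields 8 training samples and 2 test samples. A fixed seed keeps the split reproducible across training runs, so test results stay comparable.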
