A stereoscopic video saliency detection method based on binocular multi-dimensional perception characteristics

A technology in the field of stereoscopic video and detection methods, applied in stereoscopic systems, televisions, electrical components, etc. It addresses problems such as the high complexity of optical flow algorithms and inaccurate or unstable detection.

Embodiment Construction

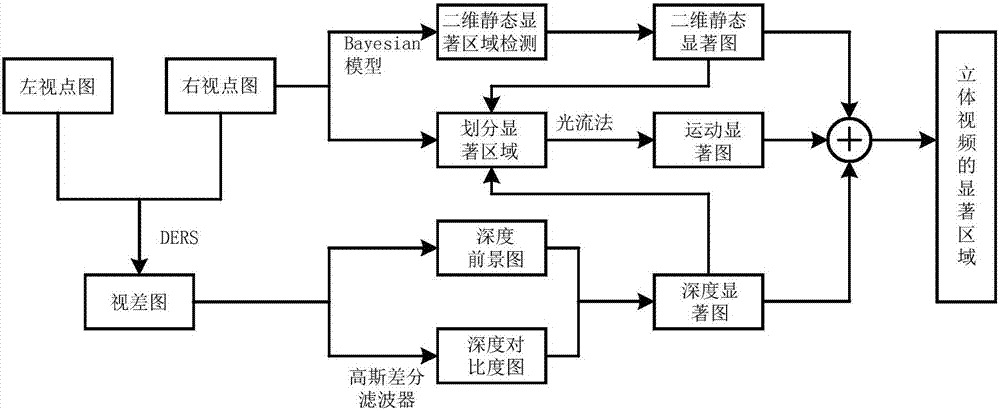

[0060] As shown in Figure 1, a stereoscopic video saliency detection method based on binocular multi-dimensional perception characteristics comprises two stages: salient feature extraction and salient feature fusion.

[0061] Salient feature extraction computes saliency from the view information of the stereoscopic video in three different dimensions: space, depth, and motion. It consists of three parts, namely two-dimensional static salient region detection, depth salient region detection, and motion salient region detection, described as follows:
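The two-stage structure of [0060] and [0061] (three per-dimension saliency maps, then fusion) can be sketched as below. This is a minimal illustration under stated assumptions, not the patented method: the three detectors are simple stand-ins (colour contrast against the frame mean for spatial saliency; a normalized depth map, assuming larger values mean nearer to the viewer; and absolute frame difference rather than optical flow for motion). The fusion rule, a normalized weighted average with hypothetical `weights`, is also an assumption, since this excerpt does not specify how the maps are fused. All function names are hypothetical.

```python
import numpy as np


def normalize(m: np.ndarray) -> np.ndarray:
    """Scale a map to [0, 1]; a constant map becomes all zeros."""
    m = m.astype(np.float64)
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)


def spatial_saliency(frame: np.ndarray) -> np.ndarray:
    # Stand-in (assumption): distance of each pixel's colour from the frame's mean colour.
    mean = frame.reshape(-1, frame.shape[-1]).mean(axis=0)
    return normalize(np.linalg.norm(frame - mean, axis=-1))


def depth_saliency(depth: np.ndarray) -> np.ndarray:
    # Stand-in (assumption): larger values (e.g. disparity) are nearer, hence more salient.
    return normalize(depth)


def motion_saliency(frame: np.ndarray, prev_frame: np.ndarray) -> np.ndarray:
    # Stand-in (assumption): absolute frame difference summed over channels,
    # used here instead of an optical flow field.
    diff = np.abs(frame.astype(np.float64) - prev_frame.astype(np.float64))
    return normalize(diff.sum(axis=-1))


def fuse(maps, weights) -> np.ndarray:
    # Hypothetical fusion rule: weighted average of the maps, renormalized to [0, 1].
    w = np.asarray(weights, dtype=np.float64)
    w = w / w.sum()
    return normalize(sum(wi * m for wi, m in zip(w, maps)))


def stereo_video_saliency(frame, prev_frame, depth, weights=(1.0, 1.0, 1.0)):
    """Stage 1: extract space, depth and motion saliency; stage 2: fuse them."""
    maps = [
        spatial_saliency(frame),
        depth_saliency(depth),
        motion_saliency(frame, prev_frame),
    ]
    return fuse(maps, weights)
```

Keeping each dimension in its own function mirrors the three-part split of the extraction stage, so any stand-in can be replaced by a more faithful detector without touching the fusion step.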

[0062] Two-dimensional static salient region detection: the saliency of the spatial features of a single color image is computed according to a Bayesian model, and the two-dimensional static salient regions of the color image are detected. Specifically:

[0063] The saliency S_z of an object is estimated by computing the probability of interest at a single point z:

[0064]

[0065] In the formula, z denotes a pixel in the image, p rep...
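The Bayesian step can be illustrated with a minimal sketch. The formula in [0064] is not reproduced in this excerpt, so the code assumes the common bottom-up Bayesian formulation in which the saliency of pixel z is the self-information of its feature, -log p(F = f_z): features that are rare in the image are more likely to be of interest. Here p is estimated from a histogram of quantized colour values over the whole image. The function name `self_information_saliency` and the `bins` parameter are hypothetical choices for illustration, not the patent's.

```python
import numpy as np


def self_information_saliency(image: np.ndarray, bins: int = 16) -> np.ndarray:
    """Bottom-up saliency of each pixel z as -log p(F = f_z) (assumed formulation)."""
    img = np.asarray(image, dtype=np.float64)
    if img.ndim == 2:  # treat a grey image as a single-channel colour image
        img = img[..., None]
    # Quantize each channel (values 0..255) into `bins` levels.
    q = np.clip((img / 256.0 * bins).astype(np.int64), 0, bins - 1)
    # Combine per-channel bin indices into one joint feature index per pixel.
    idx = np.zeros(q.shape[:2], dtype=np.int64)
    for c in range(q.shape[-1]):
        idx = idx * bins + q[..., c]
    # Empirical probability of each pixel's feature; every observed index has count > 0.
    counts = np.bincount(idx.ravel())
    p = counts[idx] / idx.size
    return -np.log(p)
```

For example, in a 10 x 10 uniform grey image containing a single bright pixel, that pixel's feature has probability 1/100 and receives the largest saliency, log 100, while every other pixel receives -log 0.99.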
