Robot locating method adopting multi-sensor data fusion

A robot positioning and data fusion technology, applied in fields such as instruments, re-radiation of electromagnetic waves, and measuring devices. It addresses the problems that landmark features must not be highly similar and that mismatches occur, with the effects of reducing mismatched results, reducing their influence, and eliminating inherent constraints.

Embodiment Construction

[0021] The technical solution of this patent will be further described in detail below in conjunction with specific embodiments.

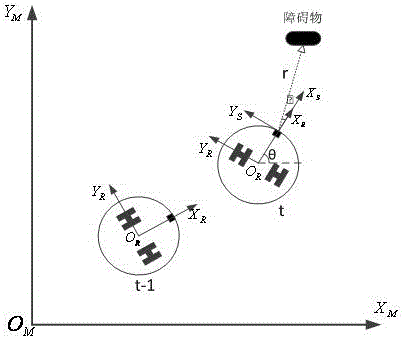

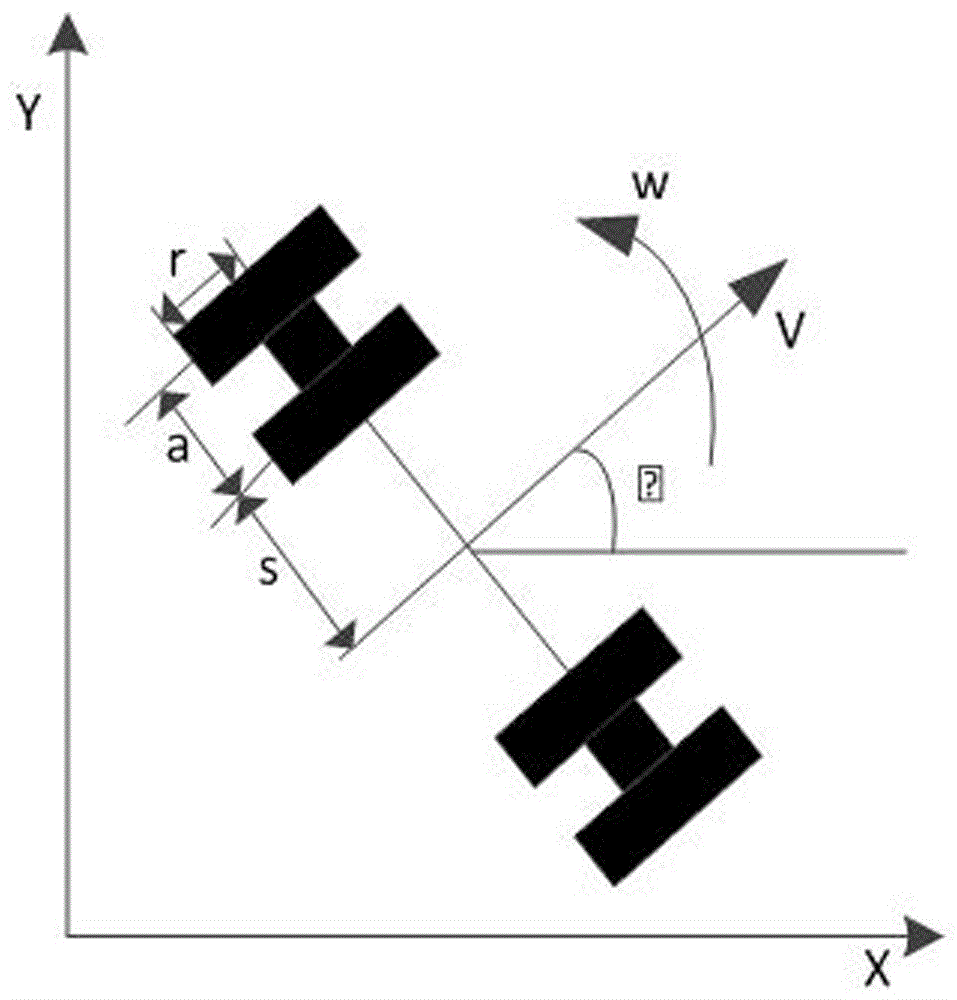

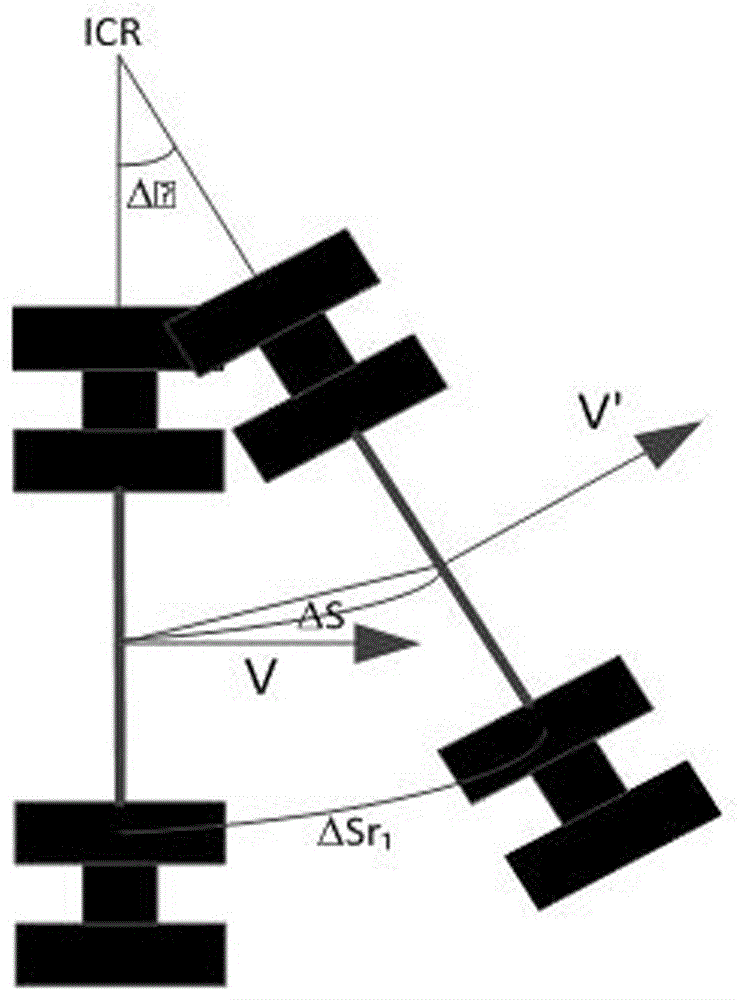

[0022] Referring to Figures 1-5, a multi-sensor data fusion robot positioning method comprises the following specific steps:

[0023] (1) According to the motion model of the mobile robot, calculate the robot position change $(\Delta x_t, \Delta y_t)$ and the yaw angle change $\theta_t$ calculated by the inertial measurement unit; then calculate the robot pose estimate at the current moment, $P_{odom}(t) = [x(t), y(t), \theta(t)]^T$, based on the change in pose and the pose data at the previous moment;
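Step (1) can be illustrated with a minimal Python sketch. It rests on assumptions the text does not state: that $(\Delta x_t, \Delta y_t)$ is expressed in the robot's body frame at time $t-1$ (so it is rotated into the world frame by the previous yaw), and that the yaw is wrapped to $[-\pi, \pi]$. The names `predict_pose` and `wrap_angle` are hypothetical and do not come from the patent.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def predict_pose(prev_pose, dx, dy, dtheta):
    """Dead-reckoning pose estimate P_odom(t) = [x(t), y(t), theta(t)].

    prev_pose : (x, y, theta) at time t-1, in the world frame.
    dx, dy    : position change from the motion model.
                ASSUMPTION: expressed in the robot body frame at t-1.
    dtheta    : yaw change reported by the inertial measurement unit.
    """
    x, y, theta = prev_pose
    c, s = math.cos(theta), math.sin(theta)
    # Rotate the body-frame displacement into the world frame, then add it.
    x_new = x + c * dx - s * dy
    y_new = y + s * dx + c * dy
    return (x_new, y_new, wrap_angle(theta + dtheta))


# Example: robot facing +y (yaw = 90 degrees) moves 1 m forward.
pose = predict_pose((0.0, 0.0, math.pi / 2), 1.0, 0.0, 0.0)
```

If the motion model already reports the displacement in the world frame, the rotation step would be dropped and the changes added directly.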

[0024] (2) Let $q_0 = (\Delta x_t, \Delta y_t, \theta_t)$. Given the lidar reference scan data $S_{t-1}$ at time $t-1$ and the scan data $S_t$ at time $t$, use $q_0$ as the initial estimate of the pose change, execute the laser point cloud scan matching algorithm, and iteratively calculate the pose transformation $q_k$; and according to this result, calculate the robot pose estimation P corresponding ...
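The available text does not say which scan matching algorithm is used. As an illustration only, the sketch below uses a basic 2D point-to-point ICP (iterative closest point) seeded with $q_0$; this is a swapped-in technique, not necessarily the one in the patent. It assumes each scan is a list of `(x, y)` points in the robot frame and that $q = (\Delta x, \Delta y, \theta)$ maps points of $S_t$ into the frame of $S_{t-1}$. The function names are hypothetical.

```python
import math


def transform(points, q):
    """Apply q = (dx, dy, dtheta) to a list of 2D points."""
    dx, dy, dth = q
    c, s = math.cos(dth), math.sin(dth)
    return [(c * x - s * y + dx, s * x + c * y + dy) for x, y in points]


def fit_rigid_2d(src, dst):
    """Closed-form least-squares rigid transform mapping src[i] onto dst[i]."""
    n = len(src)
    msx = sum(p[0] for p in src) / n
    msy = sum(p[1] for p in src) / n
    mdx = sum(p[0] for p in dst) / n
    mdy = sum(p[1] for p in dst) / n
    num = den = 0.0
    for (x, y), (u, v) in zip(src, dst):
        ax, ay = x - msx, y - msy      # centred source point
        bx, by = u - mdx, v - mdy      # centred target point
        num += ax * by - ay * bx       # cross term -> sin component
        den += ax * bx + ay * by       # dot term   -> cos component
    th = math.atan2(num, den)
    c, s = math.cos(th), math.sin(th)
    # Translation aligns the rotated source centroid with the target centroid.
    return (mdx - (c * msx - s * msy), mdy - (s * msx + c * msy), th)


def icp_2d(scan_ref, scan_cur, q0, max_iter=30, tol=1e-9):
    """Iteratively refine the pose change q_k, starting from q0.

    scan_ref : points of S_{t-1};  scan_cur : points of S_t.
    Uses brute-force nearest neighbours, which is fine for a small sketch.
    """
    q = tuple(q0)
    for _ in range(max_iter):
        moved = transform(scan_cur, q)
        # Match every transformed current point to its nearest reference point.
        matches = [
            min(scan_ref, key=lambda r: (r[0] - m[0]) ** 2 + (r[1] - m[1]) ** 2)
            for m in moved
        ]
        q_new = fit_rigid_2d(scan_cur, matches)
        converged = max(abs(a - b) for a, b in zip(q, q_new)) < tol
        q = q_new
        if converged:
            break
    return q
```

Seeding the iteration with the odometry and IMU estimate $q_0$ matters because nearest-neighbour matching only finds correct correspondences when the initial alignment is already close. A practical version would also reject distant matches and use a spatial index instead of the brute-force search.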
