Method and system for processing high-concurrency data requests

The method concerns data request and database technology, with applications in transmission systems, electrical digital data processing, resource allocation, and similar fields. It addresses problems such as slow response times, reduced speed, and short-term system paralysis, and achieves the effects of reducing operation locks and improving processing speed.

Examples

Embodiment 1

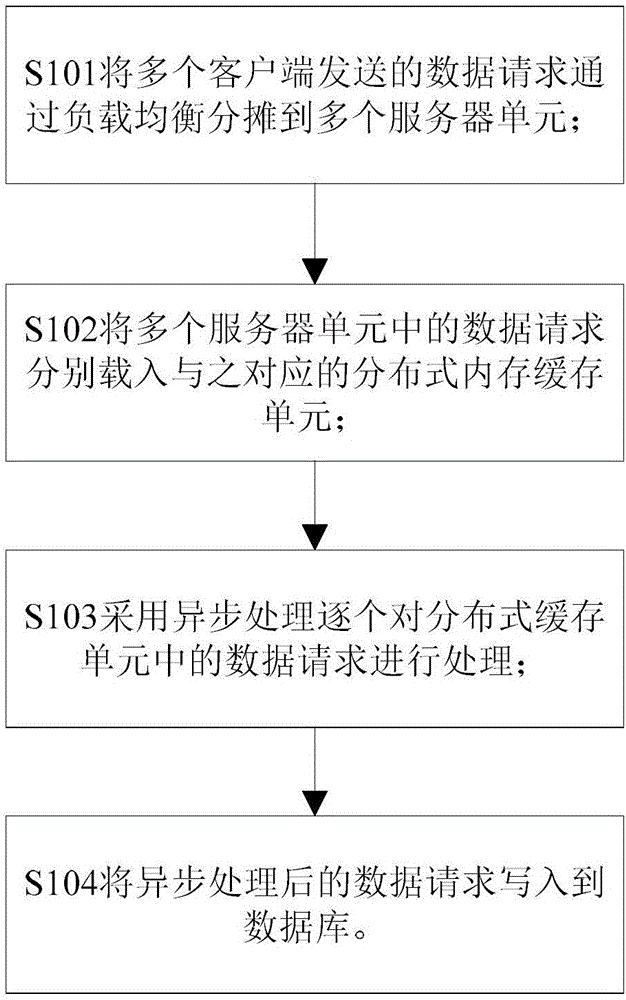

[0037] As shown in Figure 1, the data processing method provided by this embodiment includes: S101, load balancing the data requests sent by multiple clients across multiple server units; S102, loading the data requests from the server units into the corresponding distributed memory cache units; S103, processing the data requests in the distributed cache units one by one using asynchronous processing; S104, writing the asynchronously processed data requests into the database.

[0038] The data requests sent by multiple clients are distributed to multiple server units by load balancing; in this embodiment, load balancing can be achieved by using NGINX as a reverse proxy for PHP. The data requests in the server units are each loaded into the corresponding distributed memory cache unit. This embodiment uses a Redis cache and adds the requests to a Redis Cluster queue. The function of the queue is to allo...
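Steps S102 to S104 can be sketched as a queue that is drained by a background worker. This is only an illustration under stated assumptions, not the embodiment's implementation: Python's `queue.Queue` stands in for a Redis queue, an in-memory SQLite database stands in for the target database, and the `orders` table, the `worker` and `run_pipeline` functions, and the `STOP` sentinel are hypothetical names.

```python
import queue
import sqlite3
import threading

STOP = None  # hypothetical sentinel that tells the worker to exit


def worker(pending: queue.Queue, conn: sqlite3.Connection) -> None:
    """S103/S104: take requests one at a time and write each to the database."""
    while True:
        request = pending.get()
        if request is STOP:
            break
        conn.execute(
            "INSERT INTO orders (client_id, payload) VALUES (?, ?)",
            (request["client_id"], request["payload"]),
        )
        conn.commit()


def run_pipeline(requests: list) -> list:
    """Enqueue every request (S102), let the worker drain the queue, return the rows."""
    # check_same_thread=False lets the worker thread use this connection;
    # the main thread only touches it before start() and after join().
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.execute("CREATE TABLE orders (client_id INTEGER, payload TEXT)")

    pending = queue.Queue()  # stand-in for one memory cache unit's queue
    thread = threading.Thread(target=worker, args=(pending, conn))
    thread.start()

    for request in requests:
        pending.put(request)  # the server unit enqueues and returns at once
    pending.put(STOP)
    thread.join()

    rows = conn.execute(
        "SELECT client_id, payload FROM orders ORDER BY rowid"
    ).fetchall()
    conn.close()
    return rows
```

Because a single worker writes the requests sequentially, the database sees one writer per queue instead of many concurrent clients, which is one plausible reading of how the method reduces operation locks.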

Embodiment 2

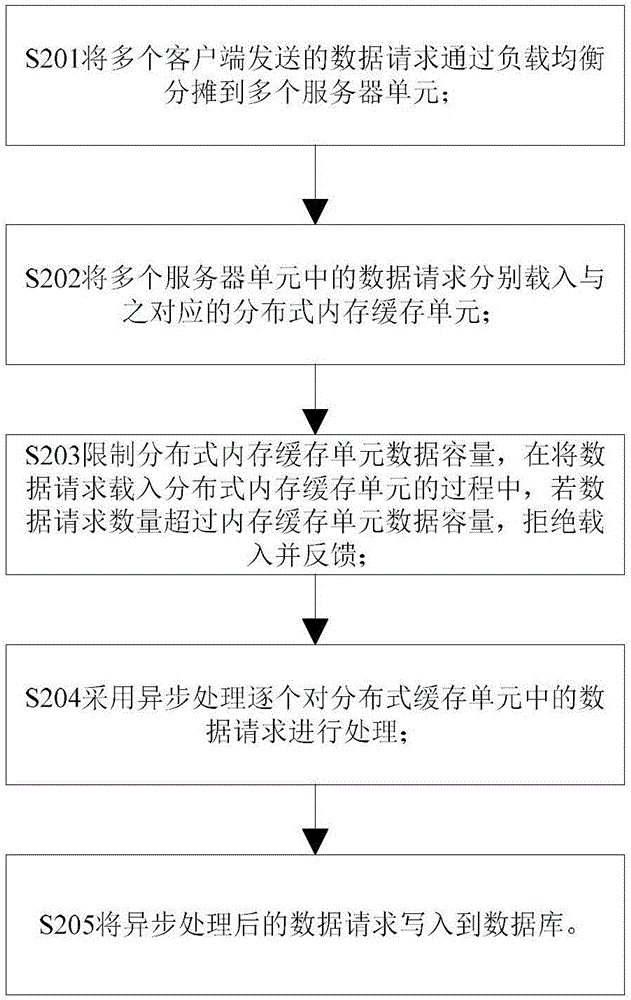

[0042] As shown in Figure 2, the data processing method provided by this embodiment includes: S201, load balancing the data requests sent by multiple clients across multiple server units; S202, loading the data requests from the server units into the corresponding distributed memory cache units; S203, limiting the data capacity of each distributed memory cache unit, so that while data requests are being loaded into a distributed memory cache unit, loading is refused and feedback is given when the number of data requests exceeds the data capacity of that memory cache unit; S204, processing the data requests in the distributed cache units one by one using asynchronous processing; S205, writing the asynchronously processed data requests into the database.

[0043] The data requests sent by multiple clients are distributed to multiple server units through load balancing; the data requests are load balanced through a reverse proxy, and in this embodiment, load balancing can be achieved by N...
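The capacity limit in step S203 can be illustrated with a bounded queue that refuses new requests once it is full. This is a minimal sketch under assumptions: the `BoundedCacheUnit` class, the `handle_request` function, and the feedback strings are hypothetical, and an in-process `deque` stands in for a distributed memory cache unit.

```python
from collections import deque


class BoundedCacheUnit:
    """Stand-in for one memory cache unit with a fixed data capacity."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = deque()

    def try_load(self, request) -> bool:
        """Load the request unless the unit is already at capacity."""
        if len(self._items) >= self.capacity:
            return False  # refuse to load
        self._items.append(request)
        return True

    def take(self):
        """Remove and return the oldest request, or None if the unit is empty."""
        return self._items.popleft() if self._items else None


def handle_request(unit: BoundedCacheUnit, request) -> str:
    """Return the feedback sent to the client (hypothetical message text)."""
    if unit.try_load(request):
        return "accepted"
    return "rejected: queue full"
```

With a capacity of 2, the third request in a burst is rejected immediately, and a slot frees up again as soon as the asynchronous worker takes one request off the queue.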

Embodiment 3

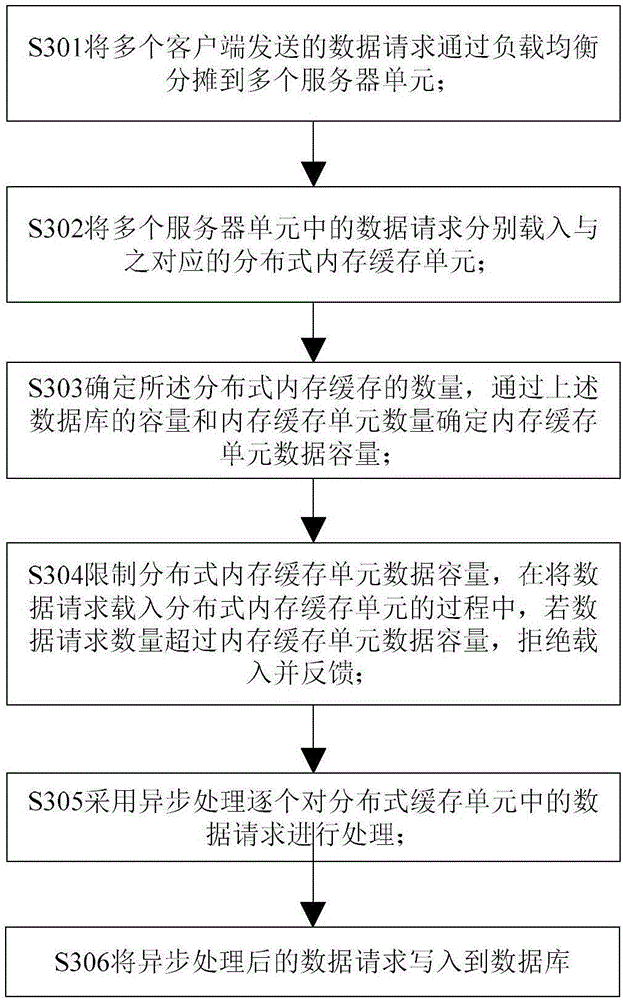

[0047] As shown in Figure 3, the data processing method provided by this embodiment includes: S301, load balancing the data requests sent by multiple clients across multiple server units; S302, loading the data requests from the server units into the corresponding distributed memory cache units; S303, determining the number of distributed memory cache units, and determining the data capacity of each memory cache unit from the capacity of the database and the number of memory cache units; S304, limiting the data capacity of each distributed memory cache unit, so that while data requests are being loaded into a distributed memory cache unit, loading is refused and feedback is given when the number of data requests exceeds the data capacity of that memory cache unit; S305, processing the data requests in the distributed cache units one by one using asynchronous processing; S306, writing the asynchronously processed data requests into the database.

[0048] The d...
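Step S303 derives the capacity of each memory cache unit from the database capacity and the number of cache units, but the visible text does not give the formula. The sketch below assumes an even split with integer division; that rule, the `per_unit_capacity` function, and its parameter names are all assumptions made for illustration.

```python
def per_unit_capacity(database_capacity: int, unit_count: int) -> int:
    """Split the database capacity evenly across the cache units.

    The even split is an assumed rule; the source only states that the
    per-unit capacity depends on these two quantities.
    """
    if unit_count <= 0:
        raise ValueError("unit_count must be positive")
    if database_capacity < 0:
        raise ValueError("database_capacity must not be negative")
    # Round down so the units together never admit more than the database can take.
    return database_capacity // unit_count
```

For example, under this assumption a database that can absorb 1000 pending requests, fronted by 4 cache units, gives each unit a limit of 250 requests.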
