Apparatus and method for automatically triggering user help information based on eye movement data

A technology for automatically triggering help information. It applies to input/output processes in data processing, program control devices, and electrical digital data processing. It addresses three problems: disruption of the user's operating experience, difficulty of automated integration, and low display efficiency. The stated aim of the apparatus and method is to improve user experience, efficiency, and effectiveness.

Examples

Embodiment 1

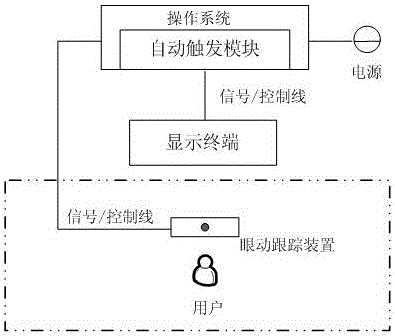

[0039] Taking the information display of office software installed on a desktop computer terminal as an example, the method for automatically triggering user help information based on eye movement data is described. The steps are as follows:

[0040] Step 1. Start the eye tracking device, the information display terminal of the operation object, the operation object system, and the related supporting equipment, and connect the parts reliably with cables. The user then begins the operation task on the operation object system.

[0041] Step 2. The eye-tracking device collects eye-movement trajectories and stores the generated eye-movement data:

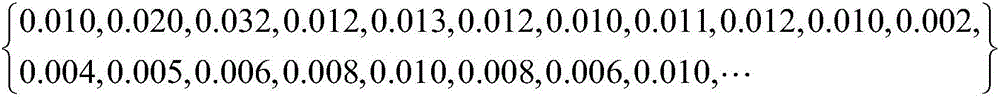

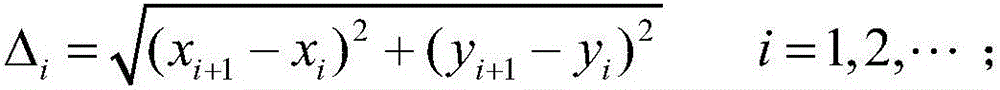

[0042] Step 2.1, the eye movement tracking device starts to collect the user's eye movement trajectory, generates and stores the eye movement data, and the generated eye movement data is a data sequence $\{(x_1, y_1), (x_2, y_2), \ldots, (x_i, y_i), \ldots\}$, where x is the abscissa of each point in the user's eye track, y is the ordinate of ea...
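The data sequence in Step 2.1 can be pictured as an ordered, append-only list of coordinate pairs. The following is a minimal Python sketch of that idea only. The class name `GazeRecorder`, its methods, and the sample coordinates are hypothetical and do not come from the patent text, which does not specify a storage format, a sampling rate, or coordinate units in the excerpt above.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class GazeRecorder:
    """Hypothetical in-memory store for the eye movement data sequence."""

    samples: List[Tuple[float, float]] = field(default_factory=list)

    def record(self, x: float, y: float) -> None:
        # Append one gaze point (x_i, y_i) in the order it was collected.
        self.samples.append((x, y))

    def sequence(self) -> List[Tuple[float, float]]:
        # Return a copy so callers cannot mutate the stored data.
        return list(self.samples)


# Made-up gaze points standing in for real eye tracker output.
recorder = GazeRecorder()
for x, y in [(120.0, 340.0), (125.5, 338.0), (410.0, 90.0)]:
    recorder.record(x, y)
```

Keeping the points in collection order preserves the trajectory, which is what later steps would presumably analyze; a real device would supply the coordinates through its own interface.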
