Visual identification and positioning method based on RGB-D camera

A visual recognition and positioning method, applied in image analysis, image data processing, instruments, etc. It addresses problems such as convex hull contour errors, background parts being included in the segmented object, and slow running speed, and it achieves low cost, high computational efficiency, and a small amount of calculation.

Embodiment

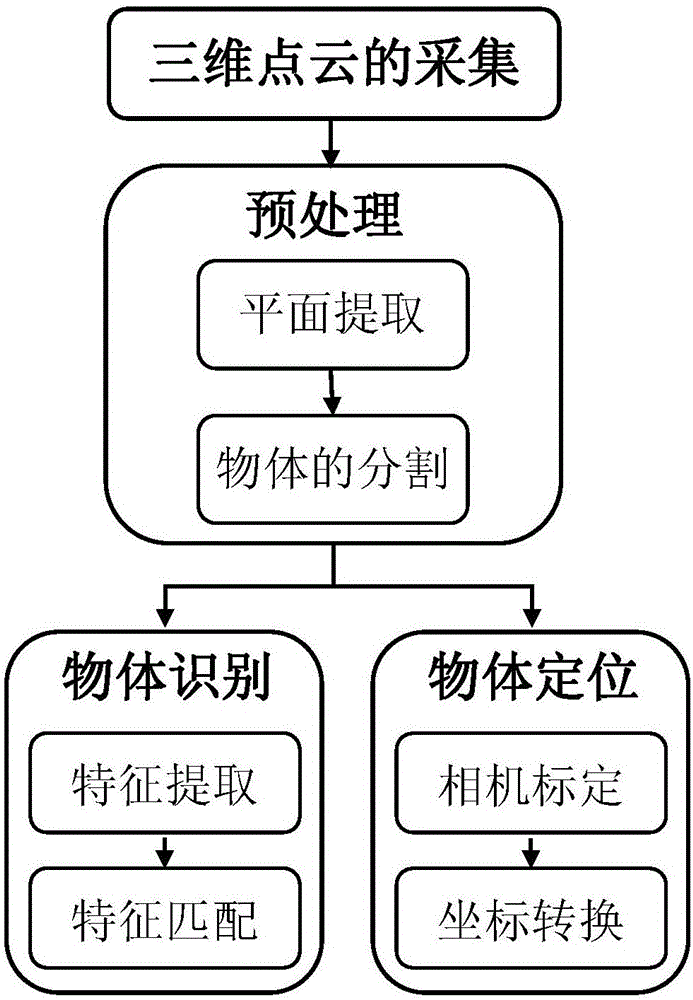

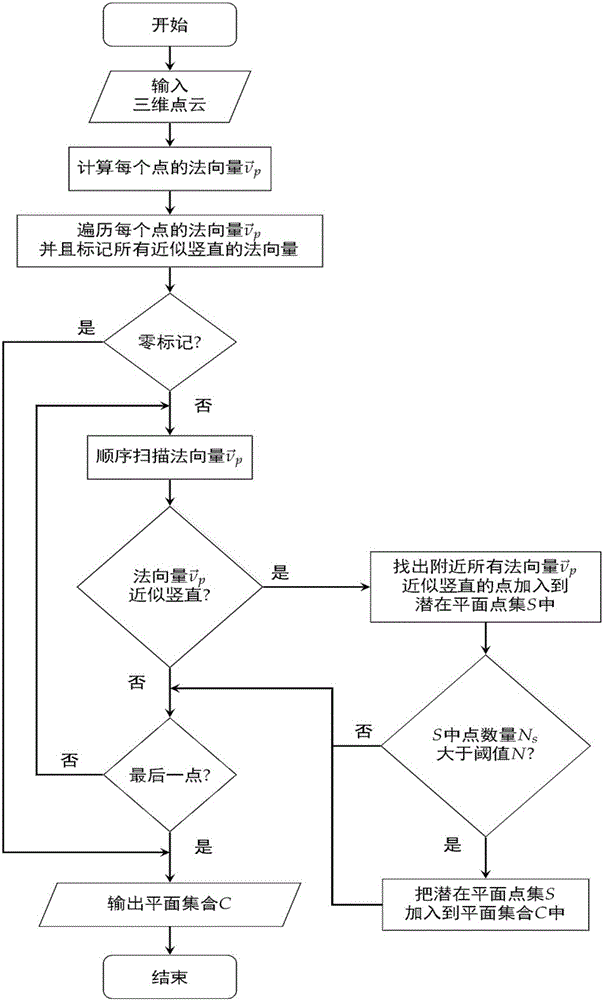

[0048] This embodiment provides a visual recognition and positioning method based on an RGB-D camera. As shown in Figure 1, the method consists mainly of three-dimensional point cloud collection, plane extraction, object segmentation, object feature extraction and matching, and object positioning. The method specifically includes the following steps:

[0049] Step 1, collect the color image and depth image of the object with a Microsoft Kinect camera sensor and convert them into a three-dimensional point cloud image;

[0050] In this step, Microsoft's Kinect sensor collects RGB-D images. The 3D point cloud image can then be obtained through the built-in API functions or through third-party libraries such as Open Natural Interaction (OpenNI) and the Point Cloud Library (PCL).
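The conversion in Step 1 can be illustrated with a minimal, dependency-free sketch of pinhole back-projection. This is not the Kinect API, OpenNI, or PCL: the function name `depth_to_point_cloud`, the intrinsic parameters `fx`, `fy`, `cx`, `cy`, the depth unit (meters), and the convention that a depth of 0 marks a missing measurement are all assumptions made for illustration.

```python
import math


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into an organized 3D point cloud.

    depth: list of rows, each a list of depths in meters (assumed unit).
    fx, fy, cx, cy: pinhole intrinsics in pixels (hypothetical values,
    normally taken from the camera calibration).
    Returns a list of rows; each entry is an (x, y, z) tuple, or None
    where the sensor reported no valid depth.
    """
    cloud = []
    for v, row in enumerate(depth):
        cloud_row = []
        for u, z in enumerate(row):
            # Assumption: zero, negative, or NaN depth means "no measurement".
            if z is None or math.isnan(z) or z <= 0:
                cloud_row.append(None)
                continue
            x = (u - cx) * z / fx  # horizontal offset from the optical axis
            y = (v - cy) * z / fy  # vertical offset from the optical axis
            cloud_row.append((x, y, z))
        cloud.append(cloud_row)
    return cloud


# Usage with a tiny 2x3 depth image and made-up intrinsics.
depth = [[0.0, 2.0, 2.0],
         [2.0, 2.0, 2.0]]
cloud = depth_to_point_cloud(depth, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
```

Keeping the cloud organized (one entry per pixel) preserves the pixel neighborhood, which later steps that work on neighboring points can reuse without a nearest-neighbor search.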

[0051] Step 2, calculate the normal vector for each point of the 3D point cloud image obtained in Step 1;

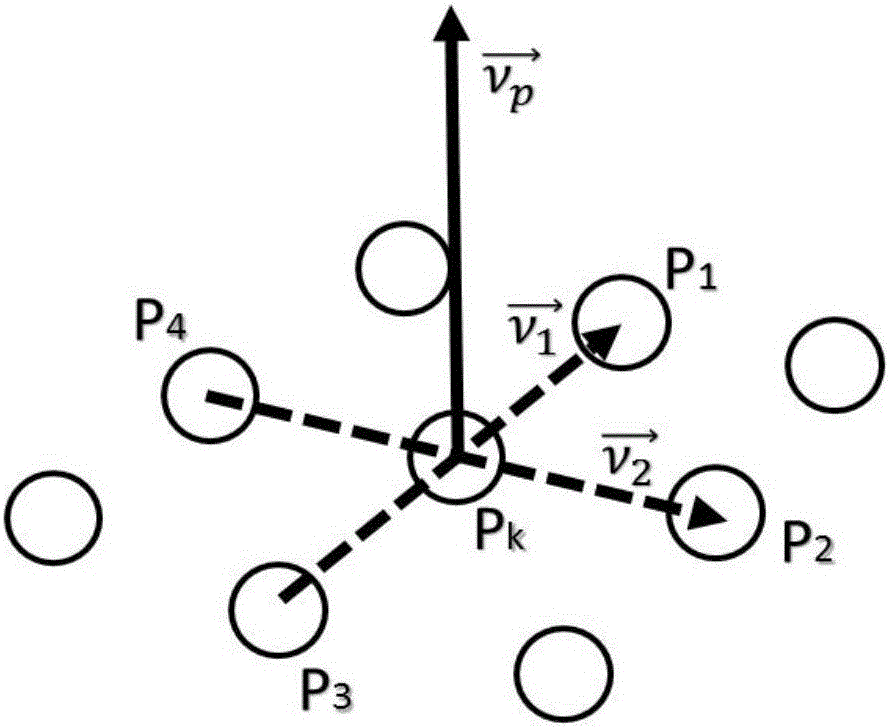

[0052] As shown in Figure 2, is the cross product...
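Because the paragraph above is truncated, the exact vectors used in the cross product are not recoverable from this text. The sketch below shows one common way such a per-point normal could be computed on an organized point cloud: the cross product of the vectors from a point to its right and lower neighbors. The helper names (`cross`, `sub`, `point_normal`), the choice of neighbors, the cloud layout (rows of `(x, y, z)` tuples or `None`), and the orientation toward a camera at the origin are all assumptions, not the patent's stated procedure.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sub(a, b):
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def point_normal(cloud, v, u):
    """Estimate the unit normal at cloud[v][u].

    Assumption: the two tangent vectors run to the right neighbor and the
    lower neighbor. Returns None at the image border, where a neighbor has
    no valid depth, or where the tangent vectors are parallel.
    """
    if v + 1 >= len(cloud) or u + 1 >= len(cloud[0]):
        return None
    p, right, down = cloud[v][u], cloud[v][u + 1], cloud[v + 1][u]
    if p is None or right is None or down is None:
        return None
    n = cross(sub(right, p), sub(down, p))
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        return None
    # Orient the normal toward the camera, assumed to sit at the origin.
    if sum(nc * pc for nc, pc in zip(n, p)) > 0:
        n = (-n[0], -n[1], -n[2])
    return tuple(c / length for c in n)


# Usage: four points on the plane z = 2, facing the camera.
plane = [[(0.0, 0.0, 2.0), (1.0, 0.0, 2.0)],
         [(0.0, 1.0, 2.0), (1.0, 1.0, 2.0)]]
normal = point_normal(plane, 0, 0)
```

Using only two neighbors is fast but sensitive to depth noise; averaging over a larger neighborhood is a common alternative when the data is noisy.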
