Computer neural network modified according to pruning method

A neural network and computer technology, applied in the field of neural networks, that reduces storage space and computing resource requirements and achieves strong sparsity.

Examples

Embodiment 1

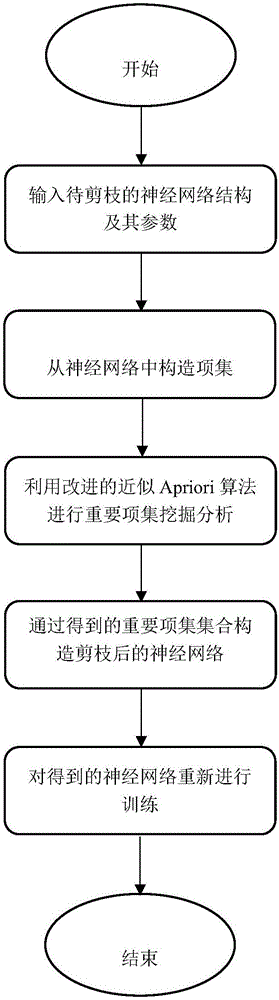

[0069] In this embodiment, the process of pruning the neural network by mining important itemsets is as follows:

[0070] 1. Input the neural network to be pruned. The network has two weight layers: the input layer has four nodes, the second layer has five nodes, and the output layer has one node.

[0071] 2. Prune the first layer, which connects the four input nodes to the five second-layer nodes (a 4 × 5 weight matrix). The input weight matrix of this first layer is shown in Table 1:

[0072] Table 1

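[0073]

| Input node / second-layer node | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1 | 0.82 | -0.13 | 0.01 | 0.04 | -0.23 |
| 2 | 0.31 | -0.81 | 0.24 | 0.13 | 0.12 |
| 3 | 0.23 | 0.24 | 0.43 | -0.12 | -0.12 |
| 4 | 0.13 | 0.41 | 0.51 | 0.15 | -0.43 |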

[0074] 3. According to step 1, construct the itemsets of the neural network: first take the absolute value of each weight, then, for each layer, select the connections whose absolute weight is greater than the threshold 0.2 (marked 1; all others are marked 0), as shown in Table 2:

[0075] Table 2

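[0076]

| Input node / second-layer node | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1 | 1 | 0 | 0 | 0 | 1 |
| 2 | 1 | 1 | 1 | 0 | 0 |
| 3 | 1 | 1 | 1 | 0 | 0 |
| 4 | 0 | 1 | 1 | 0 | 1 |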
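The thresholding in step 3 can be checked with a short Python sketch. It assumes Table 1 is read row by row as a 4 × 5 matrix (the orientation is inferred from the "four by five" description, not stated explicitly); the function name `binarize` is illustrative only.

```python
# Table 1 read row by row as a 4 x 5 matrix (row/column orientation assumed)
W = [
    [0.82, -0.13, 0.01, 0.04, -0.23],
    [0.31, -0.81, 0.24, 0.13, 0.12],
    [0.23, 0.24, 0.43, -0.12, -0.12],
    [0.13, 0.41, 0.51, 0.15, -0.43],
]
THRESHOLD = 0.2  # threshold given in step 3


def binarize(weights, threshold):
    """Return 1 where |w| > threshold and 0 otherwise (the rule behind Table 2)."""
    return [[1 if abs(w) > threshold else 0 for w in row] for row in weights]


mask = binarize(W, THRESHOLD)
for row in mask:
    print(*row)
```

Flattened row by row, the resulting mask reproduces the sequence of 0s and 1s in Table 2.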

[0077] 4. Accor...
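Step 4 is truncated in this excerpt, so the exact mining procedure is not shown. As a hedged illustration of what mining itemsets from Table 2 can look like in general, the sketch below treats each row as a transaction (the set of columns marked 1) and counts the support of every itemset by brute force. The row-as-transaction reading, the minimum support of 2, and all names (`to_transactions`, `frequent_itemsets`, `MIN_SUPPORT`) are assumptions; this is generic frequent-itemset counting, not the patent's step 4.

```python
from collections import Counter
from itertools import combinations

# Table 2 as 0/1 rows (4 x 5 reading assumed)
MASK = [
    [1, 0, 0, 0, 1],
    [1, 1, 1, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 0, 1],
]
MIN_SUPPORT = 2  # hypothetical minimum support, not given in the text


def to_transactions(mask):
    """Each row becomes the set of 1-based column indices marked 1."""
    return [frozenset(j + 1 for j, bit in enumerate(row) if bit) for row in mask]


def frequent_itemsets(transactions, min_support):
    """Count every non-empty sub-itemset of each transaction; keep the frequent ones."""
    counts = Counter()
    for t in transactions:
        items = sorted(t)
        for k in range(1, len(items) + 1):
            for combo in combinations(items, k):
                counts[frozenset(combo)] += 1
    return {s: c for s, c in counts.items() if c >= min_support}


transactions = to_transactions(MASK)
frequent = frequent_itemsets(transactions, MIN_SUPPORT)
for itemset, support in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), support)
```

Brute-force enumeration is only practical for tiny examples like this one; a real layer with hundreds of nodes would need an algorithm such as Apriori or FP-Growth.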

Embodiment 2

[0087] In this embodiment, important-itemset mining is used to prune an autoencoder network, and the process is as follows:

[0088] 1. The user inputs a given autoencoder network with structure 784→128→64→128→784, together with the training sample set MNIST. The autoencoder compresses the input samples into the hidden layer and reconstructs them at the output; that is, the output layer and the input layer of the autoencoder satisfy the following relationship:

[0089]

[0090] The autoencoder can therefore be regarded as compressing the data (from the original n dimensions to m dimensions, where m is the number of neurons in the hidden layer) and then recovering it, with as little loss as possible, when needed.
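To make the scale concrete, the sketch below counts the fully connected weights of the 784→128→64→128→784 autoencoder (the connections that pruning can remove) and the compression from n = 784 to m dimensions. It assumes biases are not counted and takes m as the 64-unit bottleneck layer; `weight_counts` is a hypothetical helper.

```python
LAYERS = [784, 128, 64, 128, 784]  # autoencoder structure from step 1


def weight_counts(layers):
    """Weights in each fully connected layer (biases excluded)."""
    return [a * b for a, b in zip(layers, layers[1:])]


counts = weight_counts(LAYERS)
print(counts, sum(counts))  # [100352, 8192, 8192, 100352] 217088

n, m = LAYERS[0], min(LAYERS)  # input width and bottleneck width (m assumed = 64)
print(f"{n} -> {m} dimensions, {m / n:.1%} of the input size")
```

The two outer layers hold over 90% of the weights, so they are where pruning has the most room to save storage.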

[0091] 2. Prune the autoencoder network; the pruning results are shown in Table 5:

[0092] Table 5

[0093]

[0094] The third row ...

Embodiment 3

[0097] In this embodiment, important-itemset mining is used to prune a handwritten-digit recognition network, and the process is as follows:

[0098] 1. The user inputs a given neural network and data set: the network is a fully connected neural network with structure 784→300→100→10, and the data set is the handwritten-digit data set MNIST. The task is to recognize the digit in each image.

[0099] 2. Prune the neural network; the pruning results are shown in Table 6:

[0100] Table 6

[0101]

[0102] The third row is the result of this method. The first three columns give the compression ratio of each layer of the neural network, the fourth column gives the overall compression ratio, and Accuracy is the recognition accuracy: the fifth column is the accuracy before pruning and the sixth column is the accuracy after pruning. It can be seen that this method reduces the neural network to 7.76% of its original size, and the accuracy of handwritt...
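The overall compression ratio is a weight-count-weighted average of the per-layer ratios rather than their simple mean, so the large 784→300 layer dominates it. The sketch below assumes "compression ratio" means the fraction of weights retained (biases excluded); `weight_counts` and `overall_ratio` are illustrative names, and the per-layer ratios in the last line are hypothetical, not the values from Table 6.

```python
LAYERS = [784, 300, 100, 10]  # network structure from step 1


def weight_counts(layers):
    """Weights in each fully connected layer (biases excluded)."""
    return [a * b for a, b in zip(layers, layers[1:])]


def overall_ratio(layers, layer_ratios):
    """Fraction of all weights retained, given each layer's retained fraction."""
    counts = weight_counts(layers)
    kept = sum(c * r for c, r in zip(counts, layer_ratios))
    return kept / sum(counts)


counts = weight_counts(LAYERS)
total = sum(counts)
print(counts, total)          # [235200, 30000, 1000] 266200
print(round(0.0776 * total))  # roughly the weights left at the reported 7.76%
print(overall_ratio(LAYERS, [0.05, 0.5, 1.0]))  # hypothetical per-layer ratios
```

Under these assumptions, 7.76% of the 266,200 weights is about 20,657 retained connections.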

© 2025 PatSnap. All rights reserved.