New viewpoint synthesizing method based on depth images

A depth-image-based viewpoint synthesis method, applied in the field of depth-map-based rendering, that addresses degraded image quality, poor experimental results, and viewing results that fail to satisfy the human eye.

Embodiment Construction

[0053] The present invention will be further described below.

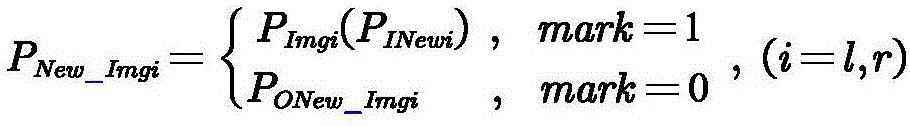

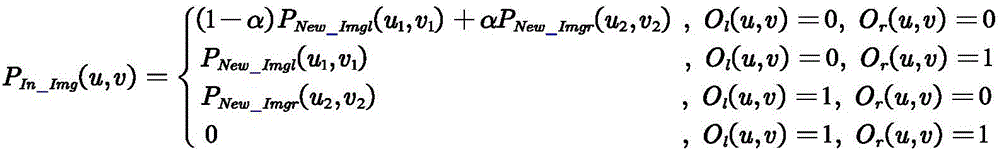

[0054] A new viewpoint synthesis method based on depth images comprises the following steps:

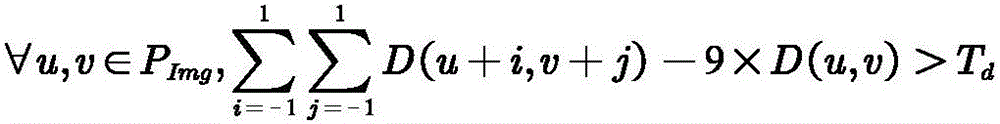

[0055] (1) Perform a three-dimensional transformation on the texture maps and depth maps of the left and right reference viewpoints. The transformation process is as follows:

[0056] 1.1) Project each pixel in the image storage coordinate system, combined with the corresponding depth information, into the world coordinate system:

[0057]

[0058] where $P_{Wi} = \{P_{Wi} = (X_{Wi}, Y_{Wi}, Z_{Wi})^T \mid i = l, r\}$ represents the three-dimensional coordinates, in the world coordinate system, of the pixel at the current position of the reference viewpoint image; $l$ and $r$ denote left and right respectively; $X_{Wi}$ and $Y_{Wi}$ are the horizontal and vertical coordinates in the world coordinate system; $Z_{Wi}$ is the depth; $d_i$ is the gray value of the depth map at the current position; $MinZ_i$ and $MaxZ$ ...
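The equation for step 1.1 is not visible in this excerpt, so the following is only a minimal sketch of how such a back-projection is commonly written, not the patent's own formula. It assumes an 8-bit depth map (gray values 0 to 255) quantized in inverse depth between a near plane and a far plane, and a pinhole camera model of the form z · (u, v, 1)^T = K (R · P_W + t). The names `depth_from_gray`, `pixel_to_world`, `K`, `R`, and `t` are hypothetical and chosen for illustration.

```python
import numpy as np

def depth_from_gray(d, min_z, max_z):
    """Convert a depth-map gray value to metric depth.

    Assumption: 8-bit inverse-depth quantization, so d = 255 maps to the
    nearest plane (min_z) and d = 0 maps to the farthest plane (max_z).
    """
    d = np.asarray(d, dtype=np.float64)
    return 1.0 / ((d / 255.0) * (1.0 / min_z - 1.0 / max_z) + 1.0 / max_z)

def pixel_to_world(u, v, z, K, R, t):
    """Back-project image pixel (u, v) with depth z into world coordinates.

    Assumption: the camera model is z * [u, v, 1]^T = K @ (R @ P_w + t),
    where K is the 3x3 intrinsic matrix, R the 3x3 rotation, and t the
    translation vector of the reference camera.
    """
    # Point in camera coordinates: z * K^{-1} [u, v, 1]^T
    p_cam = z * np.linalg.solve(K, np.array([u, v, 1.0]))
    # Invert the rigid transform; R is orthonormal, so R^{-1} = R^T
    return R.T @ (p_cam - t)
```

A call such as `pixel_to_world(u, v, depth_from_gray(d, min_z, max_z), K, R, t)` would be applied once per pixel of the left view and once per pixel of the right view, each with its own camera parameters. Other sources define the extrinsics in the opposite direction (camera to world), in which case the last line changes accordingly.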
