Public scene intelligent video monitoring method based on vision saliency and depth self-coding

A technology for intelligent video surveillance of public scenes, applied in the field of image processing, that can address problems such as the declining attention and work efficiency of monitoring personnel.

Detailed Description of Embodiments

[0033] In order to make the purpose, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

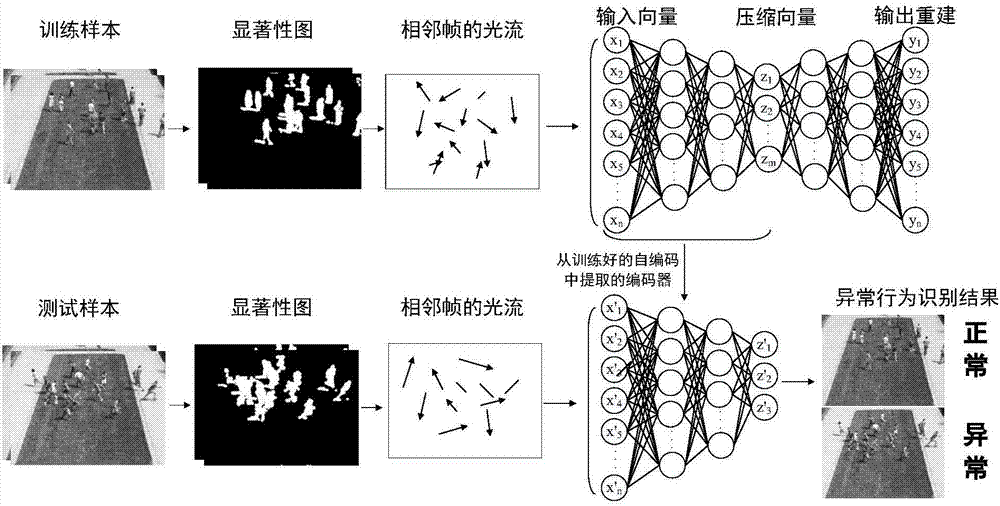

[0034] A public scene intelligent video monitoring method based on visual saliency and deep autoencoding according to the present invention includes the following: decomposing the video of a public scene into individual frames; using visual saliency to extract motion information from the decomposed video frames; and then calculating the optical flow of moving objects between adjacent frames, including the magnitude and direction of their motion velocity. The subsequent detection process is divided into two phases: training and testing. In the training phase, the optical flow of the training samples is used as the input of the deep autoencoder, and the entire deep autoencoder network is trained by minimizing the loss function. In the test phase, the optical flow of th...
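The paragraph above does not specify the optical flow algorithm, the input encoding, the network architecture, or the exact loss function, and its test-phase description is truncated. The following Python sketch therefore illustrates only two generic steps it names, under stated assumptions: computing per-pixel speed magnitude and direction from a dense flow field (u, v), assumed to come from some optical flow estimator, and training an autoencoder on that flow by minimizing a squared reconstruction loss. The single-hidden-layer network is a simplified stand-in for the patent's deep autoencoder, and the names `flow_magnitude_direction`, `flow_to_input`, `TinyAutoencoder`, and `train`, the input encoding, and all hyperparameters are hypothetical. The visual saliency step and the test phase are not shown.

```python
import numpy as np


def flow_magnitude_direction(u, v):
    """Per-pixel speed magnitude and direction (radians) of a dense flow field."""
    magnitude = np.hypot(u, v)      # sqrt(u^2 + v^2)
    direction = np.arctan2(v, u)    # angle in [-pi, pi]
    return magnitude, direction


def flow_to_input(u, v):
    """Hypothetical encoding: flattened magnitude followed by direction / pi."""
    magnitude, direction = flow_magnitude_direction(u, v)
    return np.concatenate([magnitude.ravel(), direction.ravel() / np.pi])


class TinyAutoencoder:
    """One-hidden-layer stand-in for a deep autoencoder (tanh encoder, linear decoder)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.1, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.1, (n_hidden, n_in))
        self.b2 = np.zeros(n_in)

    def forward(self, X):
        H = np.tanh(X @ self.W1 + self.b1)  # encode to a low-dimensional code
        X_hat = H @ self.W2 + self.b2       # decode back to the input space
        return H, X_hat

    def loss(self, X):
        """Loss = 0.5 * squared reconstruction error, averaged over samples."""
        _, X_hat = self.forward(X)
        return 0.5 * float(np.sum((X_hat - X) ** 2)) / X.shape[0]

    def train_step(self, X, lr):
        """One full-batch gradient-descent step; returns the loss before the update."""
        n = X.shape[0]
        H, X_hat = self.forward(X)
        loss = 0.5 * float(np.sum((X_hat - X) ** 2)) / n
        dX_hat = (X_hat - X) / n                      # dLoss/dX_hat
        dW2 = H.T @ dX_hat
        db2 = dX_hat.sum(axis=0)
        dZ = (dX_hat @ self.W2.T) * (1.0 - H ** 2)    # backpropagate through tanh
        dW1 = X.T @ dZ
        db1 = dZ.sum(axis=0)
        self.W1 -= lr * dW1
        self.b1 -= lr * db1
        self.W2 -= lr * dW2
        self.b2 -= lr * db2
        return loss


def train(model, X, epochs=500, lr=0.01):
    """Minimize the reconstruction loss on the training samples; return the loss history."""
    return [model.train_step(X, lr) for _ in range(epochs)]


# Usage with synthetic 4x4 flow patches standing in for real optical flow.
rng = np.random.default_rng(1)
X_train = np.stack([
    flow_to_input(rng.normal(size=(4, 4)), rng.normal(size=(4, 4)))
    for _ in range(64)
])
model = TinyAutoencoder(n_in=X_train.shape[1], n_hidden=8)
history = train(model, X_train)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

With real video, `u` and `v` would come from a dense optical flow estimator applied to adjacent frames (for example OpenCV's `calcOpticalFlowFarneback`), and a deeper, possibly convolutional, network would replace the single hidden layer. Encoding direction as an angle divided by pi is only one illustrative choice; it has a discontinuity at plus or minus pi that a (cos, sin) encoding would avoid.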

© 2025 PatSnap. All rights reserved.