Weakly supervised deep station logo detection method

A weakly supervised station logo detection technique in the field of deep learning. It addresses problems such as high labor and time consumption, and improves precision and recall, data-processing efficiency, and overall station logo detection performance.


Embodiment Construction

[0045] To make the above features and advantages of the present invention clearer, specific embodiments are described in detail below with reference to the accompanying drawings.

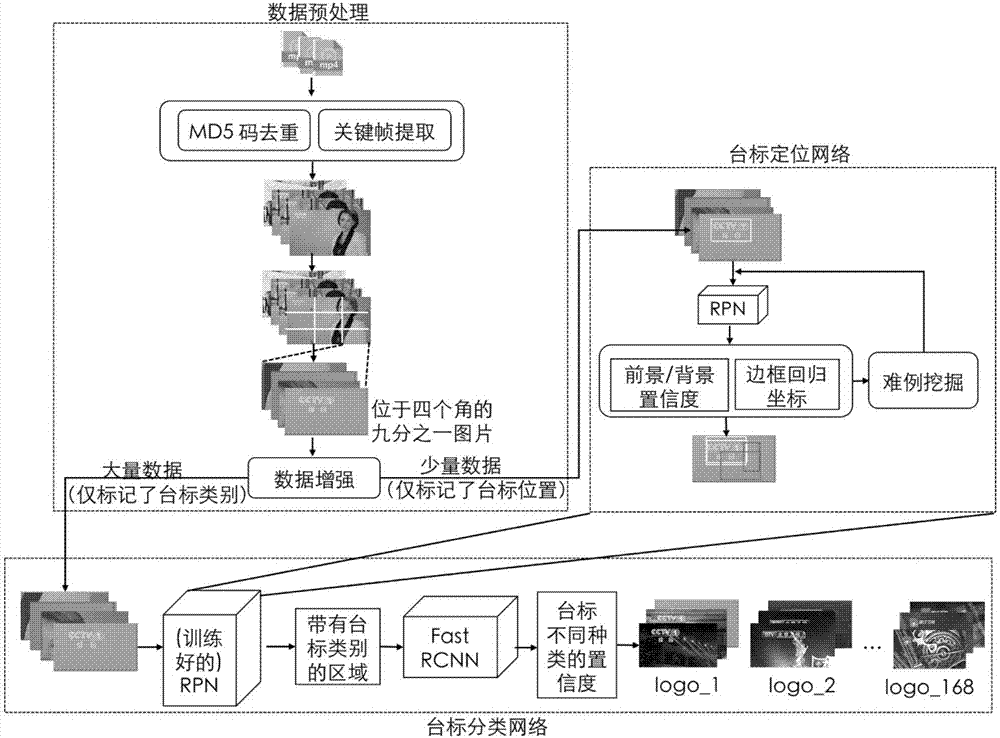

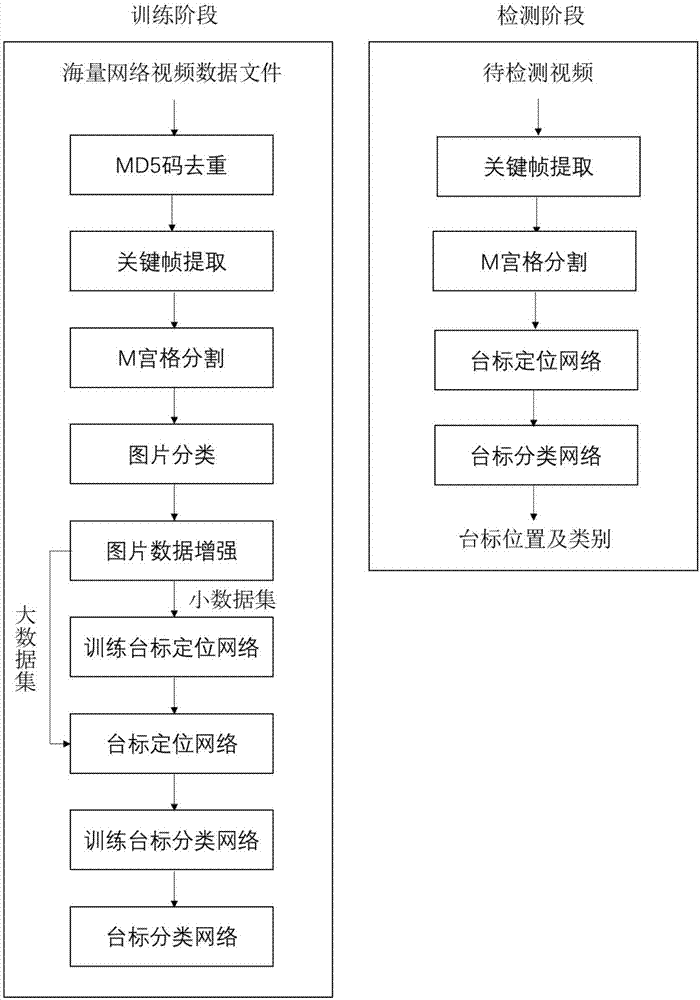

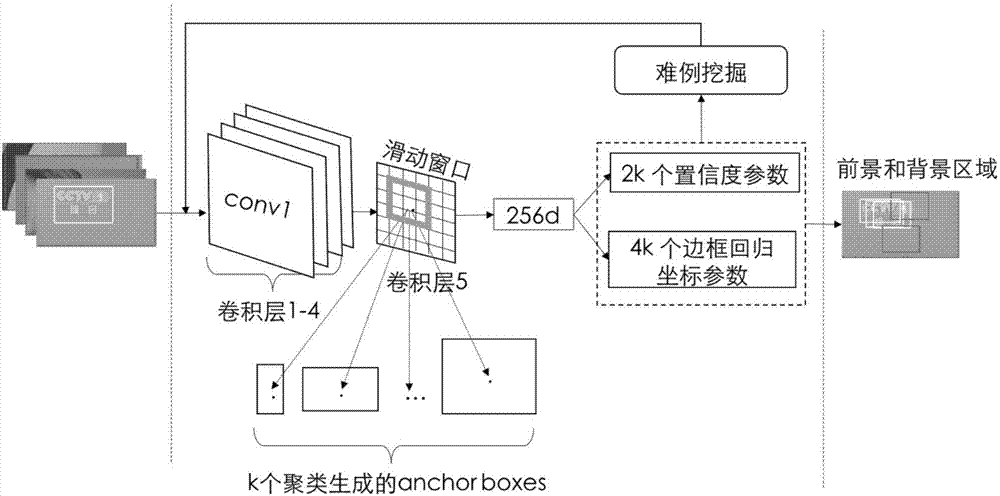

[0046] The present invention provides a weakly supervised deep station logo detection method. The training process of its station logo detection model is shown in Figure 1, and the flow chart of the method is shown in Figure 2. The method comprises a training phase and a detection phase; the training phase mainly includes the following steps:

[0047] (1) Deduplicate the massive collection of network video files by their MD5 hashes, retaining only valid data to facilitate later data processing and ensure effective training.

[0048] (2) Use a key frame extraction method to extract key frames from the deduplicated network videos, divide each key frame into an M-cell ("M-palace") grid, and keep only four 1 / M p...
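The grid division in step (2) can be sketched as computing crop boxes, under stated assumptions. First, M is assumed to be a perfect square (for example the common nine-cell grid, M = 9, i.e. 3 × 3), so each cell covers 1/M of the frame. Second, the text is truncated before it says which four cells are retained. Purely as a hypothetical choice, the sketch keeps the four corner cells, where broadcast logos often appear, and takes the retained indices as a parameter so a different choice can be substituted. All function names here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom are exclusive


def grid_cells(width: int, height: int, m: int) -> List[Box]:
    """Split a width x height frame into an n x n grid of M = n*n cells, listed row by row."""
    n = math.isqrt(m)
    if n * n != m:
        raise ValueError("this sketch assumes M is a perfect square, e.g. 9 for a 3x3 grid")
    xs = [width * i // n for i in range(n + 1)]   # column boundaries
    ys = [height * j // n for j in range(n + 1)]  # row boundaries
    return [(xs[c], ys[r], xs[c + 1], ys[r + 1]) for r in range(n) for c in range(n)]


def corner_indices(m: int) -> List[int]:
    """Row-major indices of the four corner cells (hypothetical choice of retained cells)."""
    n = math.isqrt(m)
    if n * n != m or n < 2:
        raise ValueError("need a square grid with at least 2x2 cells")
    return [0, n - 1, n * (n - 1), n * n - 1]


def keep_cells(width: int, height: int, m: int, keep: Sequence[int]) -> List[Box]:
    """Return the crop boxes of the retained grid cells, in the order given by `keep`."""
    cells = grid_cells(width, height, m)
    return [cells[i] for i in keep]
```

For a 1920 × 1080 key frame with M = 9, `keep_cells(1920, 1080, 9, corner_indices(9))` yields four 640 × 360 boxes: top-left, top-right, bottom-left, bottom-right. The box order (left, top, right, bottom) matches what Pillow's `Image.crop` expects, so each retained cell could be cut out with `frame.crop(box)`.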
