Laser point cloud and image data fusion-based lane line extraction method

A lane line extraction technique that fuses laser point cloud data with image data, applied in image data processing, image analysis, image enhancement and related fields. It addresses the problems that laser point cloud data alone cannot locate, detect and extract lane lines, that existing approaches follow a single line of thinking, and that the road surface cannot be separated.

Embodiment Construction

[0046] The technical solutions of the present invention will be described in detail below with reference to the drawings and embodiments.

[0047] Referring to Figure 4, the lane line detection process based on multi-source data fusion provided by the embodiment of the present invention is implemented as follows:

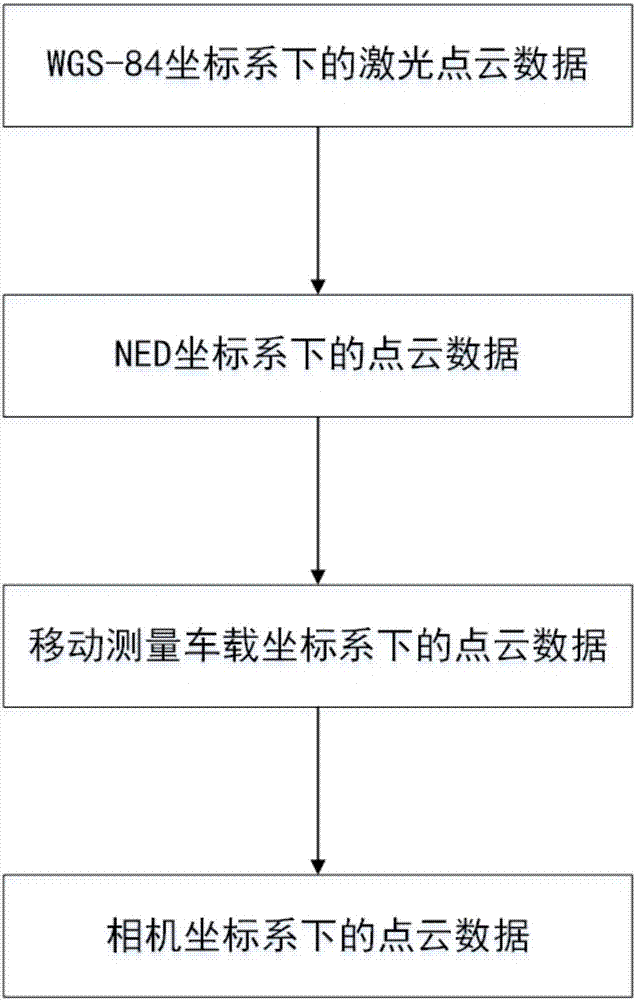

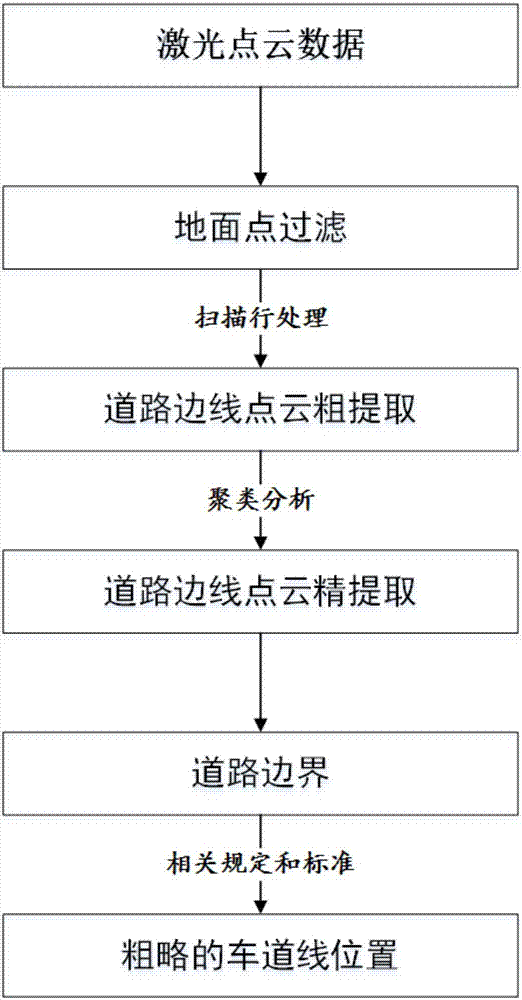

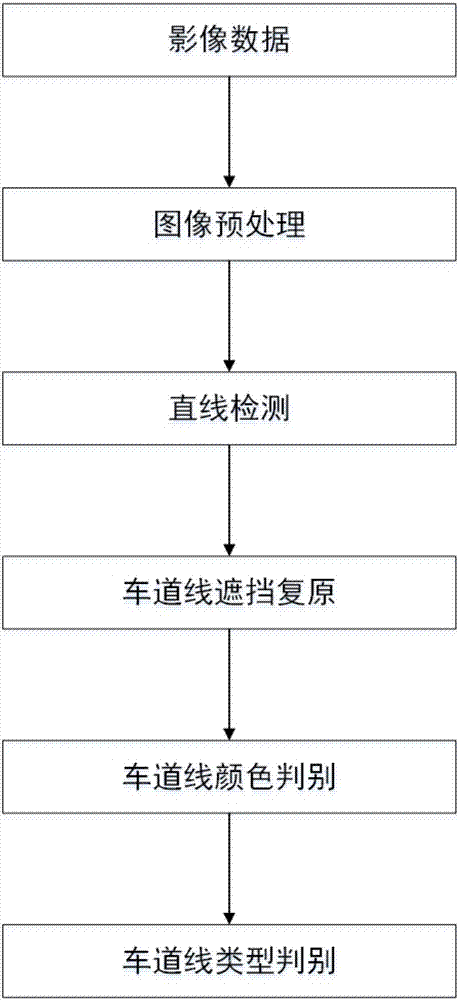

[0048] Step 1: Data preprocessing. This step covers preprocessing of the original laser point cloud (to extract the road surface point cloud) and preprocessing of the image. Image preprocessing comprises image segmentation, image denoising and image enhancement. The segmentation stage extracts the region of interest in the image (that is, the lane lines), using the lidar data together with the characteristics of the photographic image itself.
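The image side of Step 1 can be illustrated with a minimal sketch. It is not the patented procedure: the 3x3 median filter, the percentile contrast stretch, the fixed brightness threshold, the order of the three stages, and every name (`median_denoise`, `stretch_contrast`, `segment_lane_candidates`, `preprocess_image`, `road_mask`) are assumptions made for illustration. `road_mask` stands in for a road region that is presumed to have been derived from the lidar data and projected into the image.

```python
import numpy as np


def median_denoise(img: np.ndarray) -> np.ndarray:
    """Apply a 3x3 median filter, replicating border pixels."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    # Stack the nine shifted views of the image, then take the per-pixel median.
    windows = np.stack(
        [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    )
    return np.median(windows, axis=0).astype(img.dtype)


def stretch_contrast(img: np.ndarray, low_pct: float = 2.0,
                     high_pct: float = 98.0) -> np.ndarray:
    """Linearly stretch intensities between two percentiles to 0..255."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to enhance
        return np.zeros_like(img, dtype=np.uint8)
    scaled = (img.astype(np.float64) - lo) / (hi - lo)
    return (np.clip(scaled, 0.0, 1.0) * 255).astype(np.uint8)


def segment_lane_candidates(img: np.ndarray, road_mask: np.ndarray,
                            threshold: int = 200) -> np.ndarray:
    """Keep bright pixels that fall inside the (assumed lidar-derived) road mask."""
    return (img >= threshold) & road_mask


def preprocess_image(gray: np.ndarray, road_mask: np.ndarray,
                     threshold: int = 200) -> np.ndarray:
    """Denoise, enhance, then segment a grayscale image (assumed ordering)."""
    denoised = median_denoise(gray)
    enhanced = stretch_contrast(denoised)
    return segment_lane_candidates(enhanced, road_mask, threshold)
```

Restricting the threshold to the road mask is what lets the lidar data suppress bright objects off the road, such as pale walls or sky, that a purely image-based threshold would keep.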

[0049] Preprocessing of the original laser point cloud: First, find the trajectory points corresponding to each group of point clouds, use the trajectory points to remove the interf...
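As an illustration of the first operation only, finding the trajectory point that corresponds to each group of point clouds, the sketch below matches by nearest timestamp. The source does not state the matching criterion, so timestamp matching, the function name `match_trajectory_points`, and both parameter names are assumptions. The sketch also assumes the trajectory timestamps are sorted in ascending order and contain at least two entries.

```python
import numpy as np


def match_trajectory_points(group_times, traj_times) -> np.ndarray:
    """Return, for each point cloud group, the index of the nearest trajectory point.

    group_times: one timestamp per point cloud group (hypothetical input).
    traj_times:  ascending timestamps of the trajectory points (hypothetical input).
    """
    group_times = np.asarray(group_times, dtype=np.float64)
    traj_times = np.asarray(traj_times, dtype=np.float64)

    # Index of the first trajectory timestamp >= each group timestamp,
    # clipped so that both neighbours (left and right) always exist.
    right = np.searchsorted(traj_times, group_times)
    right = np.clip(right, 1, len(traj_times) - 1)
    left = right - 1

    # Pick whichever neighbour is closer in time; ties go to the earlier point.
    choose_left = (group_times - traj_times[left]) <= (traj_times[right] - group_times)
    return np.where(choose_left, left, right)
```

Because the lookup is a binary search, it scales to long trajectories without comparing every group against every trajectory point.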
