Joint sight line direction calculation method of left and right eye images of human eyes

A gaze direction calculation method, applied in the field of computer vision and image processing, that addresses the problem of noise in monocular (single-eye) images and mitigates inaccurate prediction results.


Embodiment Construction

[0034] The specific implementation of the present invention will be described in detail below in conjunction with the accompanying drawings.

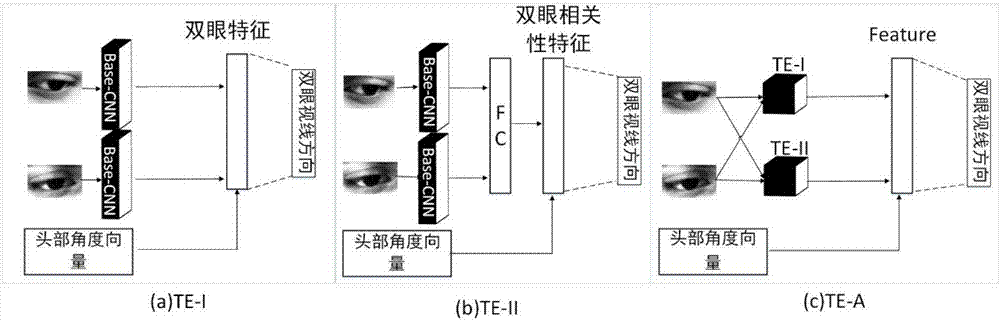

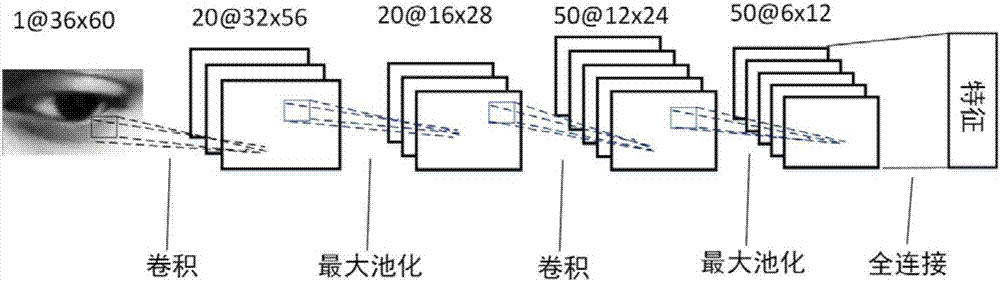

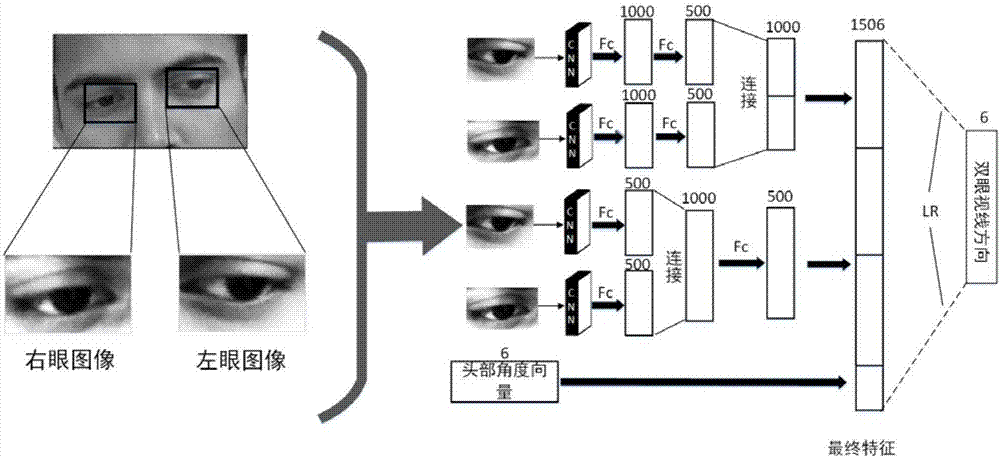

[0035] The present invention provides a gaze direction calculation method that combines the left- and right-eye images of a person: features extracted from the eye images are taken as input and used to predict the direction of the person's gaze. The method places no additional requirements on the system and uses only eye images captured by a single camera as input. Moreover, by combining the image information of both eyes, the invention can eliminate some of the errors that arise when one eye's image is heavily affected by noise, and thereby achieves better robustness than comparable methods.
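This excerpt does not say how the two eyes' information is combined (the combination may well happen inside a learned model that receives both eye images). As one illustration of why pooling both eyes can suppress single-eye noise, the sketch below fuses two per-eye gaze estimates by confidence-weighted averaging of unit vectors. The functions `pitch_yaw_to_vector`, `fuse_binocular`, and `angular_error_deg`, the axis convention, and the confidence weights are all hypothetical and are not taken from the patent.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pitch_yaw_to_vector(pitch: float, yaw: float) -> Vec3:
    """Convert gaze angles in radians to a unit 3D direction.

    Assumed convention (common in gaze datasets, camera looking along -z):
    x = -cos(pitch) * sin(yaw), y = -sin(pitch), z = -cos(pitch) * cos(yaw).
    """
    return (-math.cos(pitch) * math.sin(yaw),
            -math.sin(pitch),
            -math.cos(pitch) * math.cos(yaw))


def fuse_binocular(left: Vec3, right: Vec3, w_left: float, w_right: float) -> Vec3:
    """Hypothetical fusion: weighted mean of two unit vectors, renormalized.

    The weights stand in for per-eye reliability; how such weights would be
    obtained is an assumption outside the source text.
    """
    total = w_left + w_right
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    v = [(w_left * l + w_right * r) / total for l, r in zip(left, right)]
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0:
        raise ValueError("per-eye directions cancel out")
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def angular_error_deg(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))


if __name__ == "__main__":
    truth = pitch_yaw_to_vector(0.0, 0.1)
    left = truth                            # clean left-eye estimate
    right = pitch_yaw_to_vector(0.0, 0.6)   # noisy right-eye estimate
    fused = fuse_binocular(left, right, 1.0, 1.0)
    print(angular_error_deg(right, truth), angular_error_deg(fused, truth))
```

In this toy case, equal weights place the fused direction at the bisector (yaw 0.35 rad), halving the noisy eye's error. Giving the cleaner eye a larger weight moves the result closer still to the truth, which is the intuition behind combining both eyes rather than trusting either one alone.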

[0036] First, the eye images are acquired through the following procedure. A single camera captures an image containing the user's face region. Use existin...
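The excerpt is cut off before naming the existing tool applied after capture. A common next step in such pipelines, assumed here rather than taken from the source, is to locate eye-corner landmarks with an off-the-shelf detector and crop a fixed-aspect patch around each eye. The sketch below computes such a crop box in pure Python; `eye_crop_box`, `crop`, the landmark inputs, and the `scale`/`aspect` defaults are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1); x1 and y1 are exclusive


def eye_crop_box(corner_a: Point, corner_b: Point, img_w: int, img_h: int,
                 scale: float = 1.5, aspect: float = 0.6) -> Box:
    """Hypothetical crop box centred between two eye-corner landmarks.

    The box width is the corner-to-corner distance times `scale`, the
    height is width times `aspect`, and the box is clamped to the image.
    """
    cx = (corner_a[0] + corner_b[0]) / 2
    cy = (corner_a[1] + corner_b[1]) / 2
    eye_w = math.dist(corner_a, corner_b) * scale
    eye_h = eye_w * aspect
    x0 = max(0, int(round(cx - eye_w / 2)))
    y0 = max(0, int(round(cy - eye_h / 2)))
    x1 = min(img_w, int(round(cx + eye_w / 2)))
    y1 = min(img_h, int(round(cy + eye_h / 2)))
    return (x0, y0, x1, y1)


def crop(image: List[List[int]], box: Box) -> List[List[int]]:
    """Cut a box out of a row-major image stored as a list of pixel rows."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


if __name__ == "__main__":
    # Example: eye corners at (100, 200) and (140, 200) in a 640x480 frame.
    print(eye_crop_box((100, 200), (140, 200), 640, 480))
```

Calling `eye_crop_box` once per eye yields the left- and right-eye patches; fixing the aspect ratio keeps both patches the same shape, which is convenient if they are later fed to a model together.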
