Method of processing emotion in voice, and mobile terminal

Mobile terminal and emotion technology, applied in speech analysis, instruments, etc., which can solve the problem of low communication efficiency in voice calls.


Examples

Embodiment 1

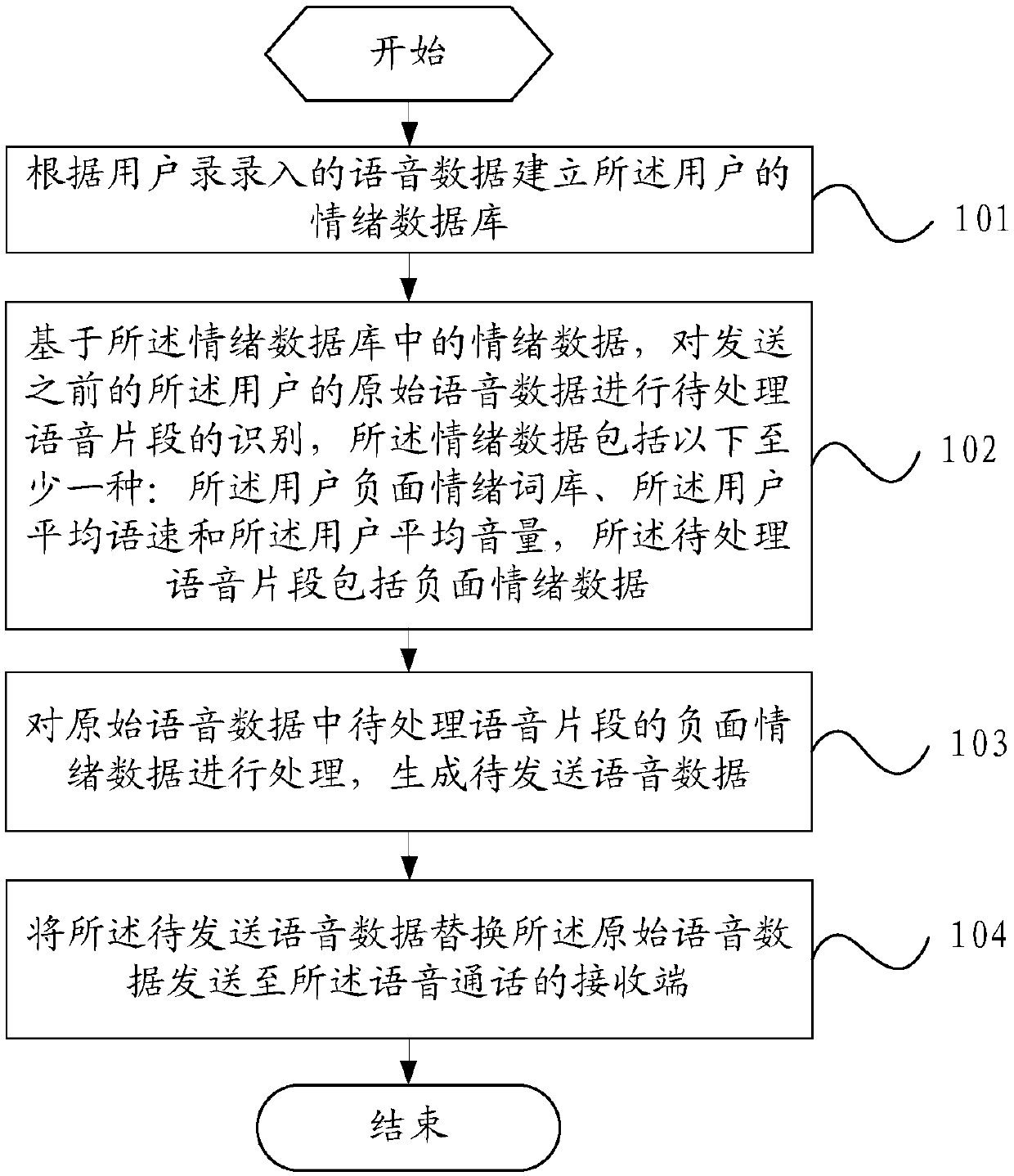

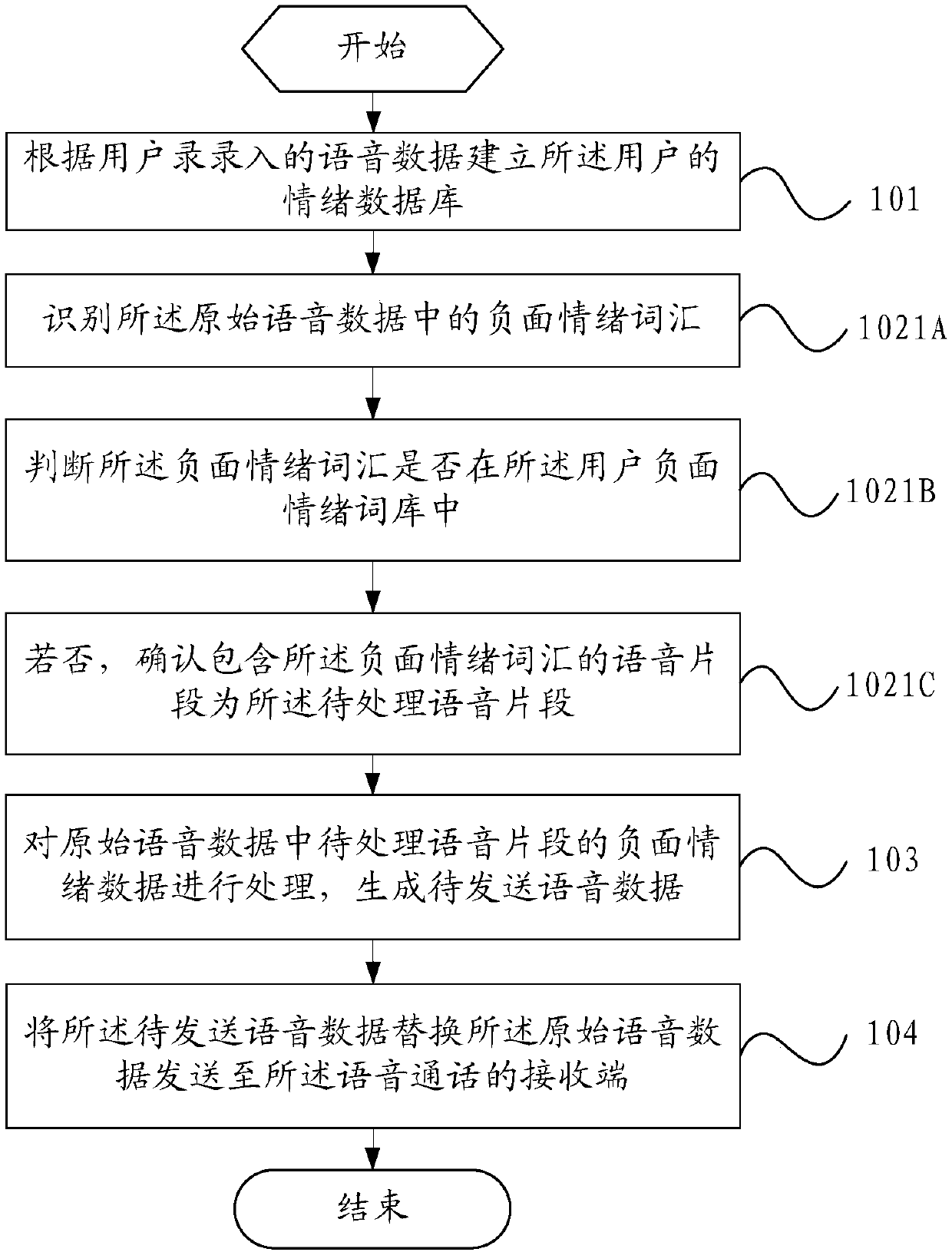

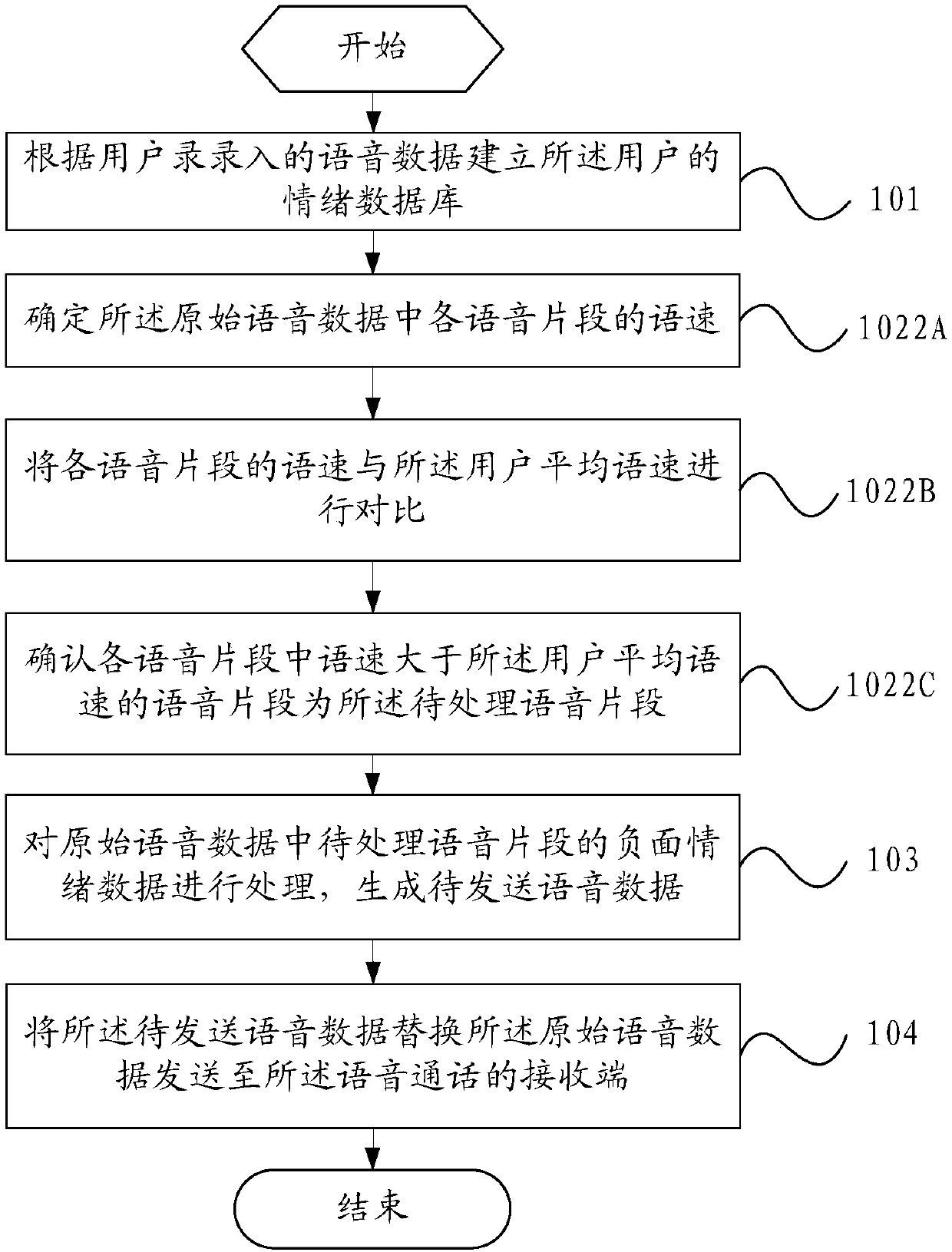

[0032] Refer to Figure 1, which shows a flowchart of the steps of a method for processing emotion in speech according to Embodiment 1 of the present invention. The method may specifically include the following steps:

[0033] Step 101: establish the user's emotion database according to the voice data entered by the user.

[0034] In the embodiment of the present invention, the emotion database can be established by analyzing the user's voice data, so that the emotion data in the database are specific to each user. For example, for a user who usually speaks loudly, the average volume used to judge that the user is emotionally abnormal is higher; for a user who usually speaks quickly, the average speech rate used to judge that the user is emotionally abnormal is faster.
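The per-user calibration described in [0034] can be illustrated with a minimal sketch. It assumes each enrolled utterance has already been reduced to a mean volume (dB) and a speech rate (syllables per second). The `Utterance` type, the "mean plus k standard deviations" rule, and the default `k = 2.0` are hypothetical choices made for illustration. The patent does not specify them.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List


@dataclass
class Utterance:
    # Hypothetical per-utterance features extracted from the user's voice data.
    volume_db: float          # mean loudness of the utterance
    syllables_per_sec: float  # speech rate of the utterance


@dataclass
class EmotionBaseline:
    avg_volume_db: float
    avg_speech_rate: float
    volume_threshold_db: float
    speech_rate_threshold: float


def build_baseline(samples: List[Utterance], k: float = 2.0) -> EmotionBaseline:
    """Derive user-specific averages and abnormal-emotion thresholds.

    Assumption: a value more than k population standard deviations above the
    user's own mean counts as abnormal. All else being equal, a louder or
    faster speaker therefore gets a higher threshold, matching [0034].
    """
    if not samples:
        raise ValueError("at least one enrolled utterance is required")
    volumes = [s.volume_db for s in samples]
    rates = [s.syllables_per_sec for s in samples]
    avg_v, avg_r = mean(volumes), mean(rates)
    return EmotionBaseline(
        avg_volume_db=avg_v,
        avg_speech_rate=avg_r,
        volume_threshold_db=avg_v + k * pstdev(volumes),
        speech_rate_threshold=avg_r + k * pstdev(rates),
    )


# Usage: two hypothetical users with the same spread but different habits.
quiet = build_baseline([Utterance(55, 3.8), Utterance(57, 4.0), Utterance(56, 4.2)])
loud = build_baseline([Utterance(68, 5.0), Utterance(70, 5.2), Utterance(69, 5.4)])
```

Because each threshold is anchored to the user's own mean, the same absolute loudness can be normal for one user and abnormal for another. This is the behaviour the paragraph describes.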

[0035] In practical applications, the emotion database corresponds to the current mobile terminal and is stored under a specified path of the mobile terminal. The emotion database saves the emotion data of...
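Paragraph [0035] says the database is stored under a specified path on the terminal, but the excerpt does not give the storage format. The sketch below assumes a JSON file. The function names, field names, and file name are all hypothetical. A temporary directory stands in for the real path, which the excerpt does not give.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


def save_emotion_db(db: dict, path: Path) -> None:
    """Write one user's emotion data to the given path (hypothetical JSON layout)."""
    path.parent.mkdir(parents=True, exist_ok=True)
    # Write to a sibling temp file, then swap it in, so an interrupted write
    # does not leave a half-written database at the specified path.
    tmp = path.parent / (path.name + ".tmp")
    tmp.write_text(json.dumps(db, ensure_ascii=False, indent=2), encoding="utf-8")
    tmp.replace(path)


def load_emotion_db(path: Path) -> Optional[dict]:
    """Return the stored emotion data, or None if no database exists yet."""
    if not path.exists():
        return None
    return json.loads(path.read_text(encoding="utf-8"))


if __name__ == "__main__":
    # A temporary directory stands in for the terminal's specified storage path.
    with tempfile.TemporaryDirectory() as root:
        db_path = Path(root) / "emotion" / "user_emotion_db.json"
        save_emotion_db({"avg_speech_rate": 4.0, "avg_volume_db": 56.0}, db_path)
        print(load_emotion_db(db_path))
```

Returning `None` for a missing file lets the caller decide when to run the enrollment step (Step 101) for a new user.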

Embodiment 2

[0111] Refer to Figure 2, which shows a structural block diagram of a mobile terminal according to Embodiment 2 of the present invention.

[0112] The mobile terminal 200 includes an emotion database establishment module 201, a to-be-processed voice segment identification module 202, a voice processing module 203, and a sending module 204.
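The four modules of the mobile terminal 200 can be pictured as a simple pipeline. In the sketch below, each module is a caller-supplied callable. Only the order of the stages (build, identify, process, send) comes from the text. The class name, the callable signatures, and the toy stand-ins in the usage lines are hypothetical. The excerpt does not say what the voice processing module 203 does to a segment, so `process` is only a placeholder.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class MobileTerminalPipeline:
    """Hypothetical wiring of modules 201-204; only the stage order comes from the text."""
    build_db: Callable[[list], dict]       # module 201: emotion database establishment
    identify: Callable[[list, dict], set]  # module 202: returns indices of segments to process
    process: Callable[[Any], Any]          # module 203: placeholder, behaviour not given in the excerpt
    send: Callable[[list], None]           # module 204: hands voice data to the transport layer
    db: dict = field(default_factory=dict)

    def enroll(self, voice_data: list) -> None:
        # Module 201 runs on the voice data the user enters.
        self.db = self.build_db(voice_data)

    def transmit(self, segments: list) -> None:
        # Module 202 flags segments, 203 processes only those, 204 sends the result.
        flagged = self.identify(segments, self.db)
        outgoing = [self.process(s) if i in flagged else s for i, s in enumerate(segments)]
        self.send(outgoing)


# Usage with toy stand-ins for each module (all hypothetical).
sent: list = []
pipe = MobileTerminalPipeline(
    build_db=lambda data: {"negative_lexicon": {"awful"}},
    identify=lambda segs, db: {i for i, s in enumerate(segs) if s in db["negative_lexicon"]},
    process=lambda s: "[processed]",
    send=sent.append,
)
pipe.enroll(["hello", "fine"])
pipe.transmit(["this", "is", "awful"])
```

Passing the modules in as functions keeps the orchestration separate from each module's internals. The identification rule or the processing step can therefore be replaced without touching the sending path.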

[0113] The functions of each module and the interactions between the modules are described in detail below.

[0114] The emotion database establishment module 201 is configured to establish the user's emotion database according to the voice data entered by the user.

[0115] The to-be-processed voice segment identification module 202 is configured to identify, based on the emotion data in the emotion database, the voice segments to be processed in the user's original voice data before that data is sent. The emotion data include at least one of the following: the user's negative emotion lexicon, the user's average speech rate, and the user's average ...
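The identification rule in [0115] can be illustrated with a sketch. It assumes each candidate segment has already been transcribed and measured. The `VoiceSegment` and `EmotionData` types, the use of average volume as the third criterion (suggested by [0034], since the list here is truncated), whitespace tokenization, and the margin values are all assumptions. Under these assumptions, a segment is flagged if any available criterion fires. Criteria absent from the emotion data are skipped, in keeping with "at least one of the following".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VoiceSegment:
    text: str           # hypothetical speech-recognition transcript of the segment
    speech_rate: float  # syllables per second
    volume_db: float    # mean loudness


@dataclass
class EmotionData:
    negative_lexicon: frozenset = frozenset()
    avg_speech_rate: Optional[float] = None
    avg_volume_db: Optional[float] = None


def segments_to_process(
    segments: List[VoiceSegment],
    data: EmotionData,
    rate_margin: float = 1.3,       # assumed: 30% faster than the user's average
    volume_margin_db: float = 6.0,  # assumed: 6 dB louder than the user's average
) -> List[int]:
    """Return indices of segments to flag before the voice data is sent."""
    flagged = []
    for i, seg in enumerate(segments):
        # Simple lowercase whitespace tokenization; punctuation is not stripped.
        words = set(seg.text.lower().split())
        hits_lexicon = bool(words & data.negative_lexicon)
        too_fast = (data.avg_speech_rate is not None
                    and seg.speech_rate > data.avg_speech_rate * rate_margin)
        too_loud = (data.avg_volume_db is not None
                    and seg.volume_db > data.avg_volume_db + volume_margin_db)
        if hits_lexicon or too_fast or too_loud:
            flagged.append(i)
    return flagged


# Usage with made-up values for one user.
data = EmotionData(frozenset({"shut", "stupid"}), avg_speech_rate=4.0, avg_volume_db=56.0)
segs = [
    VoiceSegment("see you at noon", 4.1, 55.0),   # calm
    VoiceSegment("shut up", 4.0, 57.0),           # lexicon hit
    VoiceSegment("why are you late", 5.6, 58.0),  # faster than 4.0 * 1.3
    VoiceSegment("fine", 3.9, 63.5),              # louder than 56.0 + 6.0
]
flagged = segments_to_process(segs, data)
```

Because the thresholds are computed from the user's own averages, a naturally loud or fast speaker is not flagged for their ordinary speaking style.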

Embodiment 3

[0135] Figure 3 is a schematic diagram of the hardware structure of a mobile terminal for implementing various embodiments of the present invention. The mobile terminal 300 includes, but is not limited to, a radio frequency unit 301, a network module 302, an audio output unit 303, an input unit 304, a sensor 305, a display unit 306, a user input unit 307, an interface unit 308, a memory 309, a processor 310, a power supply 311, and other components. Those skilled in the art will understand that the structure of the mobile terminal shown in Figure 3 does not limit the mobile terminal. The mobile terminal may include more or fewer components than shown, combine certain components, or arrange the components differently. In the embodiment of the present invention, the mobile terminal includes, but is not limited to, a mobile phone, a tablet computer, a notebook computer, a palmtop computer, a vehicle-mounted terminal, a wearable device, and a pedom...
