96 results for "Rate of speech" patented technology

Converting text-to-speech and adjusting corpus

Active | US20080270139A1 | Tags: Improve voice quality, Speech recognition, Speech synthesis, Speech sound, Text to speech conversion

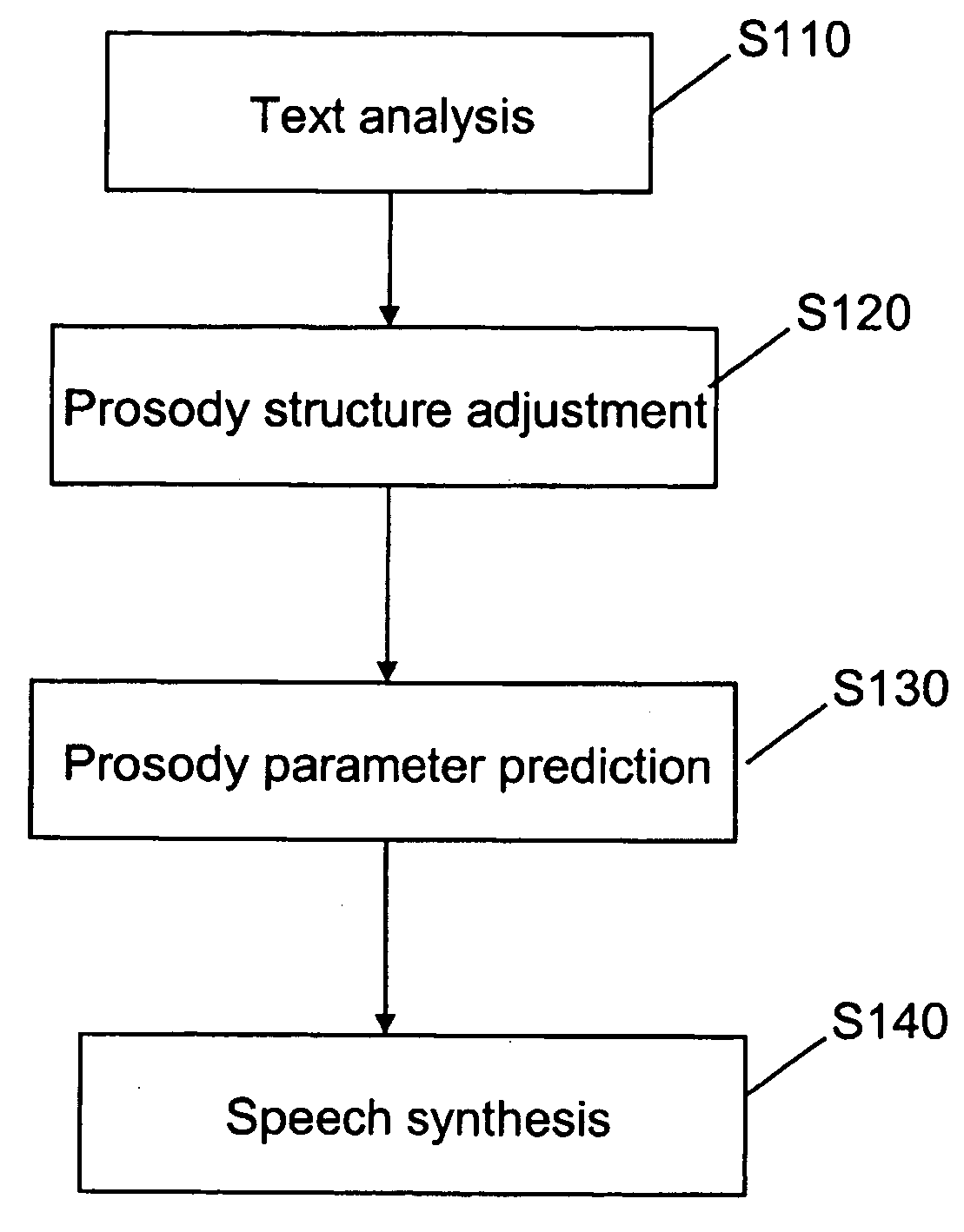

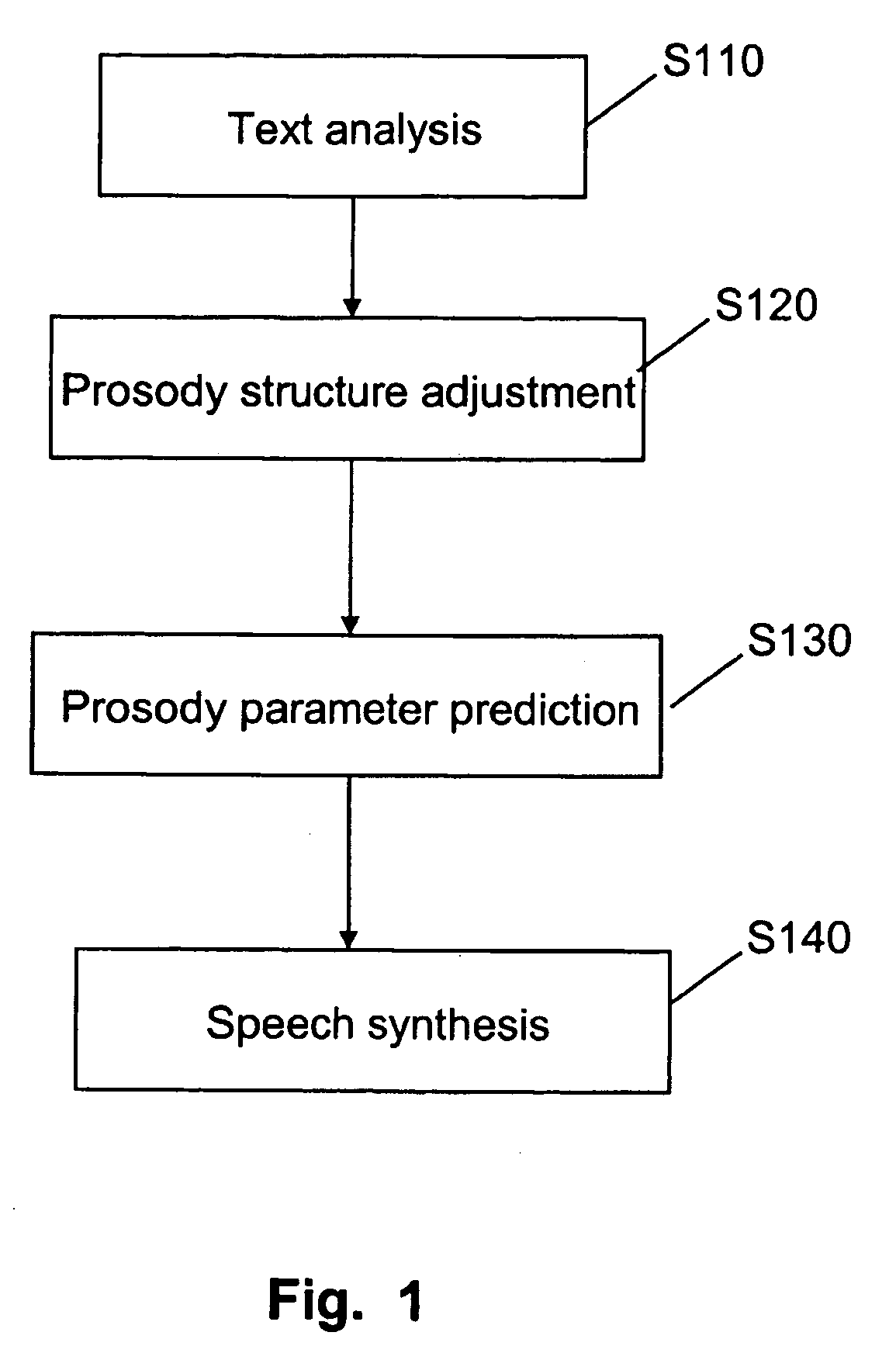

The present invention provides a method and apparatus for text-to-speech conversion, and a method and apparatus for adjusting a corpus. The text-to-speech method comprises: a text analysis step of parsing the text to obtain descriptive prosody annotations of the text based on a TTS model generated from a first corpus; a prosody parameter prediction step of predicting the prosody parameters of the text from the result of the text analysis step; and a speech synthesis step of synthesizing speech for the text based on those prosody parameters. The descriptive prosody annotations include the prosody structure of the text, and this prosody structure is adjusted according to a target speech speed for the synthesized speech. Adjusting the prosody structure to the target speech speed improves the quality of the synthesized speech.

Owner:CERENCE OPERATING CO

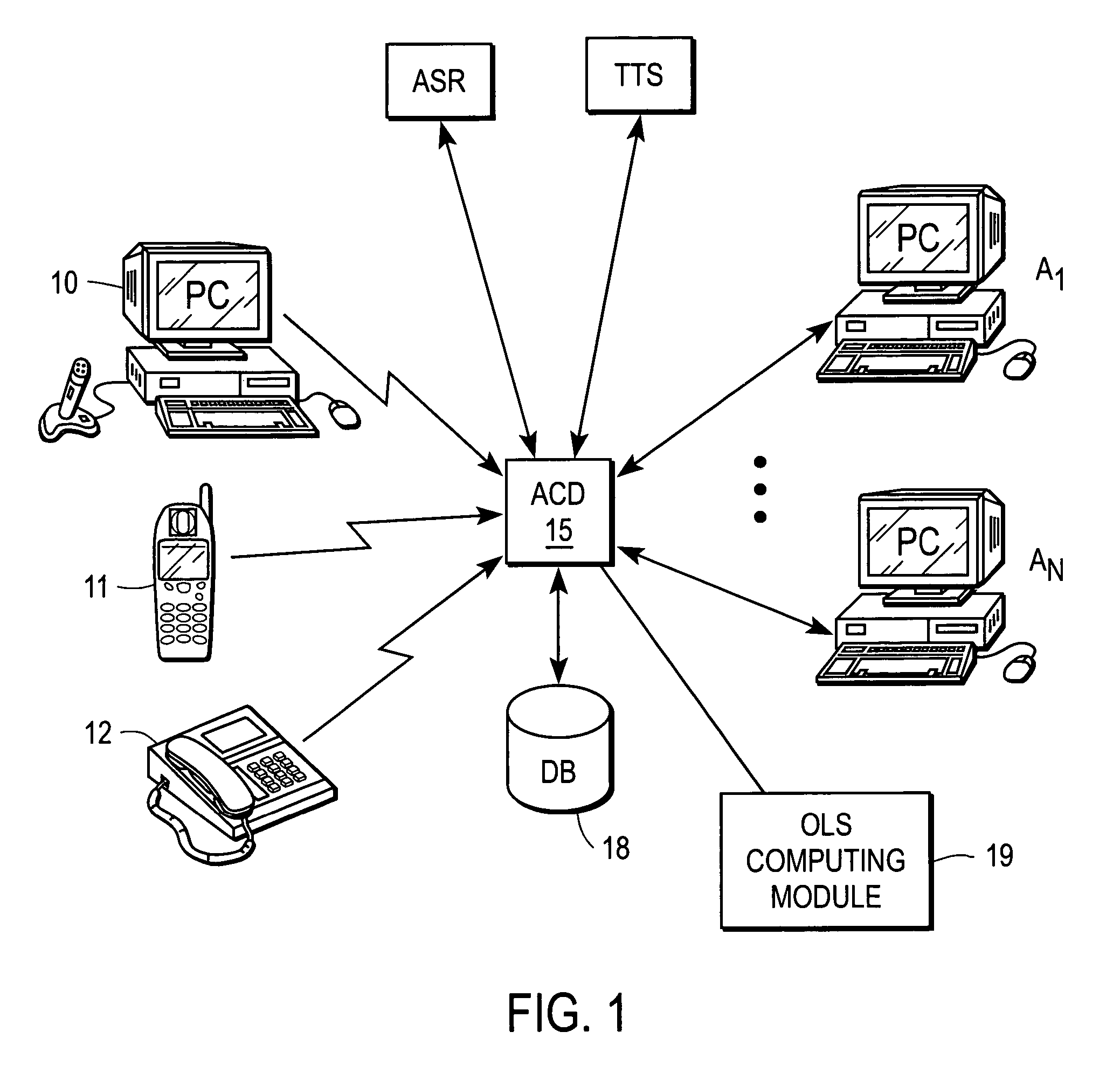

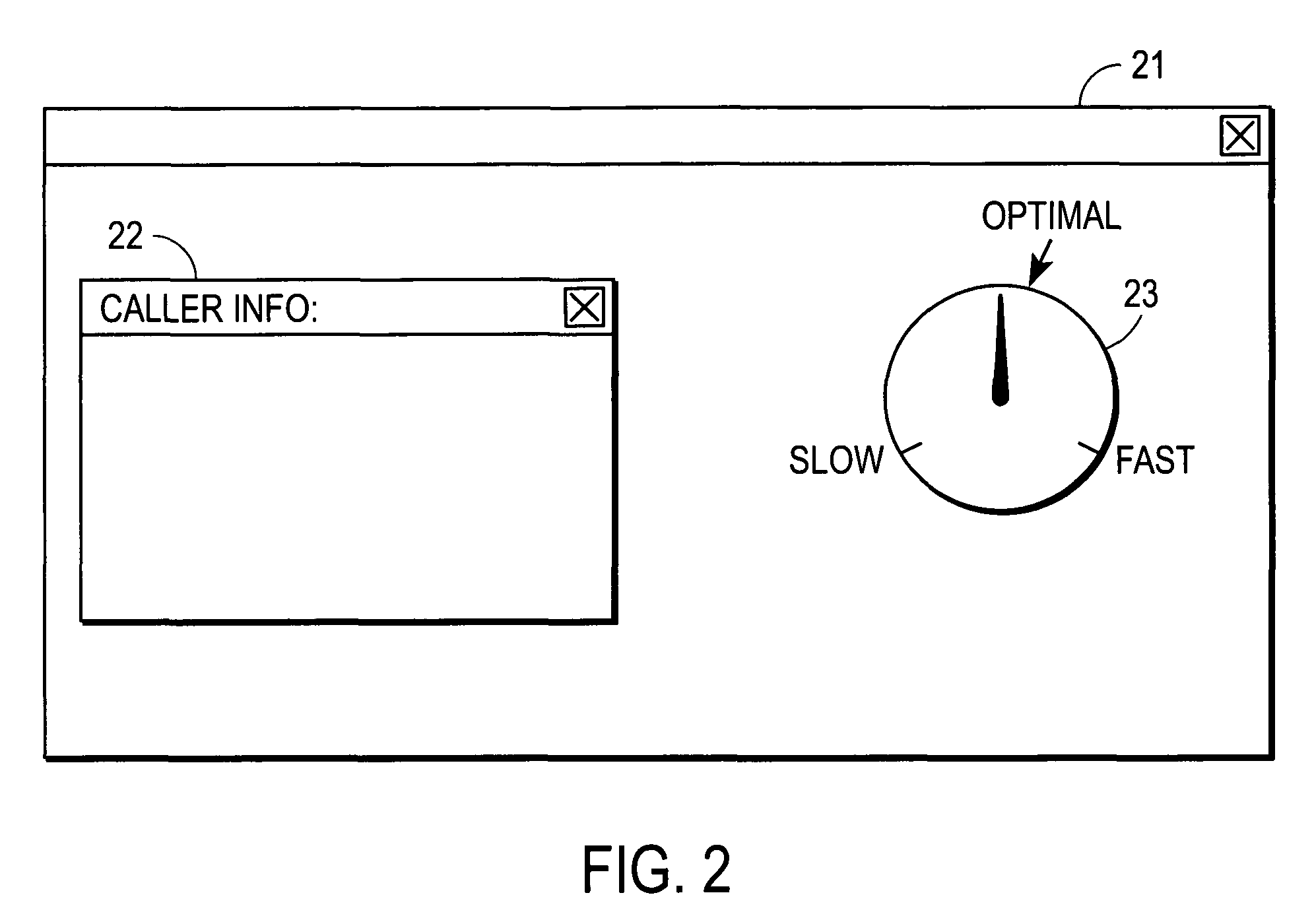

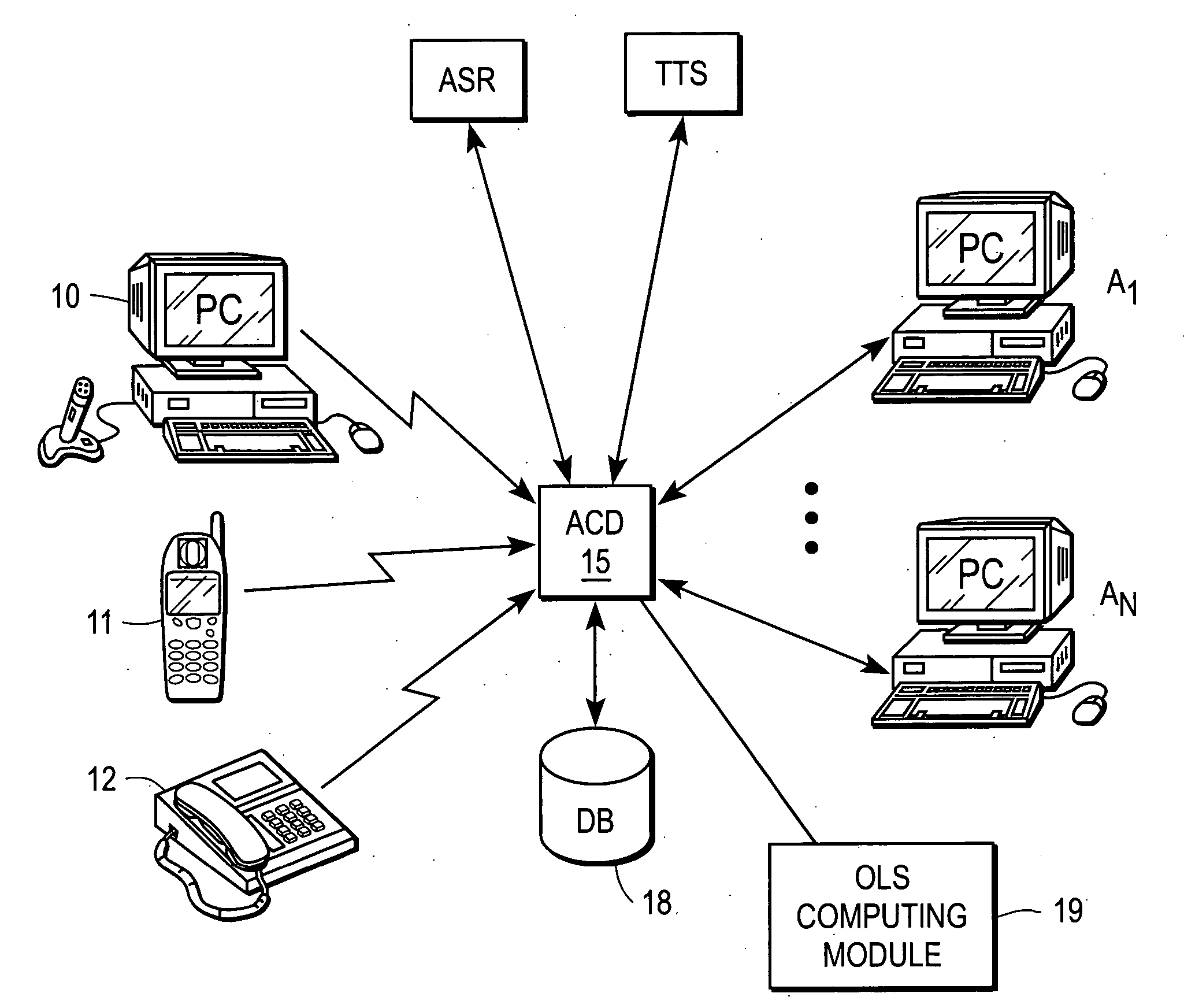

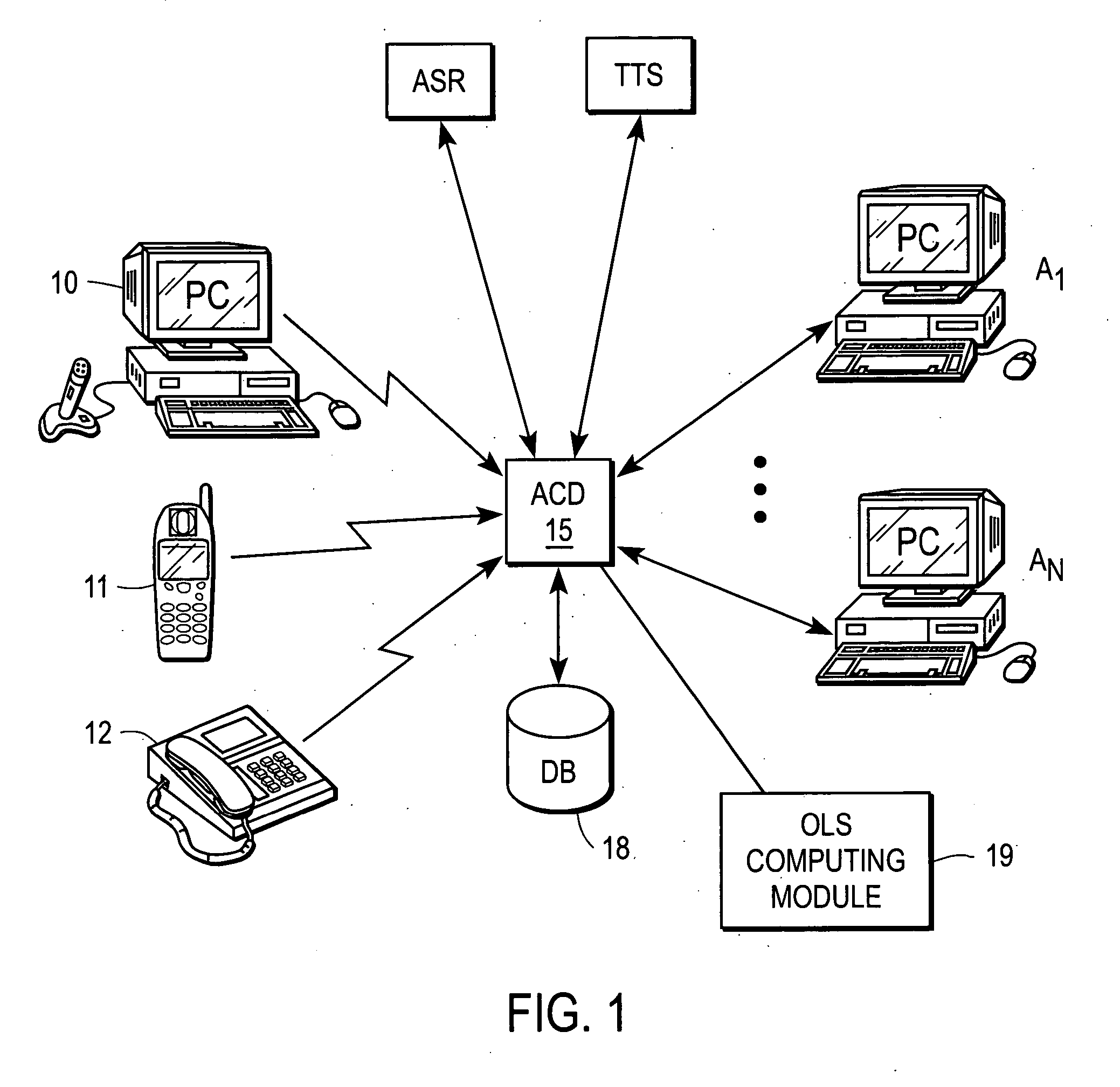

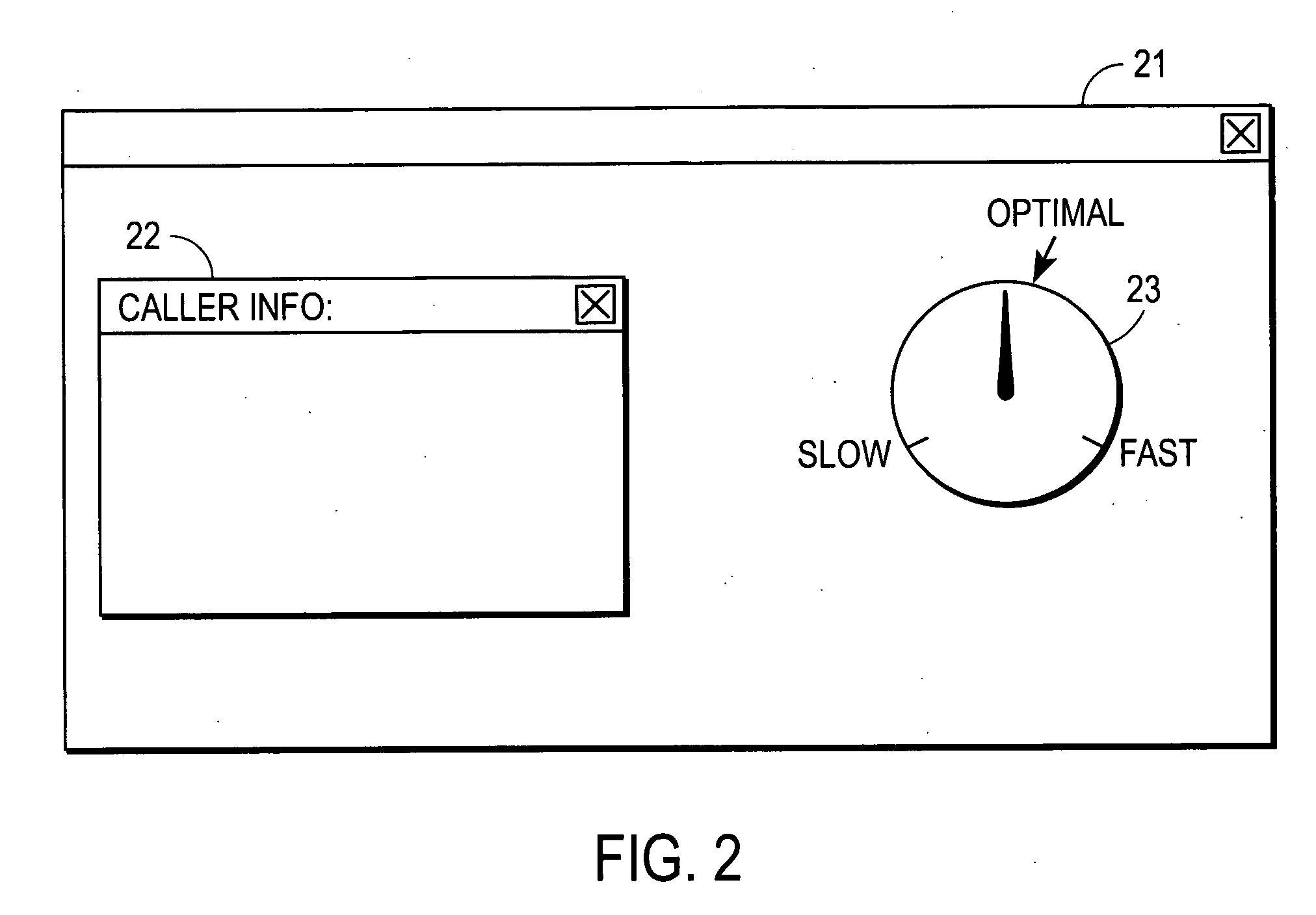

System and method for optimizing the rate of speech of call center agents

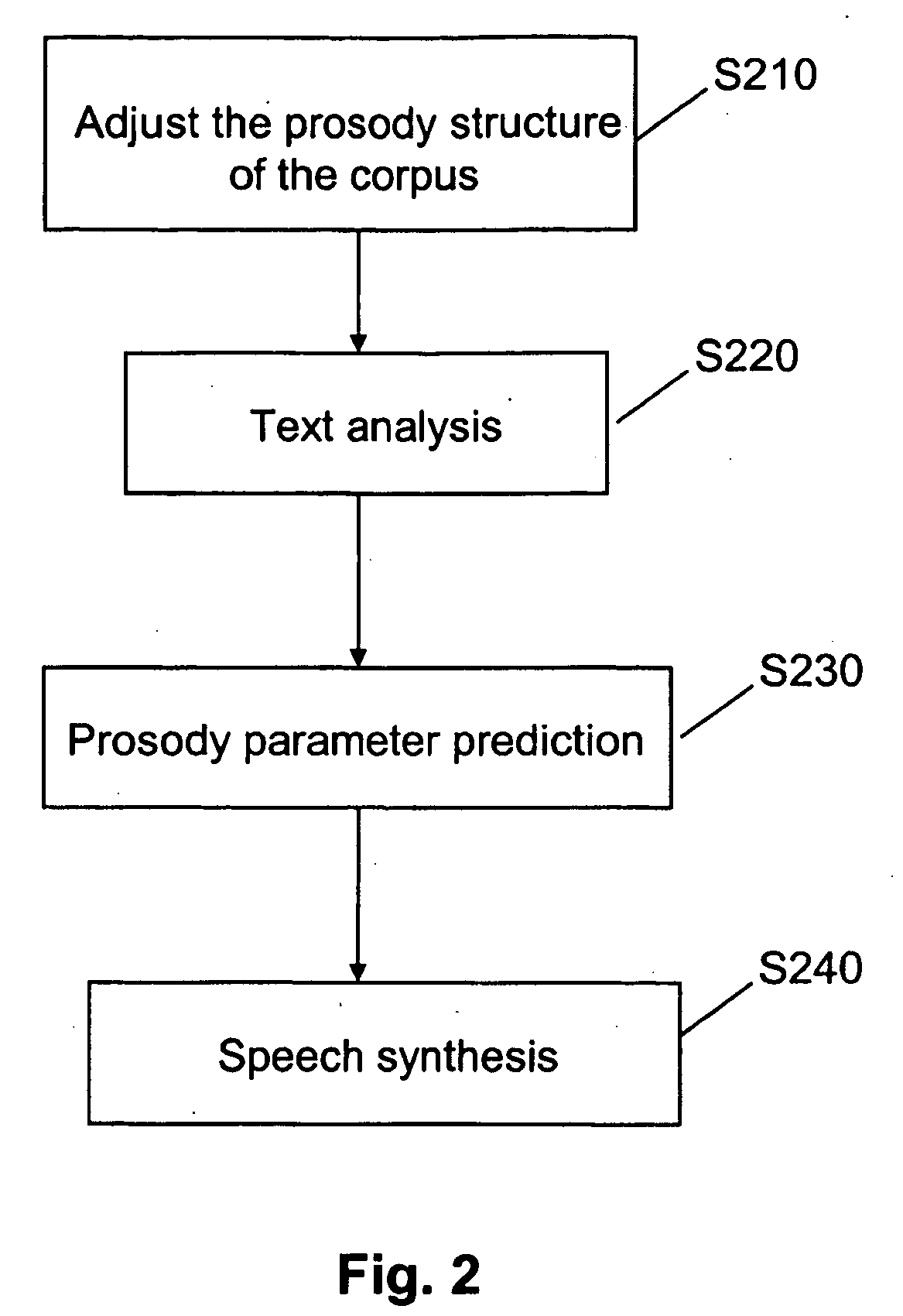

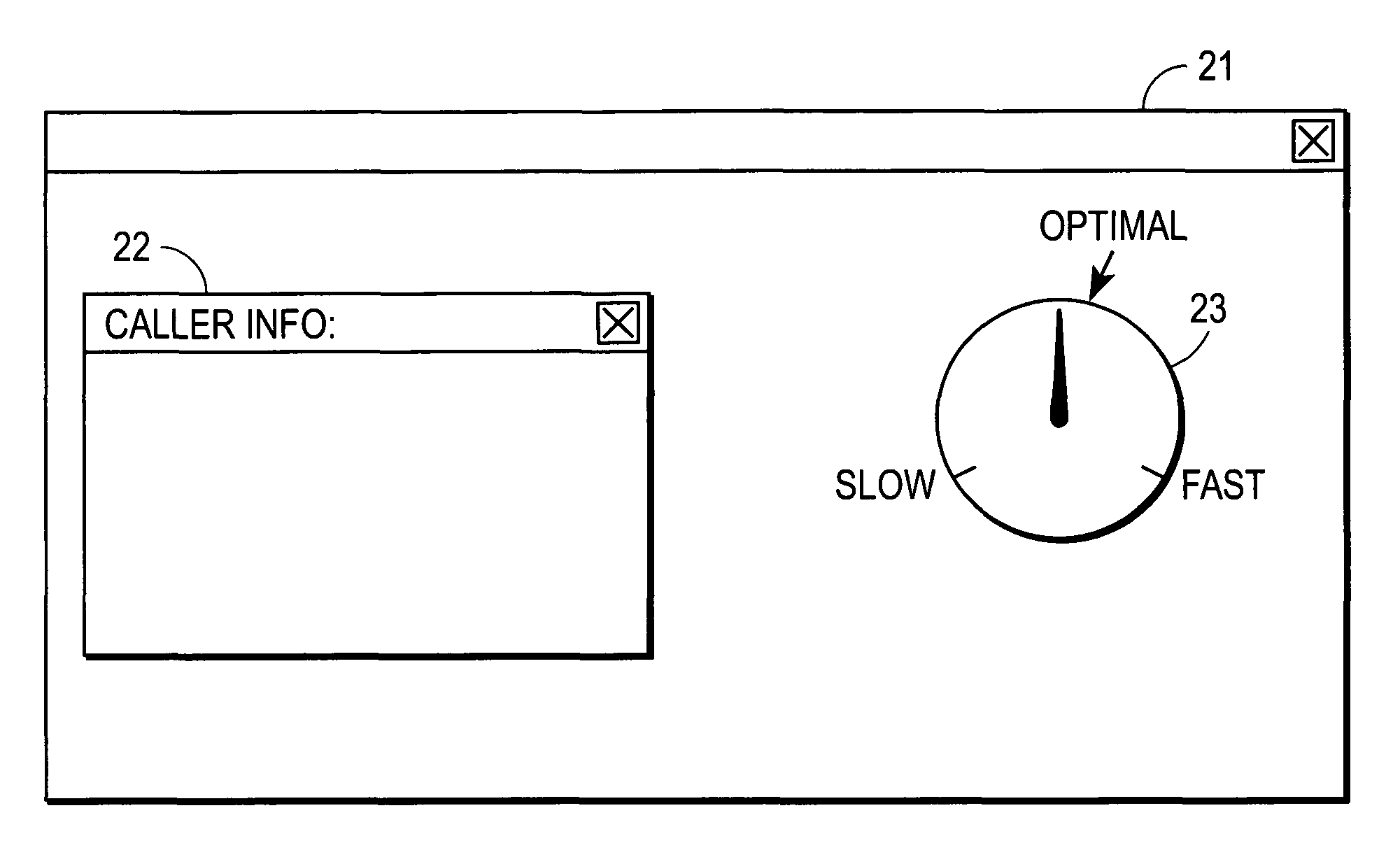

A system and method for handling a call from a caller to a call center includes an automatic call distributor (ACD) to receive the call and to route the call to an agent. A module operates to compute a rate of speech of the caller, and a display graphically displays the rate of speech of the caller to the agent during the call session. It is emphasized that this abstract is provided to comply with the rules requiring an abstract that will allow a searcher or other reader to quickly ascertain the subject matter of the technical disclosure. It is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims. 37 CFR 1.72(b).

Owner:CISCO TECH INC
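
The abstract does not say how the module computes the caller's rate of speech. A minimal sketch, assuming the call audio has already been transcribed into words with start and end timestamps: the rate is taken as words per minute over a sliding window ending at the current time. `TimedWord`, `rate_of_speech_wpm`, and the 30-second default window are hypothetical choices for illustration, not part of the patent.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TimedWord:
    """One recognized word, timestamps in seconds from call start (hypothetical format)."""
    text: str
    start: float
    end: float


def rate_of_speech_wpm(words: List[TimedWord], now: float, window_s: float = 30.0) -> float:
    """Words per minute whose end time falls inside the window (now - window_s, now]."""
    # Early in the call the window cannot reach back before t = 0.
    effective = min(window_s, now)
    if effective <= 0:
        return 0.0
    count = sum(1 for w in words if now - effective < w.end <= now)
    return count * 60.0 / effective
```

A display component could call this periodically and plot the returned value for the agent; the window length trades responsiveness against stability of the readout.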

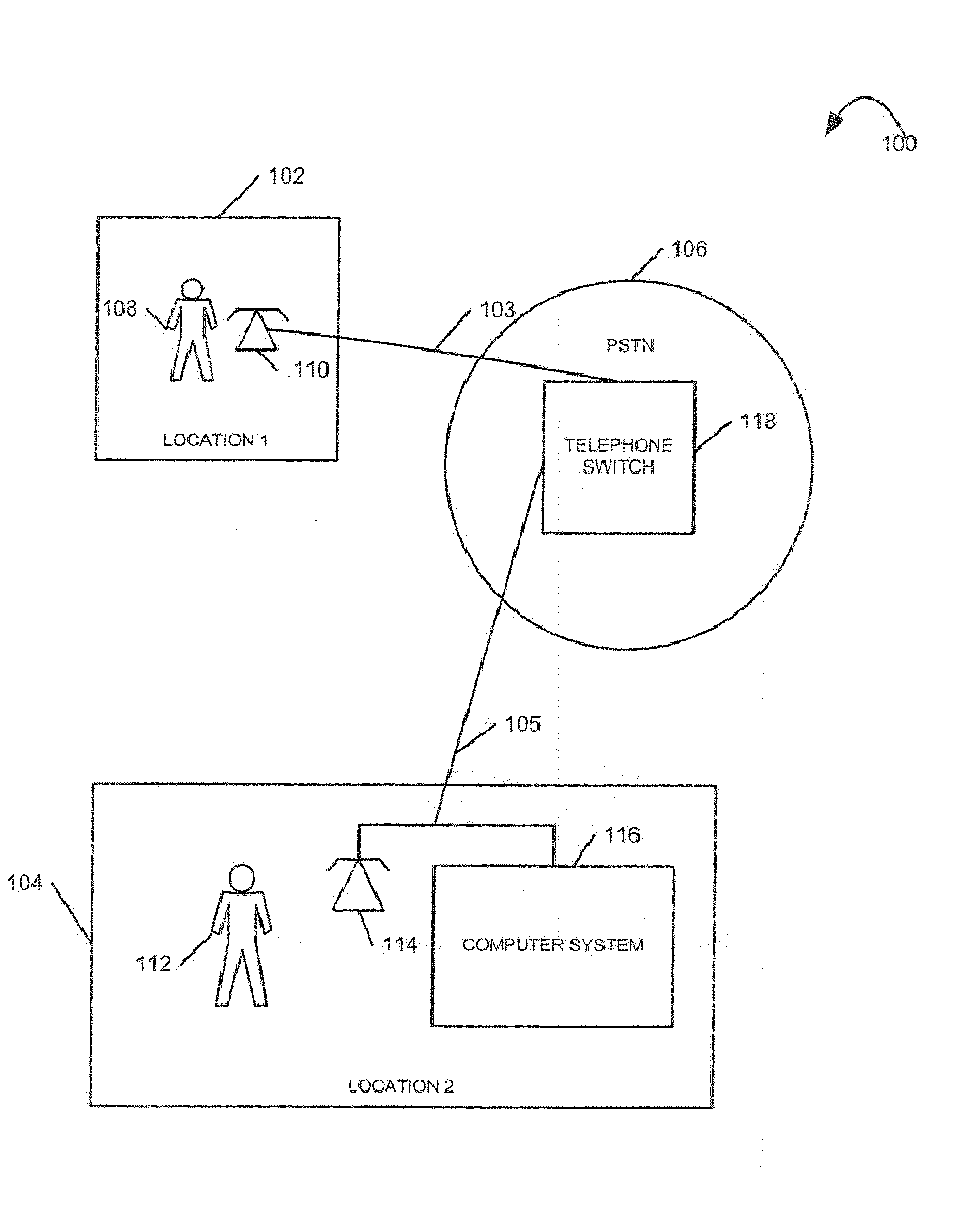

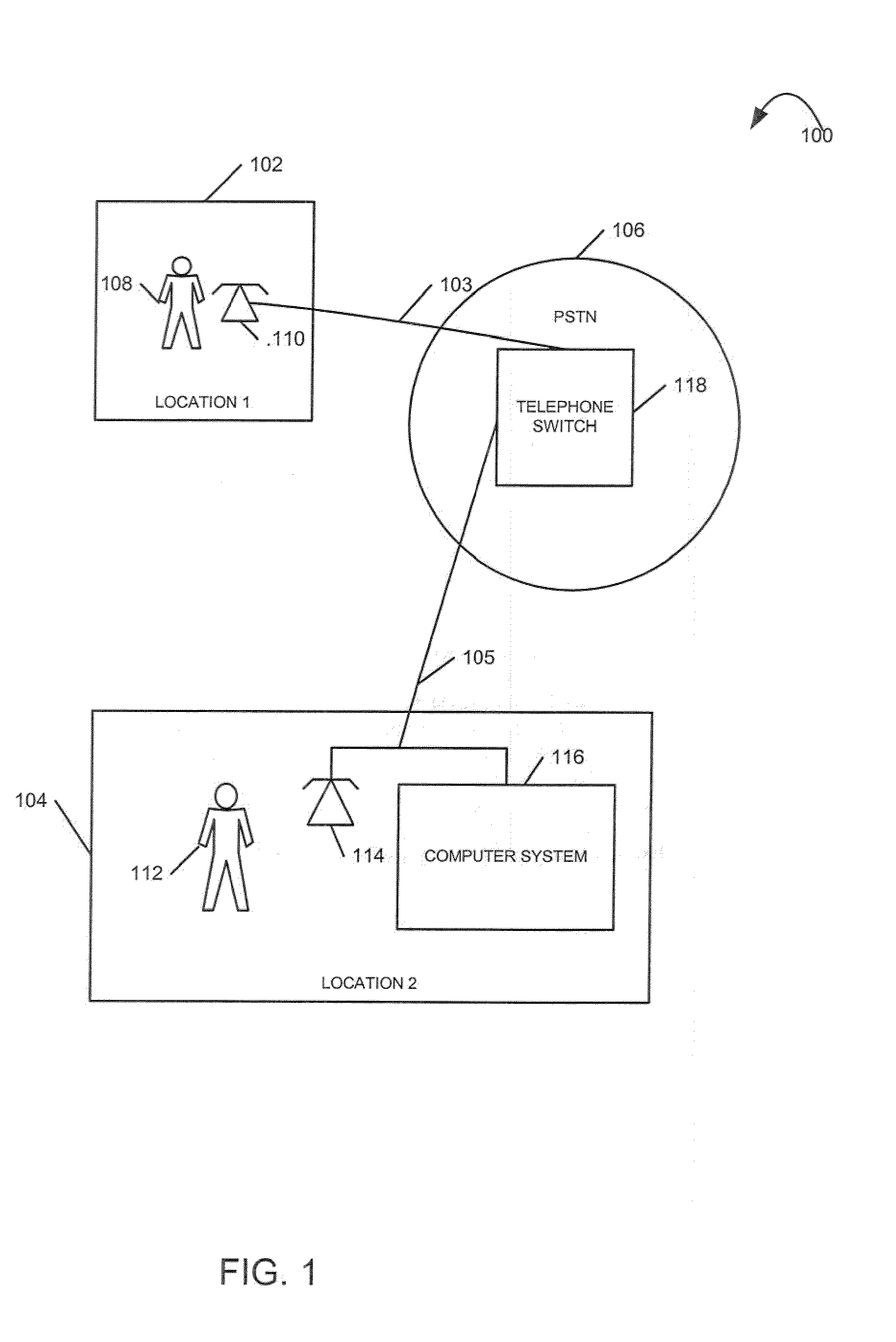

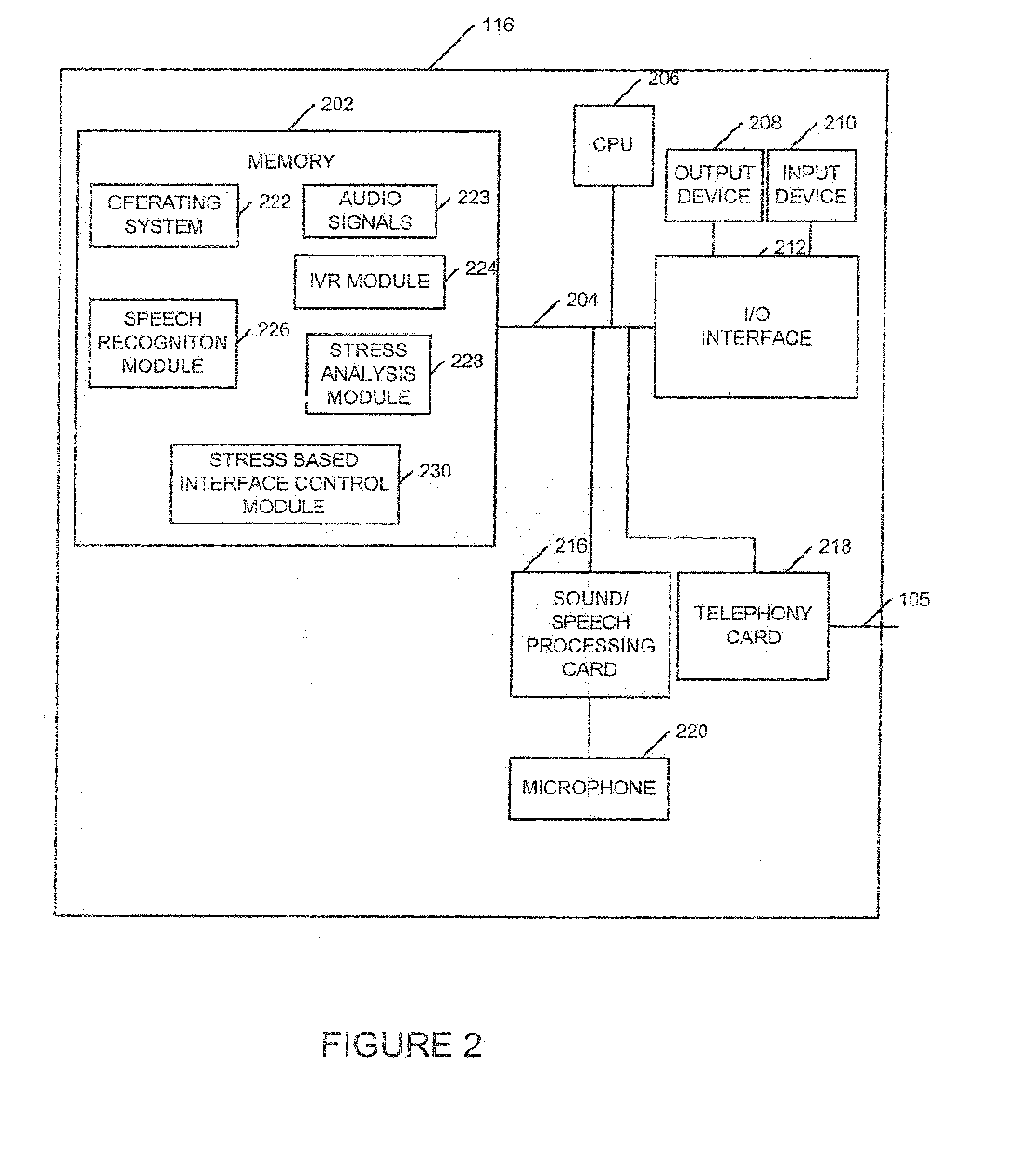

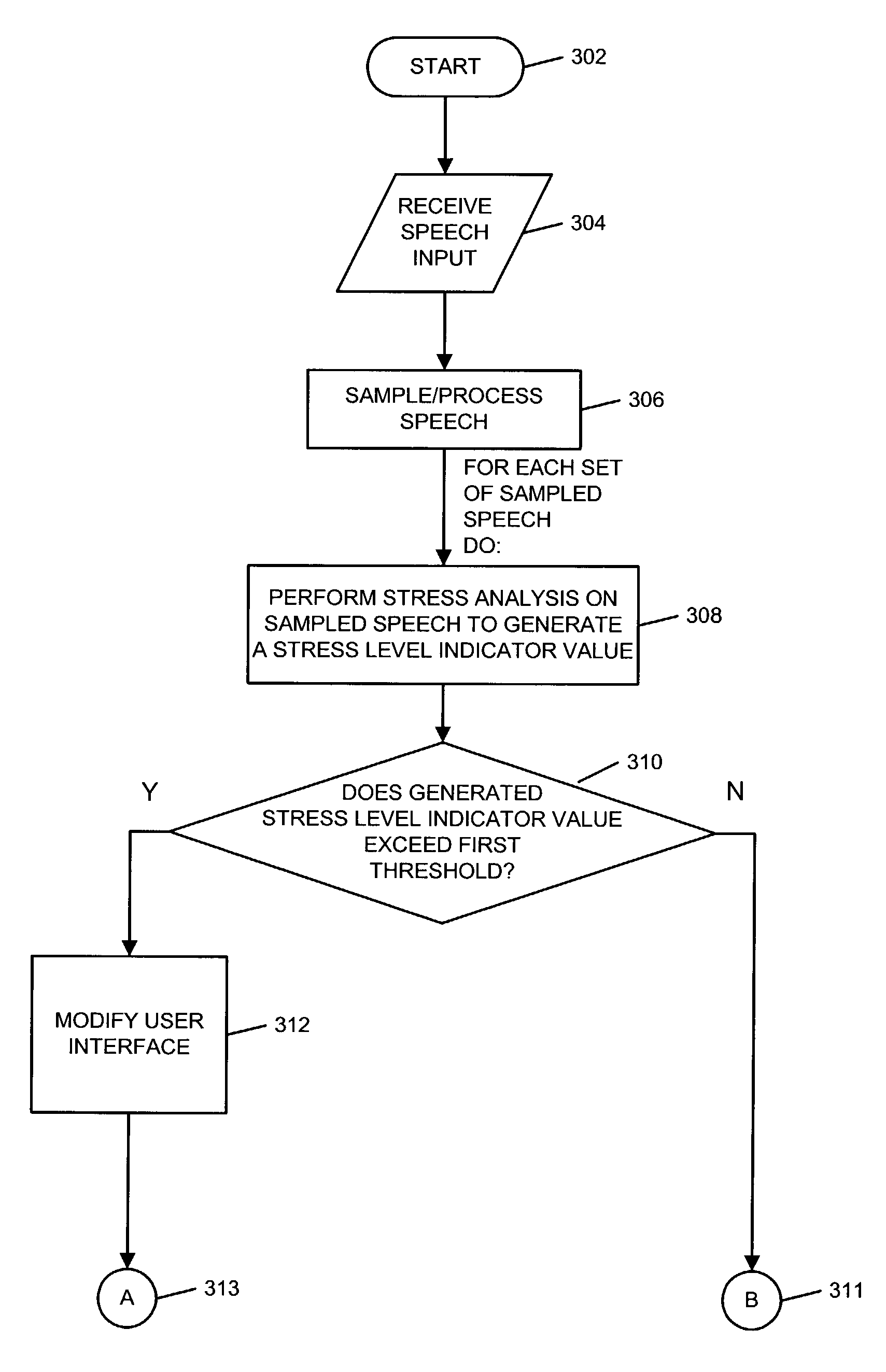

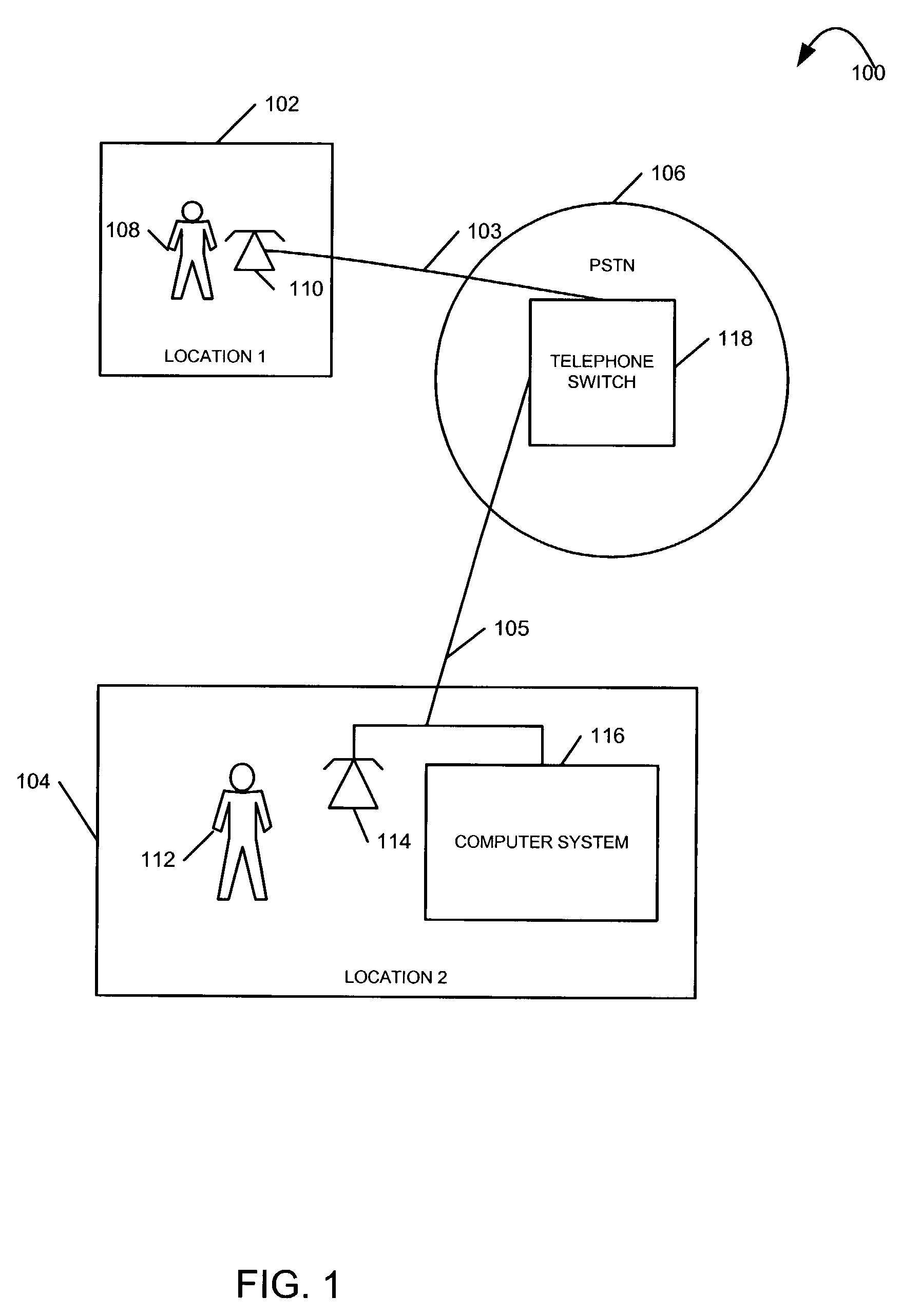

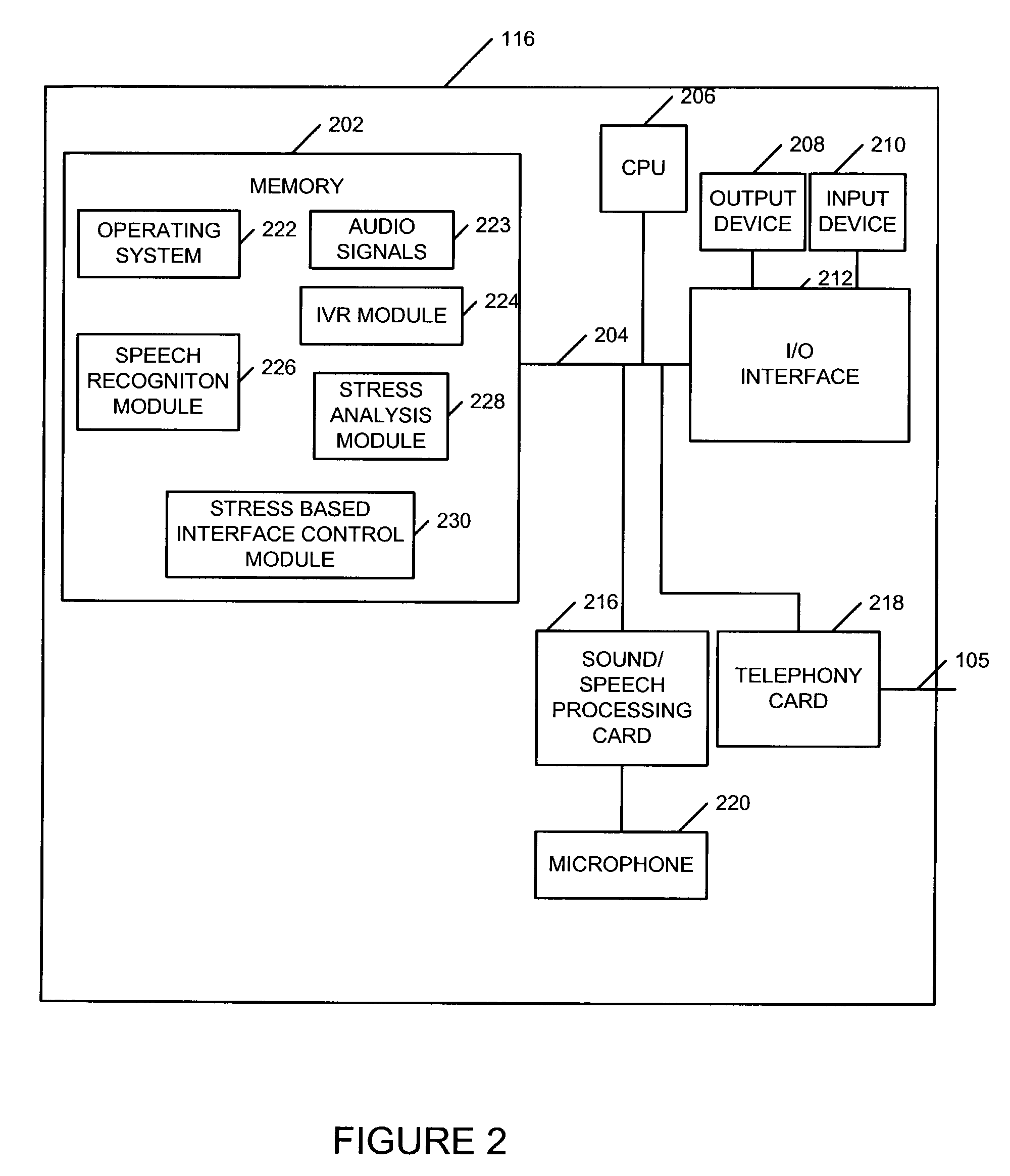

Methods and apparatus for controlling a user interface based on the emotional state of a user

Active | US20100037187A1 | Tags: Shorten the construction period, Low stress level, Speech analysis, Execution for user interfaces, Stress level, Combined use

Methods and apparatus for modifying a user interface as a function of the detected emotional state of a system user are described. In one embodiment, stress analysis is performed on received speech to generate an emotional state indicator value, e.g., a stress level indicator value. The stress level indicator value is compared to one or more thresholds. If a first threshold is exceeded, the user interface is modified, e.g., the presentation rate of speech is slowed. If a second threshold is not exceeded, another modification to the user interface is made, e.g., the speech presentation rate is accelerated. If the stress level indicator value is between the first and second thresholds, user interface operation continues unchanged. The user interface modification techniques of the present invention may be used in combination with known knowledge-based or expertise-based user interface adaptation features.

Owner:VERIZON PATENT & LICENSING INC
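
The two-threshold rule described in the abstract can be sketched as a small function. This assumes the stress level indicator is a number where larger means more stressed, that the first threshold is the higher of the two, and that the rate changes by a fixed fractional step; `adjust_presentation_rate` and `step` are illustrative names, not from the patent.

```python
def adjust_presentation_rate(
    stress: float,
    rate: float,
    first_threshold: float,
    second_threshold: float,
    step: float = 0.1,
) -> float:
    """Return the new speech presentation rate for a stress level indicator value."""
    if second_threshold > first_threshold:
        raise ValueError("second_threshold must not exceed first_threshold")
    if stress > first_threshold:
        # First threshold exceeded: slow the presentation rate of speech.
        return rate * (1.0 - step)
    if stress <= second_threshold:
        # Second threshold not exceeded: accelerate the presentation rate.
        return rate * (1.0 + step)
    # Between the two thresholds: leave the interface unchanged.
    return rate
```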

Methods and apparatus for controlling a user interface based on the emotional state of a user

Active | US7665024B1 | Tags: Presentation rate is slowed, Speech presentation rate is accelerated, Speech analysis, Execution for user interfaces, Stress level, Combined use

Methods and apparatus for modifying a user interface as a function of the detected emotional state of a system user are described. In one embodiment, stress analysis is performed on received speech to generate an emotional state indicator value, e.g., a stress level indicator value. The stress level indicator value is compared to one or more thresholds. If a first threshold is exceeded, the user interface is modified, e.g., the presentation rate of speech is slowed. If a second threshold is not exceeded, another modification to the user interface is made, e.g., the speech presentation rate is accelerated. If the stress level indicator value is between the first and second thresholds, user interface operation continues unchanged. The user interface modification techniques of the present invention may be used in combination with known knowledge-based or expertise-based user interface adaptation features.

Owner:VERIZON PATENT & LICENSING INC

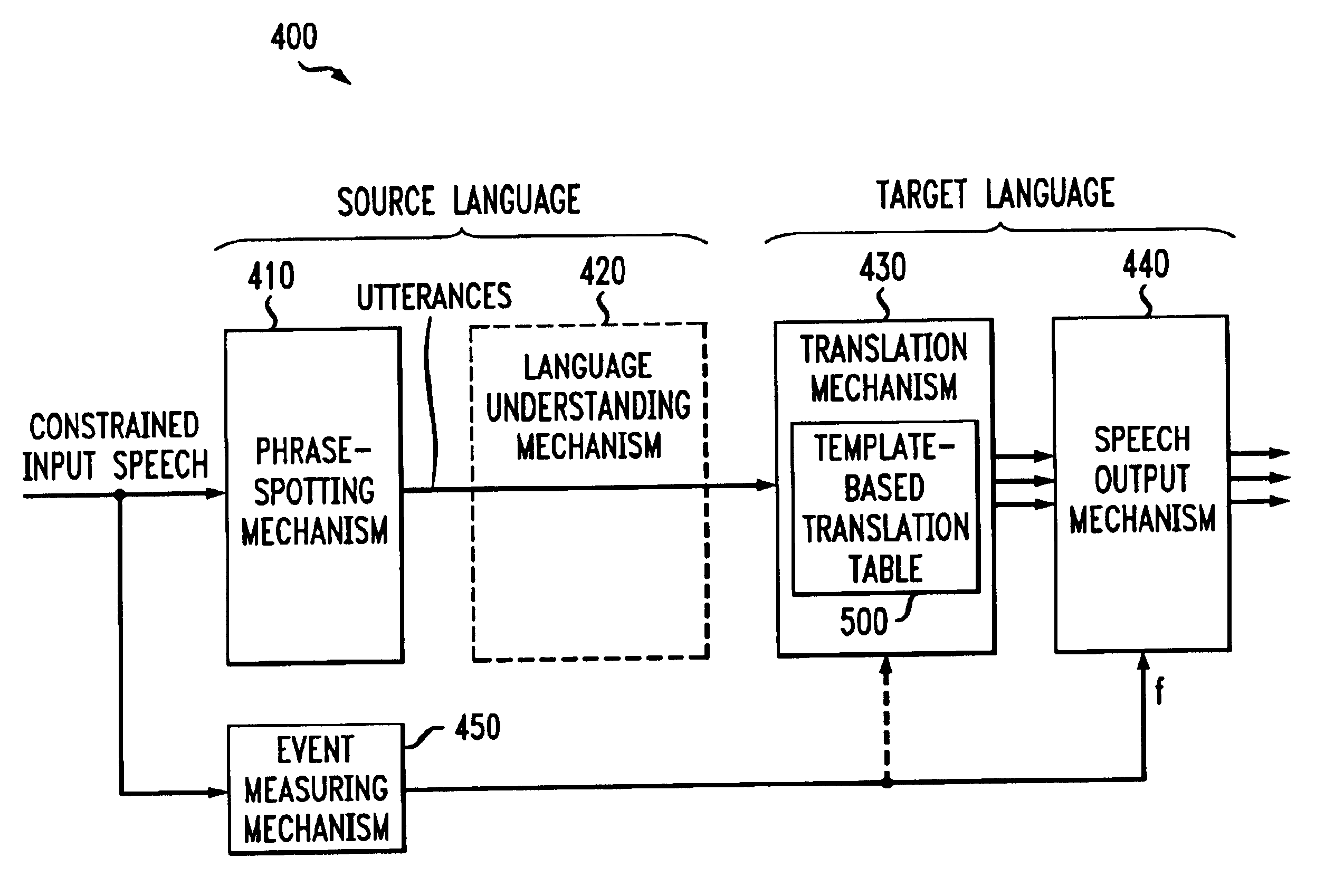

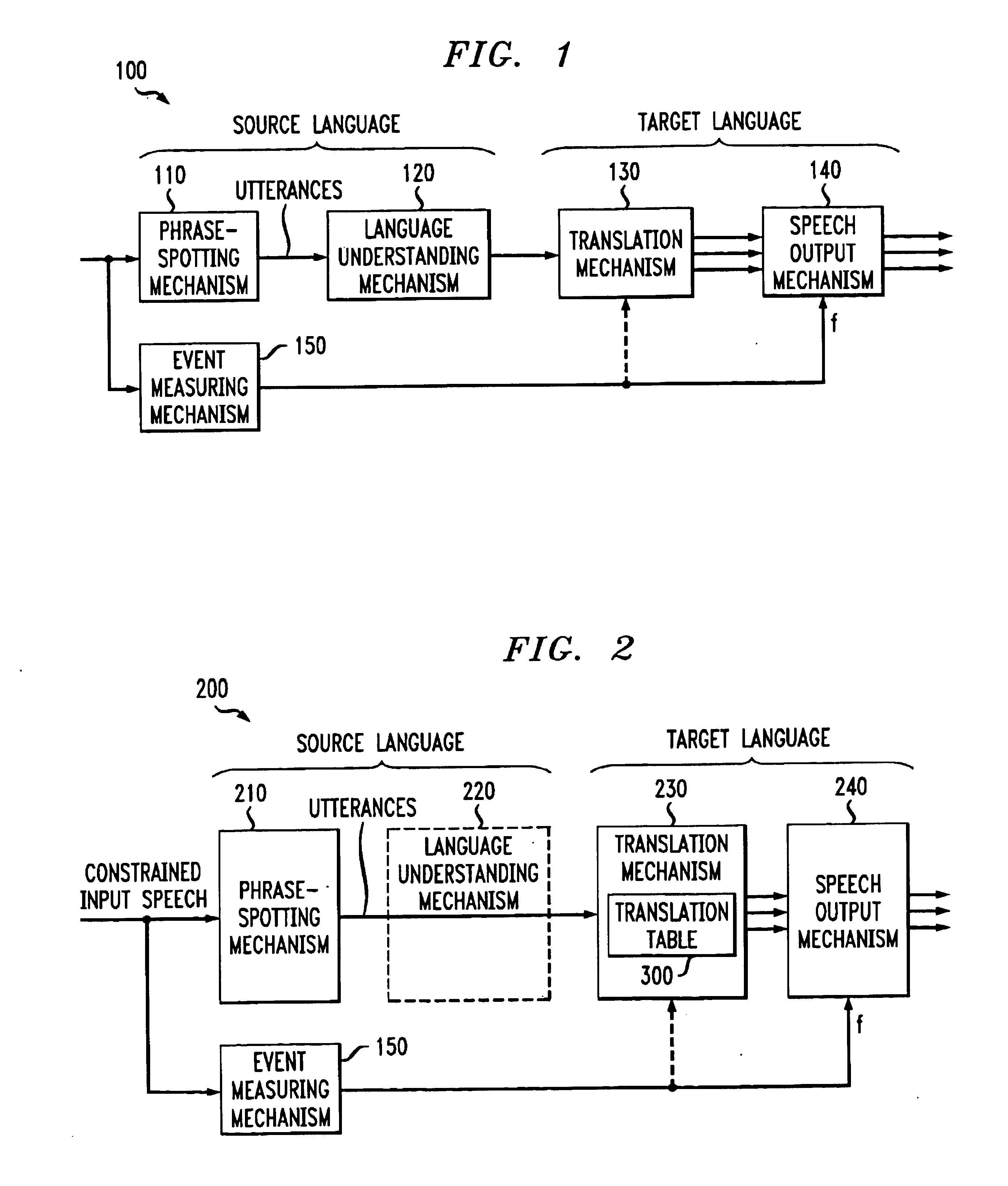

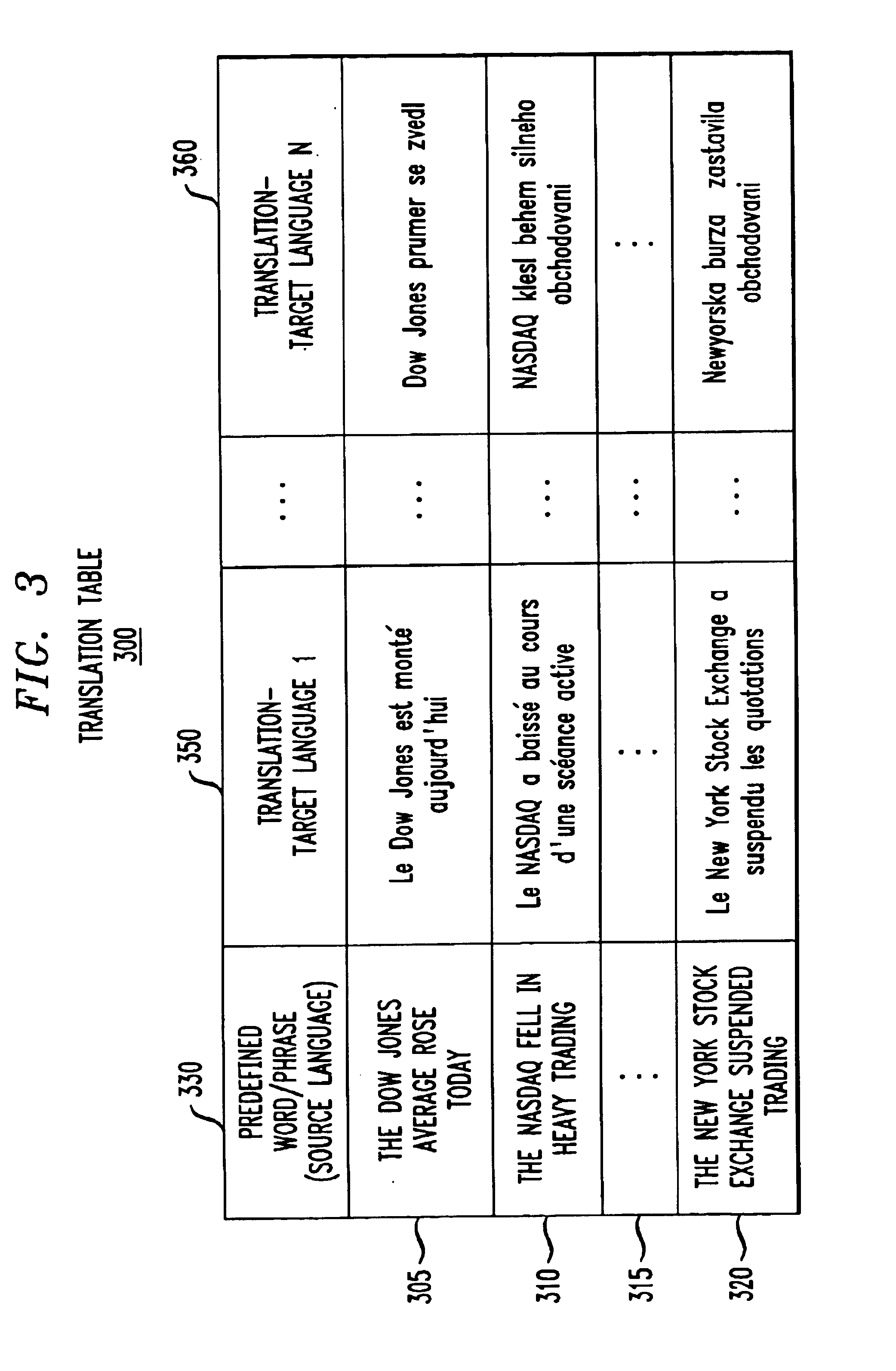

Method and apparatus for translating natural-language speech using multiple output phrases

Inactive | US6859778B1 | Tags: Accurate synchronization, Quality improvement, Natural language translation, Adhesive processes with adhesive heating, Language understanding, Sadness

A multi-lingual translation system that provides multiple output sentences for a given word or phrase. Each output sentence for a given word or phrase reflects, for example, a different emotional emphasis, dialect, accent, loudness, or rate of speech. A given output sentence can be selected automatically or manually, as desired, to create a desired effect. For example, the same output sentence for a given word or phrase can be recorded three times to reflect excitement, sadness, or fear. The multi-lingual translation system includes a phrase-spotting mechanism, a translation mechanism, a speech output mechanism and, optionally, a language understanding mechanism, an event measuring mechanism, or both. The phrase-spotting mechanism identifies a spoken phrase from a restricted domain of phrases. The language understanding mechanism, if present, maps the identified phrase onto a small set of formal phrases. The translation mechanism maps the formal phrase onto a well-formed phrase in one or more target languages. The speech output mechanism produces high-quality output speech. The speech output may be time-synchronized to the spoken phrase using the output of the event measuring mechanism.

Owner:IBM CORP +1

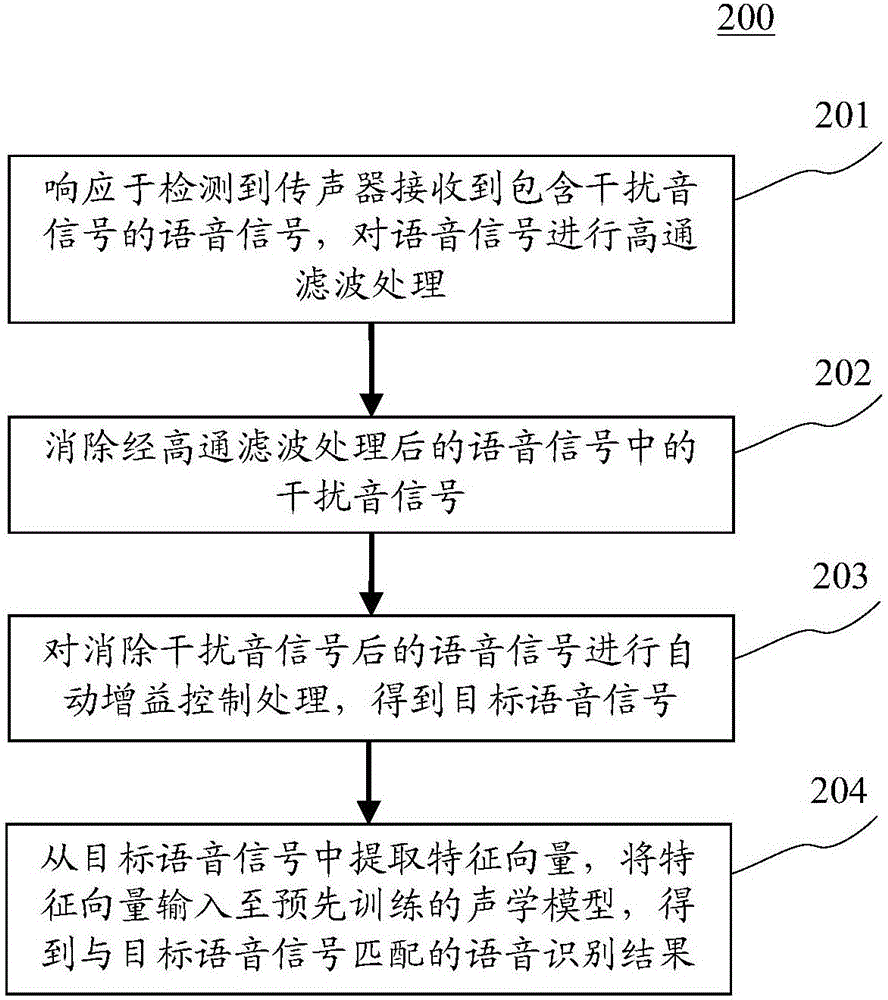

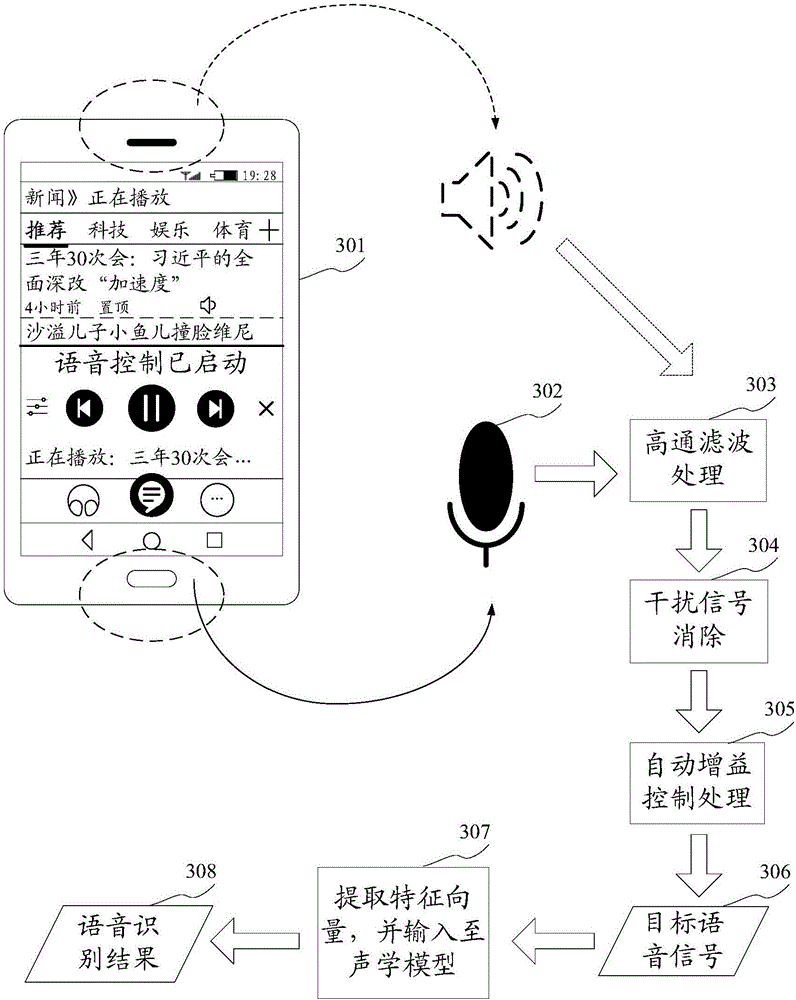

Speech recognition method and device

The invention discloses a speech recognition method and device. According to one embodiment, the method comprises: in response to detecting that a microphone has received speech signals containing crosstalk, applying high-pass filtering to the speech signals; eliminating the crosstalk from the high-pass-filtered speech signals; applying automatic gain control to the crosstalk-free speech signals to obtain target speech signals; and extracting feature vectors from the target speech signals and inputting the feature vectors into a pre-trained acoustic model to obtain a speech recognition result matching the target speech signals, wherein the acoustic model represents the correspondence between feature vectors and speech recognition results. In this way, the success rate of speech recognition is improved.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

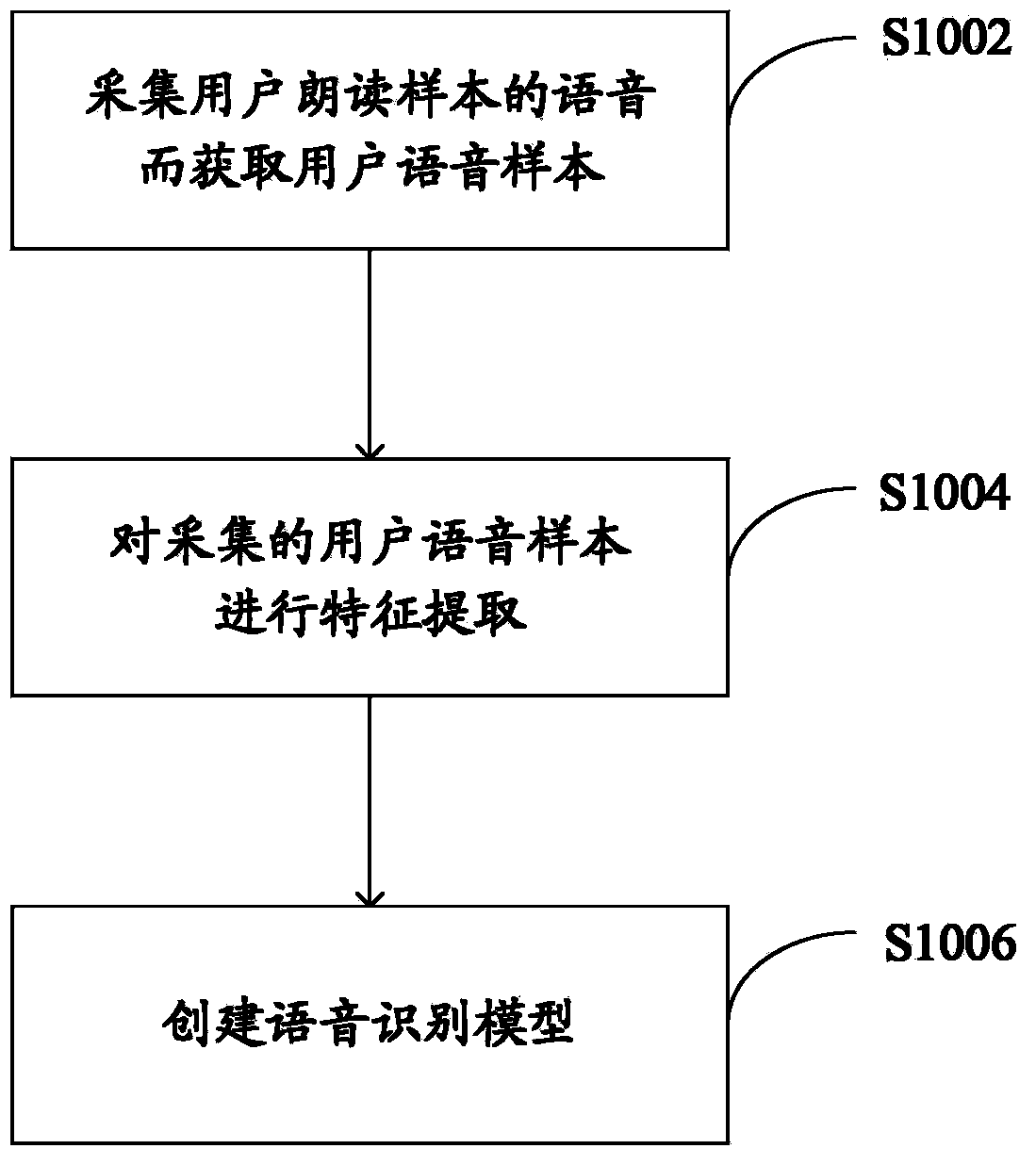

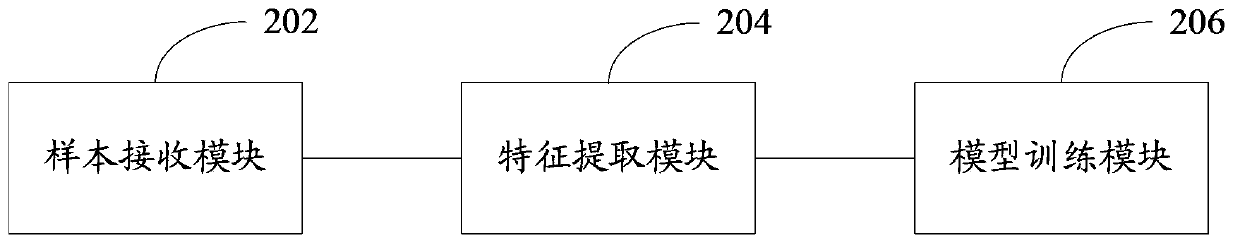

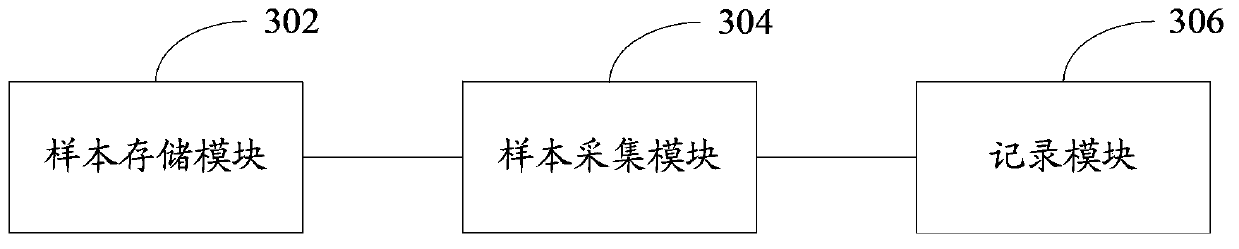

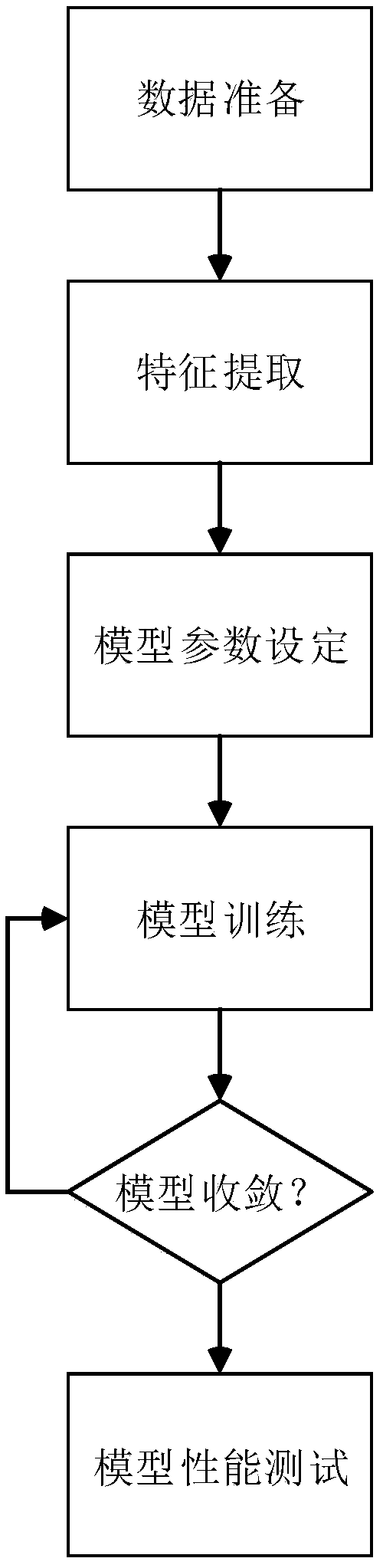

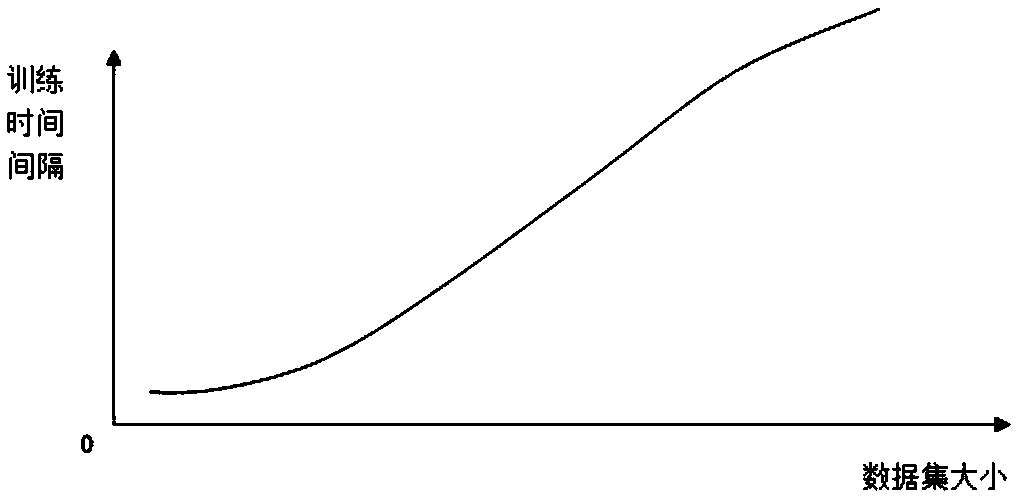

Speech recognition model training method, speech recognition model training device and terminal

Active | CN103680495A | Tags: Improve experience, Improve the success rate of speech recognition, Speech recognition, Feature extraction, Speech identification

The application discloses a speech recognition model training method, a speech recognition model training device, and a terminal. The training method can comprise: acquiring speech of the user reading a sample text to obtain a user speech sample; extracting features from the acquired user speech sample; and creating a speech recognition model from the extracted features. With the method and device of the application, the speech recognition model base can be updated according to the user's features, thereby improving the success rate of speech recognition and the user experience.

Owner:CHINA MOBILE COMM GRP CO LTD

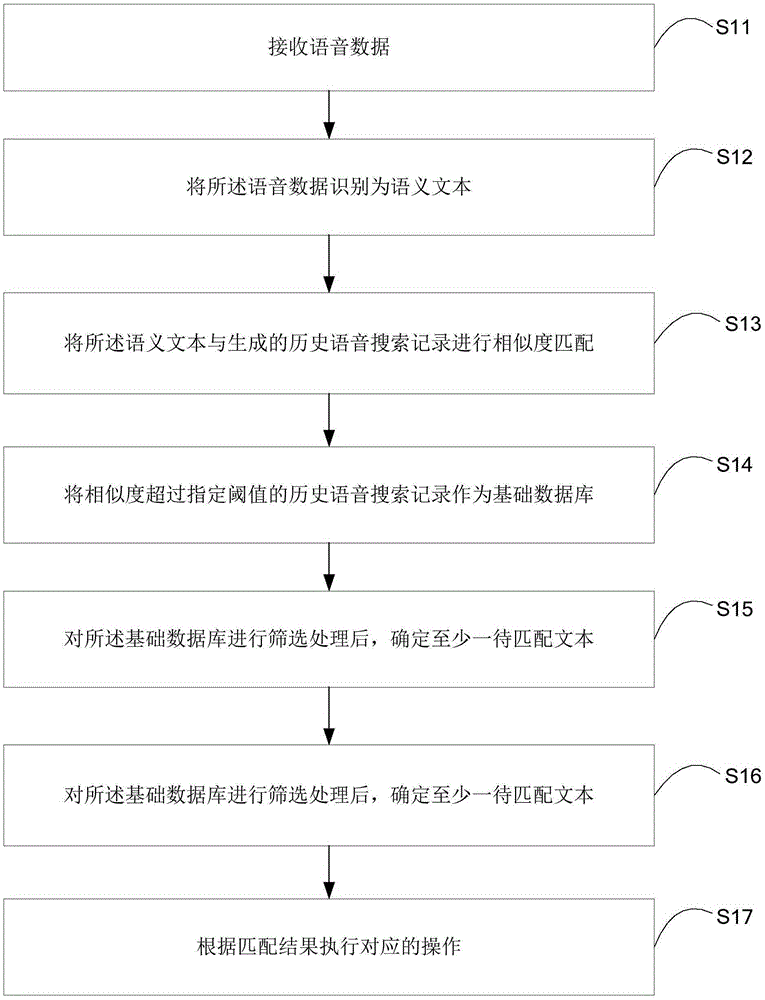

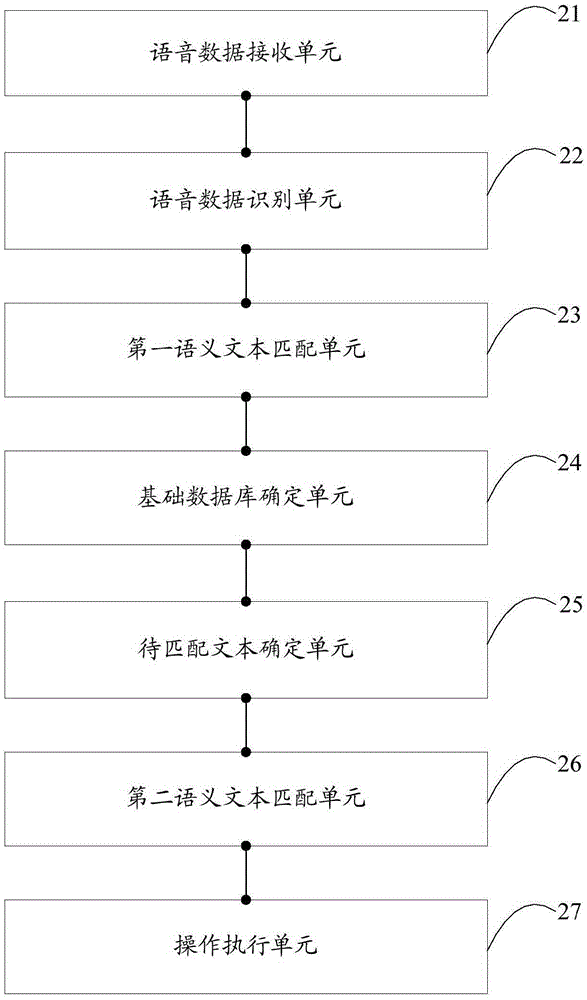

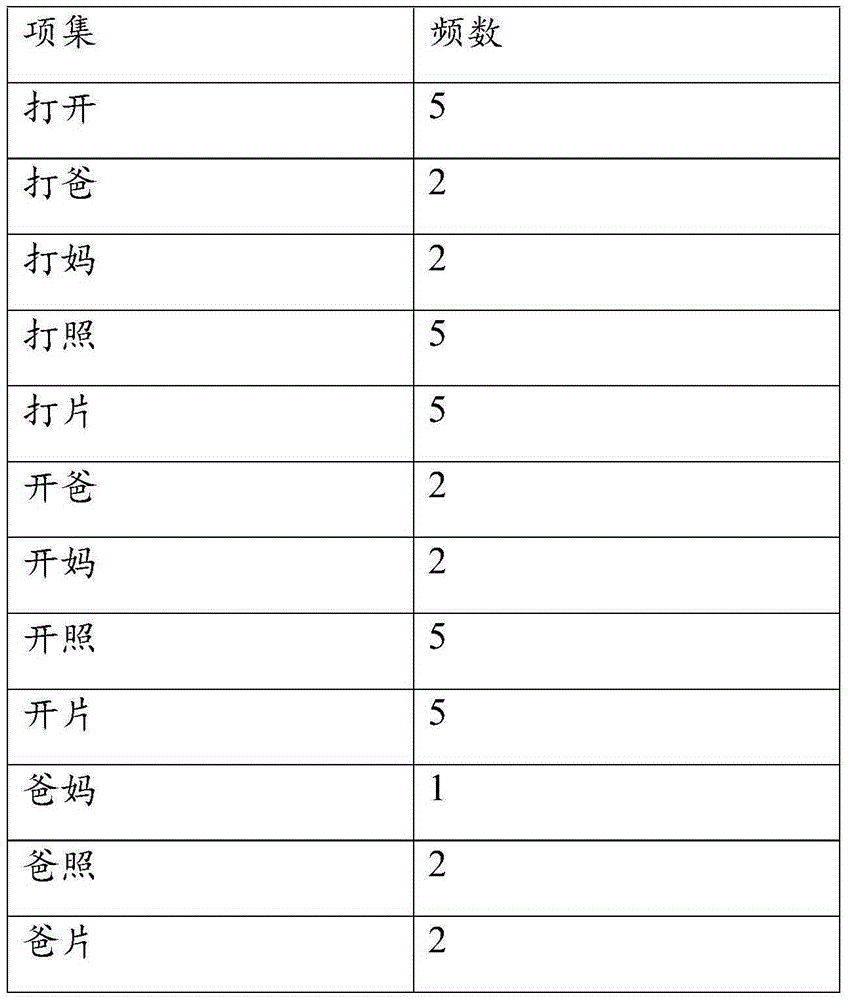

Speech interaction method and device

Active | CN105389400A | Tags: Improve accuracy, Increase success rate, Speech recognition, Special data processing applications, Voice search, Speech sound

The invention, applicable in the field of speech interaction, provides a speech interaction method and device. The method comprises the following steps: receiving speech data; recognizing the speech data to generate a semantic text; performing similarity matching between the semantic text and previously generated historical speech search records; taking the historical records whose similarity exceeds a specified threshold as a basic database; screening the basic database to determine at least one text to be matched; matching the semantic text against the determined text(s) to be matched; and executing the corresponding operation according to the matching result. The embodiments of the invention can increase the accuracy and success rate of speech interaction.

Owner:TCL CORPORATION
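
The matching flow in the abstract (similarity against history records, a threshold that forms the basic database, screening, then a final match) can be sketched with Python's standard `difflib.SequenceMatcher` as the similarity measure. The similarity measure, the 0.6 threshold, and screening by keeping the top-k records are all assumptions made for illustration; the patent abstract does not specify them.

```python
from difflib import SequenceMatcher
from typing import List, Optional


def similarity(a: str, b: str) -> float:
    """Character-level similarity ratio in [0, 1]."""
    return SequenceMatcher(None, a, b).ratio()


def match_history(semantic_text: str, history: List[str],
                  threshold: float = 0.6, top_k: int = 3) -> Optional[str]:
    # Basic database: history search records whose similarity exceeds the threshold.
    scored = [(similarity(semantic_text, h), h) for h in history]
    basic = [(s, h) for s, h in scored if s > threshold]
    if not basic:
        return None
    # Screening (assumed): keep the top_k most similar records as texts to be matched.
    basic.sort(key=lambda pair: pair[0], reverse=True)
    candidates = [h for _, h in basic[:top_k]]
    # Final match: the most similar remaining candidate.
    return candidates[0]
```

The returned record would then select the operation to execute; returning `None` leaves room for a fallback such as a fresh search.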

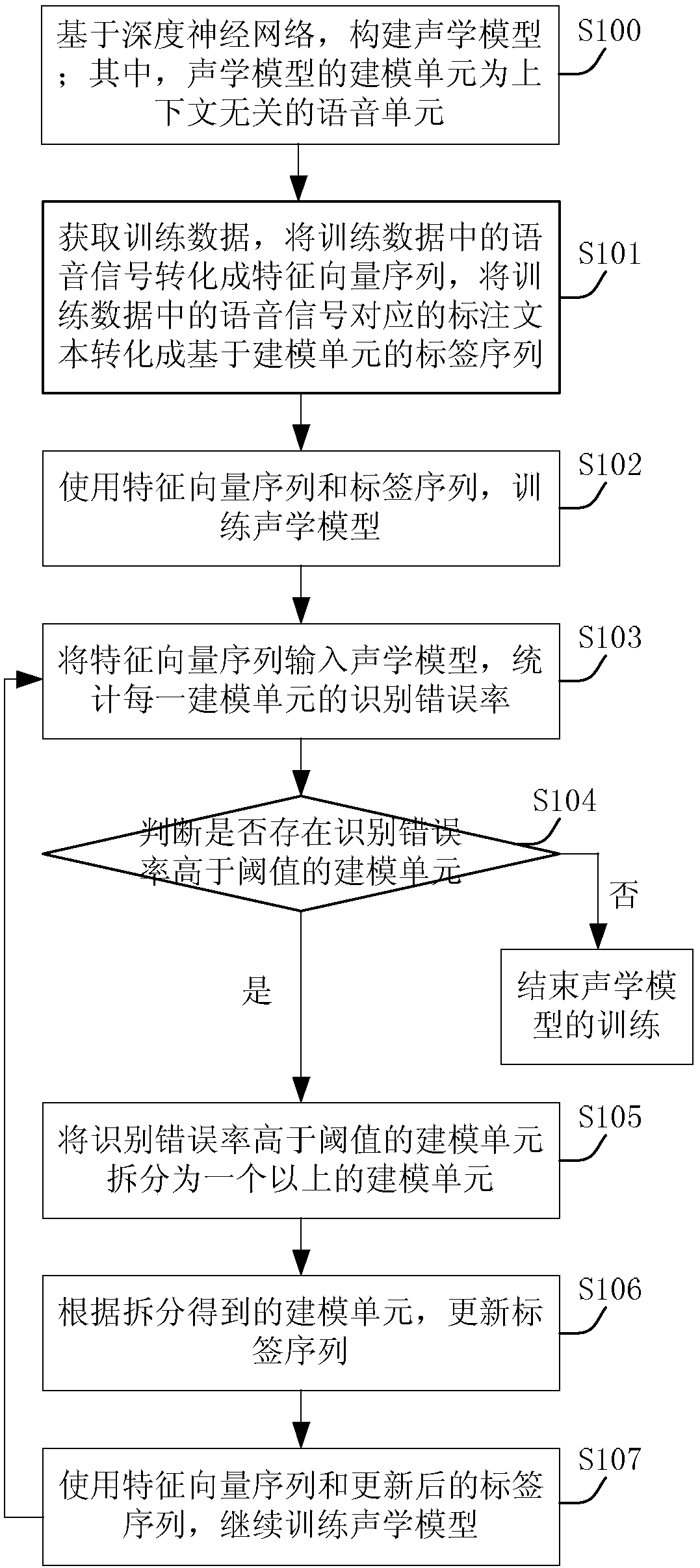

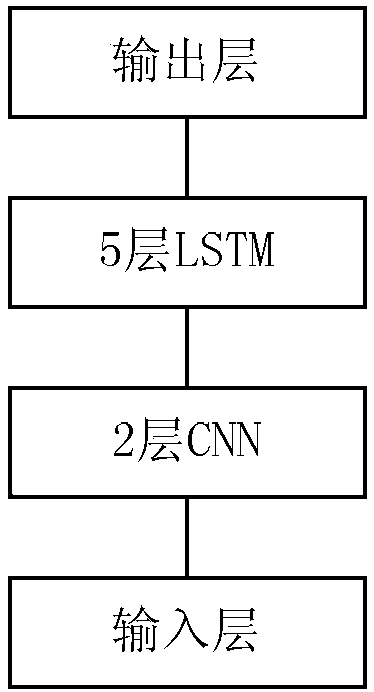

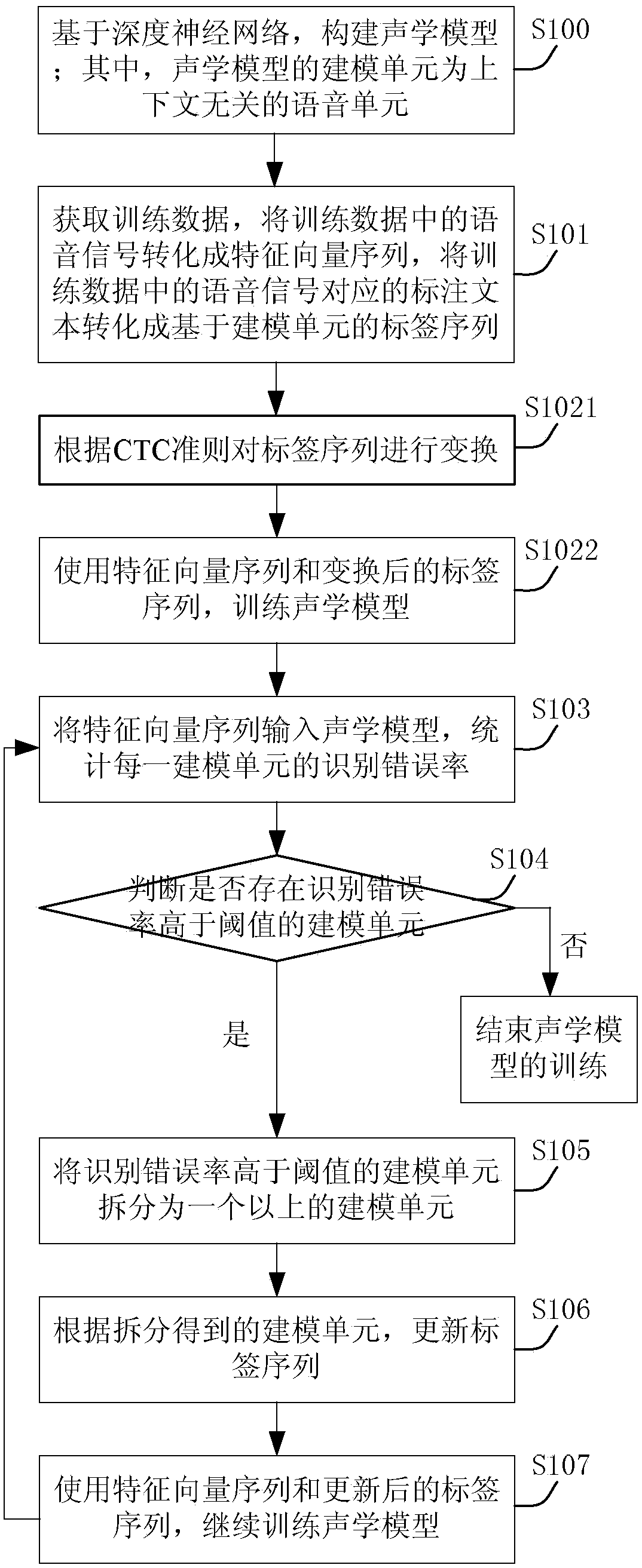

Speech recognition acoustic model building method and device, and electronic equipment

Active | CN108711421A | Tags: Improve generalization ability, Improve accuracy, Speech recognition, Feature vector, Speech identification

Embodiments of the invention provide a method and device for building a speech recognition acoustic model, and electronic equipment. The method includes: building an acoustic model based on a deep neural network, the modeling units of which are context-free speech units; obtaining training data, converting the speech signals in the training data into feature vector sequences, and converting the annotated texts corresponding to the speech signals into label sequences based on the modeling units; training the acoustic model with the feature vector sequences and the label sequences; inputting the feature vector sequences into the acoustic model and counting the recognition error rate of each modeling unit; splitting each modeling unit whose recognition error rate is higher than a threshold into more than one modeling unit; updating the label sequences according to the modeling units obtained by splitting; and retraining the acoustic model on the feature vector sequences and the updated label sequences. According to the embodiments, the accuracy of speech recognition can be effectively improved.

Owner:BEIJING ORION STAR TECH CO LTD

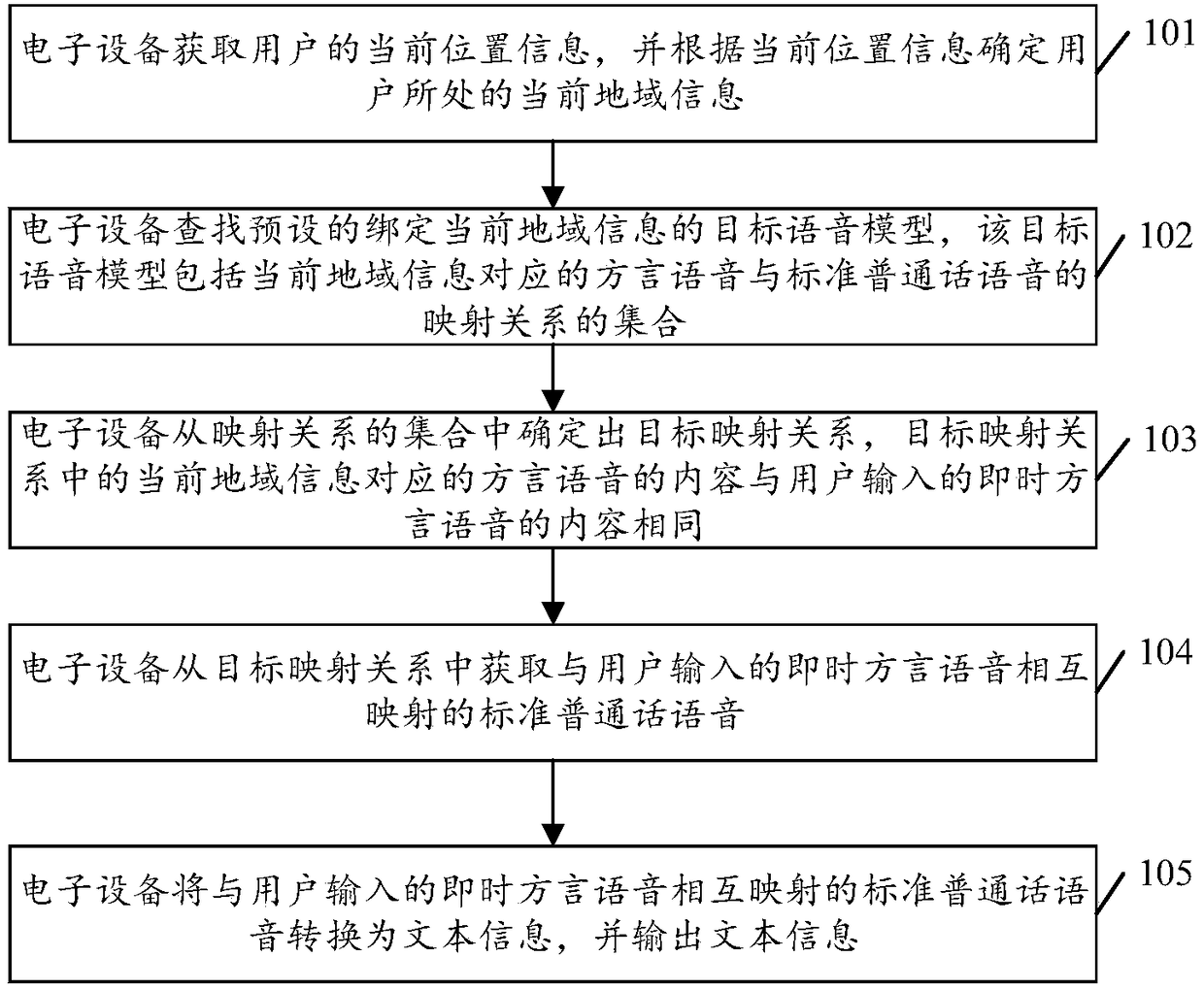

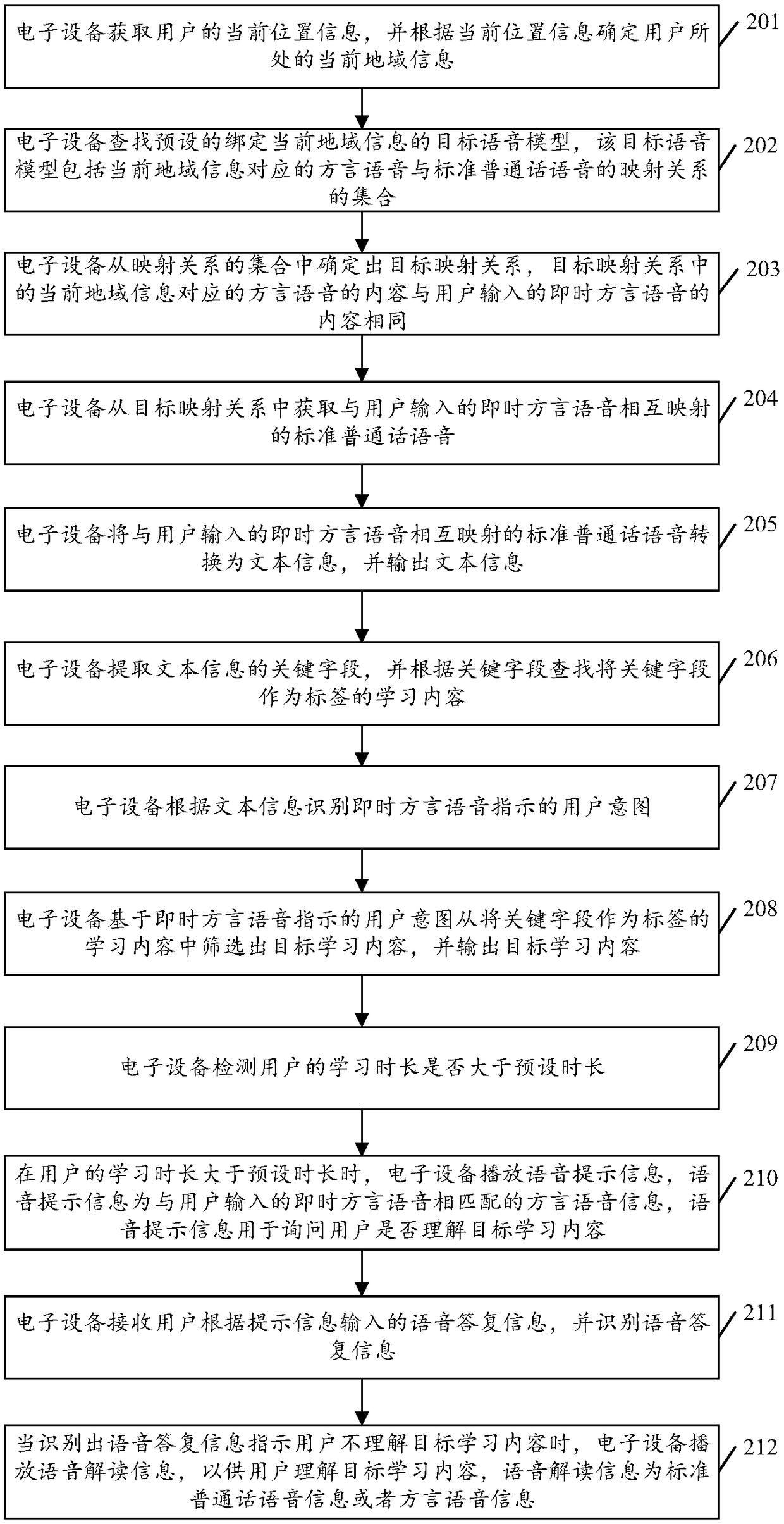

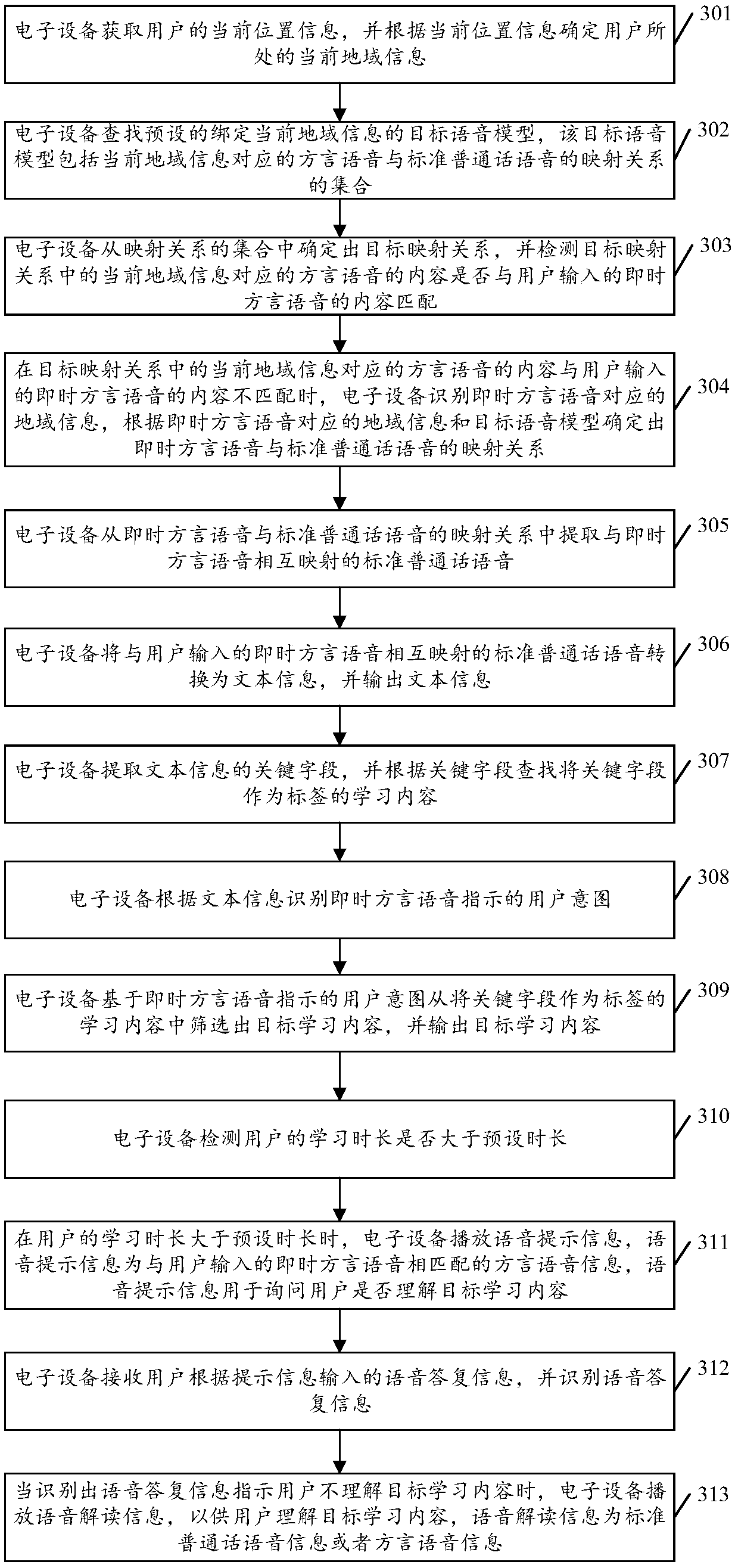

Dialect speech recognition method and electronic device

The embodiments of the invention disclose a dialect speech recognition method and an electronic device. The method includes the following steps: acquiring the user's current location information and determining the user's current region from it; looking up a preset target speech model bound to the current region, wherein the target speech model includes a set of mapping relationships between dialect speech of that region and standard Mandarin speech; determining, from the set of mapping relationships, a target mapping relationship in which the content of the dialect speech is identical to the content of the dialect speech just input by the user; obtaining, from the target mapping relationship, the standard Mandarin speech mapped to the user's input dialect speech; and converting that standard Mandarin speech into text information and outputting the text. With the method and electronic device provided by the embodiments of the present invention, dialect speech can be correctly recognized, and the accuracy of speech recognition is improved.

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD

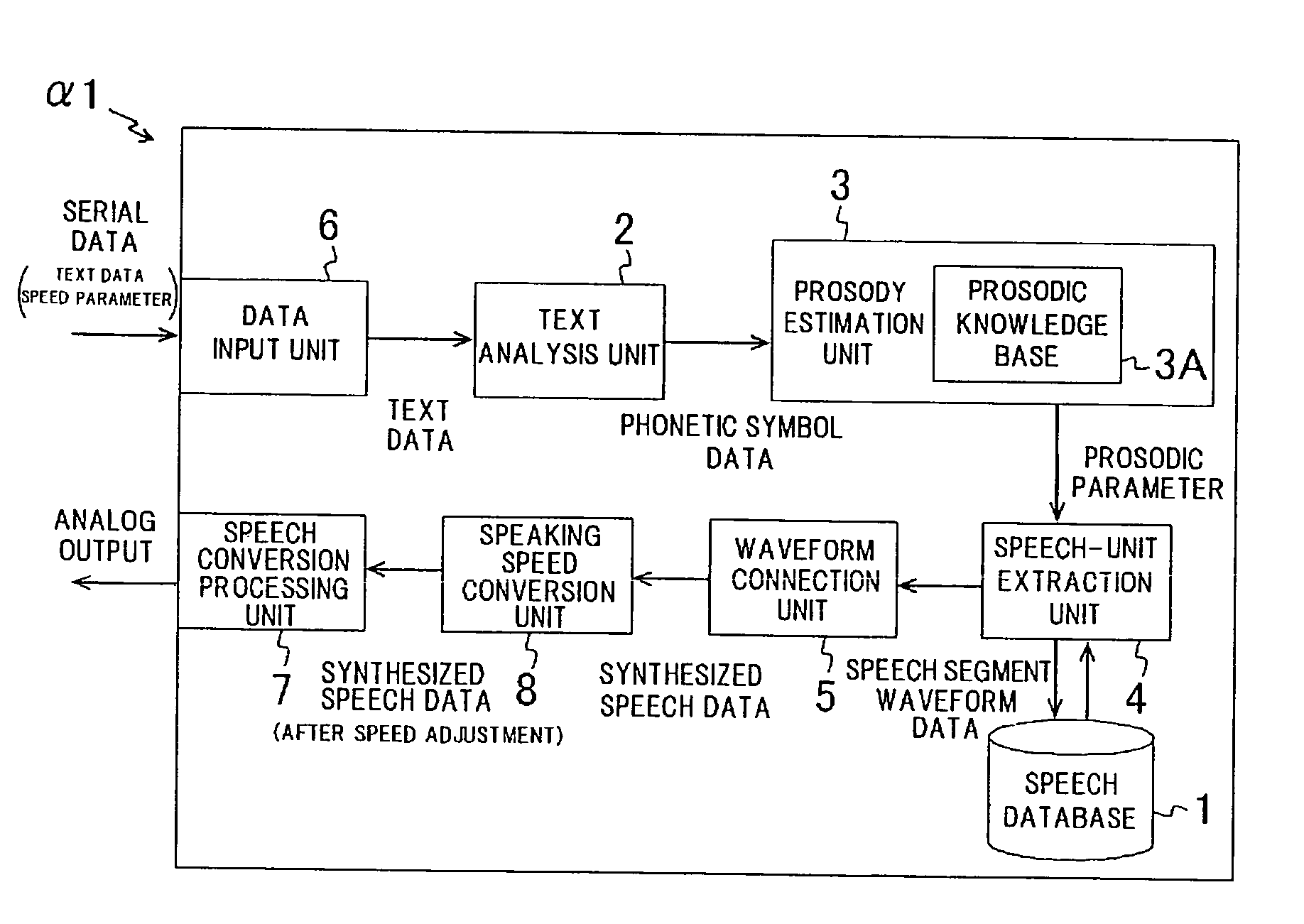

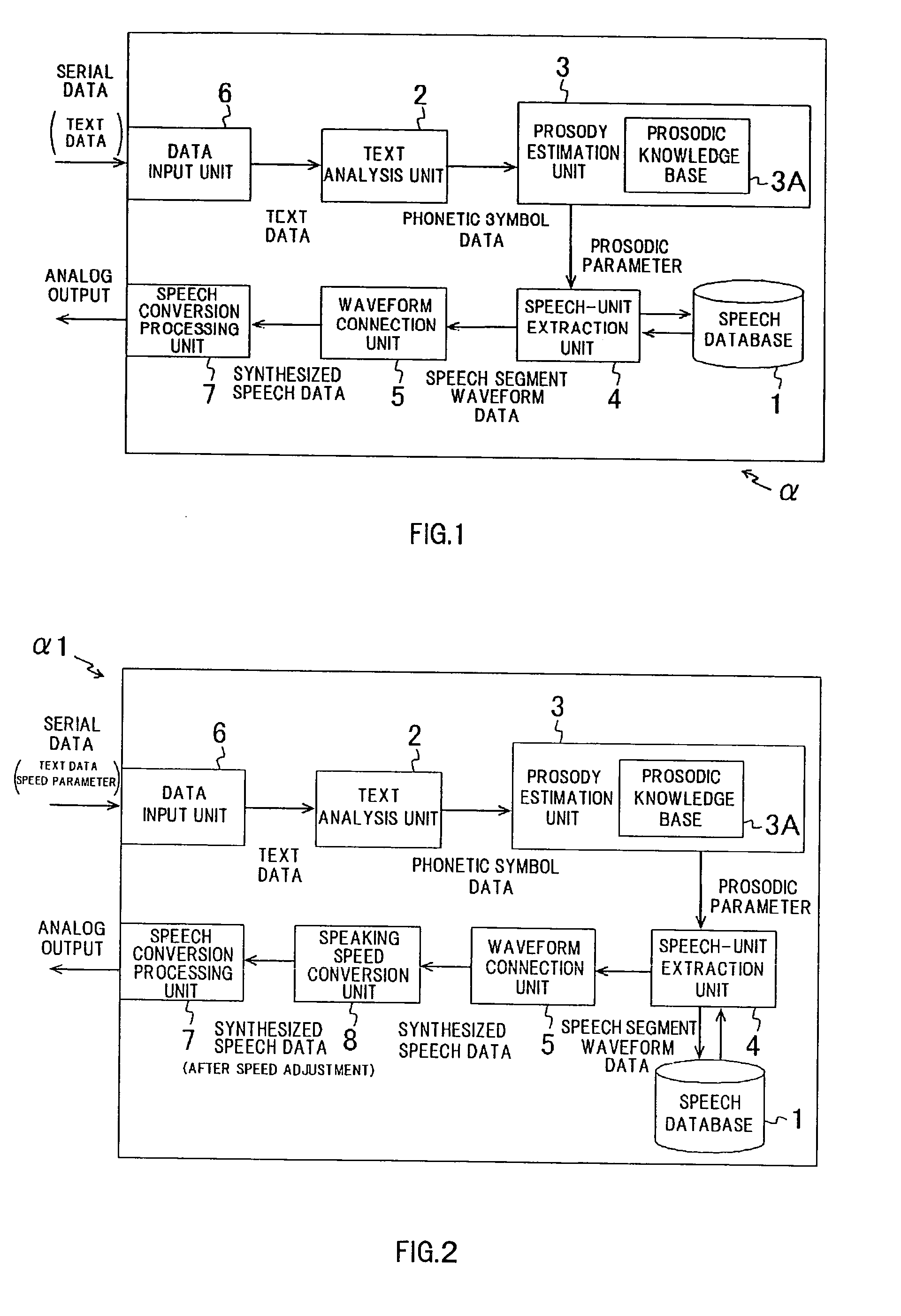

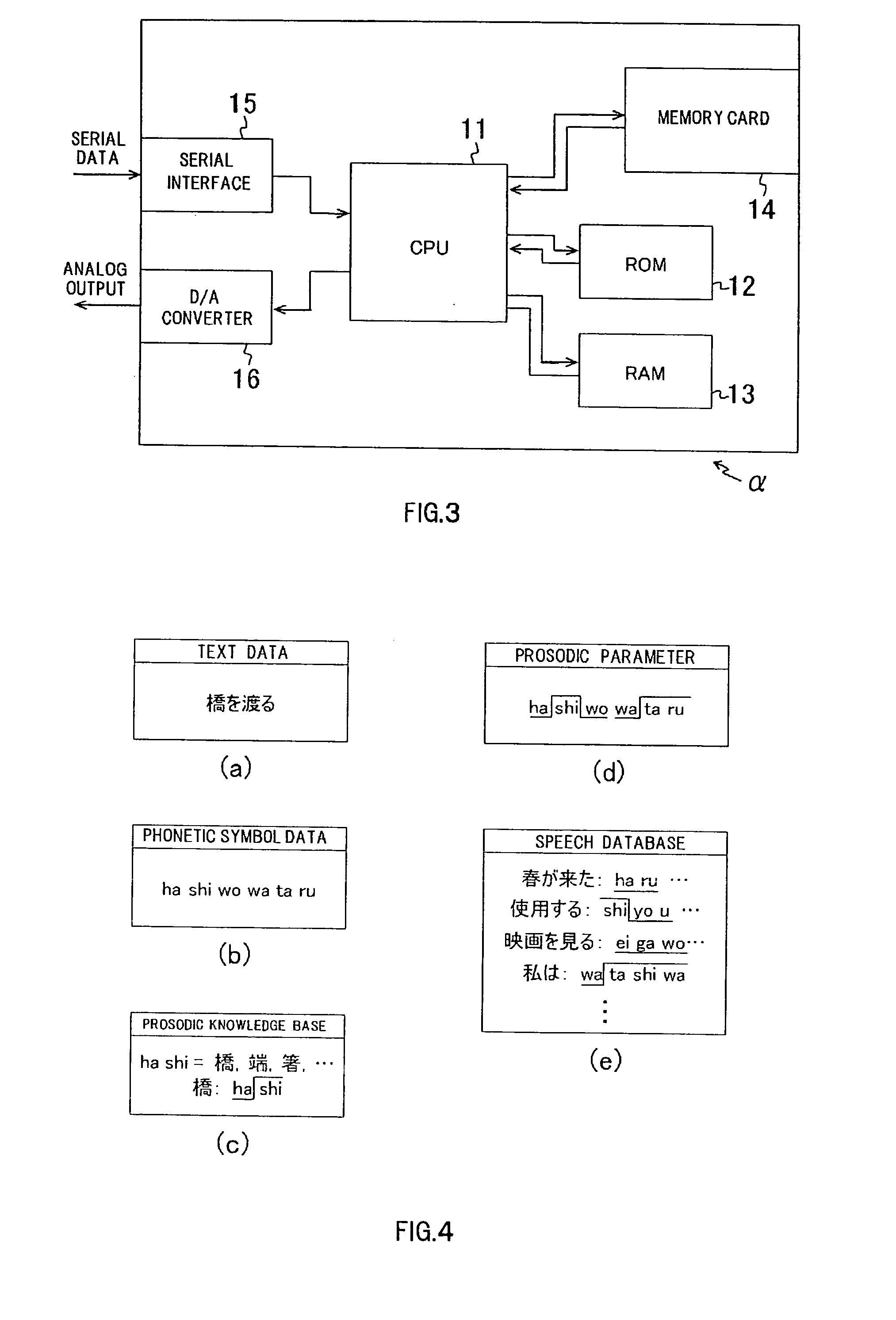

Speech Synthesizing Apparatus

A corpus-based speech synthesizing apparatus is provided, comprising: a text analysis unit for analyzing a given sentence in text data and generating phonetic symbol data corresponding to the sentence; a prosody estimation unit for generating, according to a preset prosodic knowledge base for accents and intonations, a prosodic parameter representing the accent and intonation corresponding to each item of phonetic symbol data; a speech-unit extraction unit for extracting all the speech segment waveform data of a predetermined speech unit part from each item of speech data whose predetermined speech unit part is closest to the prosodic parameter, based on a speech database that stores only plural kinds of selectively prerecorded speech data, so that the database contains the predetermined speech units suitable for a specific application of the apparatus; and a waveform connection unit for generating synthesized speech data by sequentially connecting the speech segment waveform data groups so that their speech waveforms are continuous. The respective functional units, a data input unit, a speech conversion processing unit, and a speech speed conversion unit can be added or removed as desired, depending on the specific application and the scale of the apparatus.

Owner:EE I KK

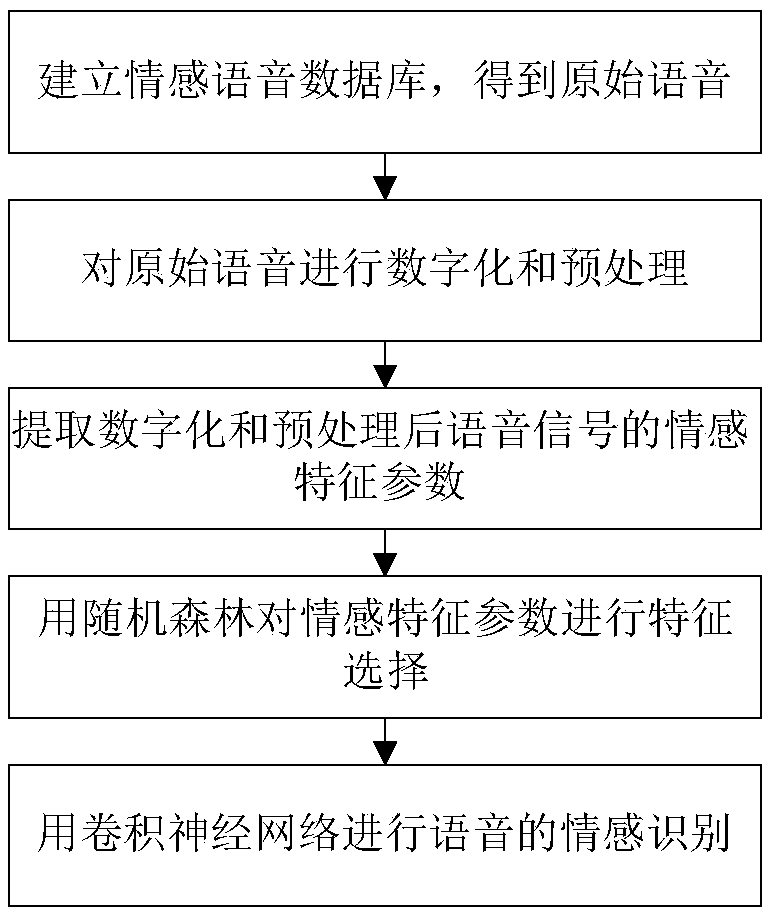

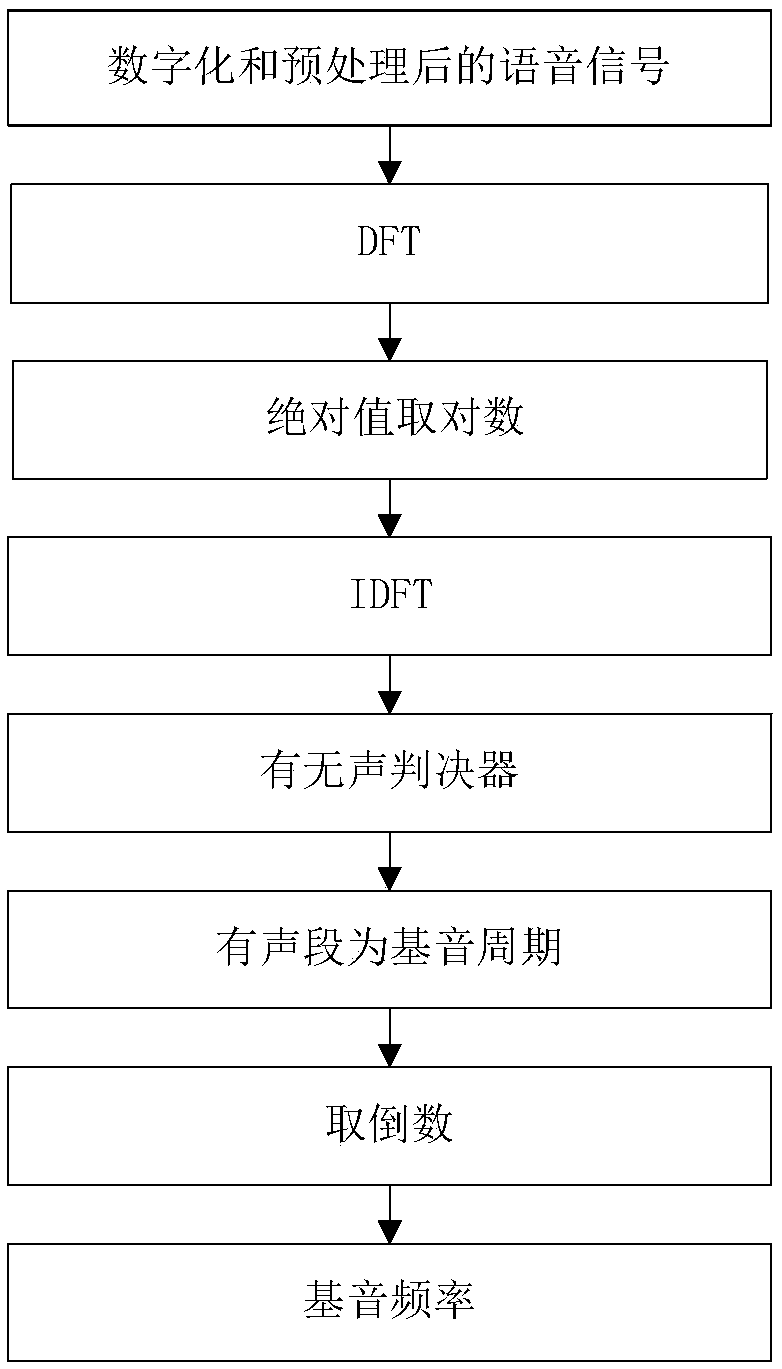

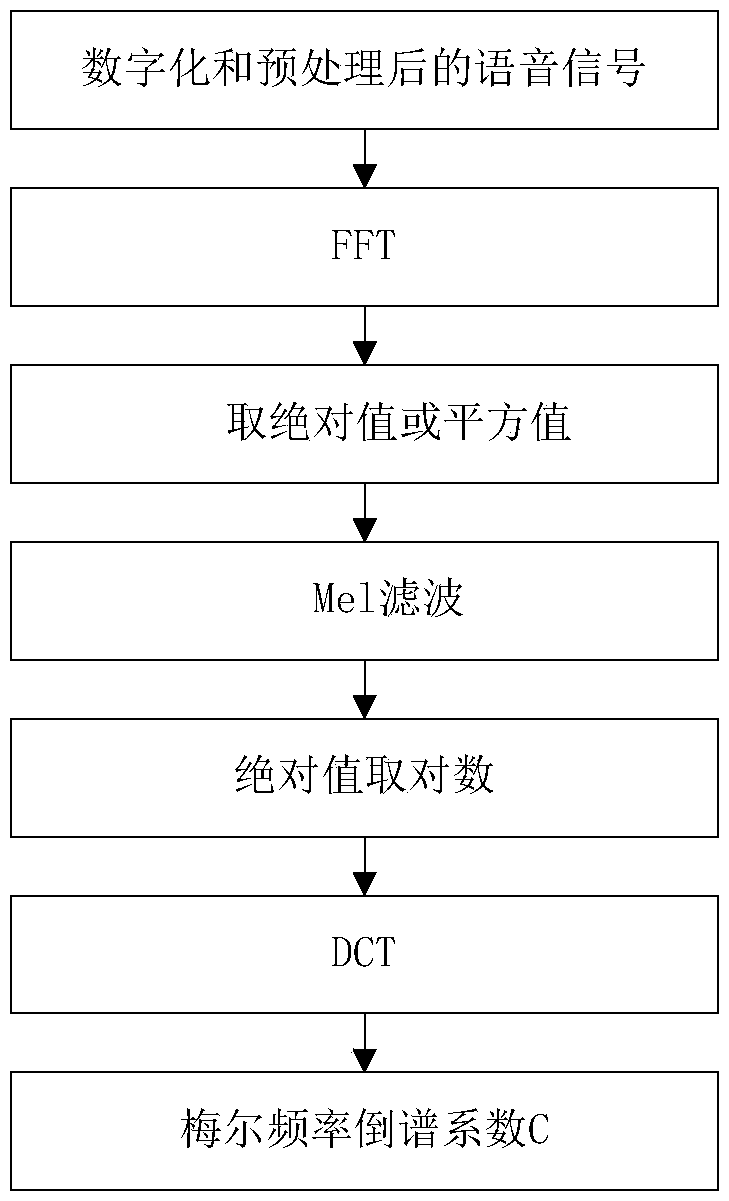

Voice emotion recognition method based on feature selection and optimization

Inactive | CN109493886A | Tags: Avoid cases that only apply to samples that follow a Gaussian distribution, Avoid the downside of ignoring category attributes, Speech analysis, Medical diagnosis, Feature parameter

The invention discloses a voice emotion recognition method based on feature selection and optimization, which mainly addresses the low accuracy of speech emotion recognition in the prior art. The method includes the following steps: 1) establishing an emotional speech database and obtaining the original speech; 2) preprocessing the original speech, including pre-emphasis, framing and windowing, and endpoint detection; 3) extracting emotion feature parameters from the preprocessed speech; 4) selecting the optimal speech emotion feature parameters using a random forest algorithm; 5) inputting the optimal speech emotion feature parameters into a trained convolutional neural network to obtain the speech emotion recognition result. By analyzing the importance of the speech emotion features, the optimal feature parameters are obtained, which improves the accuracy of the convolutional neural network in speech emotion recognition. The method can be applied to mobile phone communication, human-computer interaction, and recognition of speaker emotion in medical diagnosis and criminal investigation.

Owner:XIDIAN UNIV
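
Step 2 of the method mentions pre-emphasis and framing with windowing. A minimal NumPy sketch of those two standard preprocessing operations (endpoint detection omitted). The pre-emphasis coefficient 0.97, the Hamming window, and the 400/160-sample frame length and hop (25 ms and 10 ms at an assumed 16 kHz sampling rate) are common defaults, not values taken from the patent.

```python
import numpy as np


def preemphasis(x: np.ndarray, alpha: float = 0.97) -> np.ndarray:
    """y[n] = x[n] - alpha * x[n-1], which boosts high frequencies."""
    return np.append(x[0], x[1:] - alpha * x[:-1])


def frame_and_window(x: np.ndarray, frame_len: int = 400, hop: int = 160) -> np.ndarray:
    """Split x into overlapping frames (one per row) and apply a Hamming window."""
    if len(x) < frame_len:
        x = np.pad(x, (0, frame_len - len(x)))
    n_frames = 1 + (len(x) - frame_len) // hop
    # Row i holds sample indices i*hop ... i*hop + frame_len - 1.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx] * np.hamming(frame_len)
```

Each row of the result is one windowed frame, ready for per-frame feature extraction in step 3.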

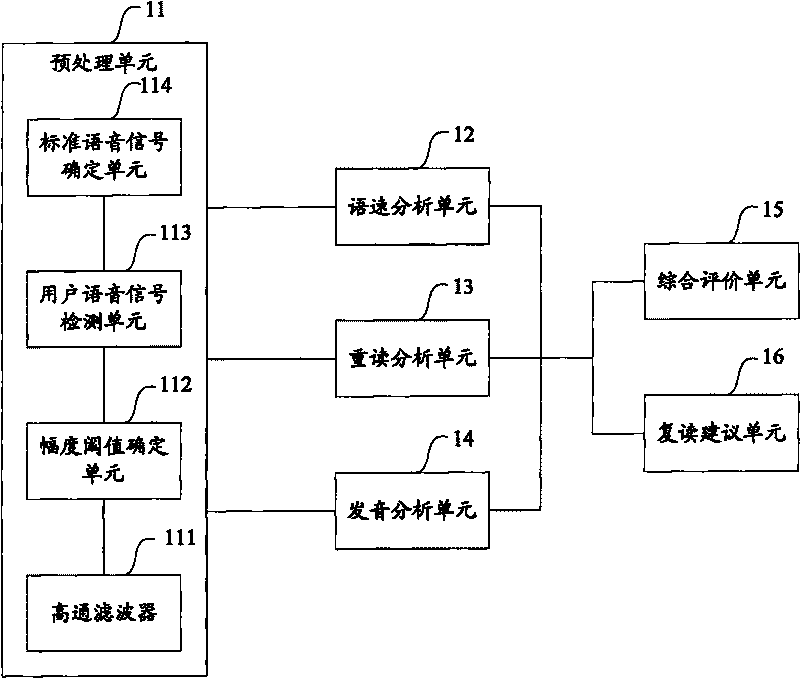

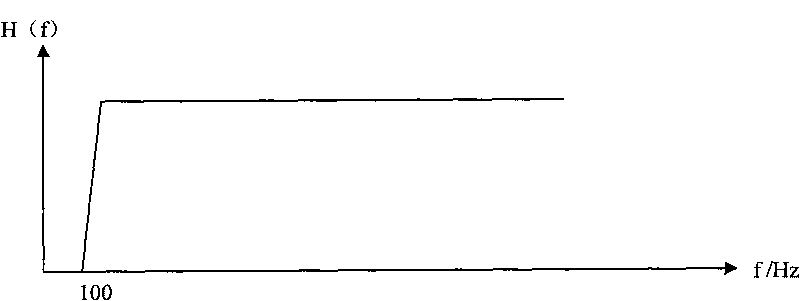

Method and equipment for detecting user pronunciation

Inactive | CN101727900A | Tags: Implement speed detection, Accurate analysis, Speech recognition, Audio frequency, Speech sound

Owner:WUXI ZGMICRO ELECTRONICS CO LTD

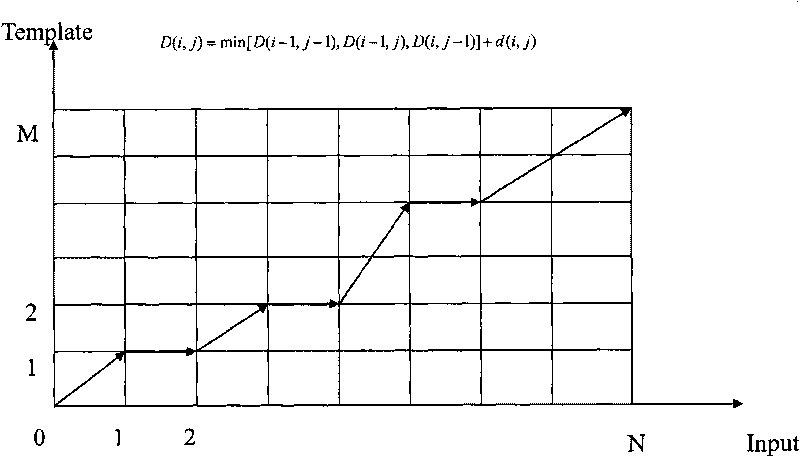

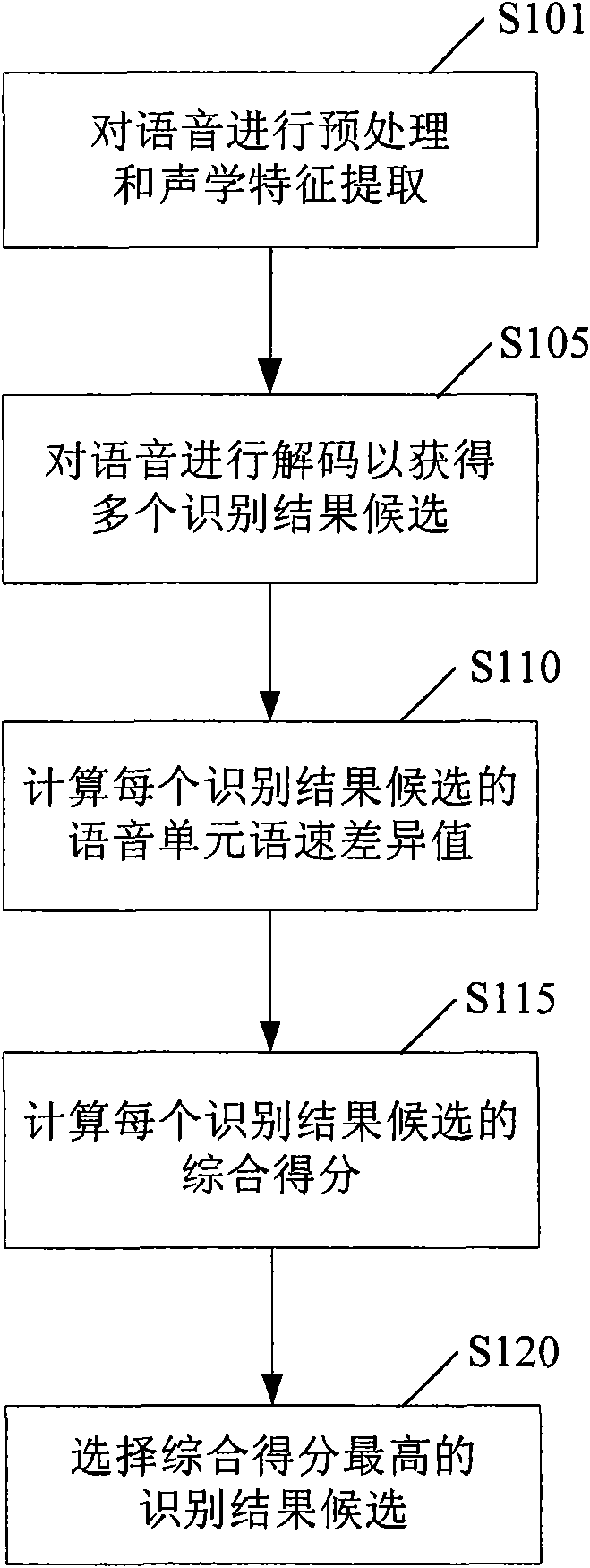

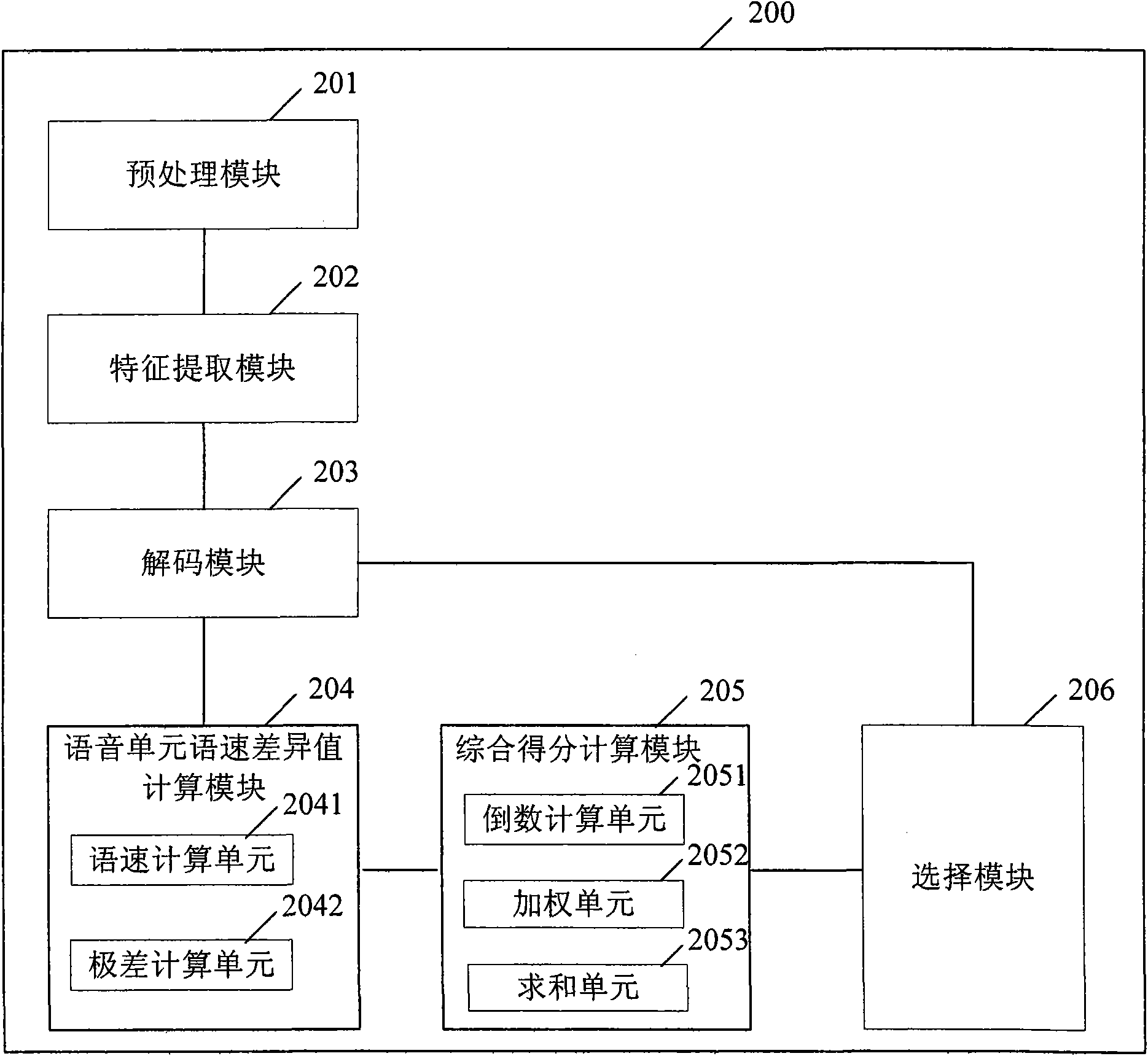

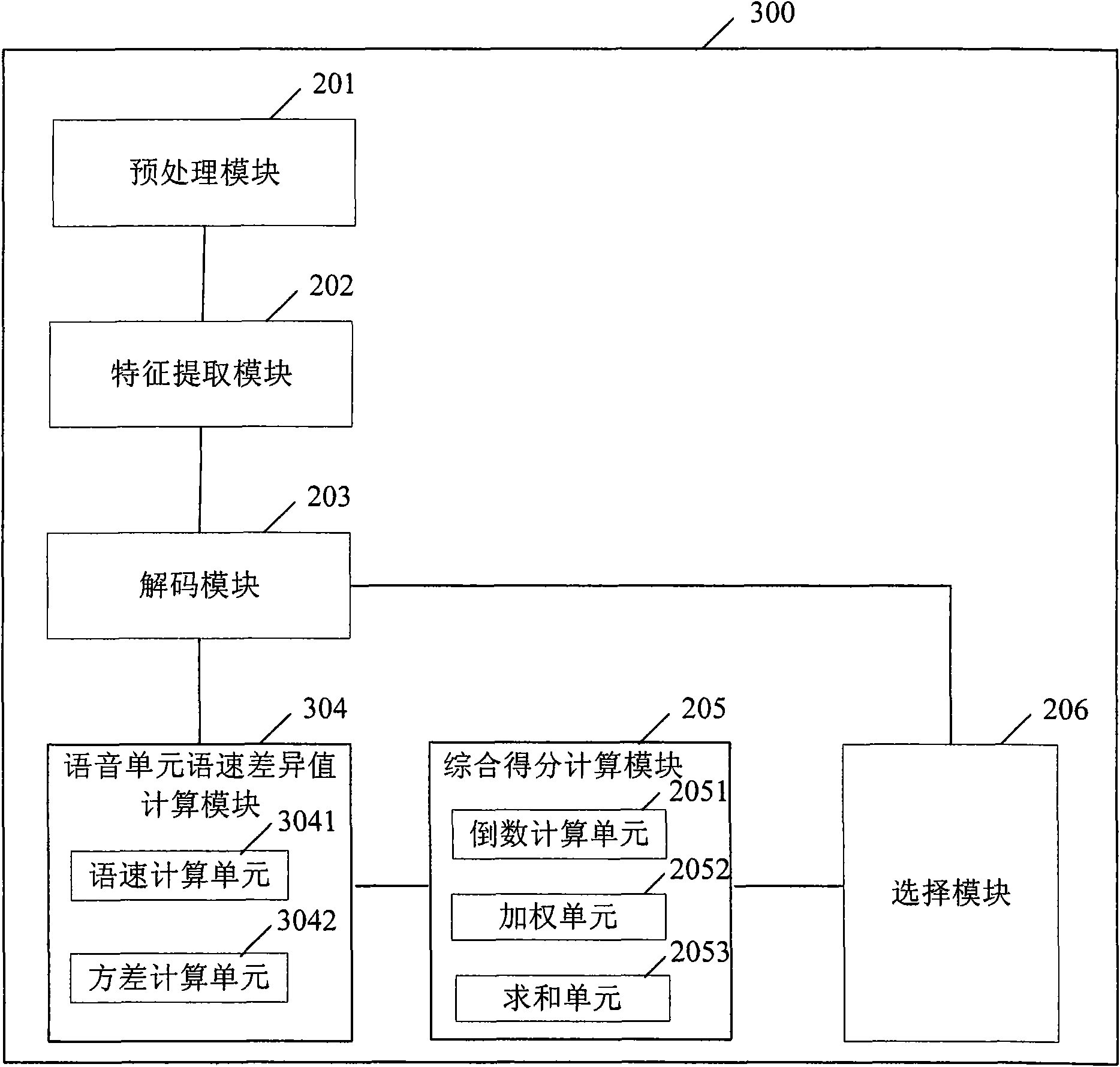

Speech recognition method based on speed difference of voice unit and system thereof

Inactive | CN102013253A | Tags: Improve speech recognition performance, Speech recognition, Acoustic model, Speech sound

The present invention relates to a speech recognition method based on the speed difference of voice units, comprising: preprocessing an input speech; extracting acoustic features of the speech; decoding the speech according to a pre-trained acoustic model and the extracted acoustic features to obtain a plurality of candidate recognition results, each of which has an acoustic score and the segment lengths (durations) of the voice units it contains; calculating, for each candidate recognition result, the speed difference of its voice units based on those segment lengths; calculating a comprehensive score for each candidate recognition result based on the speed difference and the acoustic score; and selecting the candidate recognition result with the highest comprehensive score as the final recognition result. The present invention also provides a corresponding speech recognition system.

Owner:KK TOSHIBA
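
The abstract does not define the speed difference or how it is combined with the acoustic score. One plausible sketch, assuming a table of average durations per voice unit: each unit's relative speed is the log of its average duration over its observed duration, the speed difference is the standard deviation of those values within a candidate (so a hypothesis whose units would have to be spoken at inconsistent speeds is penalized), and the comprehensive score subtracts a weighted speed difference from the acoustic score. `Candidate`, `speed_difference`, `pick_best`, and `weight` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Candidate:
    text: str
    acoustic_score: float            # e.g. a log-likelihood from the decoder
    units: List[Tuple[str, float]]   # (voice unit, segment length in seconds)


def speed_difference(units: List[Tuple[str, float]], mean_duration: Dict[str, float]) -> float:
    """Spread of per-unit log relative speeds (0.0 means perfectly even speed)."""
    if not units:
        return 0.0
    # > 0 means the unit was spoken faster than its average duration suggests.
    speeds = [math.log(mean_duration[u] / d) for u, d in units]
    mu = sum(speeds) / len(speeds)
    return math.sqrt(sum((s - mu) ** 2 for s in speeds) / len(speeds))


def pick_best(cands: List[Candidate], mean_duration: Dict[str, float],
              weight: float = 10.0) -> Candidate:
    """Highest comprehensive score: acoustic score minus weighted speed difference."""
    def comprehensive(c: Candidate) -> float:
        return c.acoustic_score - weight * speed_difference(c.units, mean_duration)
    return max(cands, key=comprehensive)
```

With `weight=0.0` this reduces to ordinary selection by acoustic score, which makes the effect of the speed term easy to compare.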

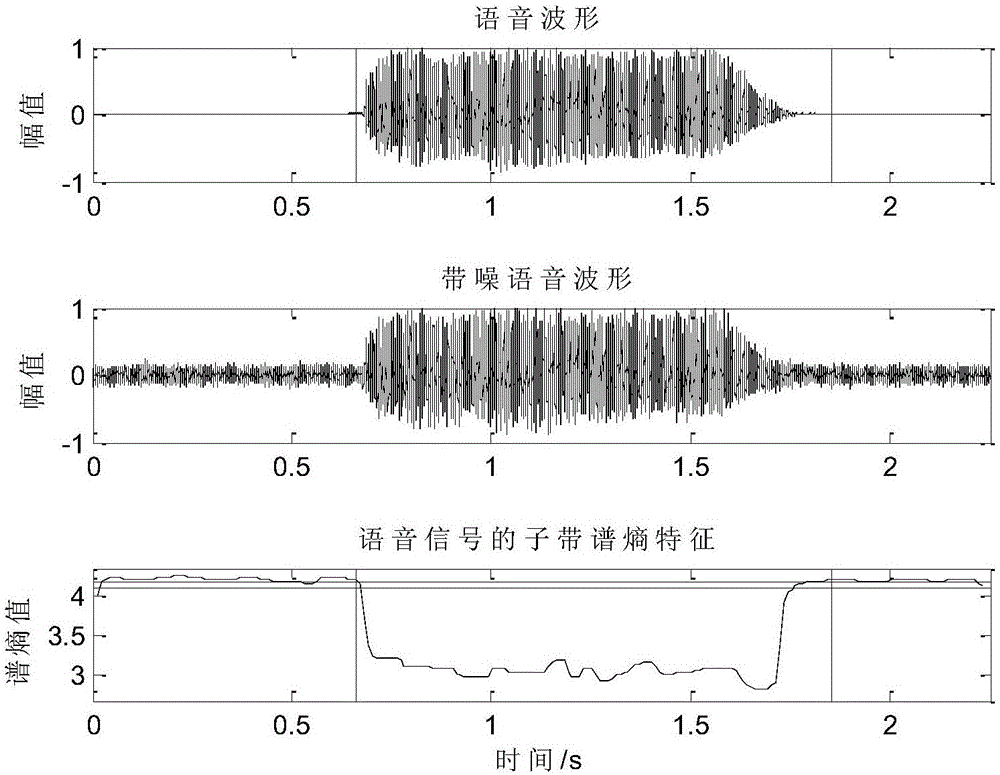

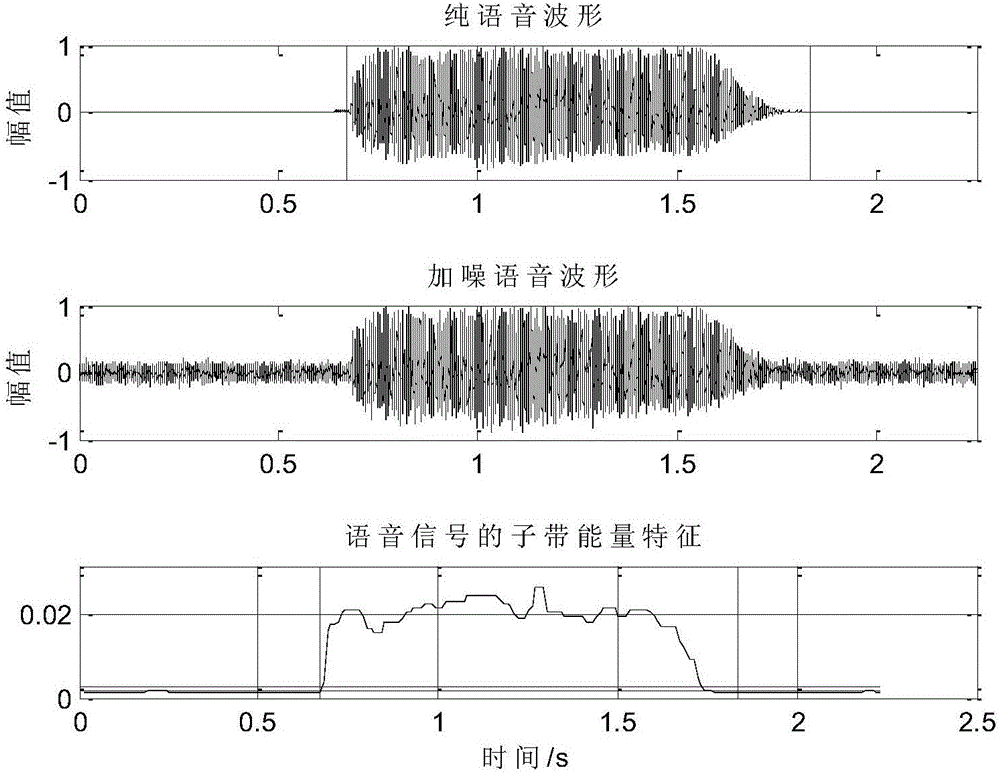

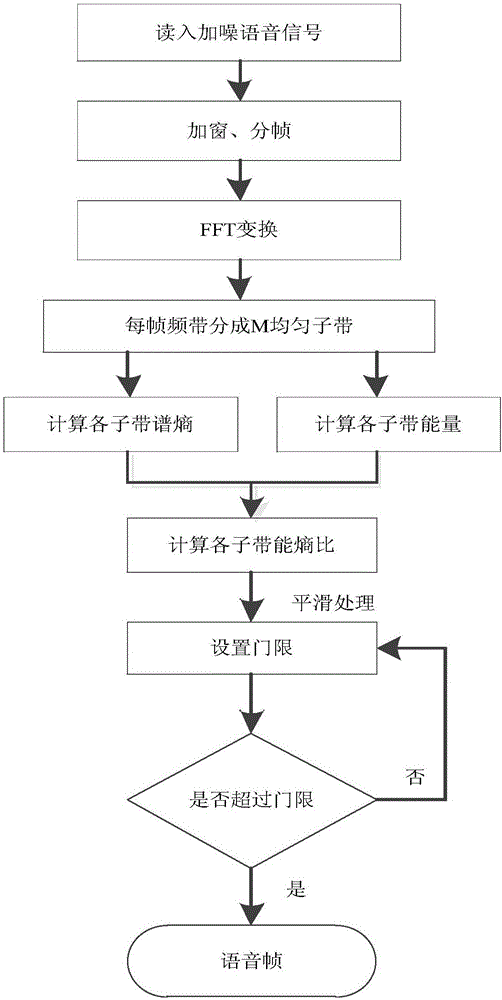

Spectrum-entropy improvement based speech endpoint detection method in low signal-to-noise ratio environment

Inactive | CN106653062A | Tags: Easy to detect, Improve recognition rate, Speech analysis, Environmental noise, Signal-to-noise ratio (imaging)

The invention provides a speech endpoint detection method based on improved spectral entropy for low signal-to-noise-ratio (SNR) environments. Existing speech endpoint detection systems have low accuracy in low-SNR environments when recognizing the current speaker; the proposed method improves the accuracy of speech endpoint detection under these conditions. It comprises the following steps: (1) preprocessing the speech signal according to its characteristics; (2) dividing the frequency band of each frame of the speech signal into sub-bands and calculating the spectral entropy and energy of each sub-band, finally obtaining the energy-entropy ratio SEH of the sub-bands; and (3) setting a suitable threshold and, in combination with median filtering, obtaining the start and end positions of the speech. The median filtering removes the influence of environmental noise and makes the speech signal more stable, so the accuracy of endpoint detection in low-SNR environments is improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
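
The three steps can be sketched in NumPy. This is a simplified stand-in, not the patent's exact SEH formula: each frame's power spectrum is split into equal sub-bands, the sub-band energies are normalized into a distribution whose entropy serves as the spectral entropy, and the frame score is log(1 + energy) divided by that entropy, so loud, spectrally concentrated (speech-like) frames score high and flat, quiet noise scores low. The score track is median-filtered and thresholded halfway between its minimum and maximum; the frame sizes, 16 sub-bands, filter width, and threshold rule are all assumptions.

```python
from typing import Optional, Tuple

import numpy as np


def energy_entropy_ratio(x: np.ndarray, frame_len: int = 256, hop: int = 128,
                         n_bands: int = 16) -> np.ndarray:
    """Per-frame score: log(1 + energy) / sub-band spectral entropy."""
    n_frames = 1 + (len(x) - frame_len) // hop
    window = np.hamming(frame_len)
    scores = np.empty(n_frames)
    for i in range(n_frames):
        frame = x[i * hop: i * hop + frame_len] * window
        power = np.abs(np.fft.rfft(frame)) ** 2
        # Sub-band energies from contiguous groups of FFT bins.
        bands = np.array([b.sum() for b in np.array_split(power, n_bands)])
        energy = bands.sum()
        p = bands / (energy + 1e-12)
        entropy = -np.sum(p * np.log(p + 1e-12))
        scores[i] = np.log1p(energy) / (entropy + 1e-6)
    return scores


def median_filter(v: np.ndarray, width: int = 5) -> np.ndarray:
    """Sliding-window median with edge padding; suppresses isolated noisy frames."""
    half = width // 2
    padded = np.pad(v, half, mode="edge")
    return np.array([np.median(padded[i:i + width]) for i in range(len(v))])


def detect_endpoints(x: np.ndarray, fs: int, frame_len: int = 256,
                     hop: int = 128) -> Optional[Tuple[float, float]]:
    """Return (start_s, end_s) of the detected speech, or None if nothing stands out."""
    if len(x) < frame_len:
        return None
    scores = median_filter(energy_entropy_ratio(x, frame_len, hop))
    threshold = scores.min() + 0.5 * (scores.max() - scores.min())
    active = np.flatnonzero(scores > threshold)
    if active.size == 0:
        return None
    start_s = active[0] * hop / fs
    end_s = (active[-1] * hop + frame_len) / fs
    return float(start_s), float(end_s)
```

The median filter preserves the sharp rise and fall of a genuine speech region while removing single-frame spikes, which is why it is applied before thresholding.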

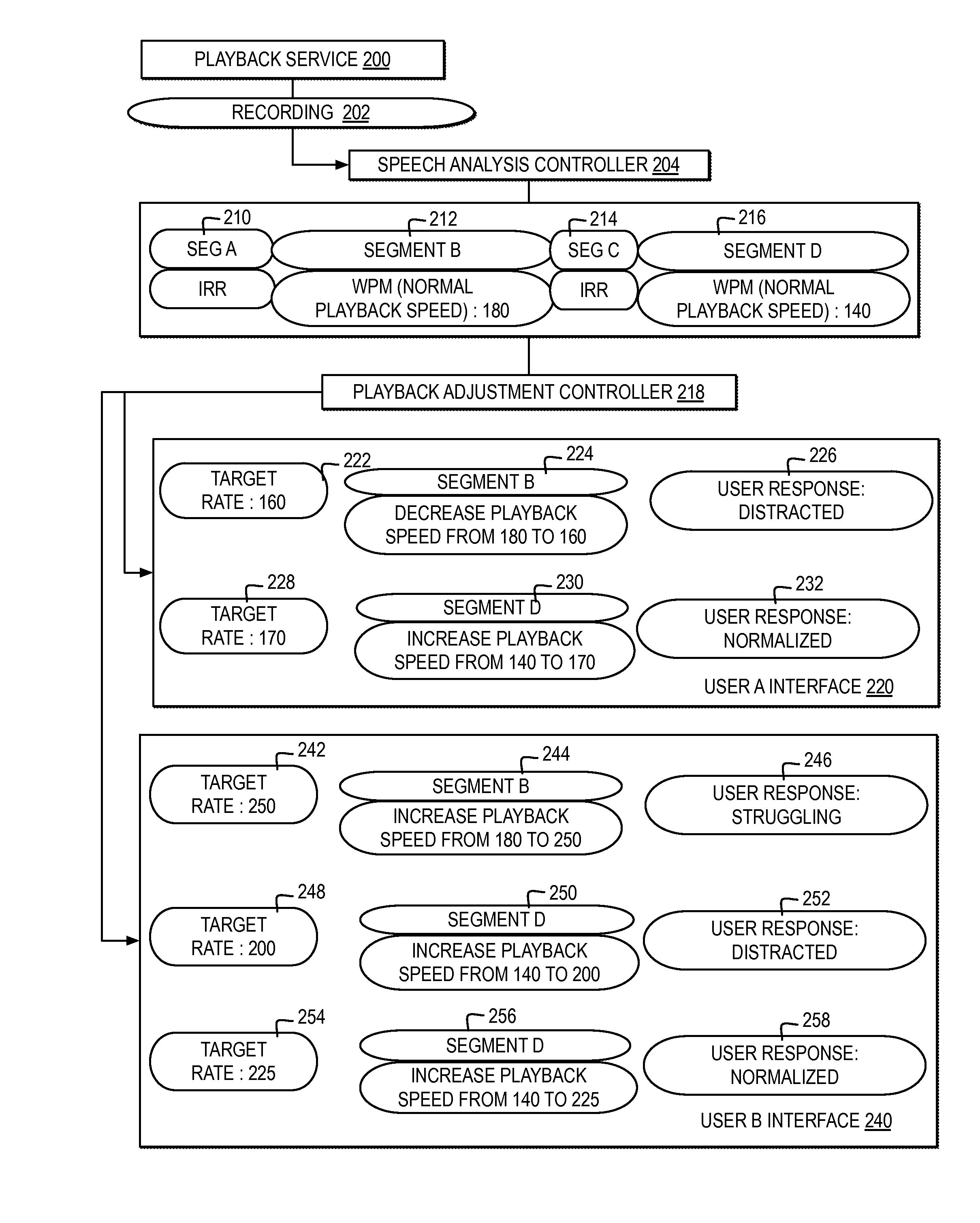

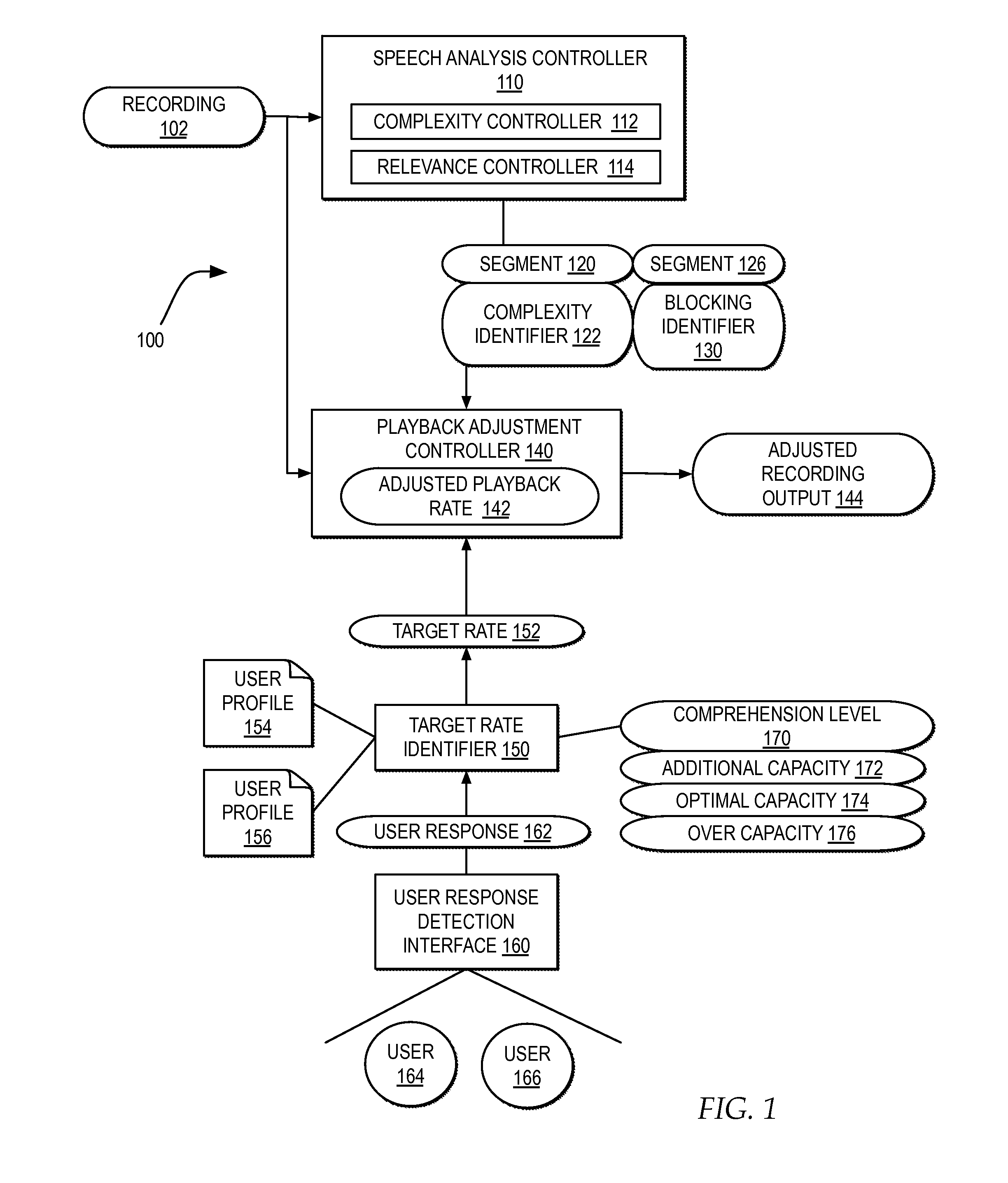

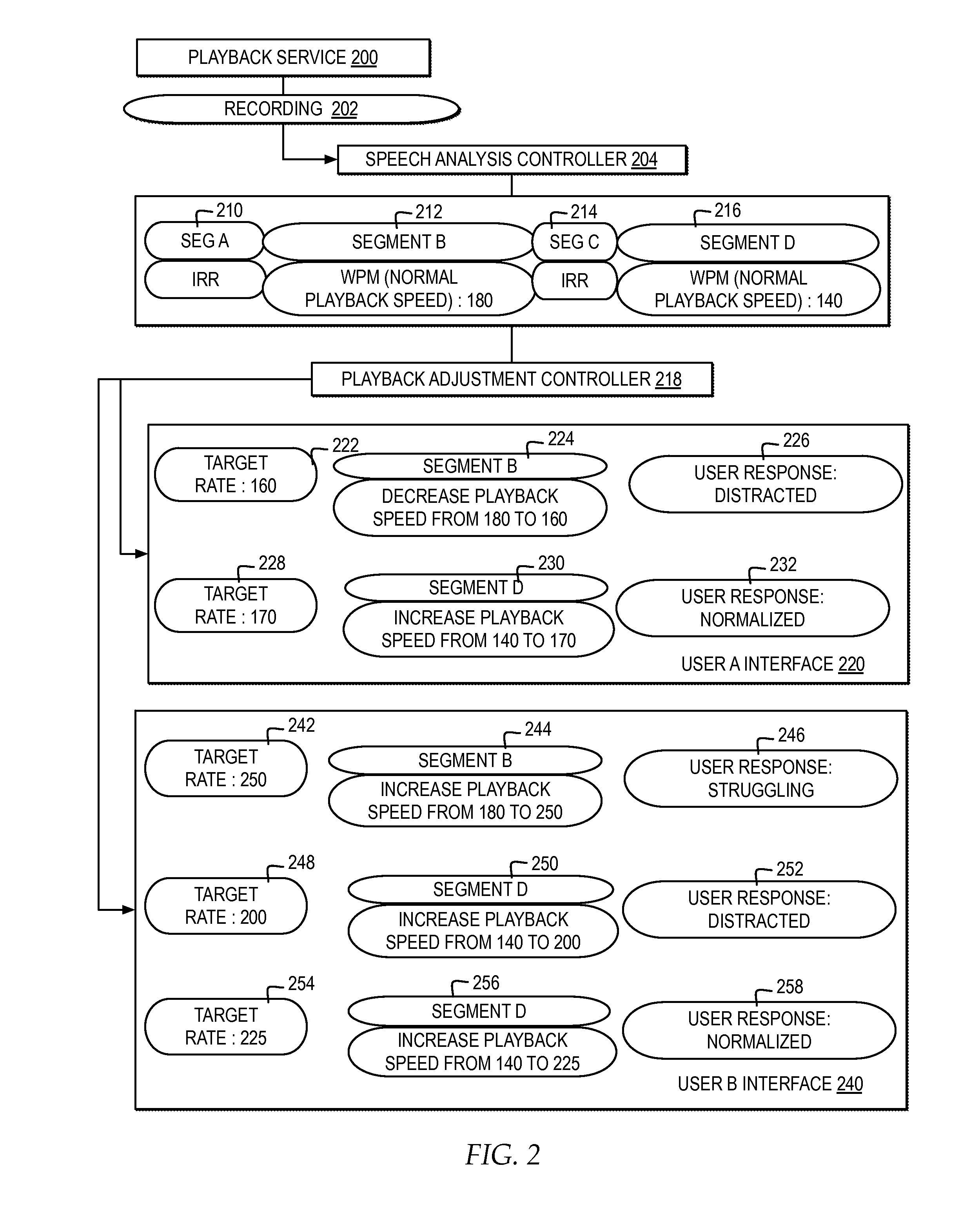

Adapting a playback of a recording to optimize comprehension

Active | US20170064244A1 | Tags: Easy to understand, Television system details, Recording carrier details, Speech sound, Rate of speech

Each segment of a recording is analyzed for complexity, specifying a rate of speech at normal playback speed. A target rate is selected at which to play back the recording, the target rate specifying the fastest optimal speed at which a particular user listening to the recording is able to comprehend the playback. During playback of the recording, a separate adjusted playback rate is selected for each segment to adjust the rate of speech from the rate at normal playback speed to the target rate.

Owner:IBM CORP
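
The per-segment adjustment reduces to a ratio: if a segment's rate of speech at normal speed is r words per minute and the target is T, playing it at T / r times normal speed brings it to the target. A minimal sketch; the clamping range and the choice to leave segments with no detected speech at normal speed are assumptions, and `adjusted_playback_rates` is a hypothetical name.

```python
from typing import List, Sequence


def adjusted_playback_rates(segment_wpm: Sequence[float], target_wpm: float,
                            min_rate: float = 0.5, max_rate: float = 2.0) -> List[float]:
    """Playback-speed multiplier for each segment (1.0 = normal speed)."""
    rates = []
    for wpm in segment_wpm:
        if wpm <= 0:
            # No measurable speech (e.g. silence or music): keep normal speed.
            rates.append(1.0)
        else:
            # Scale so that wpm * rate == target_wpm, within fixed bounds.
            rates.append(min(max(target_wpm / wpm, min_rate), max_rate))
    return rates
```

For example, with a 200 wpm target, a slow 100 wpm segment is played at 2.0x and a dense 400 wpm segment at 0.5x, so both reach the listener at roughly the same rate.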

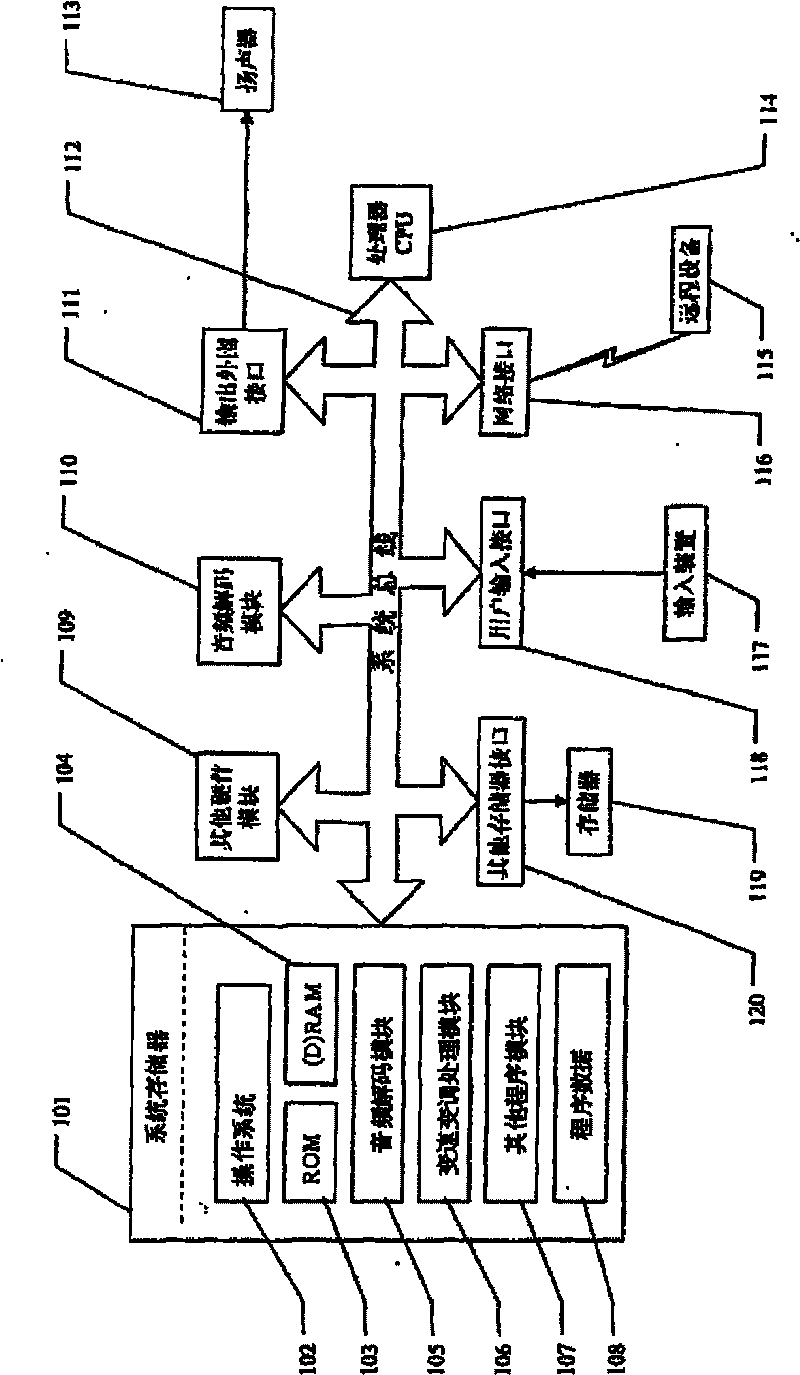

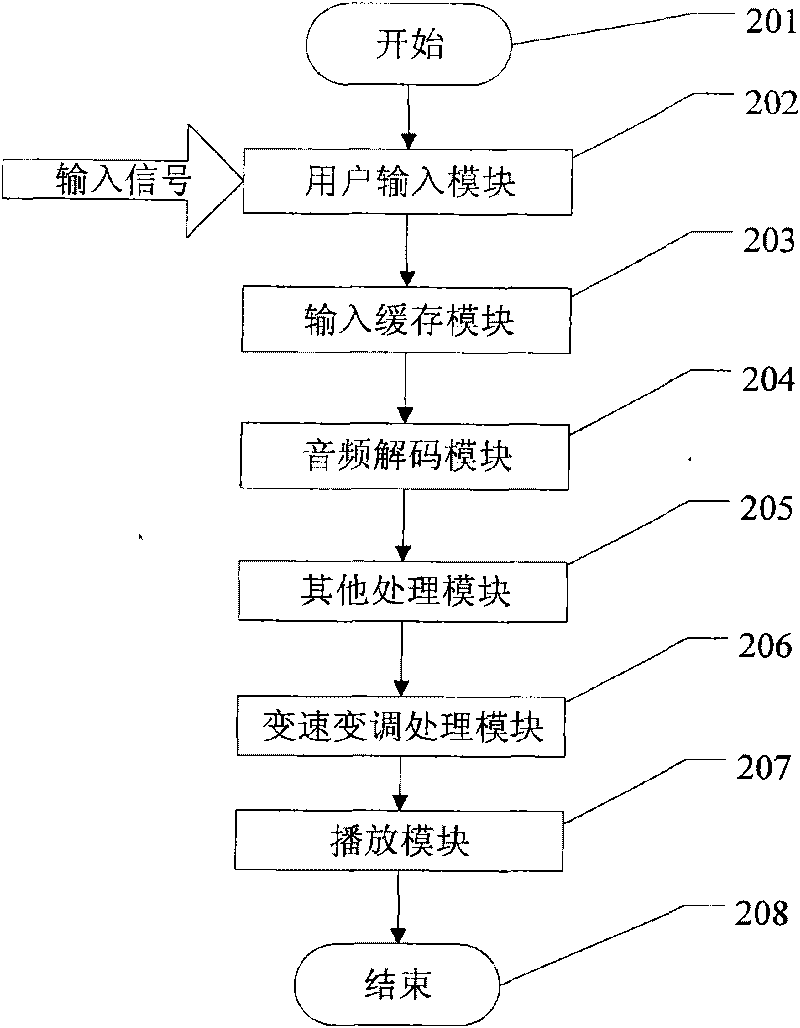

Method for realizing sound speed-variation without tone variation and system for realizing speed variation and tone variation

Inactive | CN101740034A | Tags: Realize function, Improve sound quality, Speech analysis, Record information storage, State variation, Sound quality

The invention discloses a system for realizing sound speed variation and tone (pitch) variation, comprising an input cache module, a tone variation processing module, a speed-variation no-tone-variation processing module, and a data output module. The input cache module reads the sound signal data to be processed into the cache; the tone variation processing module performs tone variation processing on the sound signal to change its tone; the speed-variation no-tone-variation processing module performs speed-variation no-tone-variation processing on the sound signal, changing the sound speed without changing the tone; and the data output module outputs the speed-varied, tone-varied signal. The speed-variation no-tone-variation processing module comprises a segmentation data module and a connection data module: the segmentation data module uses a window function to extract a string of signal subfamilies (namely, small sections of sound) from the original speech signal according to the speed variation coefficient, and the connection data module connects the signal subfamilies in time order to obtain the speed-variation no-tone-variation signal. The invention realizes both the speed-variation no-tone-variation function and the speed-variation tone-variation function for audio with very low algorithmic complexity and without introducing noise, thereby improving the quality of the processed sound.

Owner:刘盛举 +1
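
Extracting windowed segments at a spacing set by the speed coefficient and joining them in time order is the idea behind overlap-add (OLA) time-scale modification. A minimal NumPy sketch of plain OLA, assuming a Hann window and fixed frame sizes (the patent's window function and parameters are not given here): frames are read every `synthesis_hop * speed` samples and written every `synthesis_hop` samples, so the duration changes by roughly 1/speed while the waveform inside each frame, and hence its pitch, is kept. Plain OLA can produce phase discontinuities between frames; refinements such as WSOLA align frames before joining them.

```python
import numpy as np


def ola_time_stretch(x: np.ndarray, speed: float,
                     frame_len: int = 1024, synthesis_hop: int = 256) -> np.ndarray:
    """Change duration by about 1/speed without resampling (speed > 1 is faster)."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    analysis_hop = max(1, int(round(synthesis_hop * speed)))
    window = np.hanning(frame_len)
    n_frames = max(1, 1 + (len(x) - frame_len) // analysis_hop)
    out_len = (n_frames - 1) * synthesis_hop + frame_len
    y = np.zeros(out_len)
    weight = np.zeros(out_len)
    for i in range(n_frames):
        # Segmentation: cut a segment at the analysis position (zero-padded at the end).
        seg = x[i * analysis_hop: i * analysis_hop + frame_len]
        seg = np.pad(seg, (0, frame_len - len(seg)))
        # Connection: window it and overlap-add at the synthesis position, in time order.
        start = i * synthesis_hop
        y[start:start + frame_len] += seg * window
        weight[start:start + frame_len] += window
    # Normalize by the summed window so overlapping frames do not change loudness.
    weight[weight < 1e-8] = 1.0
    return y / weight
```

Halving the duration of a 440 Hz tone this way keeps its spectral peak near 440 Hz, whereas simply resampling to twice the speed would move it to 880 Hz.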

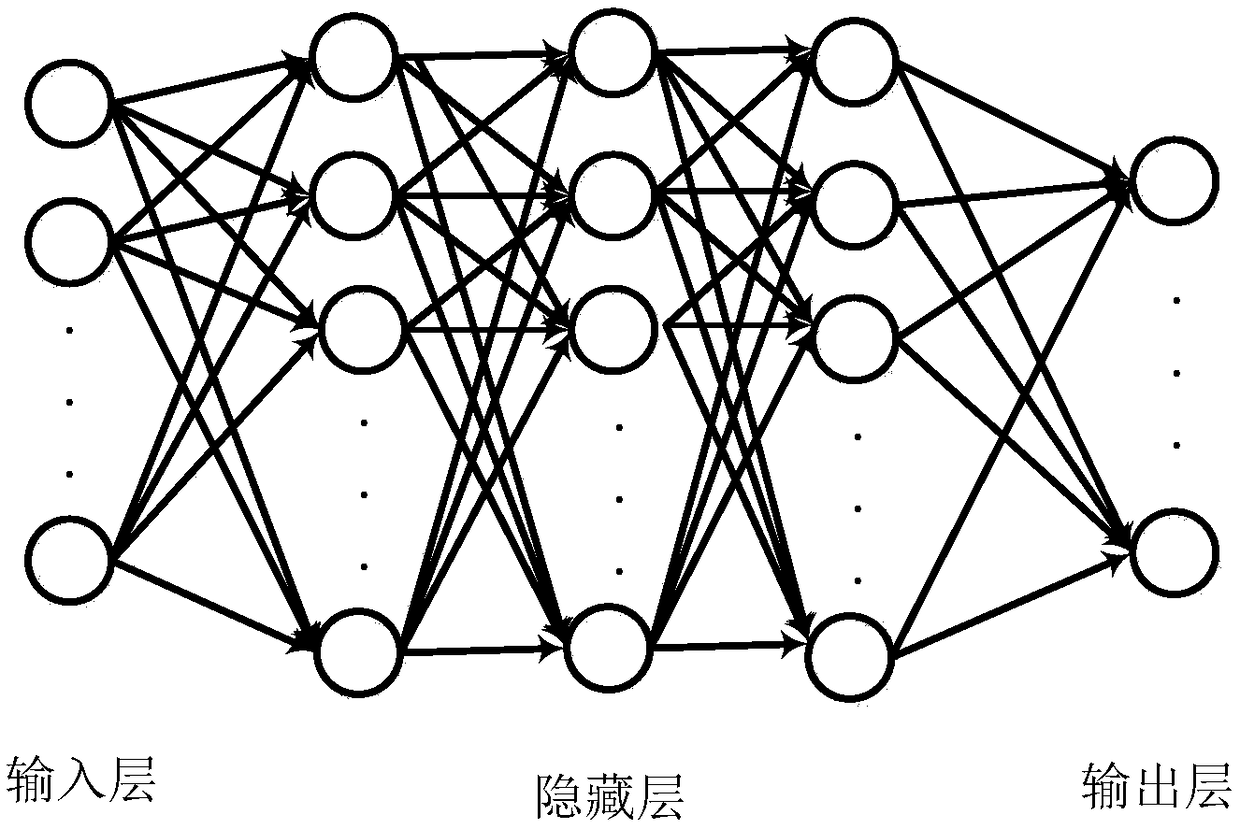

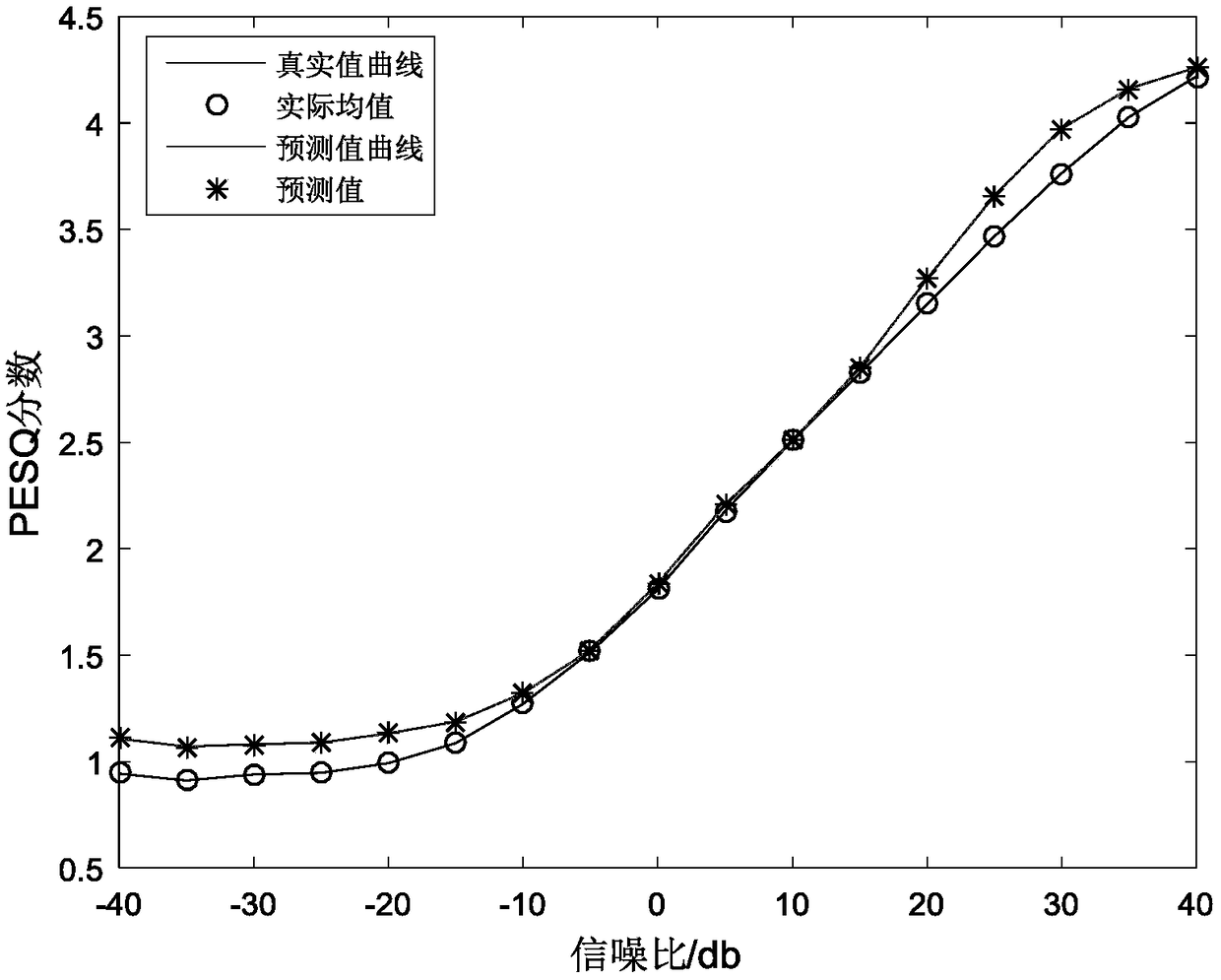

Deep-neural-network-based objective evaluation method for speech quality

Active | CN109065072A | Tags: Improve relevance, The evaluation result is accurate, Speech analysis, Feature vector, PESQ

The invention discloses a deep-neural-network-based objective evaluation method for speech quality. The method comprises: step one, constructing and training a deep neural network, using data generated from three kinds of speech features of noisy speech as the network input and the PESQ score of the actual target speech as the training target; and step two, inputting the q-frame speech feature vectors of the speech to be evaluated into the trained network and outputting a quality evaluation score for that speech. With this method, the quality of the target speech can be evaluated objectively when only the target speech signal is available, and the objective evaluation correlates highly with the evaluation made by the actual PESQ algorithm. The method is suitable for variable-speed speech, avoiding cases in which speech quality cannot be evaluated objectively because the speech speed has changed, and its evaluation results are accurate. Speech quality can be evaluated directly without a clean reference signal.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

Optimal call speed for call center agents

A system and method for handling a call from a caller to a call center includes an automatic call distributor (ACD) to receive the call and to route the call to an agent. A module operates to compute a rate of speech of the caller, and a display graphically displays the rate of speech of the caller to the agent during the call session. It is emphasized that this abstract is provided to comply with the rules requiring an abstract that will allow a searcher or other reader to quickly ascertain the subject matter of the technical disclosure. It is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:CISCO TECH INC

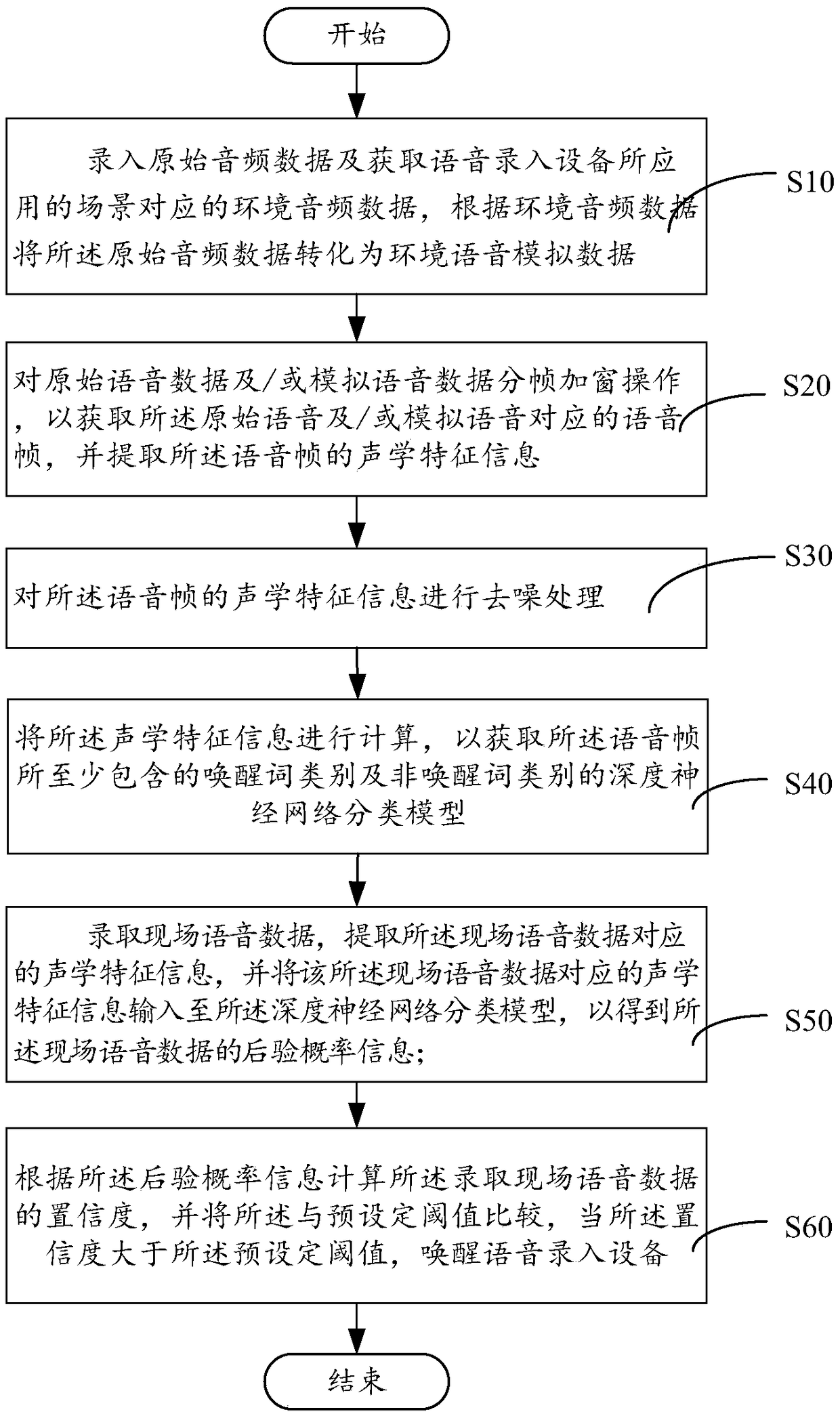

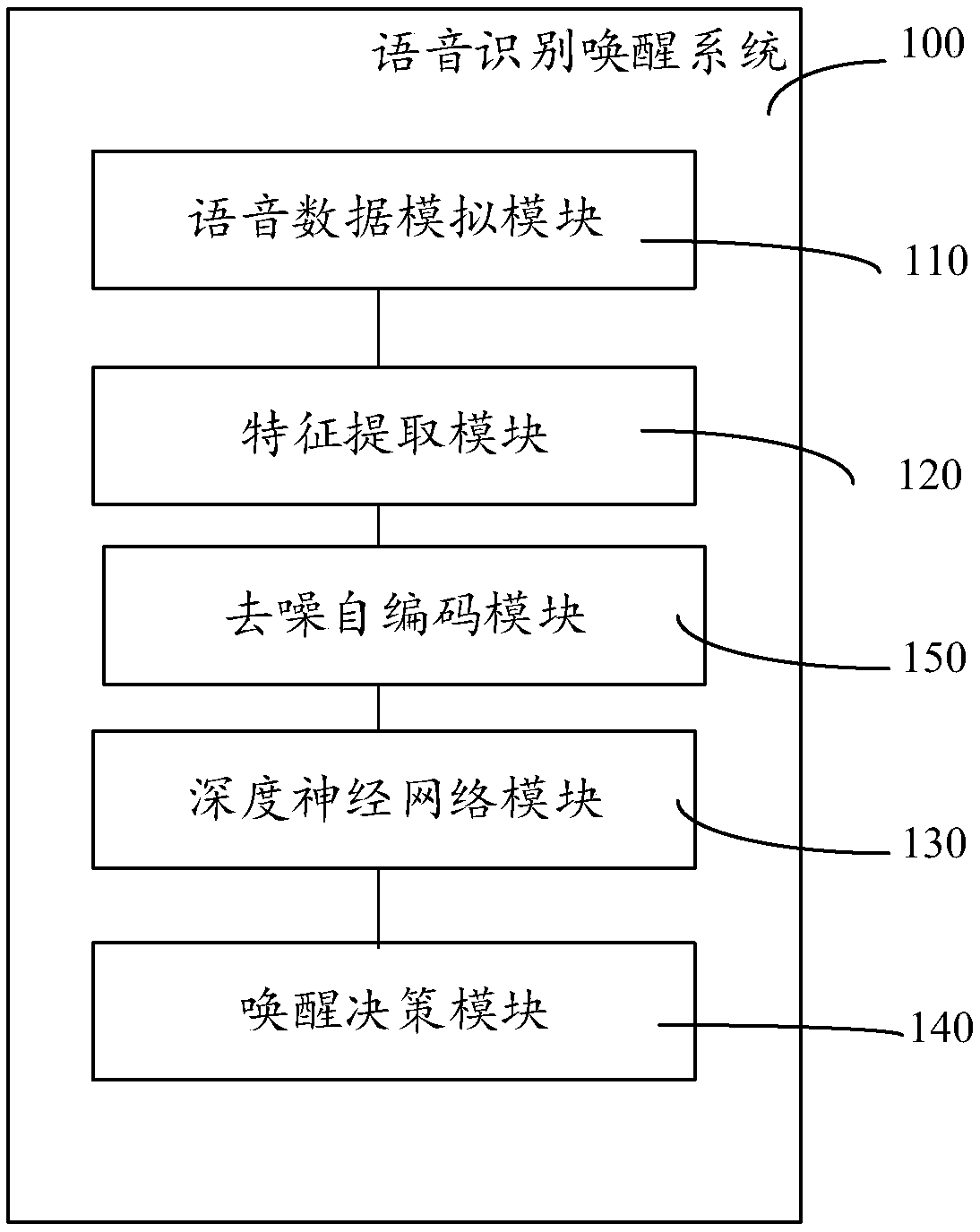

Voice waking-up method and system thereof

Inactive | CN109036412A | Tags: Improve adaptability, Solve the robustness problem, Speech recognition, Rate of speech, Voice data

The invention relates to a voice wake-up method and system. The method comprises the following steps: performing framing and windowing on original voice data to obtain the voice frames corresponding to the original voice, and extracting acoustic feature information from the voice frames; training a deep neural network classification model on the acoustic feature information; recording field voice data, extracting the corresponding acoustic feature information, and inputting it into the deep neural network classification model to obtain posterior probability information; and comparing the posterior probability information with a preset threshold and, when the confidence is higher than the threshold, waking up the voice recording equipment. The method and system effectively improve wake-up performance in noisy scenes, simulate variations in speech speed, pitch, and volume on the original data, and effectively improve the adaptability of the wake-up system to different speakers.

Owner:苏州奇梦者科技有限公司
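
The final decision step compares the classifier's posterior probability with a preset threshold. Keyword-spotting systems commonly smooth frame-level posteriors over a short window before thresholding, so that a single noisy frame cannot trigger a wake-up. A minimal sketch of that decision, assuming per-frame wake-word posteriors are already available from the DNN; the moving-average smoothing, the 5-frame window, and the 0.8 threshold are assumptions, not details from the patent.

```python
from typing import Sequence


def wake_confidence(posteriors: Sequence[float], smooth_window: int = 5) -> float:
    """Highest moving-average posterior over any full window of frames."""
    best = 0.0
    for end in range(smooth_window, len(posteriors) + 1):
        window = posteriors[end - smooth_window:end]
        best = max(best, sum(window) / smooth_window)
    return best


def should_wake(posteriors: Sequence[float], threshold: float = 0.8,
                smooth_window: int = 5) -> bool:
    """Wake the device when the smoothed confidence exceeds the preset threshold."""
    return wake_confidence(posteriors, smooth_window) > threshold
```

A lone spike of 1.0 averages down to 0.2 over five frames and is ignored, while a sustained run of high posteriors passes the threshold.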

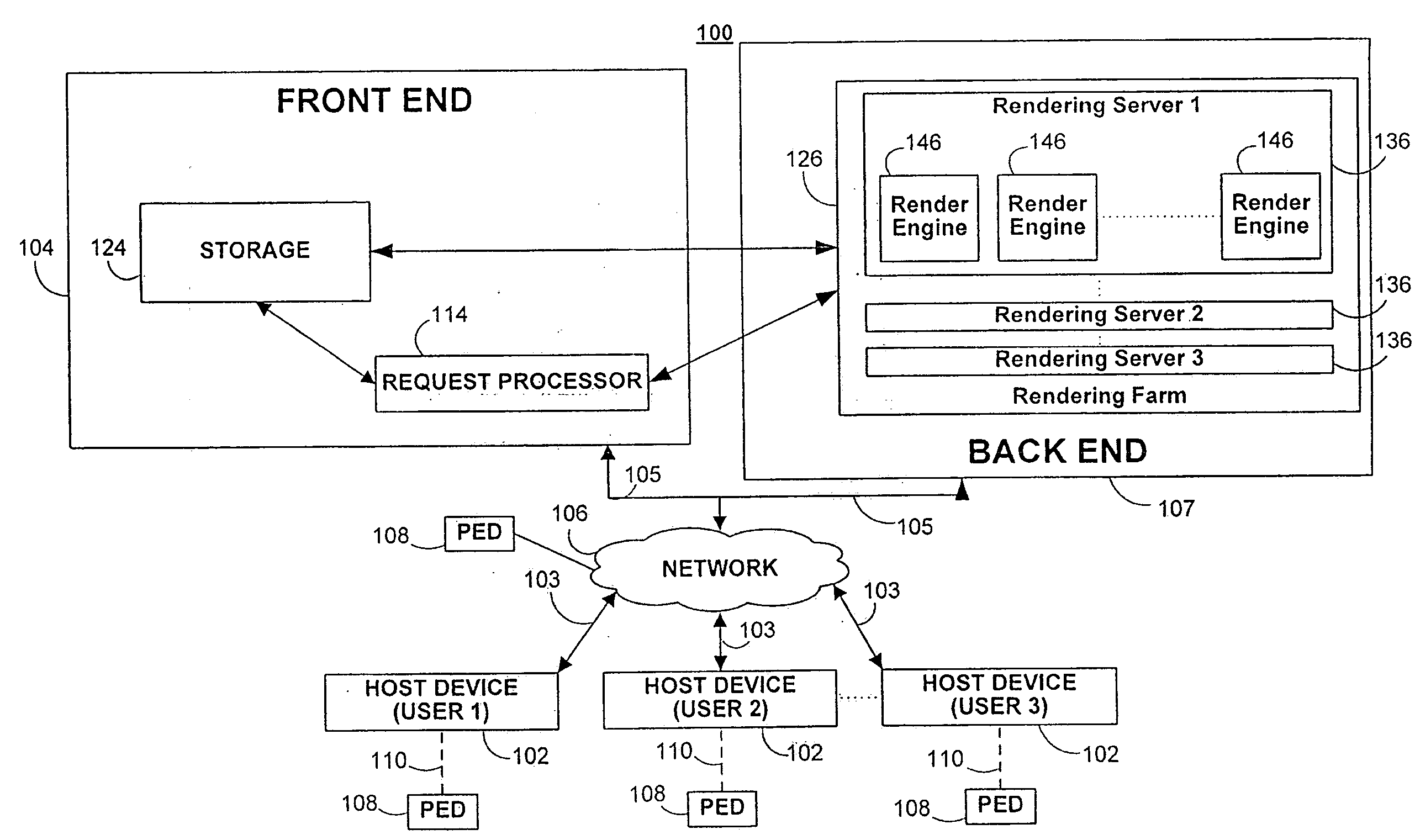

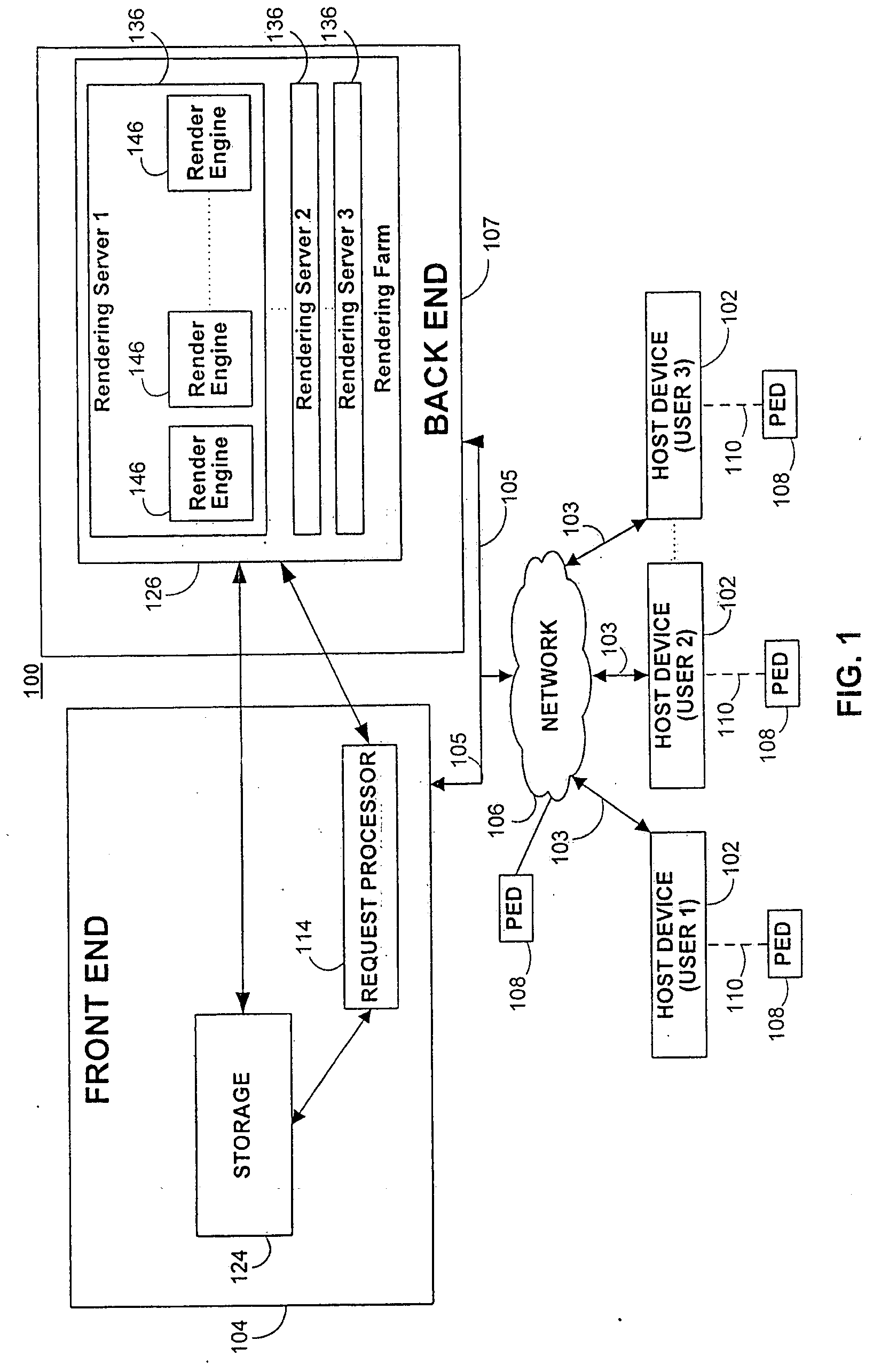

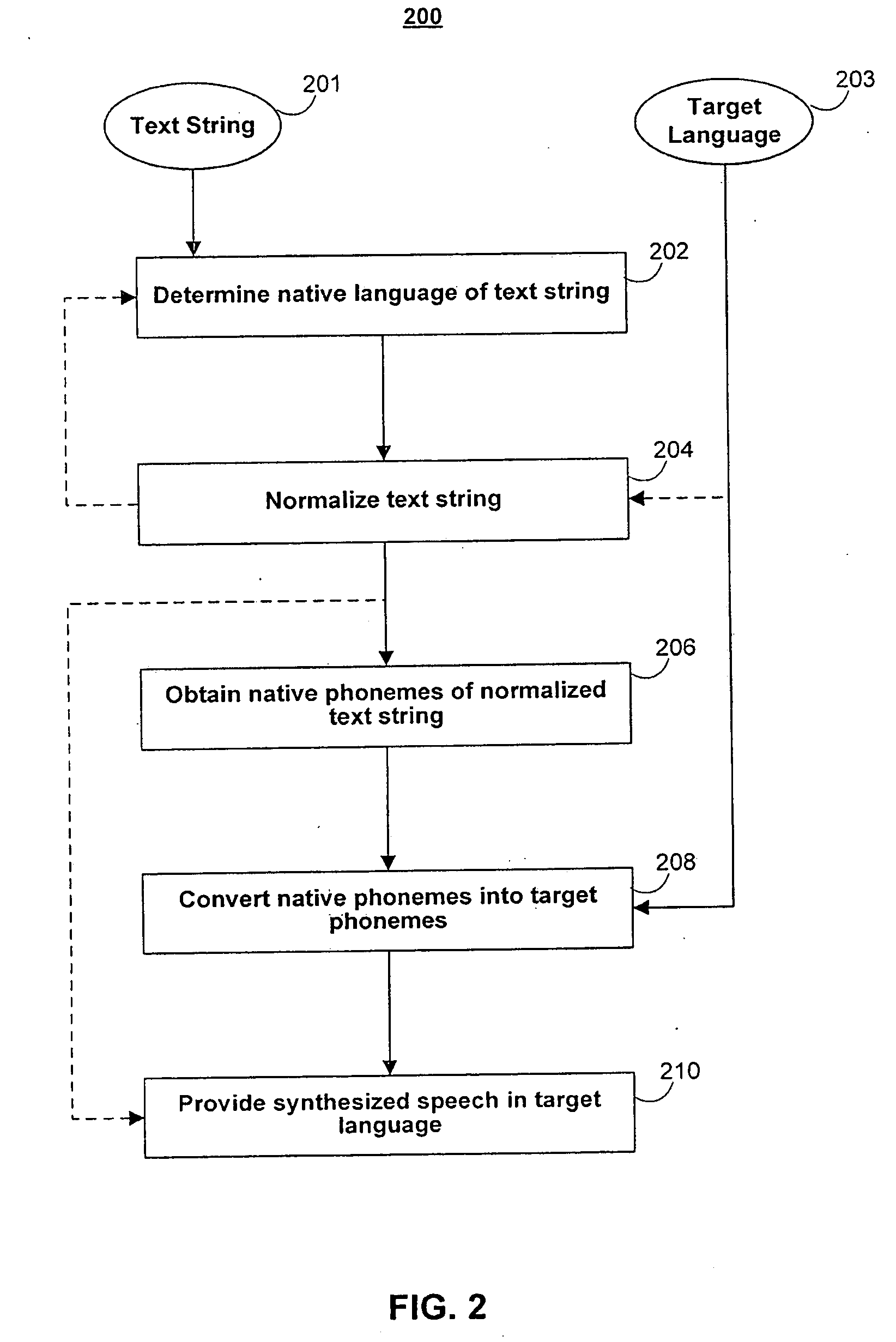

Systems and methods for selective rate of speech and speech preferences for text to speech synthesis

Algorithms for synthesizing speech used to identify media assets are provided. Speech may be selectively synthesized from text strings associated with media assets. A text string may be normalized and its native language determined in order to obtain a target phoneme for providing human-sounding speech in a language (e.g., dialect or accent) that is familiar to a user. The algorithms may be implemented on a system including several dedicated render engines. The system may be part of a back end coupled to a front end including storage for media assets and associated synthesized speech, and a request processor for receiving and processing requests that result in providing the synthesized speech. The front end may communicate media assets and associated synthesized speech content over a network to host devices coupled to portable electronic devices on which the media assets and synthesized speech are played back.

Owner:APPLE INC

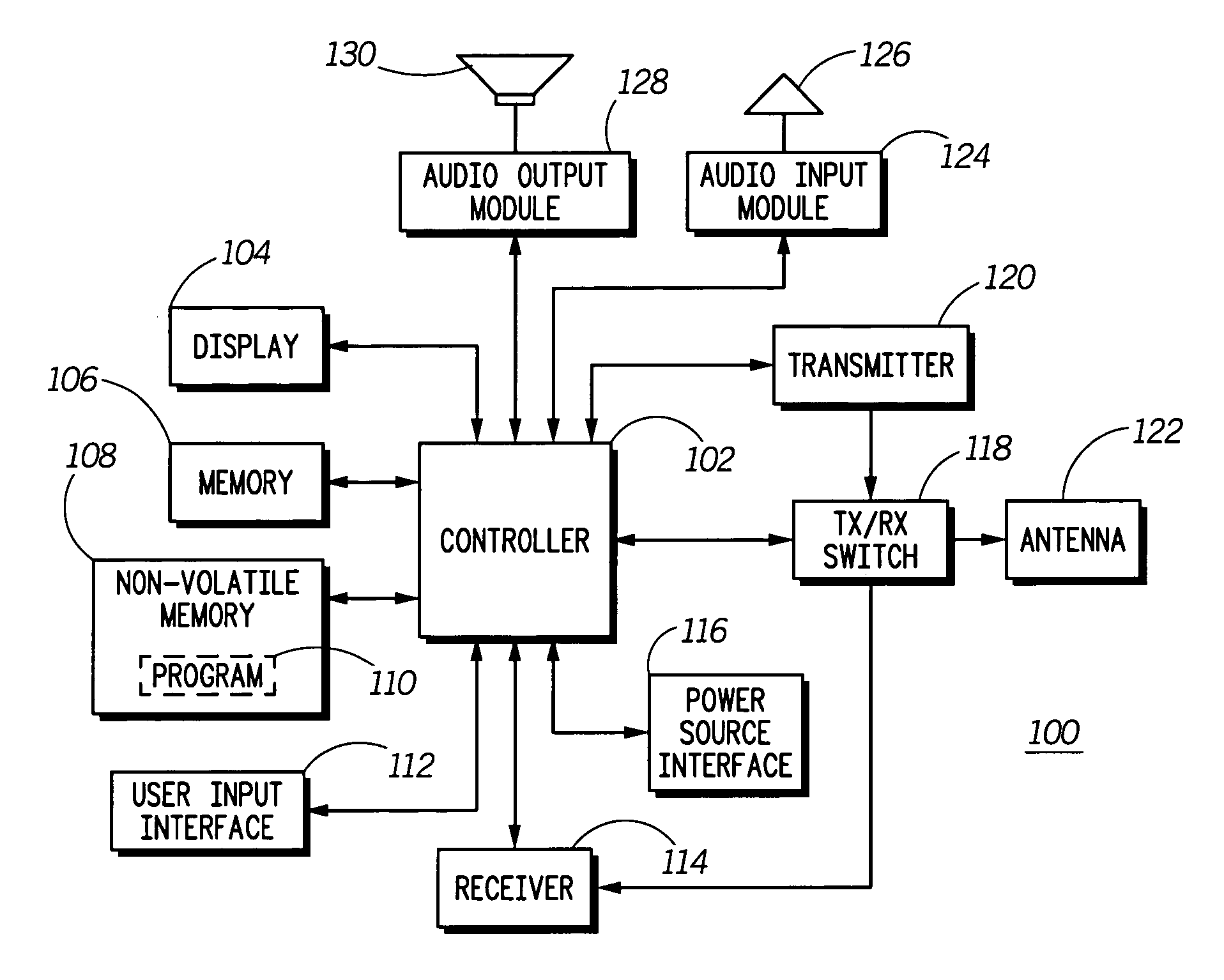

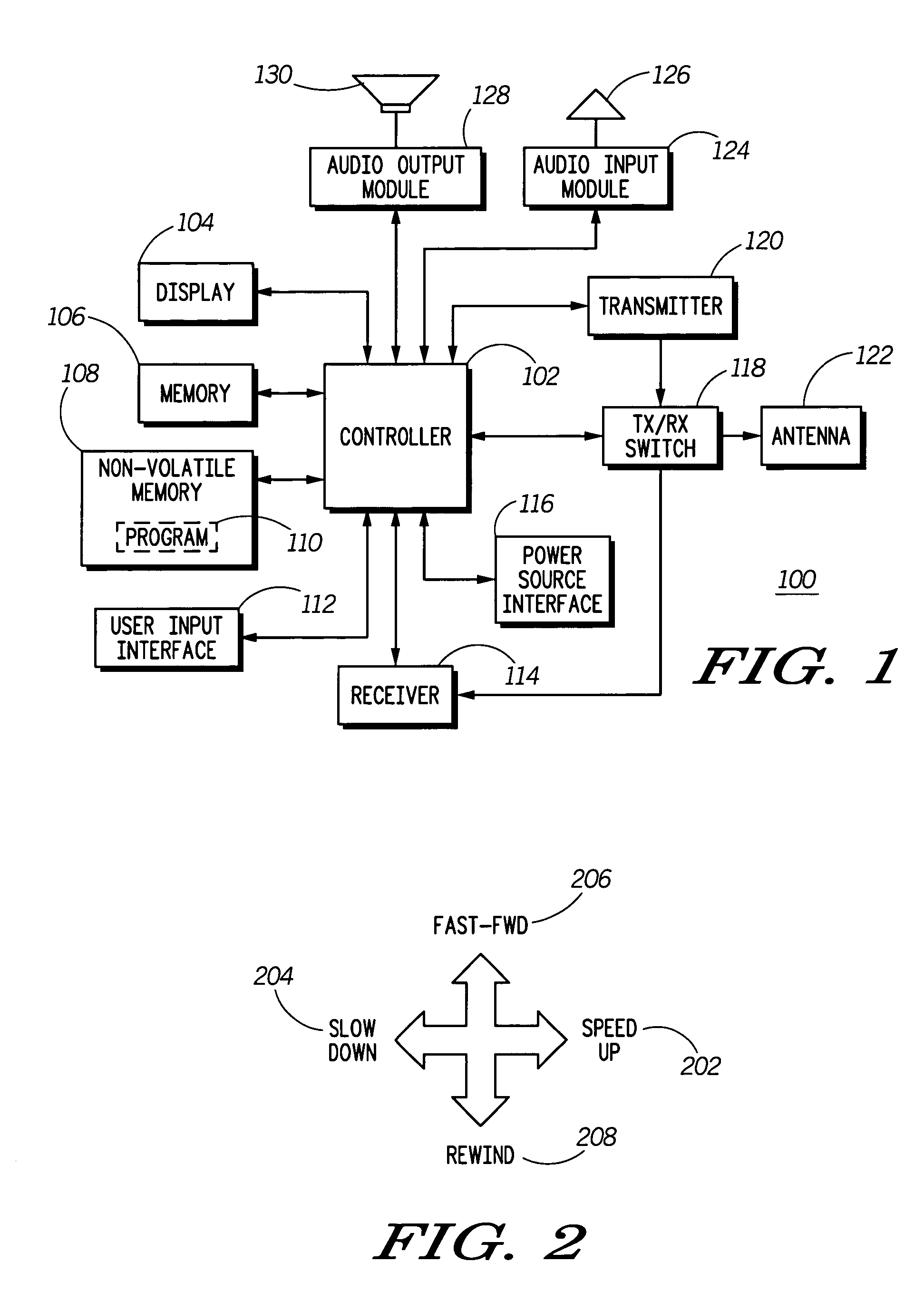

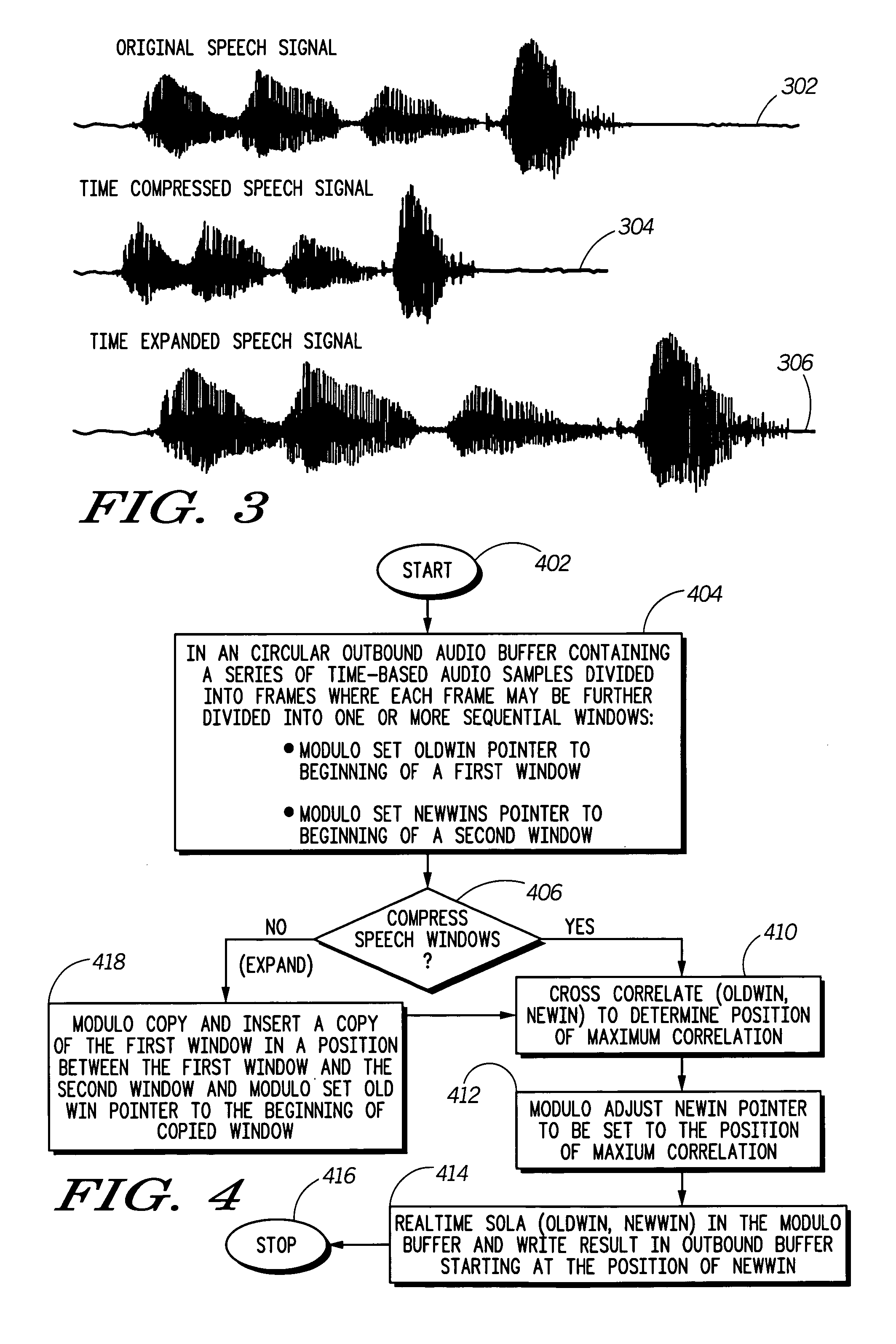

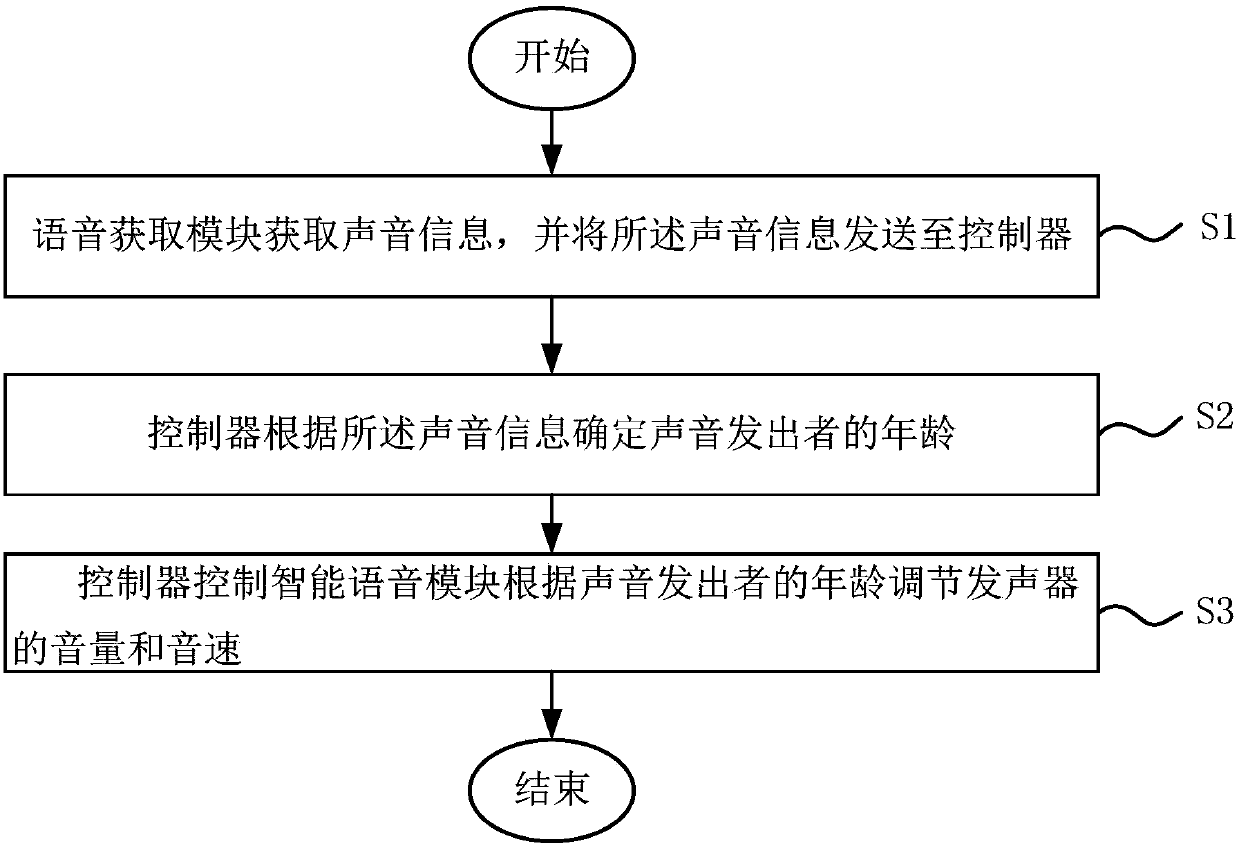

Synchronization and overlap method and system for single buffer speech compression and expansion

The present invention (110) permits a user to speed up and slow down speech without changing the speaker's pitch (102, 110, 112, 128, 402–416). It is a user-adjustable feature that changes the spoken rate to the listener's preferred listening rate or comfort. It can be included on the phone as a customer convenience feature, using soft key button (202) combinations (in interconnect or normal), without changing any characteristic of the speaker's voice other than the speaking rate. From the user's perspective, it would seem only that the talker changed his speaking rate, not that the speech was digitally altered in any way. The pitch and general prosody of the speaker are preserved. Uses of the time expansion/compression feature that complement existing technologies or applications in progress include messaging services, messaging applications and games, and a real-time feature to slow down the listening rate.

Owner:GOOGLE TECH HLDG LLC

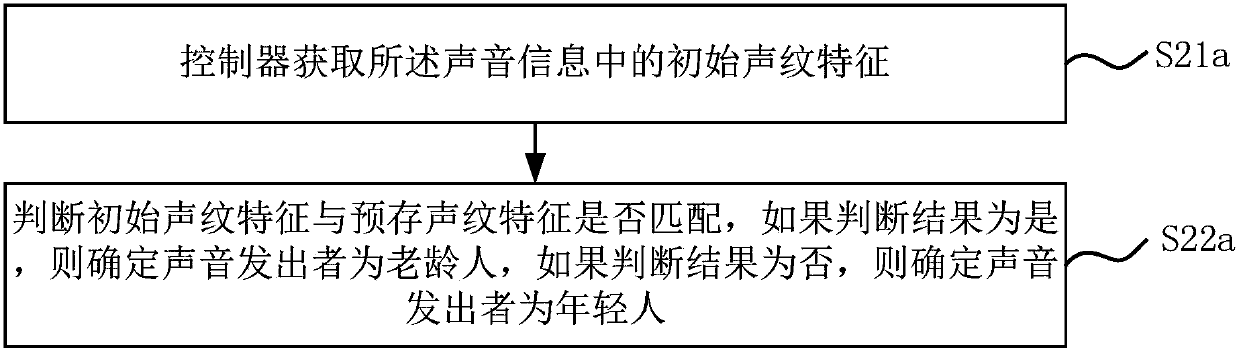

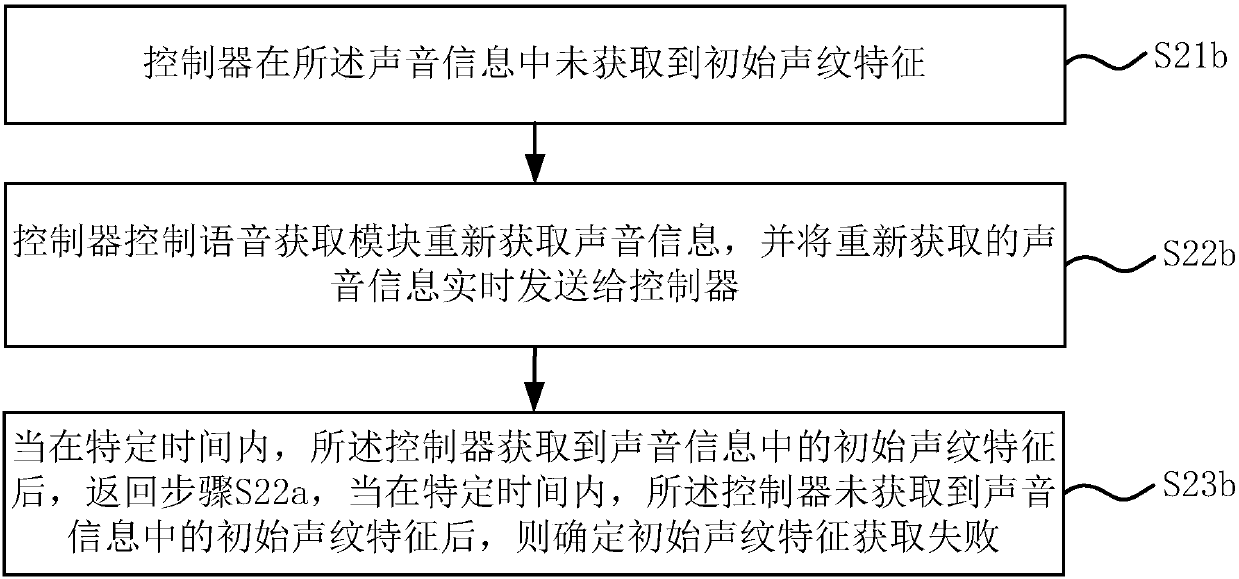

Method for adjusting voice based on user's age, and washing machine

Inactive | CN107656461A | Tags: Improve experience, Programme control, Computer control, Hearing perception, Computer science

The invention discloses a method for adjusting voice based on the user's age, and a washing machine. The method comprises the following steps: S1, a voice acquisition module acquires sound information and sends it to a controller; S2, the controller determines the age of the person speaking from the sound information; and S3, the controller controls an intelligent voice module to adjust the volume and speed of the sound produced by its speaker according to that person's age. With this method, the washing machine can select a sound speed and volume suited to the user's age, which is convenient for users, particularly benefits elderly people with poor hearing, and enhances the user experience.

Owner:QINGDAO HAIER WASHING MASCH CO LTD

Voice recognition method and device and computer storage medium

Inactive | CN109448711A | Tags: Improve recognition rate, Improve experience, Speech recognition, Acquiring/recognising facial features, Crowds, Speech sound

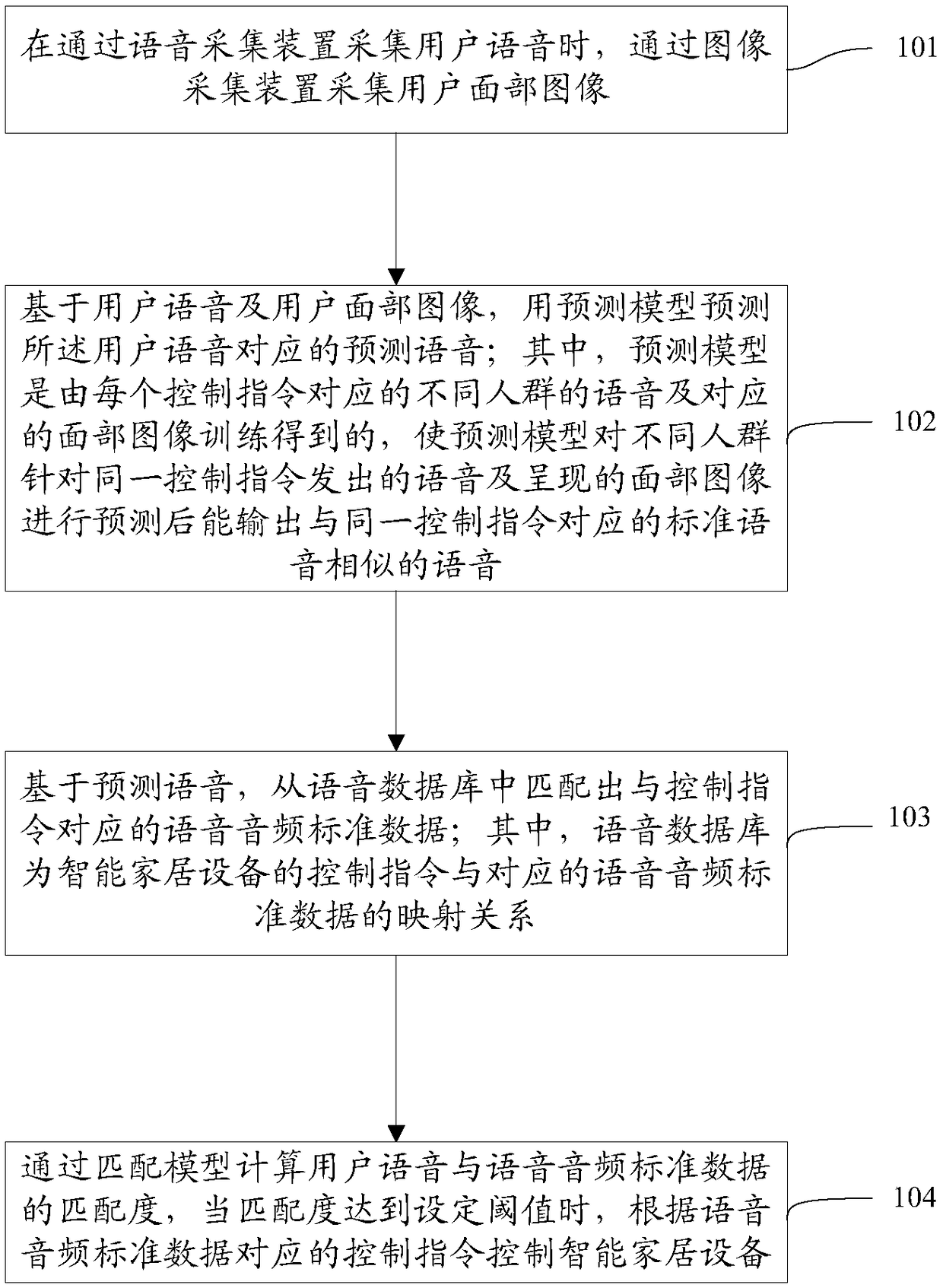

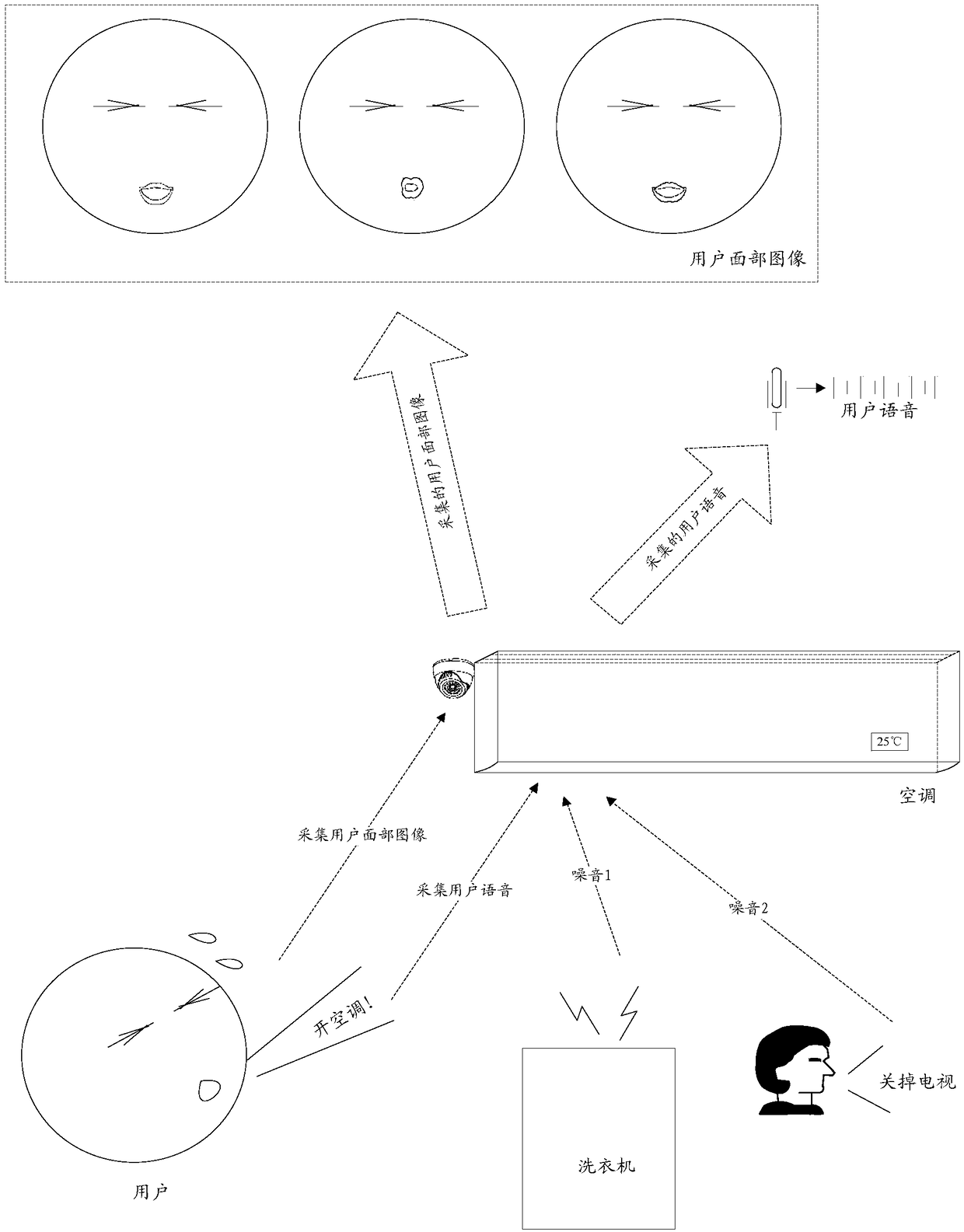

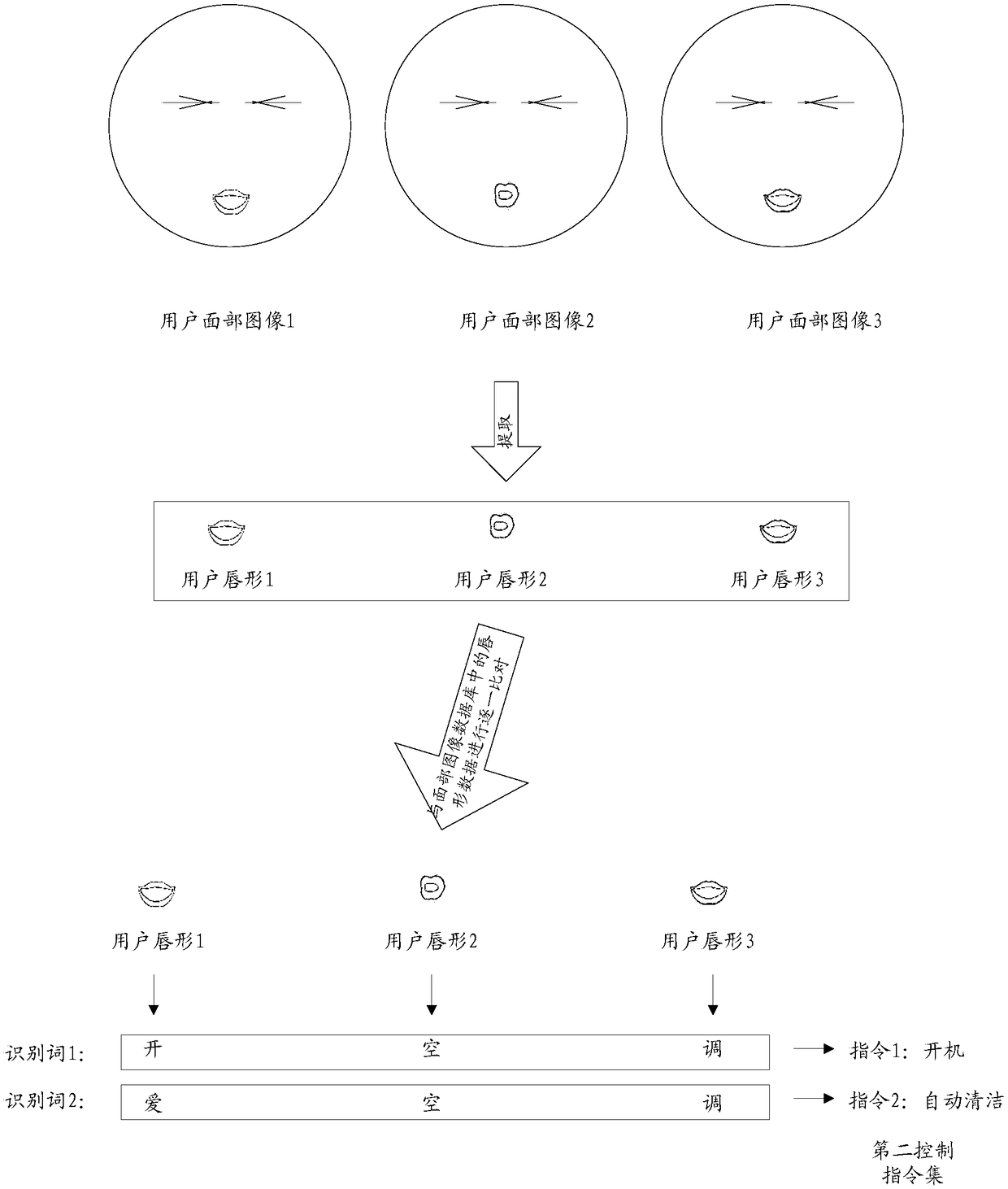

The invention discloses a voice recognition method and device and a computer storage medium, which address the technical problems of low speech recognition rates, inconvenience, and slowness in the prior art. The method comprises: collecting a facial image of the user through an image collecting device while collecting the user's voice through a voice collecting device; predicting a prediction voice corresponding to the user's voice with a prediction model based on the user's voice and facial image, wherein the prediction model is obtained by training on the voices of different people corresponding to each control instruction and the corresponding facial images; matching, based on the prediction voice, voice audio standard data corresponding to the control instruction in a voice database, wherein the voice database comprises a mapping relationship between control instructions and the corresponding voice audio standard data; and calculating, with a matching model, the degree of matching between the user's voice and the voice audio standard data, and controlling a smart home device according to the control instruction corresponding to the voice audio standard data when the matching degree reaches a set threshold.

Owner:GREE ELECTRIC APPLIANCES INC

Chinese speech recognition system based on heterogeneous model differentiated fusion

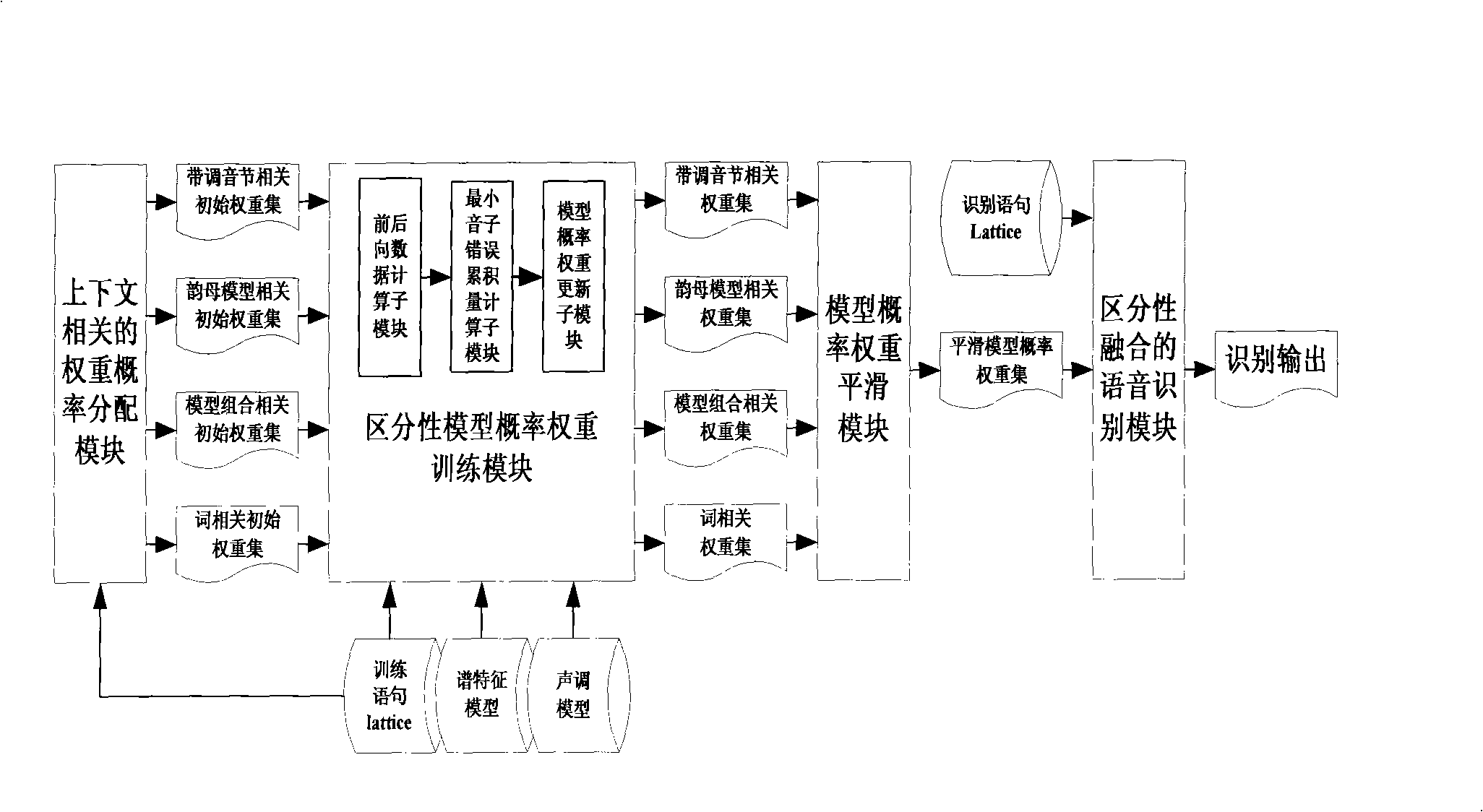

Inactive | CN101334998A | Tags: Improve recognition rate, Prevent overfitting, Speech recognition, Speech identification, Speech sound

The invention relates to a Chinese speech recognition system, in the field of speech recognition technology, based on the differential fusion of heterogeneous models. The system comprises a model-probability weight distribution module, a differential model-probability weight training module, a model-probability weight smoothing module, and a differential fusion speech recognition module. The weight distribution module generates the relevant model-probability weight sets for the linguistic context of every arc of a lattice and initializes them; the differential weight training module uses the minimum tone error criterion to differentially train the outputs of the heterogeneous models and obtain a minimum tone error cumulant, from which the differential model-probability weight sets are obtained; the weight smoothing module smooths the relevant model-probability weight sets input for each context; and the differential fusion speech recognition module performs speech recognition using the smoothed weight sets. The system can reduce the relative recognition error rate of speech recognition.

Owner:SHANGHAI JIAO TONG UNIV
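One common way to realize context-dependent fusion weights with smoothing is sketched below. It is an assumption, not the patent's specified method: each arc's score is taken to be a weighted sum of the log-probabilities the heterogeneous models assign to it, and a context's trained weights are interpolated toward global weights in proportion to how often that context was seen. The minimum-tone-error training step itself is not shown; the arc labels, scores and the constant `tau` are hypothetical.

```python
from typing import Dict, List, Sequence


def smooth_weights(context_weights: Sequence[float],
                   global_weights: Sequence[float],
                   count: int, tau: float = 10.0) -> List[float]:
    """Interpolate one context's trained weights toward global weights.

    lam = count / (count + tau): contexts with few training samples lean on
    the global weights, which limits overfitting. tau is a hypothetical constant.
    """
    lam = count / (count + tau)
    return [lam * c + (1.0 - lam) * g for c, g in zip(context_weights, global_weights)]


def fused_arc_score(model_log_probs: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of the log-probabilities each heterogeneous model gives an arc."""
    return sum(w * lp for w, lp in zip(weights, model_log_probs))


def best_arc(arcs: Dict[str, Sequence[float]], weights: Sequence[float]) -> str:
    """Among competing arcs, return the label with the highest fused score."""
    return max(arcs, key=lambda label: fused_arc_score(arcs[label], weights))


weights = smooth_weights([0.8, 0.2], [0.5, 0.5], count=10)  # approximately [0.65, 0.35]
arcs = {"shi4": [-1.0, -2.0], "shi2": [-1.5, -0.5]}  # hypothetical per-model log-probs
print(best_arc(arcs, weights))  # → shi2
```

With the unsmoothed context weights `[0.8, 0.2]` the same arcs would favour `shi4`, which illustrates how smoothing tempers a context whose weights were estimated from little data.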

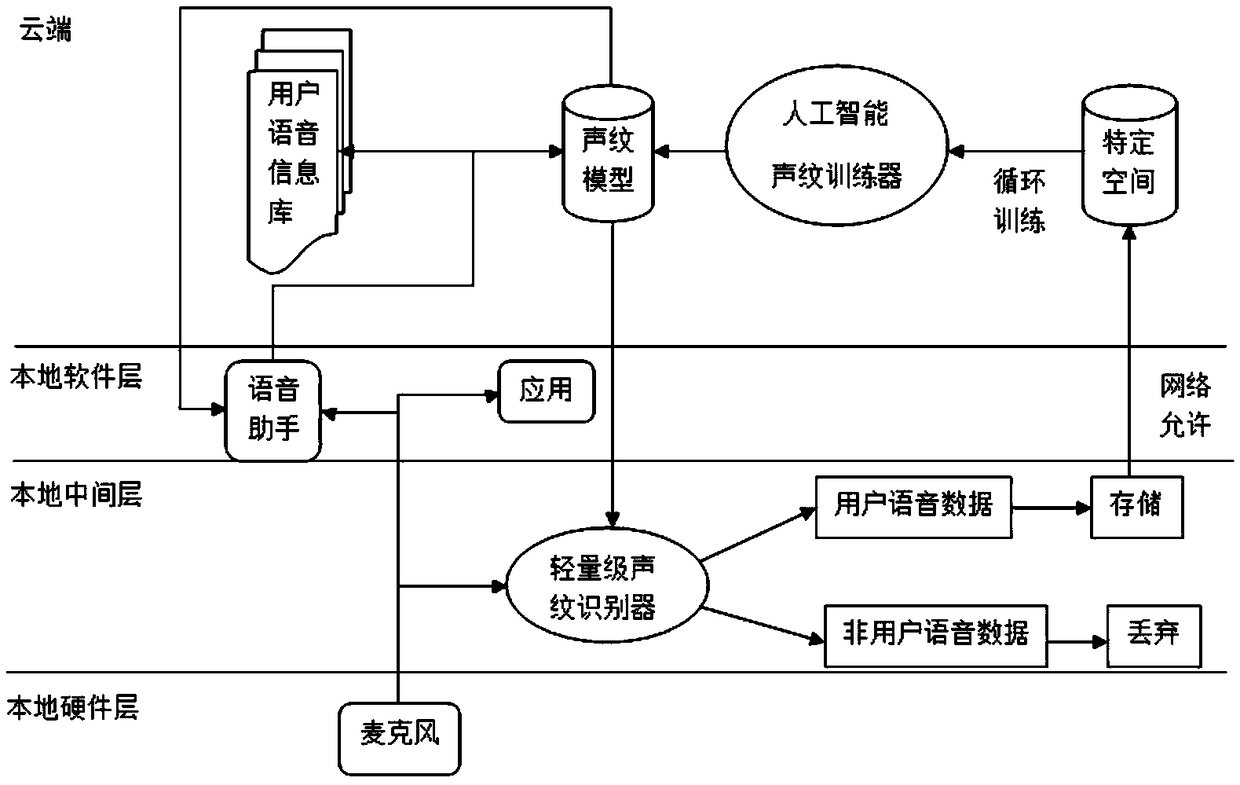

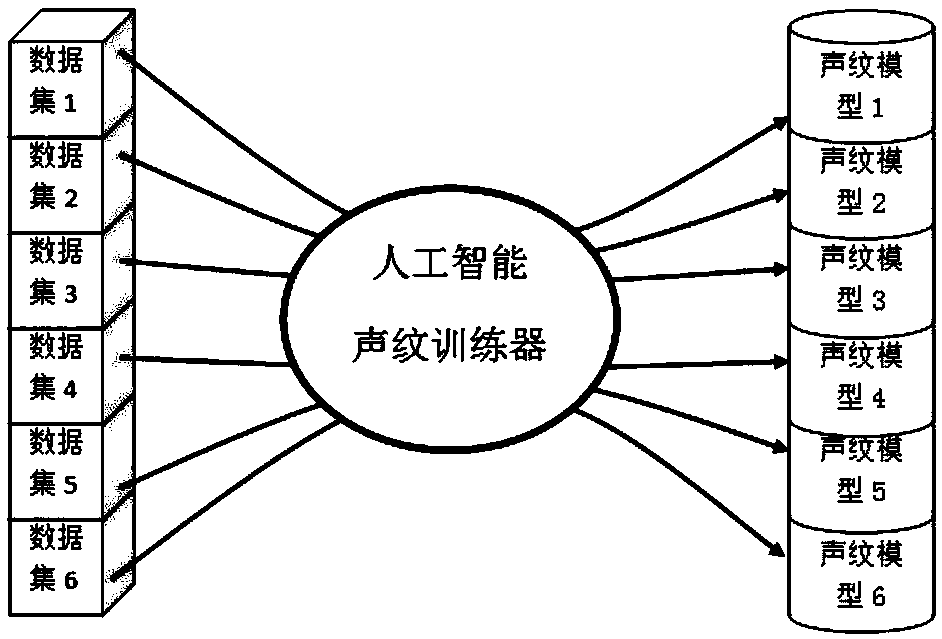

Speech recognition method and device

Active | CN109256136A | Improve experience | Improve recognition rate | Speech recognition | Feature extraction | Speech identification

The invention provides a speech recognition method and a speech recognition device applied to intelligent equipment. The method comprises: collecting speech data of a user, extracting voiceprint information from the speech data, and training on all extracted voiceprint information with a voiceprint trainer to obtain speech characteristic information and a voiceprint model of the user; and, when a voice command from the user is received, converting the command according to the user's speech characteristic information and voiceprint model to obtain a voice command that conforms to the command format required by the intelligent equipment. By taking the user's speech characteristics into account, the method and device can extract the user's actual intended expression and improve the accuracy of speech recognition.

Owner:SAMSUNG ELECTRONICS CHINA R&D CENT +1
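The enrollment-then-conversion flow can be sketched as below, under loud assumptions: utterances are already embedded as fixed-length vectors, a voiceprint model is simply the mean embedding, speakers are identified by cosine similarity, and the "conversion" into the device's command format is a hypothetical per-user phrasebook lookup. None of these choices come from the abstract; `enroll`, `identify`, `normalize_command` and the 0.8 threshold are illustrative names and values.

```python
import math
from typing import Dict, List, Optional, Sequence


def enroll(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Average several per-utterance embeddings into one voiceprint model."""
    n = len(embeddings)
    return [sum(dim) / n for dim in zip(*embeddings)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(embedding: Sequence[float], voiceprints: Dict[str, List[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Return the enrolled user whose voiceprint is most similar, or None
    if no similarity reaches the (hypothetical) threshold."""
    best_user, best_score = None, threshold
    for user, model in voiceprints.items():
        score = cosine(embedding, model)
        if score >= best_score:
            best_user, best_score = user, score
    return best_user


def normalize_command(user: Optional[str], utterance: str,
                      phrasebooks: Dict[str, Dict[str, str]]) -> str:
    """Map a user's habitual phrasing onto the device's command format;
    unknown users or phrases pass through unchanged."""
    if user is None:
        return utterance
    return phrasebooks.get(user, {}).get(utterance, utterance)


voiceprints = {"alice": enroll([[1.0, 0.0], [0.8, 0.2]]), "bob": enroll([[0.1, 0.9]])}
speaker = identify([0.95, 0.05], voiceprints)
print(normalize_command(speaker, "make it cooler",
                        {"alice": {"make it cooler": "AC_TEMP_DOWN"}}))  # → AC_TEMP_DOWN
```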

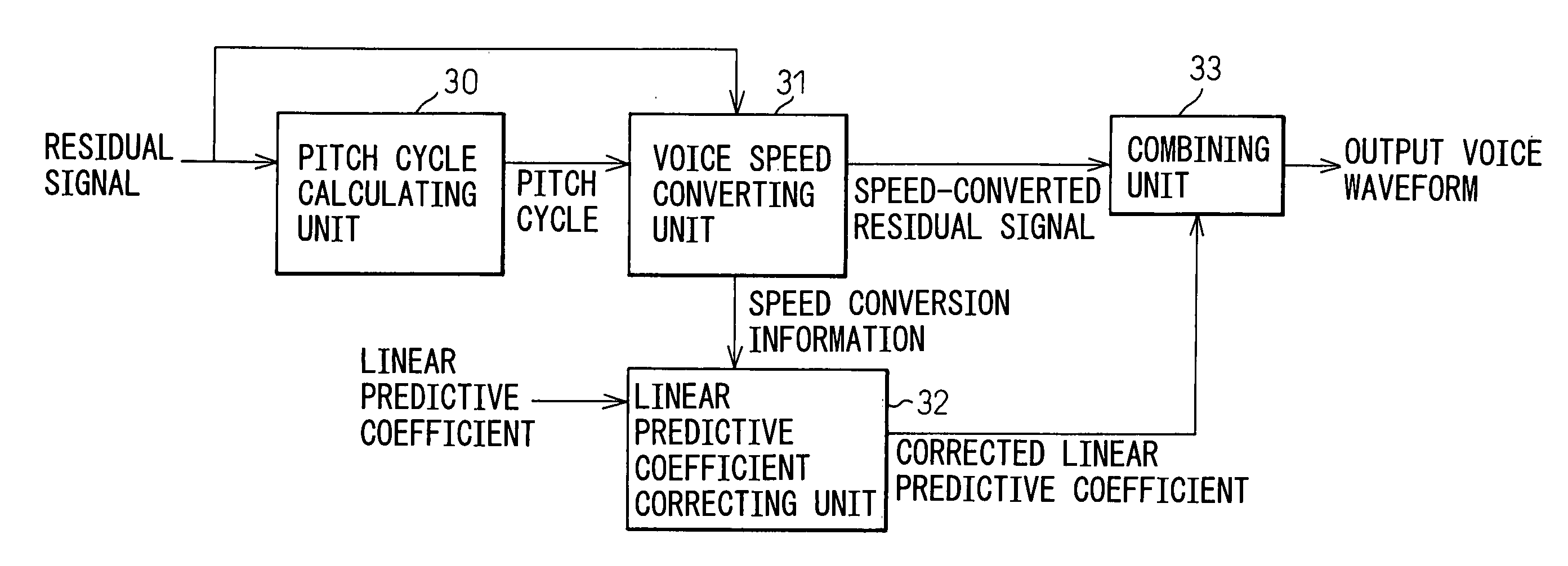

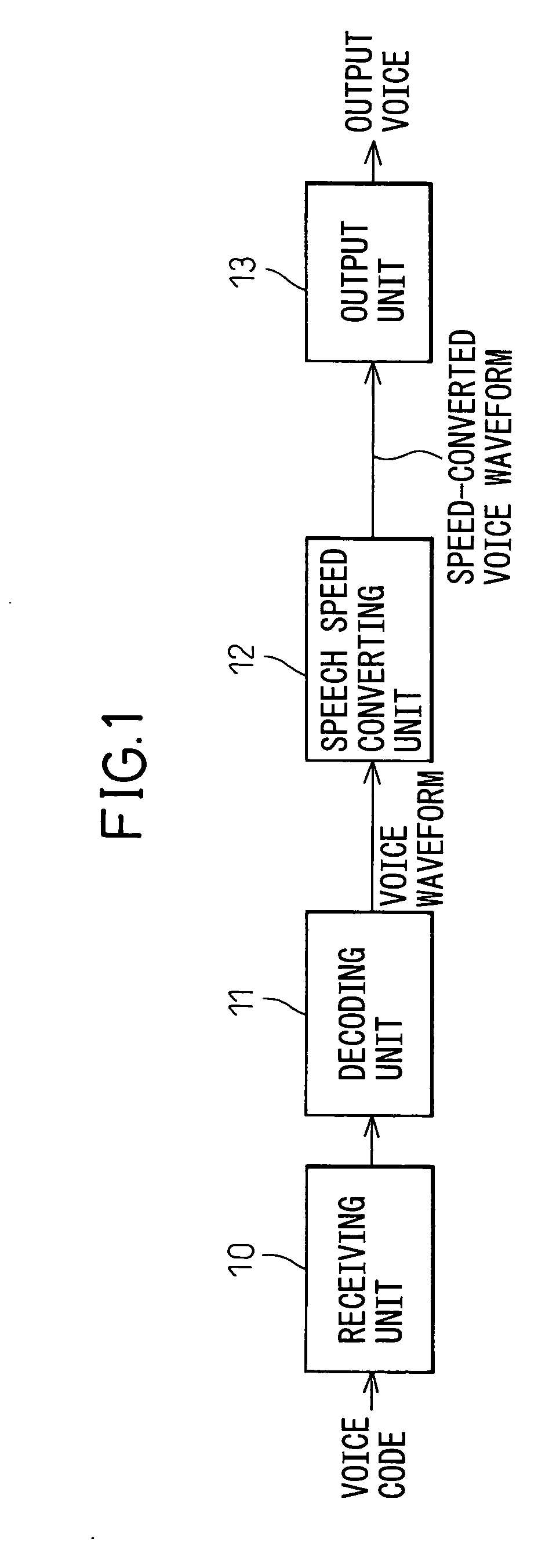

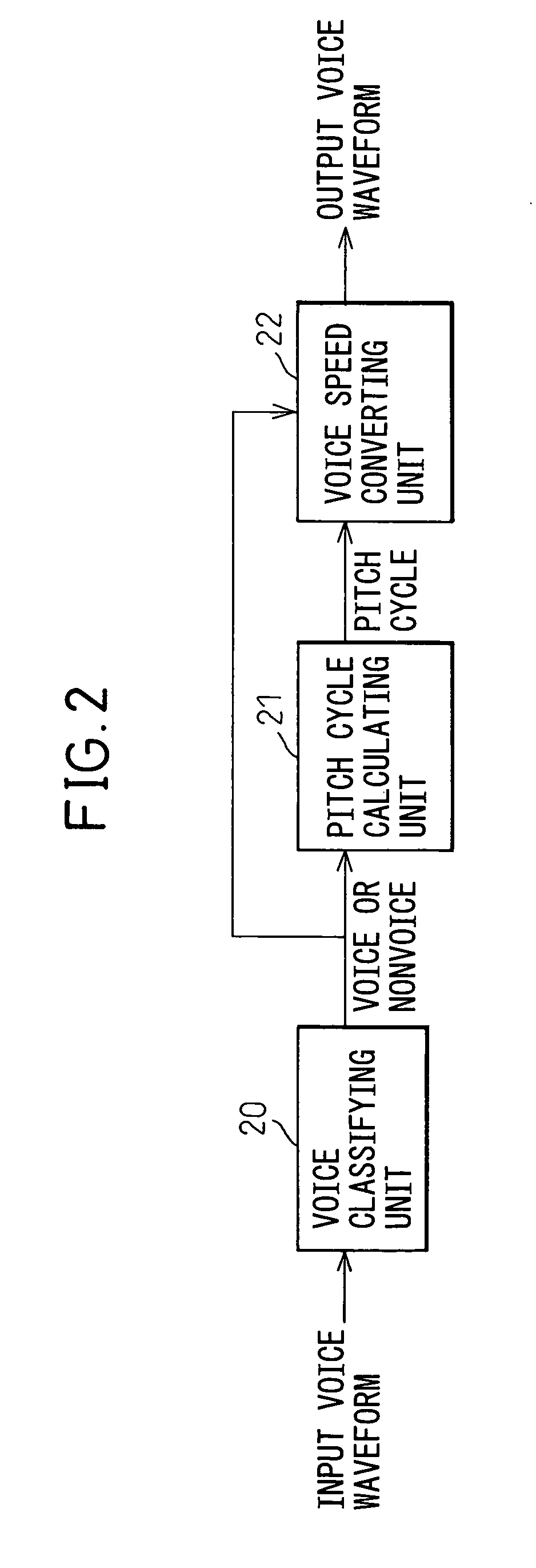

Speech speed converting device and speech speed converting method

Inactive | US20060293883A1 | Quality improvement | No degradation of voice quality | Speech analysis | Speech code | Linearity

The invention relates to speech speed conversion and provides a speech speed converting device and a speech speed converting method that change the speed of a signal containing voice without degrading voice quality or altering its characteristics. The device includes: a voice classifying unit that receives voice waveform data and a voice code based on linear prediction, and classifies the input signal according to its characteristics; and a speed adjusting unit that, based on the classification, selects one or both of a speed conversion process operating on the voice waveform and a speed conversion process operating on the voice code, and changes the speech speed of the input signal using the selected method.

Owner:FUJITSU CONNECTED TECH LTD
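A basic waveform-domain speed change, together with a simple frame classifier of the kind that could drive the choice of method, is sketched below. It is an illustration under stated assumptions: the patent classifies the input to choose between waveform-based and linear-prediction-code-based conversion, but the energy and zero-crossing thresholds here are hypothetical, and plain overlap-add (OLA) stands in for the waveform path. OLA roughly preserves pitch while changing duration, though it can sound rough, which is why refinements such as WSOLA exist. The code-domain path is not sketched.

```python
import math
from typing import List, Sequence


def classify_frame(frame: Sequence[float], energy_thr: float = 1e-3,
                   zcr_thr: float = 0.3) -> str:
    """Label a frame 'silence', 'unvoiced' or 'voiced' from its mean energy
    and zero-crossing rate (thresholds are hypothetical)."""
    energy = sum(s * s for s in frame) / len(frame)
    if energy < energy_thr:
        return "silence"
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    zcr = crossings / (len(frame) - 1)
    return "unvoiced" if zcr > zcr_thr else "voiced"


def ola_time_stretch(x: Sequence[float], rate: float,
                     frame_len: int = 256, hop_out: int = 64) -> List[float]:
    """Change speed by overlap-add: read Hann-windowed frames every
    hop_in = hop_out * rate input samples and write them every hop_out
    output samples. rate > 1 speeds speech up; rate < 1 slows it down."""
    hop_in = max(1, round(hop_out * rate))
    win = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_len) for i in range(frame_len)]
    n_frames = max(1, (len(x) - frame_len) // hop_in + 1)
    out_len = (n_frames - 1) * hop_out + frame_len
    y = [0.0] * out_len
    norm = [0.0] * out_len  # summed window weight, used to undo amplitude ripple
    for f in range(n_frames):
        a, s = f * hop_in, f * hop_out
        for i in range(frame_len):
            sample = x[a + i] if a + i < len(x) else 0.0  # zero-pad past the end
            y[s + i] += win[i] * sample
            norm[s + i] += win[i]
    return [v / w if w > 1e-8 else 0.0 for v, w in zip(y, norm)]


tone = [0.5 * math.sin(2 * math.pi * 200 * n / 16000) for n in range(16000)]  # 1 s at 16 kHz
print(classify_frame(tone[:400]))             # → voiced
print(len(ola_time_stretch(tone, rate=2.0)))  # → 8128 (about half the input length)
```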

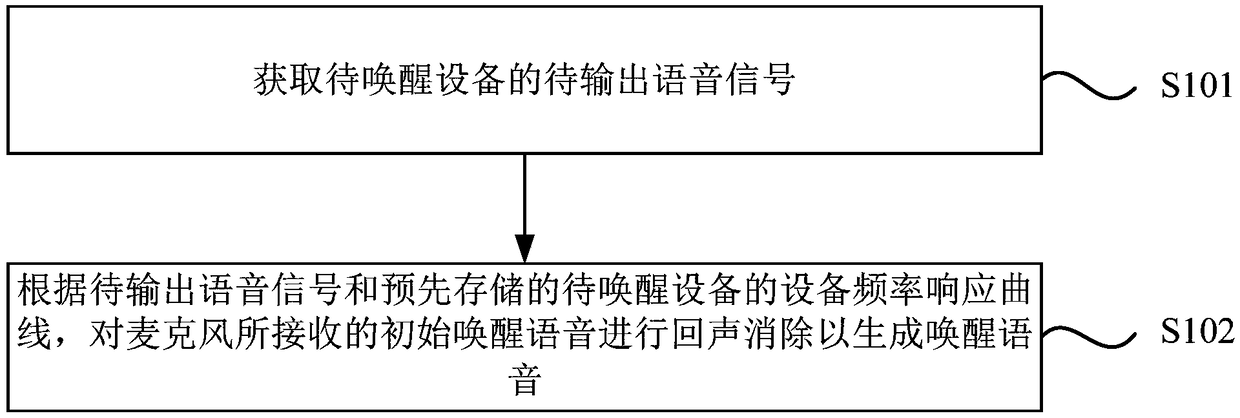

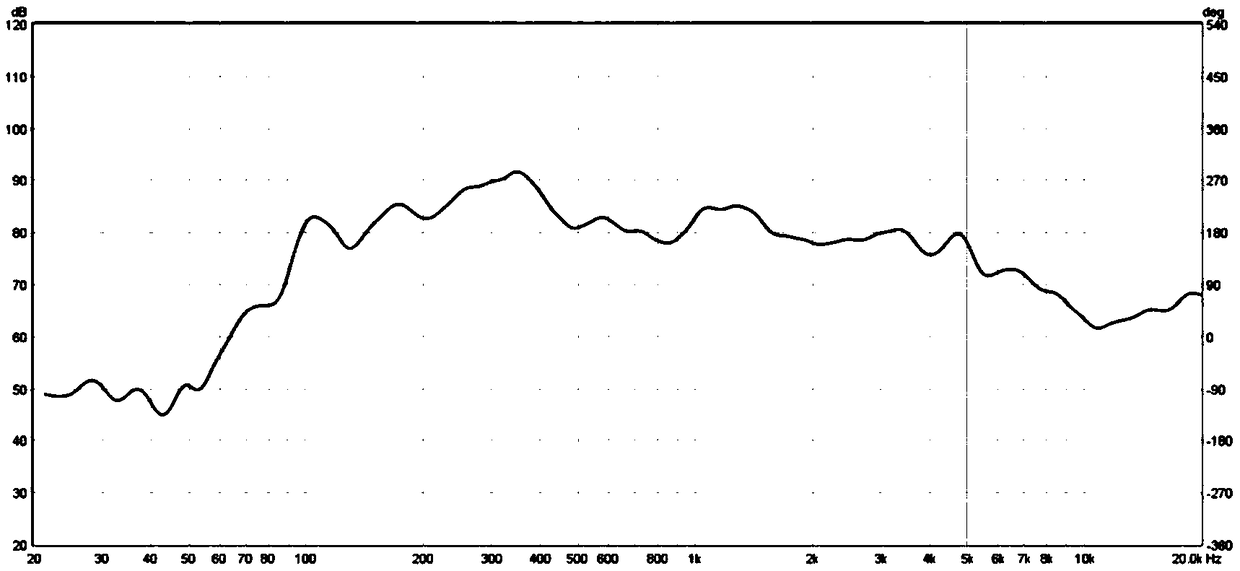

Echo cancellation method, device, medium, speech wake-up method and device

Active | CN109360562A | Improve echo cancellation effect | Improve voice wake-up effect | Speech recognition | Speech sound | Frequency response

The embodiments of the present invention disclose an echo cancellation method, an echo cancellation device, a medium, a speech wake-up method and a speech wake-up device. The echo cancellation method includes: obtaining the to-be-output speech signal of a device to be woken up; and performing echo cancellation on the initial wake-up speech received by a microphone according to the to-be-output speech signal and a pre-stored frequency response curve of the device, so as to generate the wake-up speech. The method addresses a technical problem in the prior art, namely that echo cancellation methods generally fail to completely eliminate the echo that played-back signals leave in the initial wake-up speech. It thereby improves echo cancellation and increases the success rate of speech wake-up.

Owner:SHENZHEN SKYWORTH RGB ELECTRONICS CO LTD
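The core idea (predict the echo from the to-be-output signal and the device's stored response, then subtract it from the microphone signal) can be sketched as follows. This is a minimal illustration, assuming the stored "frequency response curve" is a complex N-point spectrum of the loudspeaker-to-microphone path and that the playback and microphone signals are sample-aligned; a product curve might store magnitude only, and practical systems usually add adaptive filtering for residual echo. The function names are hypothetical.

```python
import cmath
from typing import List, Sequence


def impulse_from_frequency_response(H: Sequence[complex]) -> List[float]:
    """Inverse DFT of an N-point frequency response; the real part is kept,
    which is exact when H is the spectrum of a real impulse response."""
    N = len(H)
    return [(sum(H[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N).real
            for n in range(N)]


def convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Causal FIR filtering of x by h, truncated to len(x) samples."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        y[n] = sum(h[k] * x[n - k] for k in range(min(len(h), n + 1)))
    return y


def cancel_echo(mic: Sequence[float], playback: Sequence[float],
                H: Sequence[complex]) -> List[float]:
    """Predict the echo of the to-be-output signal through the device's stored
    response and subtract it from the microphone signal."""
    echo = convolve(playback, impulse_from_frequency_response(H))
    return [m - e for m, e in zip(mic, echo)]


# Hypothetical device path with a 4-tap impulse response, stored as its DFT.
h_true = [0.5, 0.25, 0.0, 0.0]
H = [sum(h_true[n] * cmath.exp(-2j * cmath.pi * k * n / 4) for n in range(4)) for k in range(4)]
playback = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
speech = [0.0, 0.1, 0.2, 0.0, 0.0, 0.0, 0.3, 0.0]
mic = [s + e for s, e in zip(speech, convolve(playback, h_true))]
cleaned = cancel_echo(mic, playback, H)  # equals `speech` up to rounding error
```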

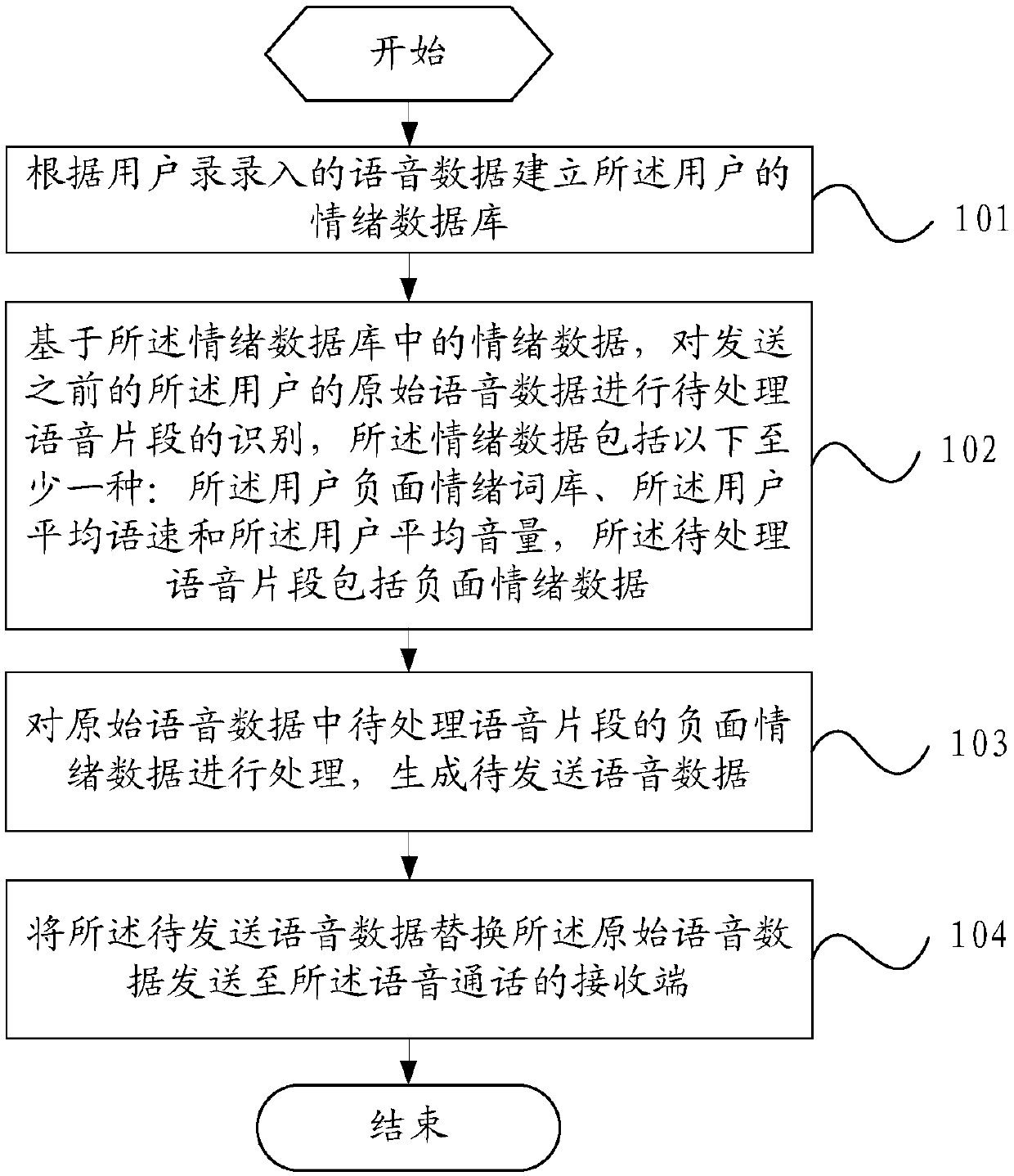

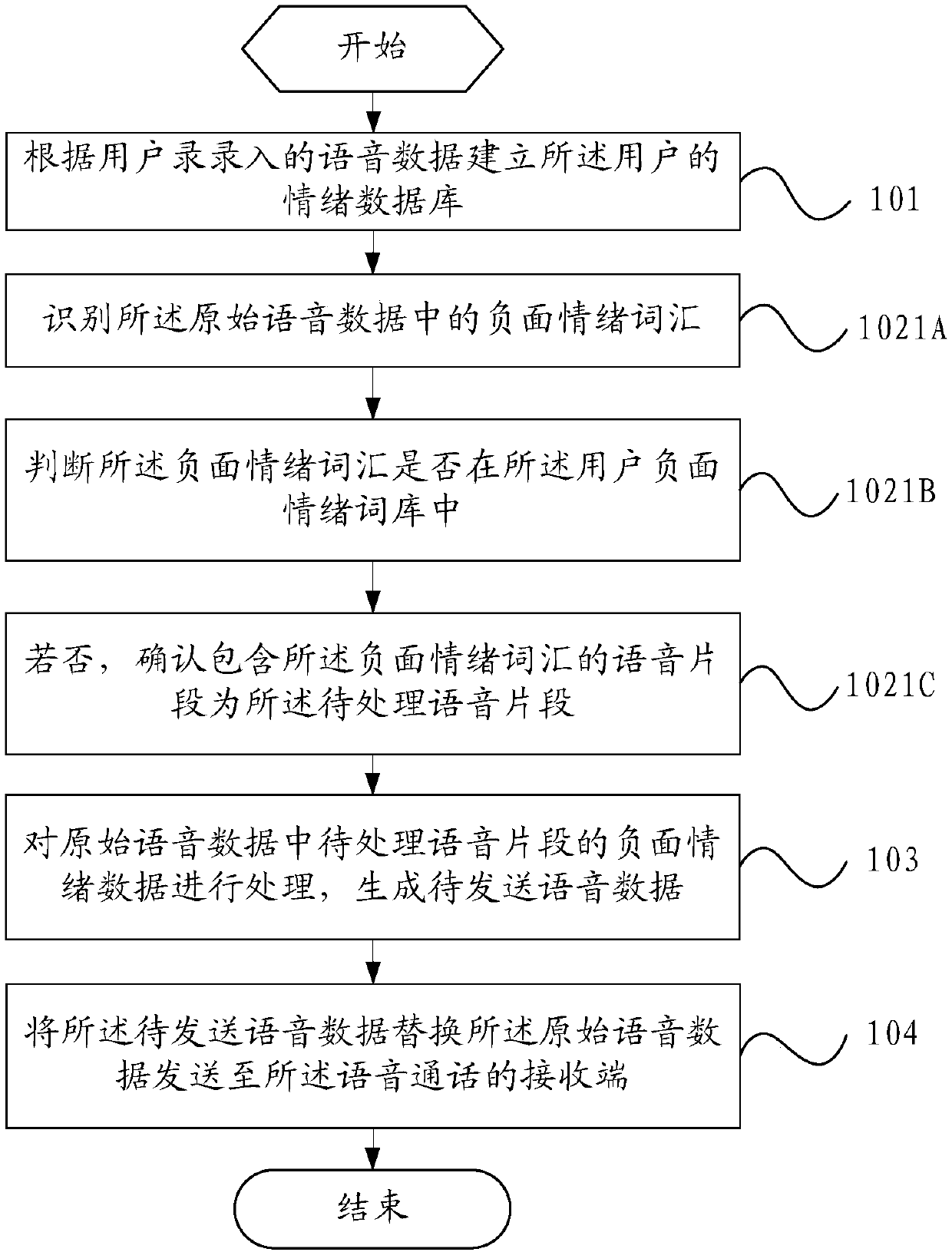

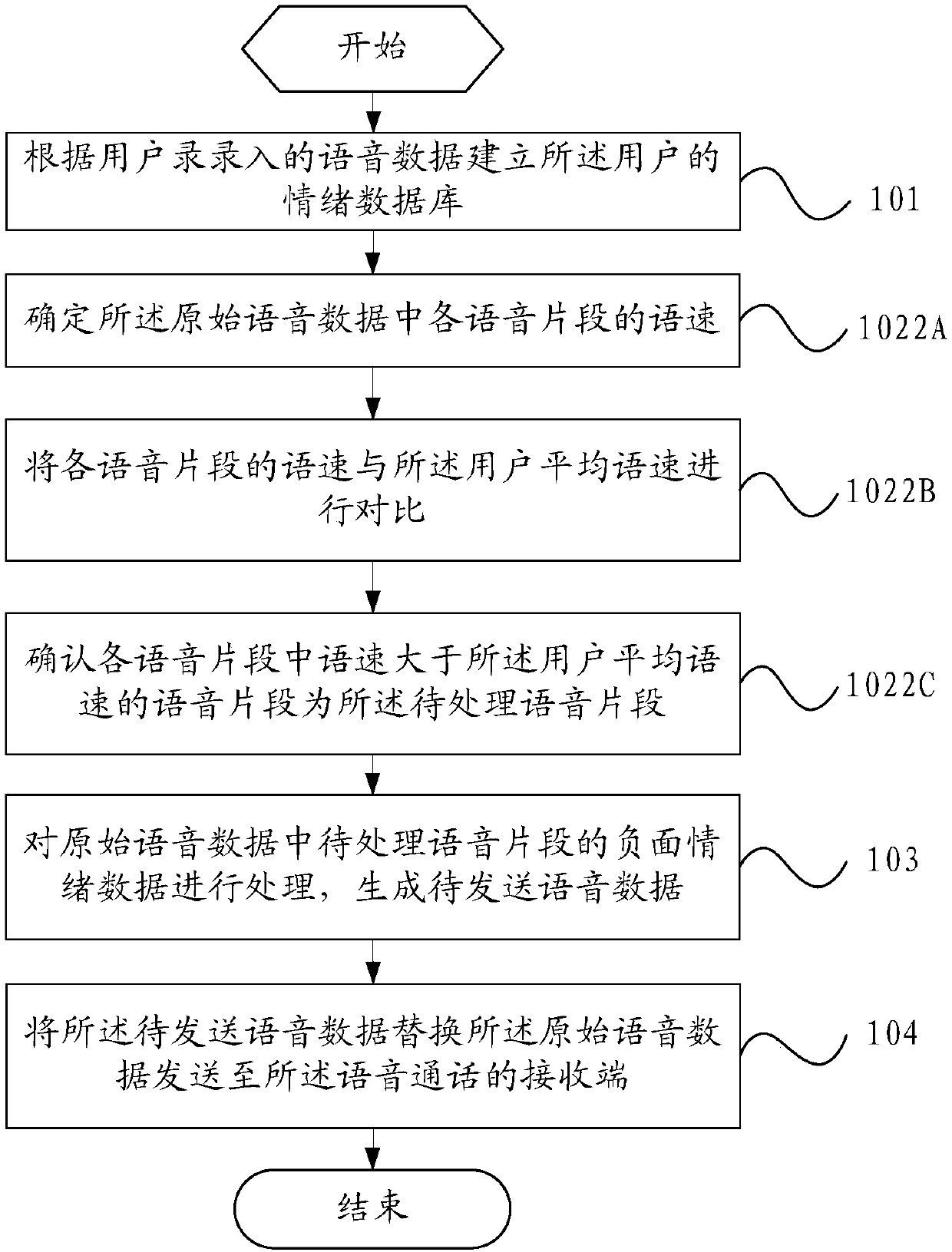

Method of processing emotion in voice, and mobile terminal

Active | CN107919138A | Avoid receiving | Improve communication efficiency | Speech analysis | Voice communication | Computer terminal

The invention provides a method of processing emotion in voice and a mobile terminal. The method includes: establishing an emotion database for the user from voice data the user has entered; based on the emotion data in that database, identifying voice clips to be processed in the user's original voice data before it is sent, wherein the emotion data includes at least one of the following: a bank of the user's negative-emotion-inducing words, the user's average speech speed, and the user's average volume, and the voice clips to be processed contain negative-emotion-inducing data; processing the negative-emotion-inducing data in those clips to generate the voice data to be transmitted; and replacing the original voice data with the voice data to be transmitted and sending it to the receiving terminal of the voice communication. By processing emotion in the voice data before it is sent, the method and mobile terminal prevent the receiving party from receiving voice data that is not conducive to communication, thereby improving communication efficiency.

Owner:VIVO MOBILE COMM CO LTD
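The identification step can be sketched on the three kinds of emotion data the abstract lists: a negative word bank, the user's average speed and the user's average volume. This is a hypothetical simplification that works on clip transcripts plus per-clip speed and volume figures rather than on audio; the speed factor, volume margin, units and the "mask and cap" treatment in `process` are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Clip:
    text: str      # transcript of the clip
    speed: float   # hypothetical unit: syllables per second
    volume: float  # hypothetical unit: level in dB


@dataclass
class EmotionProfile:
    negative_words: Set[str]  # lower-case negative-emotion-inducing words
    avg_speed: float
    avg_volume: float


def build_profile(history: List[Clip], negative_words: Set[str]) -> EmotionProfile:
    """Derive the user's average speed and volume from past clips."""
    n = len(history)
    return EmotionProfile({w.lower() for w in negative_words},
                          sum(c.speed for c in history) / n,
                          sum(c.volume for c in history) / n)


def needs_processing(clip: Clip, profile: EmotionProfile,
                     speed_factor: float = 1.3, volume_margin_db: float = 6.0) -> bool:
    """Flag a clip that contains a negative word or is much faster or louder
    than the user's average (factor and margin are hypothetical)."""
    words = set(clip.text.lower().split())
    return (bool(words & profile.negative_words)
            or clip.speed > profile.avg_speed * speed_factor
            or clip.volume > profile.avg_volume + volume_margin_db)


def process(clip: Clip, profile: EmotionProfile) -> Clip:
    """Hypothetical treatment: mask negative words and cap speed and volume
    at the user's averages."""
    text = " ".join("***" if w.lower() in profile.negative_words else w
                    for w in clip.text.split())
    return Clip(text, min(clip.speed, profile.avg_speed),
                min(clip.volume, profile.avg_volume))


profile = build_profile([Clip("hello", 4.0, 60.0), Clip("ok", 5.0, 62.0)], {"stupid"})
outgoing = [Clip("that is stupid", 4.0, 60.0), Clip("see you soon", 4.6, 62.0)]
to_send = [process(c, profile) if needs_processing(c, profile) else c for c in outgoing]
print(to_send[0].text)  # → that is ***
```

Only flagged clips are replaced, so ordinary speech reaches the receiver unchanged, matching the abstract's "replace, then send" order.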

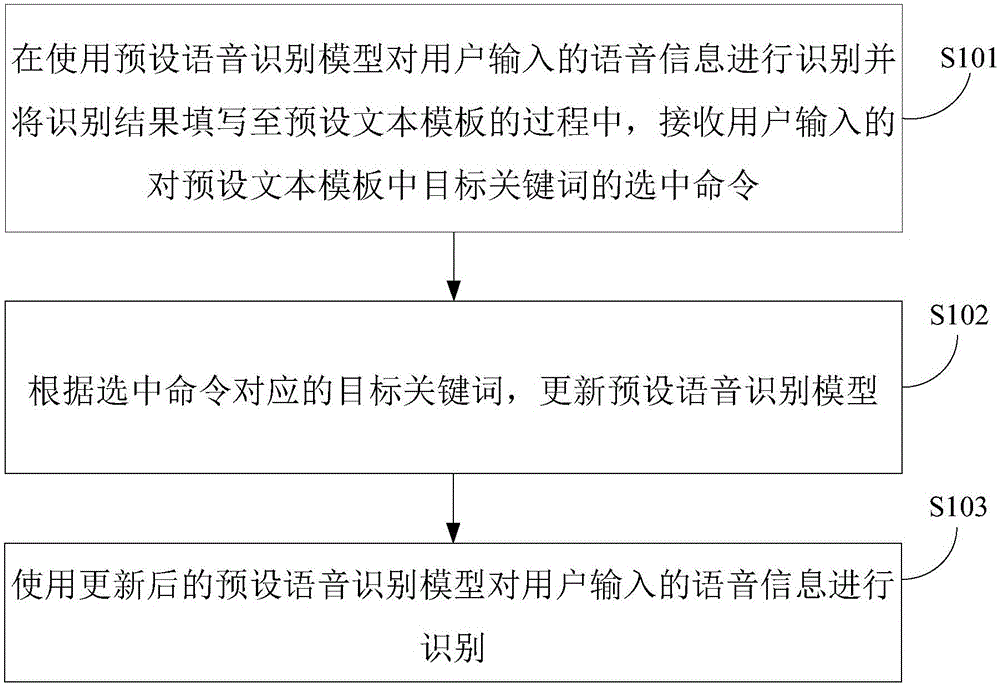

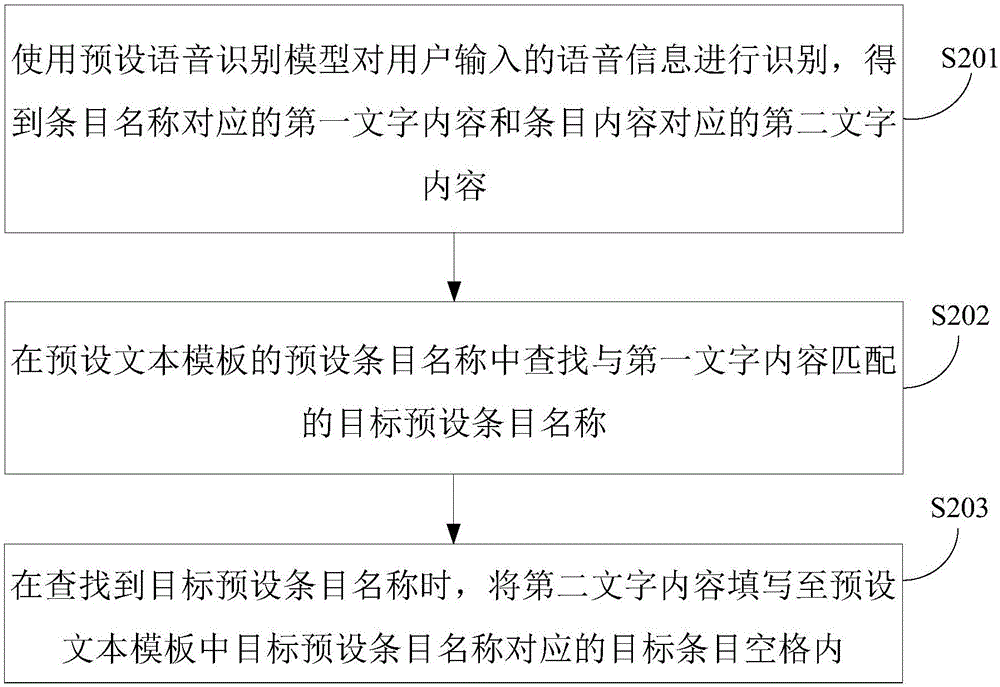

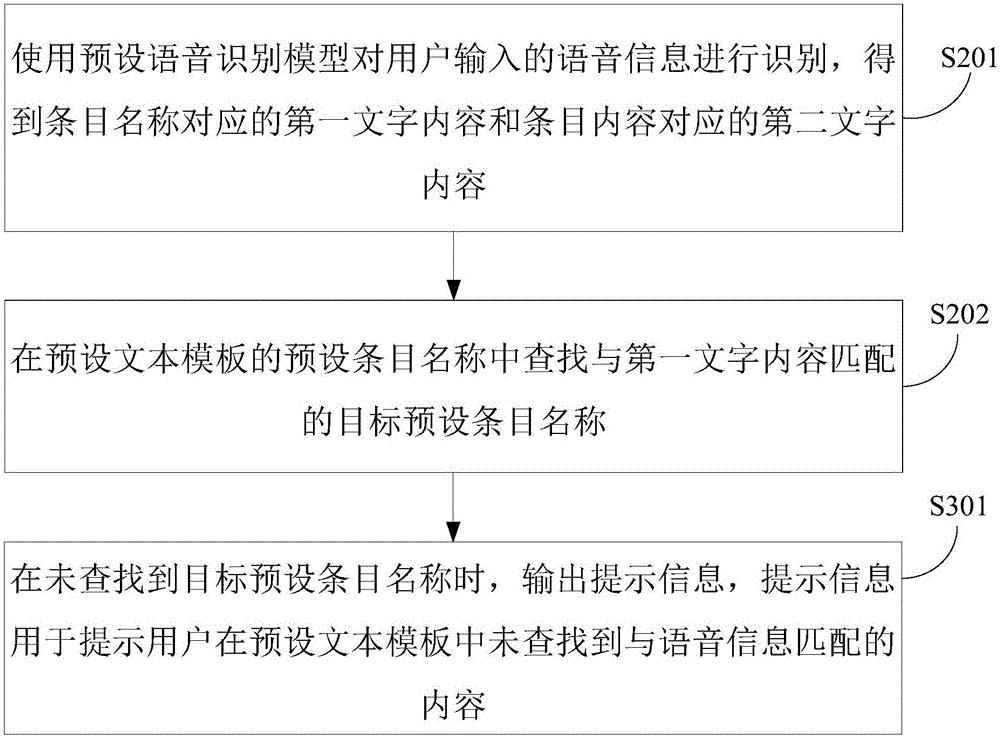

Speech recognition method and apparatus

The invention provides a speech recognition method and apparatus. The method comprises: while a preset speech recognition model is recognizing speech input by a user and the recognition result is being filled into a preset text template, receiving an instruction from the user selecting keywords in the template; updating the preset speech recognition model according to the keywords corresponding to the selection instruction; and using the updated model to recognize the user's speech input. With this scheme, the user can update the preset speech recognition model more conveniently, which helps ensure the success rate of speech recognition.

Owner:BEIJING UNISOUND INFORMATION TECH
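One simple way to "update the model" with user-selected keywords is to add a score bonus for those words when rescoring recognition hypotheses, sketched below. This is an assumption, not the patent's disclosed update procedure: the toy unigram model, the unnormalized log-domain bonus of 3.0 and the n-best acoustic scores are all hypothetical.

```python
import math
from typing import Dict, Iterable, Sequence, Tuple


class KeywordBoostedScorer:
    """Toy unigram language model whose scores can be boosted for keywords
    the user selects (a hypothetical stand-in for updating the preset model)."""

    def __init__(self, unigram_probs: Dict[str, float], oov_prob: float = 1e-6):
        self.probs = {w.lower(): p for w, p in unigram_probs.items()}
        self.oov_prob = oov_prob
        self.bonus: Dict[str, float] = {}

    def update_with_keywords(self, keywords: Iterable[str], bonus: float = 3.0) -> None:
        """Add a log-domain bonus for every selected keyword."""
        for kw in keywords:
            self.bonus[kw.lower()] = bonus

    def score(self, words: Sequence[str]) -> float:
        """Sum of log-probabilities plus any keyword bonuses."""
        total = 0.0
        for w in words:
            w = w.lower()
            total += math.log(self.probs.get(w, self.oov_prob)) + self.bonus.get(w, 0.0)
        return total

    def rescore(self, hypotheses: Sequence[Tuple[str, float]],
                lm_weight: float = 1.0) -> str:
        """Return the hypothesis text with the best acoustic + weighted LM score."""
        return max(hypotheses,
                   key=lambda h: h[1] + lm_weight * self.score(h[0].split()))[0]


scorer = KeywordBoostedScorer({"call": 0.1, "mom": 0.05, "tom": 0.01})
nbest = [("call mom", -10.0), ("call tom", -10.5)]  # (text, acoustic log-score)
print(scorer.rescore(nbest))  # → call mom
scorer.update_with_keywords(["Tom"])  # the user selects "Tom" in the template
print(scorer.rescore(nbest))  # → call tom
```

Keeping the boost separate from the base probabilities makes the update cheap and reversible, which fits the abstract's emphasis on letting the user adjust the model conveniently.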

© 2025 PatSnap. All rights reserved.