Virtual figure expression driving method and system

A virtual character expression driving method and system, applied in the field of virtual character expression driving. It addresses the problems that existing production methods cannot be used in real-time interactive environments, cannot meet market demand, and are time-consuming and labor-intensive. It achieves accurate and stable capture, low cost, and good real-time performance.

Specific embodiment

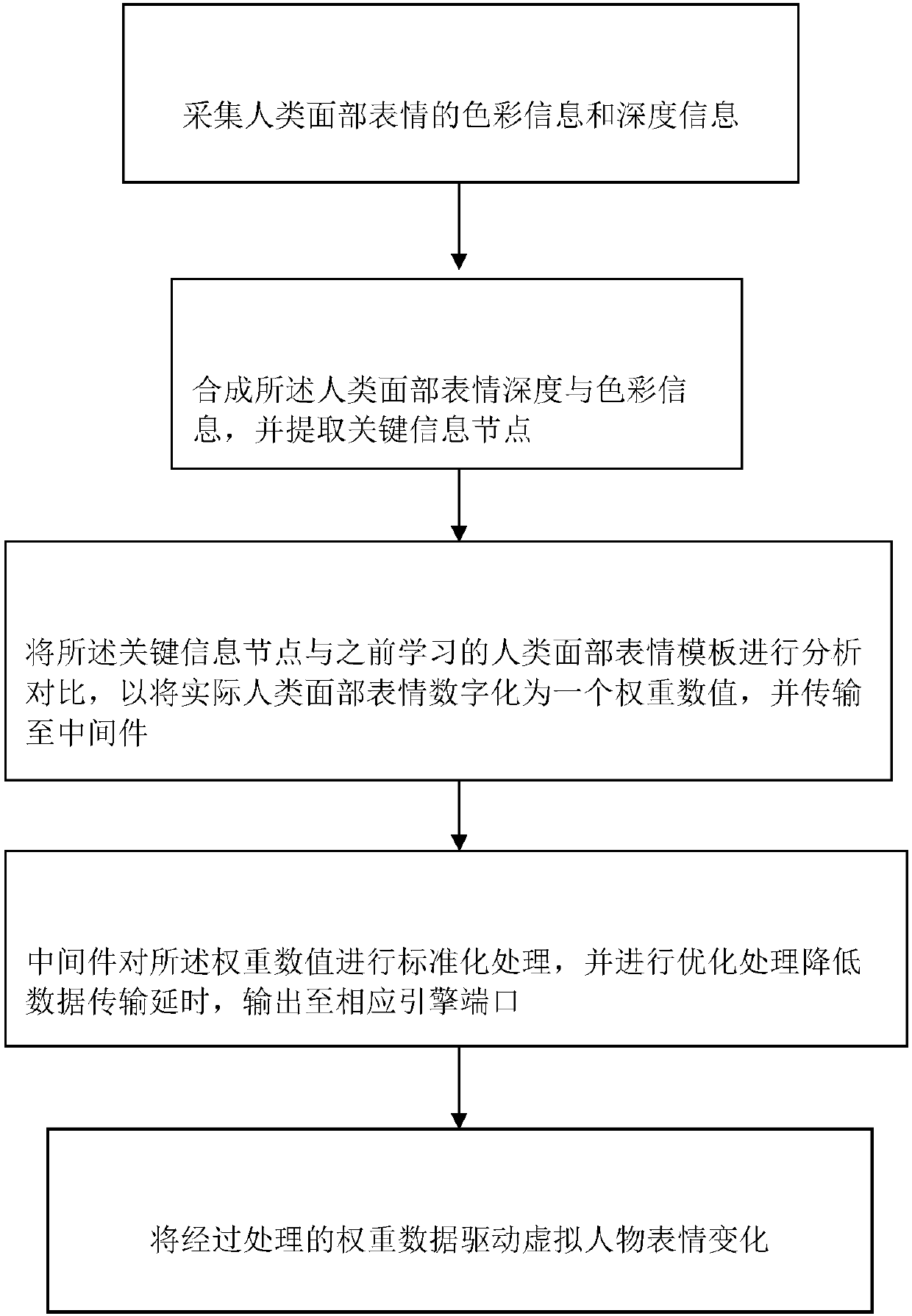

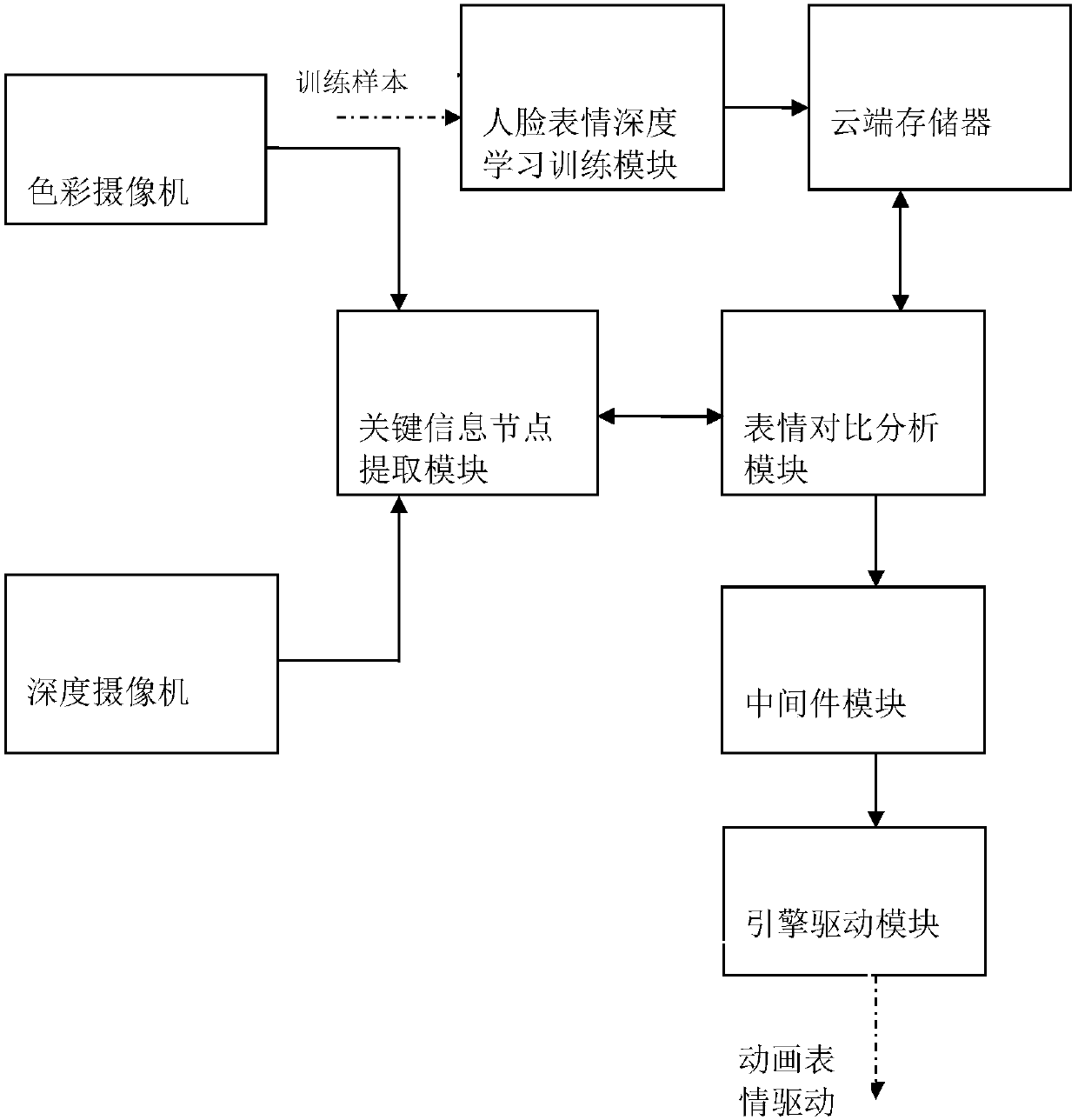

[0069] The present invention uses a depth camera and a color camera to extract weight information for a real person's facial expressions through a new algorithm, and then drives a virtual character to make the same expressions as the real person in real time. The depth camera collects accurate point cloud data of facial skin displacement, and the color camera collects image data. Deep learning on the color camera data subdivides facial expressions into 72 types, each engaging different facial muscles according to the displacement of those muscles. The resulting weight values are used to find the closest expression, which drives the facial expression of the avatar. Because available computing power differs between platforms, fewer than 72 weights can be used to suit low-compute platforms (such as mobile devices).
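The weight-based selection described in [0069] can be illustrated with a minimal sketch. It is not the patented algorithm, which the excerpt does not disclose. It assumes the capture stage has already produced one non-negative weight per expression type, and the names `closest_expression` and `reduce_weights` are hypothetical. Keeping only the strongest weights and renormalising them is one plausible way to use fewer than 72 weights on a low-compute platform; the source does not say how the reduction is done.

```python
from typing import List, Sequence, Tuple

NUM_EXPRESSIONS = 72  # number of expression types named in the description


def closest_expression(weights: Sequence[float]) -> int:
    """Return the index of the expression type with the largest weight."""
    if not weights:
        raise ValueError("weights must not be empty")
    return max(range(len(weights)), key=lambda i: weights[i])


def reduce_weights(weights: Sequence[float], budget: int) -> List[Tuple[int, float]]:
    """Keep the `budget` strongest weights and renormalise them to sum to 1.

    Assumption: a low-compute platform drives the avatar with only the
    dominant expression weights. The patent excerpt does not specify this.
    """
    if budget <= 0:
        raise ValueError("budget must be positive")
    # Rank expression indices from strongest to weakest weight.
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    kept = ranked[:budget]
    total = sum(weights[i] for i in kept)
    if total == 0:
        # No expression is active: return the kept indices with zero weight.
        return [(i, 0.0) for i in kept]
    return [(i, weights[i] / total) for i in kept]


# Hypothetical frame: three active expression types out of 72.
frame = [0.0] * NUM_EXPRESSIONS
frame[5], frame[10], frame[20] = 0.6, 0.3, 0.1

dominant = closest_expression(frame)    # index of the strongest expression
mobile = reduce_weights(frame, budget=2)  # two-weight set for a mobile device
```

A desktop platform could pass the full 72-element vector to the avatar rig, while a mobile build would call `reduce_weights` with a smaller `budget` chosen for its frame-time target.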

[0070] Description of the workflow of the facial capture system:

...
