Method for distinguishing electric bicycles and motorcycles through videos

A technology for electric bicycles and motorcycles, applied in character and pattern recognition, instruments, computer parts, etc. It addresses the problems that existing methods cannot reliably distinguish electric bicycles from motorcycles, are susceptible to environmental interference, and have a low recognition rate. It improves accuracy and achieves a high detection rate and a high recognition rate.


Examples

Embodiment 1

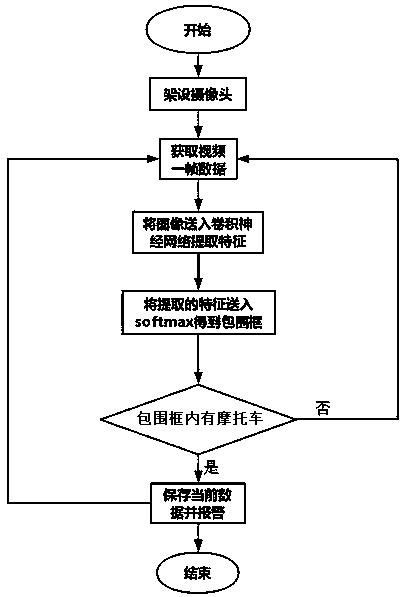

[0028] A method for distinguishing electric bicycles and motorcycles through video, used mainly to distinguish the license plates of electric bicycles from those of motorcycles, includes the following main steps:

[0029] Step A1: capture real-time video of electric bicycles and motorcycles on the road with a camera, and convert it into images;
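The patent does not say how often frames are turned into images. A minimal sketch, assuming frames are sampled at a fixed rate; `frame_indices_to_keep`, `source_fps` and `sample_fps` are hypothetical names, not part of the described method:

```python
def frame_indices_to_keep(source_fps: float, sample_fps: float, total_frames: int) -> list[int]:
    """Return the indices of video frames to convert into images.

    Assumption (not stated in the patent): frames are kept at a fixed
    rate `sample_fps` from a stream recorded at `source_fps`.
    """
    if source_fps <= 0 or sample_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Number of source frames between two kept frames; never below 1,
    # so the same frame is not emitted twice.
    step = max(1.0, source_fps / sample_fps)
    indices = []
    k = 0
    while int(k * step) < total_frames:
        indices.append(int(k * step))
        k += 1
    return indices
```

For a 25 fps camera sampled at 5 images per second, every fifth frame is kept; the indices could then be read with any video library.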

[0030] Step A2: use a deep learning method to extract features from labeled electric bicycle and motorcycle license plates, take the output of the last layer as the extracted features, and feed them into a softmax function that determines whether the plate belongs to an electric bicycle or a motorcycle. The class with the larger probability is taken as the output, which yields the trained model;
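The decision rule in step A2 (softmax over the two classes, then keep the larger probability) can be sketched as follows. The label order in `CLASSES` and the function names are hypothetical; the last-layer outputs are assumed to arrive as a plain list of two logits:

```python
import math

# Hypothetical label order for the two softmax outputs.
CLASSES = ("electric_bicycle", "motorcycle")


def softmax(logits):
    """Convert raw last-layer scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return (label, probability) for the class with the larger probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]
```

With two classes the winning probability is always at least 0.5; on an exact tie this sketch returns the first label.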

[0031] Step A3: input the images generated in step A1 into the trained model, and output an alarm message if a motorcycle license plate is detected.
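The alarm rule in step A3 reduces to a filter over the model's per-frame results. A minimal sketch; the `Detection` record, the label strings and the message format are hypothetical, since the patent does not specify the alarm's content:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str          # hypothetical labels: "electric_bicycle" or "motorcycle"
    probability: float  # probability of the winning class from the softmax


def alarm_messages(frame_id: int, detections: list[Detection]) -> list[str]:
    """Return one alarm message for each motorcycle plate found in a frame."""
    return [
        f"frame {frame_id}: motorcycle license plate detected (p={d.probability:.2f})"
        for d in detections
        if d.label == "motorcycle"
    ]
```

Electric bicycle detections produce no message, so a frame without motorcycles yields an empty list.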

[0032] The present invention is mainly used to distinguish the license plates of electric bicycles an...

Embodiment 2

[0034] This embodiment further optimizes Embodiment 1; step A2 mainly includes the following steps:

[0035] Step A21: capture video of electric bicycles and motorcycles with the camera, convert it into images, and label each image as electric bicycle or motorcycle;

[0036] Step A22: divide each image into a 13×13 grid of rectangular blocks and use clustering to obtain the anchor boxes predicted for each block. Each block uses 5 anchor boxes whose sizes match the sizes of the different objects to be detected, so that when several objects lie in one block they are not reduced to a single detection;
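The patent says only that the 5 anchor boxes are obtained by "clustering". The 13×13 grid with 5 clustered anchors resembles the YOLOv2 scheme, which runs k-means on labeled box widths and heights with the distance d = 1 − IoU. A minimal sketch under that assumption (the function names and the `(w, h)` box format are hypothetical):

```python
import random


def iou_wh(box, anchor):
    """IoU of two boxes given as (width, height), aligned at the same centre."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k=5, iters=100, seed=0):
    """Cluster (w, h) pairs into k anchors using the distance 1 - IoU."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # initial centroids: k distinct labeled boxes
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            # Nearest anchor = highest IoU = smallest 1 - IoU.
            best = max(range(k), key=lambda i: iou_wh(b, anchors[i]))
            clusters[best].append(b)
        new_anchors = []
        for i, c in enumerate(clusters):
            if c:
                mean_w = sum(w for w, _ in c) / len(c)
                mean_h = sum(h for _, h in c) / len(c)
                new_anchors.append((mean_w, mean_h))
            else:
                new_anchors.append(anchors[i])  # keep an empty cluster's centroid
        if new_anchors == anchors:
            break  # converged: assignments no longer change the centroids
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])  # smallest anchor first
```

Using 1 − IoU instead of Euclidean distance keeps large boxes from dominating the clustering, which is why it suits anchors that must match objects of very different sizes.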

[0037] Step A23: feed each segmented rectangular block into a multi-layer convolutional neural network, use the network to extract image features, take the features of the last layer, and input them i...
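Step A23 feeds each segmented block to the network. A minimal sketch of the segmentation itself, assuming an H×W pixel image. The patent does not say how sizes not divisible by 13 are handled, so the integer-division boundaries here are an assumption, and `grid_blocks` is a hypothetical name:

```python
GRID = 13  # the patent divides each image into 13 x 13 blocks


def grid_blocks(height, width, grid=GRID):
    """Return (row, col, y0, y1, x0, x1) pixel bounds for every block.

    Integer division spreads any remainder pixels across the blocks,
    so the blocks always cover the whole image without gaps.
    """
    blocks = []
    for r in range(grid):
        y0, y1 = r * height // grid, (r + 1) * height // grid
        for c in range(grid):
            x0, x1 = c * width // grid, (c + 1) * width // grid
            blocks.append((r, c, y0, y1, x0, x1))
    return blocks
```

For a NumPy image array, each block would be cropped as `image[y0:y1, x0:x1]` before being passed to the network. At 416×416 pixels every block is 32×32.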

Embodiment 3

[0041] This embodiment further optimizes Embodiment 1 or 2. In step A1, the collected video is converted into images, each image is divided into a 13×13 grid of rectangular blocks, and clustering is used to predict the anchor boxes for each block; each block uses 5 anchor boxes whose sizes match the sizes of the different objects to be detected;
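The patent does not state which block and anchor box are responsible for a given plate. A minimal sketch, assuming the YOLOv2-style convention: the block containing the object's centre, and the anchor with the highest IoU against the object's size. `assign` and the `(cx, cy, w, h)` pixel box format are hypothetical:

```python
GRID = 13  # 13 x 13 blocks per image


def iou_wh(a, b):
    """IoU of two (width, height) boxes aligned at the same centre."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def assign(box, image_w, image_h, anchors, grid=GRID):
    """Return (row, col, anchor_index) responsible for box = (cx, cy, w, h)."""
    cx, cy, w, h = box
    col = min(int(cx / image_w * grid), grid - 1)  # clamp centres on the right edge
    row = min(int(cy / image_h * grid), grid - 1)  # clamp centres on the bottom edge
    # The anchor whose shape best matches the object (highest IoU) is responsible.
    best = max(range(len(anchors)), key=lambda i: iou_wh((w, h), anchors[i]))
    return row, col, best
```

Because each block carries 5 differently sized anchors, two plates whose centres fall in the same block can still be matched to different anchors.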

[0042] In step A3, the images generated in step A1 are input into the training model generated in step A2. Each segmented rectangular block is fed into a multi-layer convolutional neural network, which extracts the image features, and the features of the last layer are input into the softmax function to make an initial judgment of whether each block contains license plate features of an electric bicycle or a motorcycle; if the rectangular blocks contain license plate features of electric bicycles or motorcy...
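The initial per-block judgment in step A3 can be sketched as a filter over the softmax outputs of all blocks. This assumes a three-way softmax that includes a "no plate" class, plus a hypothetical confidence threshold. The patent text is truncated here and confirms neither:

```python
# Hypothetical softmax output order; index 0 means "no plate in this block".
LABELS = ("no_plate", "electric_bicycle_plate", "motorcycle_plate")


def flag_cells(cell_probs, threshold=0.5):
    """Return {(row, col): label} for blocks judged to contain a plate.

    `cell_probs` maps a block's (row, col) to its softmax probabilities.
    A block is flagged when its most probable class is a plate class and
    that probability reaches the (hypothetical) threshold.
    """
    flagged = {}
    for cell, probs in cell_probs.items():
        best = max(range(len(probs)), key=probs.__getitem__)
        if best != 0 and probs[best] >= threshold:
            flagged[cell] = LABELS[best]
    return flagged
```

Any block flagged as `motorcycle_plate` would then trigger the alarm described in step A3.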
