Method for rapidly tracking and positioning set targets in grayscale videos

A tracking and positioning technique for grayscale video, applied in image data processing, instruments, and computation. It addresses the problems of a complex tracking process, heavy computation, and slow speed, and achieves high tracking accuracy, fast computation, and adaptation to scale changes.


Embodiment Construction

[0051] To further explain the technical means adopted by this embodiment to achieve its intended purpose, and their effects, the specific implementation, structural features, and effects of this embodiment are described in detail below with reference to the accompanying drawings and embodiments.

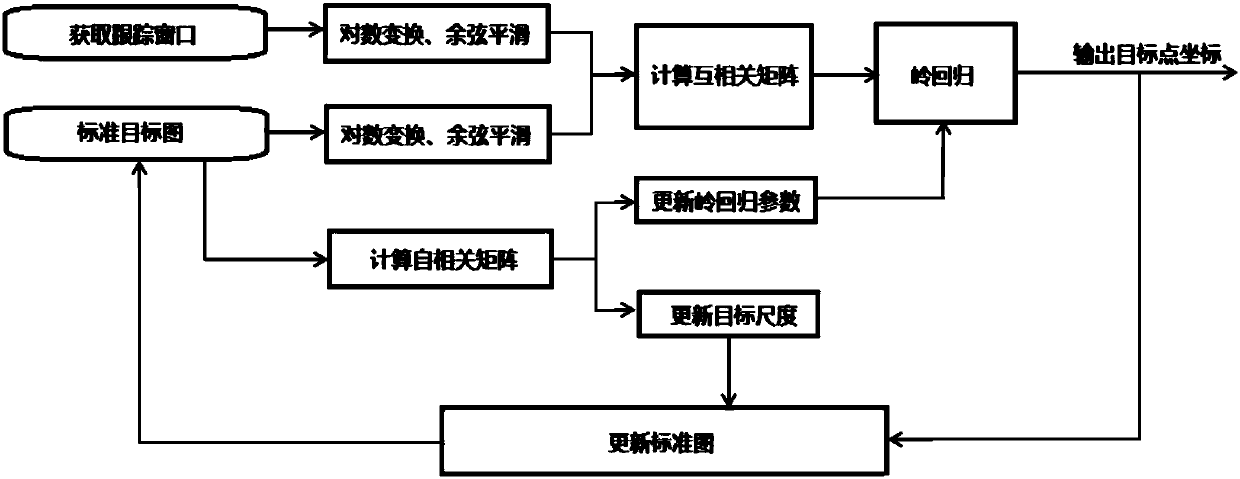

[0052] The technical solution adopted in this embodiment is divided into four main parts: a preprocessing stage, a tracking stage, a parameter learning stage, and a scale prediction stage. In the first frame, the position and size of the target must be specified manually; the original image is then cropped to obtain a standard image centered on the tracking target.
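The first-frame cropping described in [0052] can be illustrated with a minimal sketch. The function name `crop_centered`, the `(x, y)` / `(width, height)` argument conventions, and the edge-replication handling of windows that extend past the frame border are assumptions made for illustration; the source does not specify them.

```python
import numpy as np

def crop_centered(frame, center_xy, size_wh):
    """Crop a window of size (w, h) centered on center_xy from a 2-D grayscale frame.

    Assumption: pixels that fall outside the frame are filled by replicating
    the nearest edge pixel. The source does not say how borders are handled.
    """
    cx, cy = center_xy
    w, h = size_wh
    # Top-left corner of the window, rounded to the nearest pixel.
    x0 = int(round(cx - w / 2.0))
    y0 = int(round(cy - h / 2.0))
    # Clamp the sampled column and row indices to the valid frame range.
    xs = np.clip(np.arange(x0, x0 + w), 0, frame.shape[1] - 1)
    ys = np.clip(np.arange(y0, y0 + h), 0, frame.shape[0] - 1)
    return frame[np.ix_(ys, xs)]
```

In later frames the same helper could be called with the position and size estimated in the previous frame to obtain the tracking window.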

[0053] Step 1. Obtain the tracking window of the current frame from the target coordinates and size calculated in the previous frame, then apply a logarithmic transformation and cosine-window smoothing to both the tracking window and the standard target image.

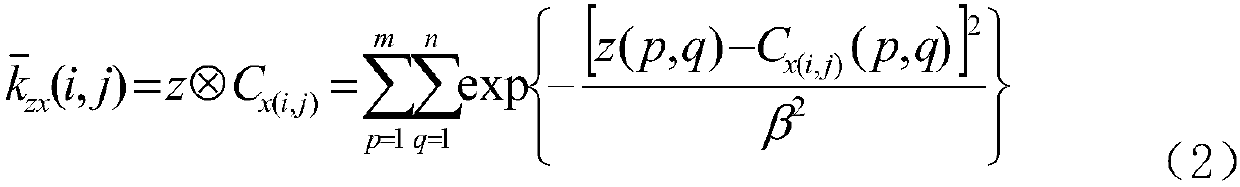

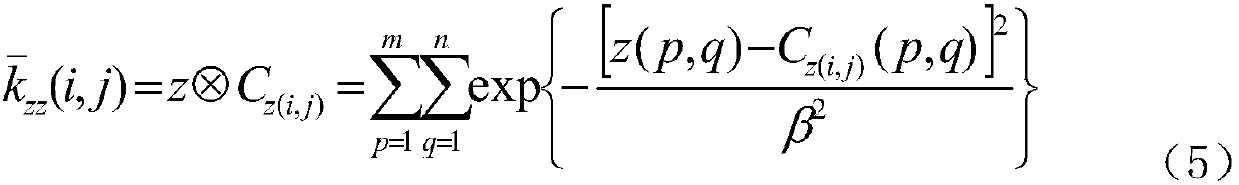
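The preprocessing in Step 1 can be sketched as follows. This is a minimal illustration, not the patent's exact formulas: the `log(1 + x)` form of the logarithmic transformation and the use of a 2-D Hann window as the cosine window are assumptions, and the function name `preprocess` is hypothetical.

```python
import numpy as np

def preprocess(patch):
    """Apply a log transform and a cosine (Hann) window to a grayscale patch.

    Assumptions: the log transform is log(1 + x), and the cosine window is
    the outer product of two 1-D Hann windows.
    """
    patch = np.asarray(patch, dtype=np.float64)
    h, w = patch.shape
    # The log transform compresses the dynamic range of the intensities.
    logged = np.log(patch + 1.0)
    # The cosine window tapers the patch toward zero at its borders,
    # which reduces boundary effects in later frequency-domain processing.
    window = np.outer(np.hanning(h), np.hanning(w))
    return logged * window
```

The same function would be applied to both the tracking window and the standard target image so that the two inputs are conditioned identically.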

[0054] St...
