Camera and robot hand-eye calibration method based on ROS

A hand-eye calibration method for a camera and a robot, applied in the field of robot vision. It addresses the inconvenient use and long duration of hand-eye calibration, which prolong the visual experiment period, and it achieves a high degree of autonomy and strong extensibility.



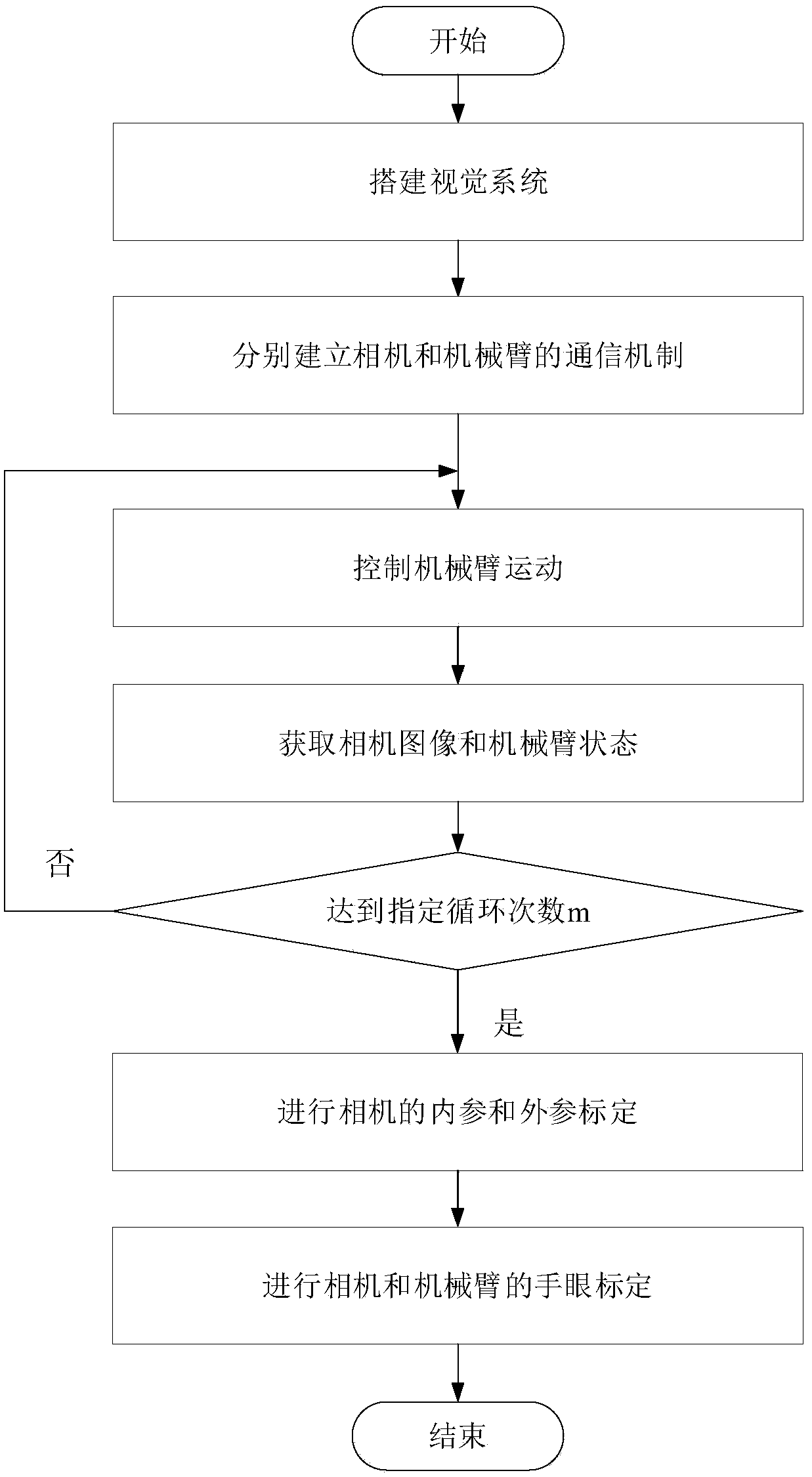


Embodiment

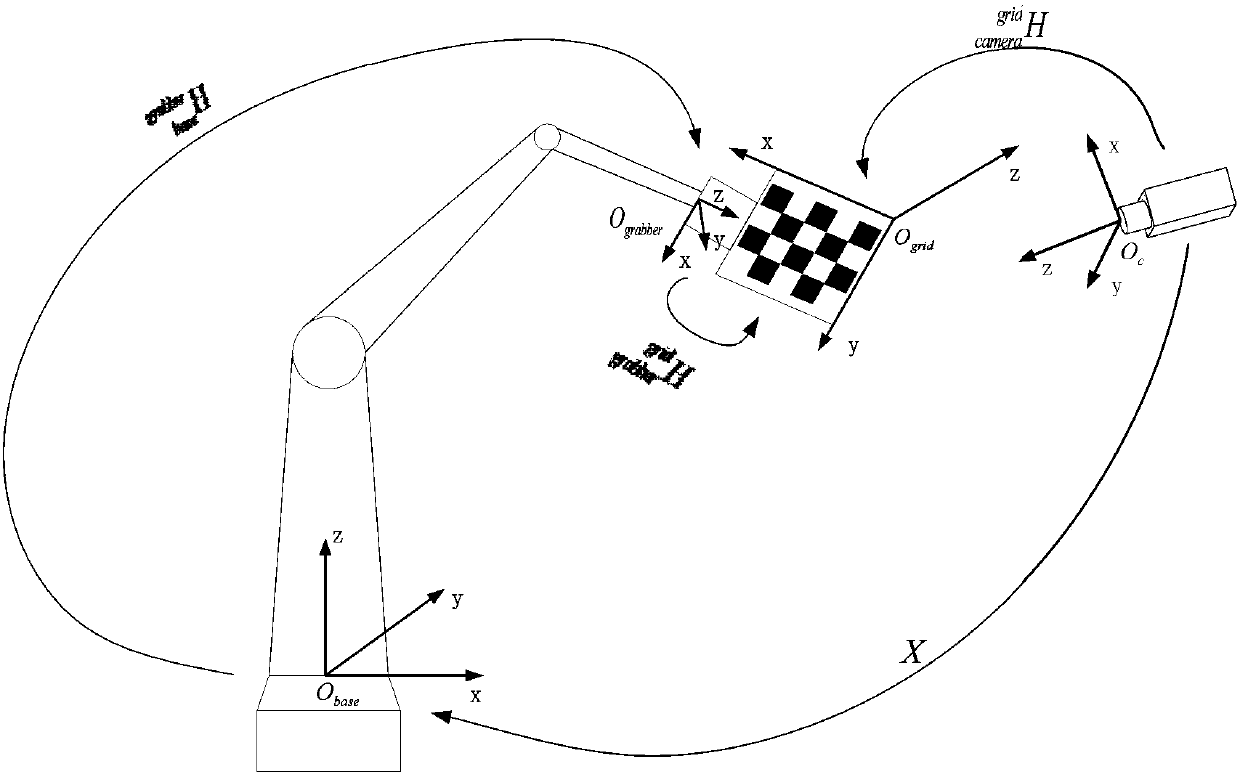

[0056] The vision system is built from a UR3 robot arm and a Kinect2 depth camera. The UR3 has 6 degrees of freedom (joints), and the Kinect2 integrates an RGB camera and a depth camera. The Kinect2 is installed at a fixed position outside the robot arm, chosen with regard to the field of view and measuring distance range of the Kinect2. Figure 2 shows a schematic diagram of the installation positions of the Kinect2 and the UR3 robot arm. It marks the RGB camera coordinate system O_c of the Kinect2 and the base coordinate system O_r of the UR3 manipulator, where red, green, and blue represent the x, y, and z axes respectively.
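To illustrate how the two frames in this setup relate, the following minimal sketch maps a point from the camera frame O_c into the base frame O_r using a 4x4 homogeneous transform. The matrix name `T_r_c`, its numeric values, and the helper `transform_point` are hypothetical placeholders chosen for illustration; they are not taken from the patent. In practice the transform would be the result of the calibration.

```python
def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T to a 3D point p = (x, y, z)."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))

# Hypothetical camera-to-base transform (assumption, not a calibrated value):
# a 90 degree rotation about z plus a translation of (1.0, 0.0, 0.5) metres.
T_r_c = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0,  0.0, 0.0, 0.0],
    [0.0,  0.0, 1.0, 0.5],
    [0.0,  0.0, 0.0, 1.0],
]

# A point observed in the camera frame O_c, in metres.
p_camera = (0.2, 0.0, 1.0)

# The same point expressed in the robot base frame O_r.
p_base = transform_point(T_r_c, p_camera)
print(p_base)
```

Once such a transform is known, any target detected by the camera can be expressed in the base frame, which is the frame the arm is commanded in.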

[0057] Print the calibration board on A4 paper, using the chess5x7x0.03 specification for the board, as shown in Figure 2.
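As a rough sketch of what the board specification encodes, the snippet below parses the string and generates the corner positions in the board's own frame. It assumes the format is `chess<columns>x<rows>x<square size in metres>`, so that chess5x7x0.03 means a 5x7 grid of corners with 0.03 m squares; the patent does not spell this out. The function names `parse_board_spec` and `board_corner_points` are hypothetical.

```python
import re

def parse_board_spec(spec):
    """Split a spec such as 'chess5x7x0.03' into (cols, rows, square_size)."""
    m = re.fullmatch(r"chess(\d+)x(\d+)x(\d*\.?\d+)", spec)
    if m is None:
        raise ValueError("unrecognised board spec: " + spec)
    return int(m.group(1)), int(m.group(2)), float(m.group(3))

def board_corner_points(cols, rows, square):
    """Return corner positions (x, y, z) on the planar board, row by row."""
    return [(c * square, r * square, 0.0) for r in range(rows) for c in range(cols)]

cols, rows, square = parse_board_spec("chess5x7x0.03")
points = board_corner_points(cols, rows, square)
print(len(points), "corners, first:", points[0], "last:", points[-1])
```

Under this assumption the corner grid spans about 0.12 m by 0.18 m, which fits on an A4 sheet.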

[0058] The relationship between the coordinate systems used in the whole calibration process is shown in Figure 3. A total of 4 coordinate systems are involved: the base coordinate system of th...
