Fruit visual collaborative searching method of harvesting robot

A fruit search method for picking robots, applied in the field of picking-robot target search. It addresses the problems of poor adaptability, high cost, and fixed search paths, and it reduces picking time.

Embodiment Construction

[0015] Embodiments of the present invention will be further described below in conjunction with the accompanying drawings. The present invention is described by taking apples as an example, but the present invention is equally applicable to other fruits.

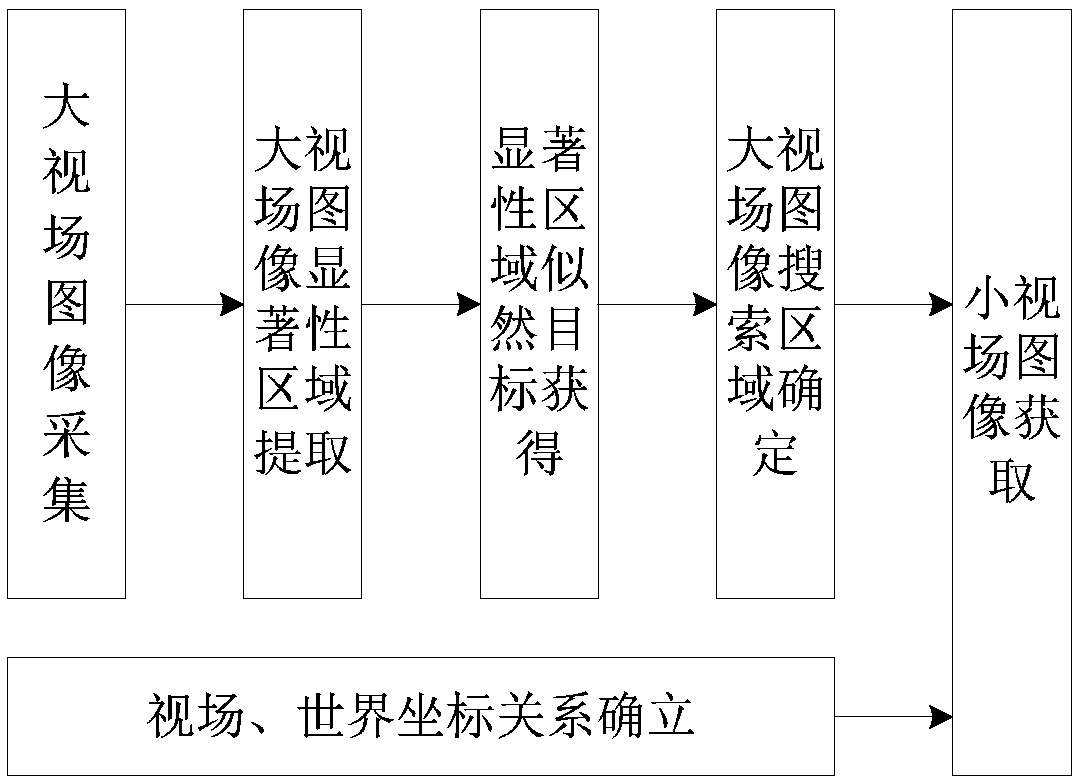

[0016] During image object search, people tend to attend first to the salient regions of the image and to the likely objects within those regions. For the picking robot, a large-field-of-view camera installed on its motion platform collects a global image of the fruit trees in the orchard, as shown in Figure 2. When working with the large-field-of-view camera, the robot first rotates to search for the vertical left or right boundary of the overall fruit area in the orchard. With that left or right boundary held constant in the image, the measurement is made from the change in size of the fruit region closest to the central horizontal line (as in Figure 3, the black line is the boundary line, and the blue circl...
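The geometric quantities named in paragraph [0016] can be illustrated with a minimal sketch. It is not the patented procedure, whose full text is truncated here. It assumes the fruit regions have already been detected and are given as axis-aligned bounding boxes in pixel coordinates. The `Region` class and the functions `fruit_boundaries`, `nearest_to_center_line`, and `size_change` are hypothetical names introduced only for illustration, and taking the area ratio as the measure of "size change" is likewise an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Region:
    """Hypothetical bounding box of one detected fruit region (pixels)."""
    x: int  # left edge
    y: int  # top edge
    w: int  # width
    h: int  # height

    @property
    def area(self) -> int:
        return self.w * self.h

    @property
    def center_y(self) -> float:
        return self.y + self.h / 2.0


def fruit_boundaries(regions: List[Region]) -> Tuple[int, int]:
    """Return the x-coordinates of the vertical left and right boundaries
    that enclose all fruit regions in the image."""
    left = min(r.x for r in regions)
    right = max(r.x + r.w for r in regions)
    return left, right


def nearest_to_center_line(regions: List[Region], image_height: int) -> Region:
    """Pick the fruit region whose center is closest to the image's
    central horizontal line."""
    mid = image_height / 2.0
    return min(regions, key=lambda r: abs(r.center_y - mid))


def size_change(before: Region, after: Region) -> float:
    """Assumed measure of size change: ratio of bounding-box areas
    of the same fruit region in two successive images."""
    return after.area / before.area


if __name__ == "__main__":
    regions = [Region(40, 100, 20, 20), Region(200, 230, 30, 30), Region(300, 400, 10, 10)]
    print(fruit_boundaries(regions))
    print(nearest_to_center_line(regions, image_height=480))
```

In this sketch the boundary is simply the extreme edge of all detected boxes. A real system would obtain the regions from a segmentation or detection step, which the visible text does not specify.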
