Speech interaction method and speech interaction equipment

A voice interaction technology, applied in the fields of voice analysis, voice recognition, special data processing applications, and the like. It addresses problems such as degraded user experience, erroneous human-computer interaction, and the inability to remove human voice interference, thereby improving the user experience and preventing human voice interference from disrupting human-computer interaction.


Examples

Example 1

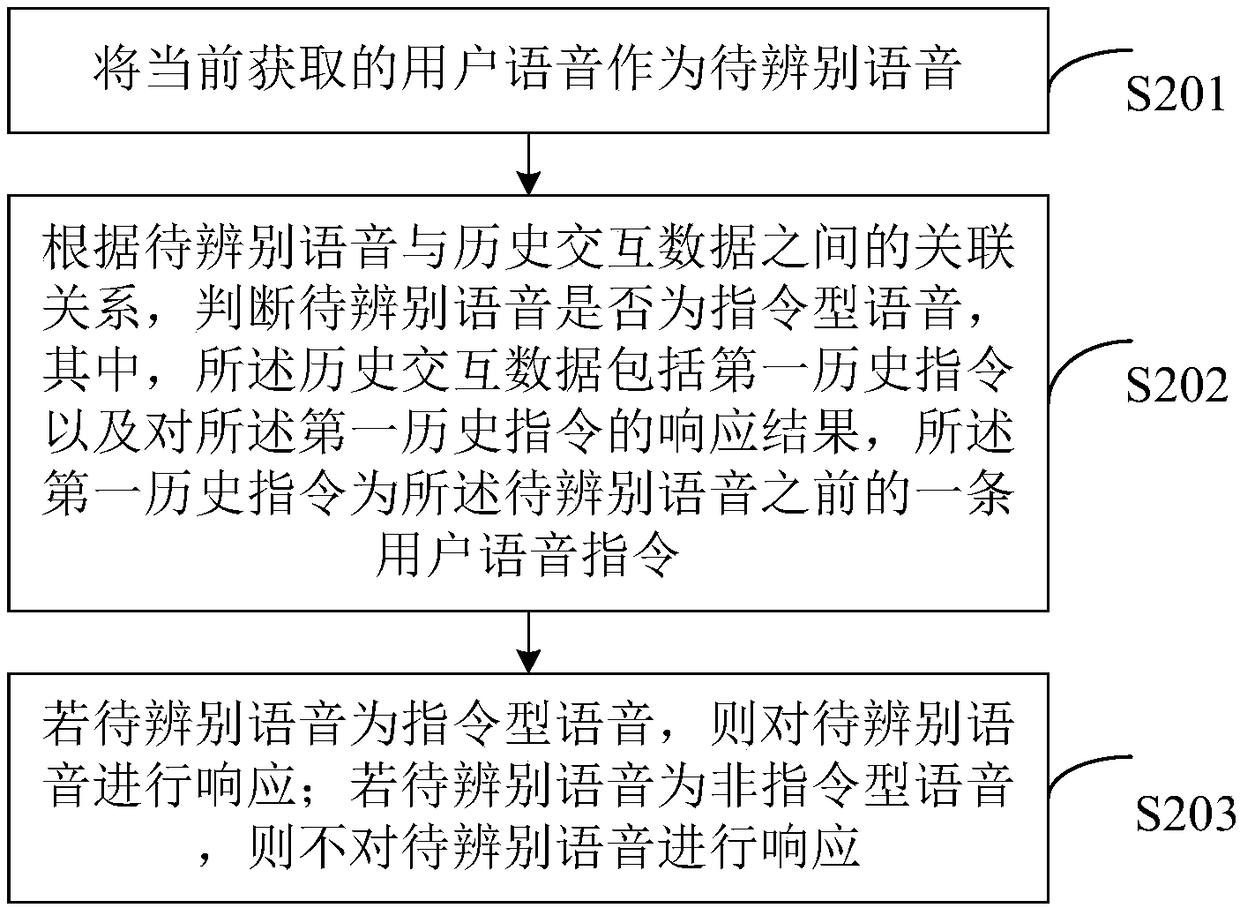

[0082] See Figure 2, which is a schematic flowchart of the voice interaction method provided in this embodiment. The voice interaction method includes the following steps:

[0083] S201: Use the currently acquired user voice as the voice to be identified.

[0084] After the human-computer interaction function of the smart device is activated, the smart device will receive and recognize the user's voice in real time. For ease of distinction, in this embodiment, the currently acquired user voice is defined as the voice to be identified.

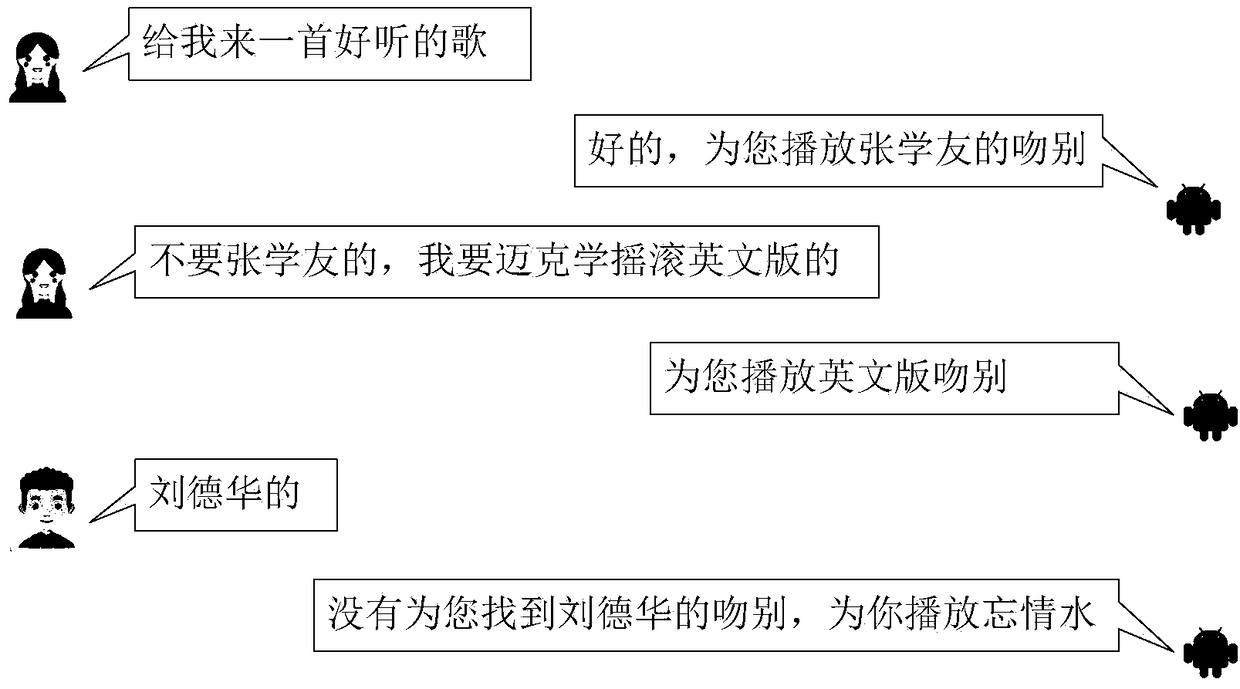

[0085] For example, as shown in Figure 1, if the currently acquired user voice is "Don't want Jacky Cheung, I want Mike to learn rock English version", then this voice is the voice to be identified; if the currently acquired user voice is "Andy Lau", then that voice is the voice to be identified.

[0086] S202: According to the correlation between the speech to be recognized and the historical interaction data, determine whether th...
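The visible text of S202 is truncated, but its core idea, deciding from the correlation with the historical interaction data whether the voice to be identified is an instruction speech (as S302 in the second embodiment phrases it), can be sketched as follows. This is a minimal sketch under stated assumptions: the voice has already been transcribed to text, the correlation is approximated by bag-of-words cosine similarity against each historical turn, and the helper names and the 0.1 threshold are hypothetical. Lexical overlap is only a stand-in for the semantic correlation the method relies on; it would miss a bare reply such as "Andy Lau" that shares no words with the history.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; assumes the voice was already transcribed to text.
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(count * b[tok] for tok, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def history_correlation(utterance: str, history: list[str]) -> float:
    # Hypothetical correlation score: the best match against any historical turn
    # (user utterances and device responses alike). Empty history gives 0.0.
    query = Counter(tokenize(utterance))
    return max((cosine(query, Counter(tokenize(turn))) for turn in history), default=0.0)


def is_instruction_speech(utterance: str, history: list[str], threshold: float = 0.1) -> bool:
    # Hypothetical decision rule: enough correlation with the history => instruction speech.
    return history_correlation(utterance, history) >= threshold


history = ["play a song by jacky cheung", "now playing a song by jacky cheung"]
print(is_instruction_speech("Don't want Jacky Cheung, I want Mike to learn rock English version", history))  # True
print(is_instruction_speech("what time is dinner tonight", history))  # False
```

The first utterance shares "jacky" and "cheung" with the history and clears the threshold; the unrelated remark shares nothing and is treated as interference.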

Example 2

[0094] In this embodiment, step S302 below is used to illustrate a specific implementation of S202 in the first embodiment above.

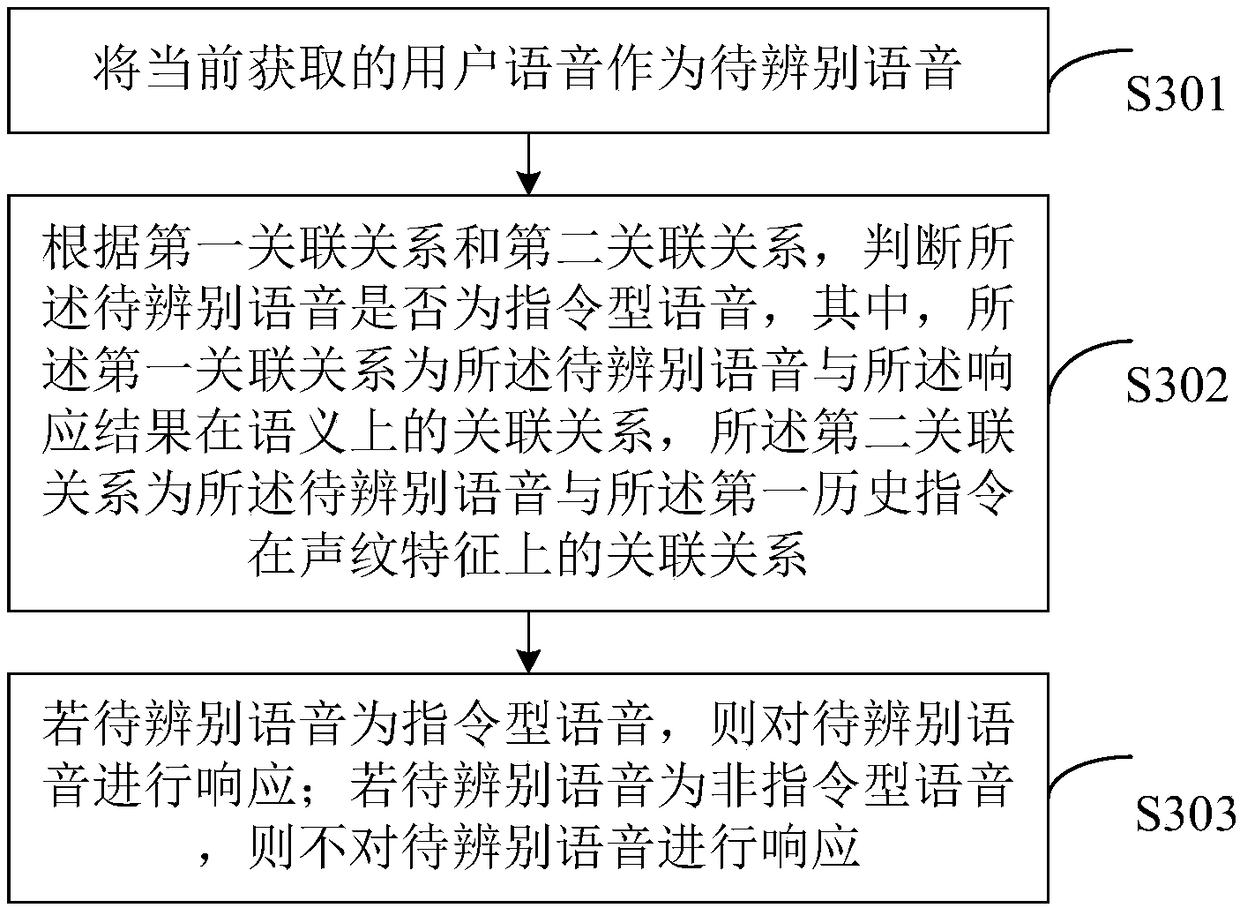

[0095] See Figure 3, which is a schematic flowchart of the voice interaction method provided in this embodiment. The voice interaction method includes the following steps:

[0096] S301: Use the currently acquired user voice as the voice to be identified.

[0097] Note that S301 is the same as S201 in the first embodiment above; for relevant details, refer to the first embodiment, which are not repeated here.

[0098] S302: Determine, according to the first association relationship and the second association relationship, whether the speech to be identified is an instruction speech.

[0099] Here, the first association relationship is the semantic association relationship between the speech to be recognized and the historical response result (the historical response result...
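Paragraph [0099] is truncated before the second association relationship is defined, so the following is only a hedged sketch of how S302 might fuse two association relationships into one decision. Assumptions: each relationship has already been reduced to a score in [0, 1]; the weighted-sum rule, the equal weights, the 0.5 threshold, and the names `AssociationScores` and `is_instruction_by_associations` are hypothetical and not taken from the source.

```python
from dataclasses import dataclass


@dataclass
class AssociationScores:
    # Semantic association between the speech to be recognized and the
    # historical response result (first association relationship, per [0099]).
    first: float
    # Second association relationship; its definition is truncated in the source,
    # so it is modeled only as another score in [0, 1] (assumption).
    second: float


def is_instruction_by_associations(scores: AssociationScores,
                                   w_first: float = 0.5,
                                   w_second: float = 0.5,
                                   threshold: float = 0.5) -> bool:
    # Hypothetical fusion rule: weighted sum of both scores compared with a threshold.
    if not (0.0 <= scores.first <= 1.0 and 0.0 <= scores.second <= 1.0):
        raise ValueError("association scores are assumed to lie in [0, 1]")
    combined = w_first * scores.first + w_second * scores.second
    return combined >= threshold


print(is_instruction_by_associations(AssociationScores(first=0.8, second=0.4)))  # True
print(is_instruction_by_associations(AssociationScores(first=0.1, second=0.3)))  # False
```

A weighted sum lets a strong semantic match with the previous response compensate for a weaker second signal; a stricter design could instead require each score to clear its own threshold.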

Example 3

[0108] This embodiment describes a specific implementation of S302 in the second embodiment above.

[0109] In this embodiment, a speech instruction recognition model may be constructed in advance and then used to judge whether the speech to be recognized is an instruction speech.
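As a hedged illustration of using a pre-built model to judge instruction speech, the sketch below stands in for the speech instruction recognition model with a plain logistic-regression classifier trained by batch gradient descent. The source does not specify the model architecture or its input features; the two numeric features here (for instance, scores for the two association relationships of the second embodiment), the toy training data, and the class name `InstructionClassifier` are assumptions.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class InstructionClassifier:
    """Hypothetical stand-in for the speech instruction recognition model:
    logistic regression over a small numeric feature vector."""

    def __init__(self, n_features: int, lr: float = 1.0, epochs: int = 2000):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr
        self.epochs = epochs

    def prob(self, x: list[float]) -> float:
        # Estimated probability that the speech is an instruction speech.
        return sigmoid(sum(wi * xi for wi, xi in zip(self.w, x)) + self.b)

    def fit(self, X: list[list[float]], y: list[int]) -> "InstructionClassifier":
        # Batch gradient descent on the mean log-loss.
        n = len(X)
        for _ in range(self.epochs):
            grad_w = [0.0] * len(self.w)
            grad_b = 0.0
            for xi, yi in zip(X, y):
                err = self.prob(xi) - yi  # derivative of the log-loss w.r.t. the logit
                for j, v in enumerate(xi):
                    grad_w[j] += err * v
                grad_b += err
            self.w = [w - self.lr * g / n for w, g in zip(self.w, grad_w)]
            self.b -= self.lr * grad_b / n
        return self

    def is_instruction(self, x: list[float], threshold: float = 0.5) -> bool:
        return self.prob(x) >= threshold


# Toy labeled samples (invented for illustration): 1 = instruction, 0 = non-instruction.
X = [[0.9, 0.8], [0.8, 0.7], [0.7, 0.9], [0.1, 0.2], [0.2, 0.1], [0.3, 0.2]]
y = [1, 1, 1, 0, 0, 0]
model = InstructionClassifier(n_features=2).fit(X, y)
print(model.is_instruction([0.8, 0.8]), model.is_instruction([0.15, 0.15]))  # True False
```

Logistic regression is chosen only because it is small and self-contained; any binary classifier trained on instruction-type and non-instruction-type samples would fill the same role.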

[0110] See Figure 4, which is a schematic flowchart of the method for constructing the speech instruction recognition model. The construction method includes the following steps:

[0111] S401: Collect multiple sets of human-computer interaction data belonging to the current dialogue domain, where some or all of the human-computer interaction data includes instruction-type user sample voices and non-instruction-type user sample voices.

[0112] In a human-computer interaction scenario, a group of human-computer dialogues (usually one or more rounds of dialogue) is usually aimed at a specific topic. Therefore, in th...
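Step S401 can be pictured as filtering a pool of labeled interaction logs down to the current dialogue domain. In this minimal sketch, the record layout (`Turn`, `InteractionSet`), the domain strings, and the use of text transcripts in place of raw sample voices are all hypothetical; the source only states that the collected data belongs to the current dialogue domain and that some or all of it contains instruction-type and non-instruction-type user sample voices.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    user_text: str        # transcript of a user sample voice (assumption: text, not audio)
    is_instruction: bool  # True = instruction-type, False = non-instruction-type


@dataclass
class InteractionSet:
    domain: str                                      # hypothetical domain label, e.g. "music"
    turns: list[Turn] = field(default_factory=list)  # one group of human-computer dialogue


def collect_domain_samples(sets: list[InteractionSet], current_domain: str) -> list[tuple[str, bool]]:
    # Keep only interaction sets from the current dialogue domain and flatten them
    # into (transcript, label) training samples.
    return [
        (turn.user_text, turn.is_instruction)
        for s in sets
        if s.domain == current_domain
        for turn in s.turns
    ]


def has_both_classes(samples: list[tuple[str, bool]]) -> bool:
    # A usable training set needs at least one example of each class.
    labels = {label for _, label in samples}
    return labels == {True, False}


logs = [
    InteractionSet("music", [Turn("play something by andy lau", True),
                             Turn("is dinner ready yet", False)]),
    InteractionSet("weather", [Turn("will it rain tomorrow", True)]),
]
samples = collect_domain_samples(logs, "music")
print(len(samples), has_both_classes(samples))  # 2 True
```

Restricting collection to one domain mirrors the observation in [0112] that a group of dialogues usually stays on a single topic.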
