Batch memory scheduling method based on Bank division

A memory scheduling technique, applicable to resource allocation, program startup/switching, and program control design. It addresses problems such as increased system power consumption, failure to exploit the locality of memory access requests, and increased randomness of memory access requests, and thereby reduces memory power consumption.

Embodiment Construction

[0025] The present invention will be further described below in conjunction with the accompanying drawings.

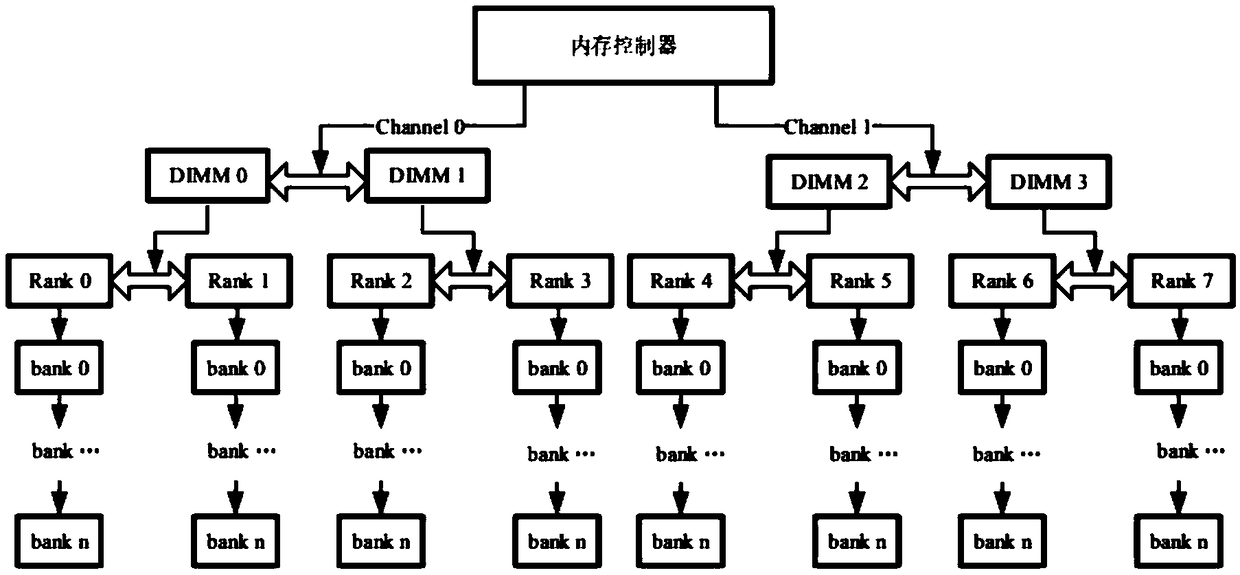

[0026] Figure 1 is a hierarchical diagram of a modern DRAM memory system. Such a system usually includes one or more memory channels (Channels) that operate in parallel, each with its own independent address, data, and command buses. Each channel contains one or more storage arrays (Ranks), and all Ranks in the same channel share that channel's resources. Each Rank contains multiple memory banks (Banks). All Banks in a Rank share the command bus and address bus, and the Rank's data bus width is the sum of the data bus widths of all its Banks. All Banks in a Rank can read and write data simultaneously, in parallel.
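The Channel / Rank / Bank hierarchy described above can be pictured as a small nested data model. The following is only an illustrative sketch: the class names, the `build_system` helper, and the per-Bank width parameter are hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Bank:
    bank_id: int
    data_width_bits: int  # hypothetical per-Bank data width, for illustration


@dataclass
class Rank:
    rank_id: int
    banks: List[Bank] = field(default_factory=list)

    def data_bus_width(self) -> int:
        # As the description states: the Rank's data bus width is the
        # sum of the data bus widths of all Banks in the Rank.
        return sum(bank.data_width_bits for bank in self.banks)


@dataclass
class Channel:
    channel_id: int
    # All Ranks listed here share this channel's buses.
    ranks: List[Rank] = field(default_factory=list)


def build_system(channels: int, ranks: int, banks: int,
                 bank_width: int) -> List[Channel]:
    """Build a uniform hierarchy: channels -> ranks -> banks."""
    return [
        Channel(c, [
            Rank(r, [Bank(b, bank_width) for b in range(banks)])
            for r in range(ranks)
        ])
        for c in range(channels)
    ]
```

For instance, `build_system(2, 2, 8, 8)` would model two channels of two Ranks each, where every Rank holds eight Banks; the sizes are arbitrary and chosen only to make the structure concrete.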

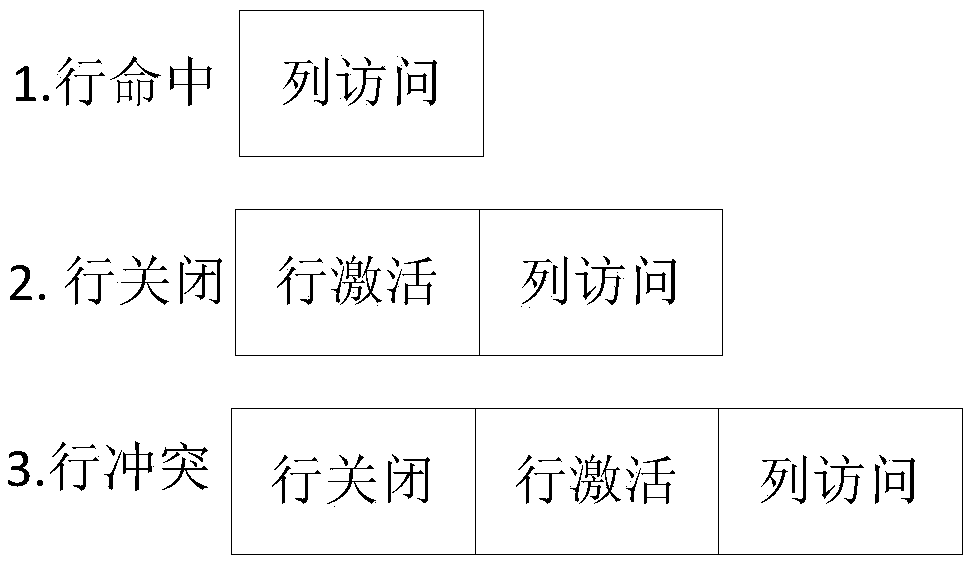

[0027] In a DRAM memory system, there are three DRAM operations: row activation, column access, and precharge.

[0028] Row activation, according to the row address, activates the target row in the dat...
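How the three operations relate can be sketched with a toy model of a single Bank. This sketch assumes the commonly described DRAM behavior in which a Bank holds at most one open (activated) row at a time and must be precharged before a different row is activated; that assumption, along with the `BankState` class and the `commands_for` helper, is illustrative and is not taken from the text above.

```python
from typing import List, Optional


class BankState:
    """Toy model of one Bank; assumes at most one open row at a time."""

    def __init__(self) -> None:
        self.open_row: Optional[int] = None  # None means precharged (no open row)

    def activate(self, row: int) -> None:
        # Row activation: open the target row selected by the row address.
        if self.open_row is not None:
            raise RuntimeError("precharge required before activating another row")
        self.open_row = row

    def column_access(self, row: int, col: int) -> None:
        # Column access: only valid against the currently open row.
        if self.open_row != row:
            raise RuntimeError("target row is not open")

    def precharge(self) -> None:
        # Precharge: close the open row so another row can be activated.
        self.open_row = None


def commands_for(bank: BankState, row: int, col: int) -> List[str]:
    """Return the operations needed to serve one access to (row, col)."""
    issued: List[str] = []
    if bank.open_row != row:
        if bank.open_row is not None:
            bank.precharge()
            issued.append("PRECHARGE")
        bank.activate(row)
        issued.append("ACTIVATE")
    bank.column_access(row, col)
    issued.append("COLUMN_ACCESS")
    return issued
```

Under this model, consecutive accesses to the same row need only a column access, while an access to a different row costs a precharge and an activation first, which is one way to see why the locality of memory access requests matters to a scheduler.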
