Face three-dimensional reconstruction method based on end-to-end convolutional neural network

Convolutional neural network and three-dimensional reconstruction technology, applied in neural learning methods, biological neural network models, neural architectures, etc.


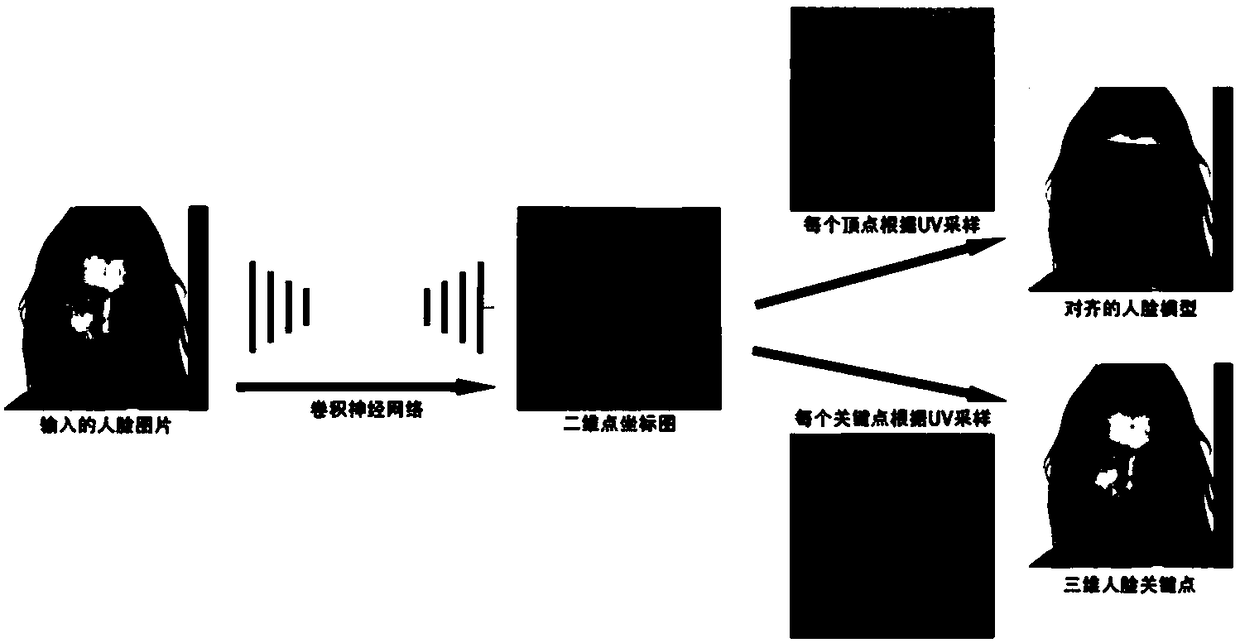


Examples

Embodiment 1

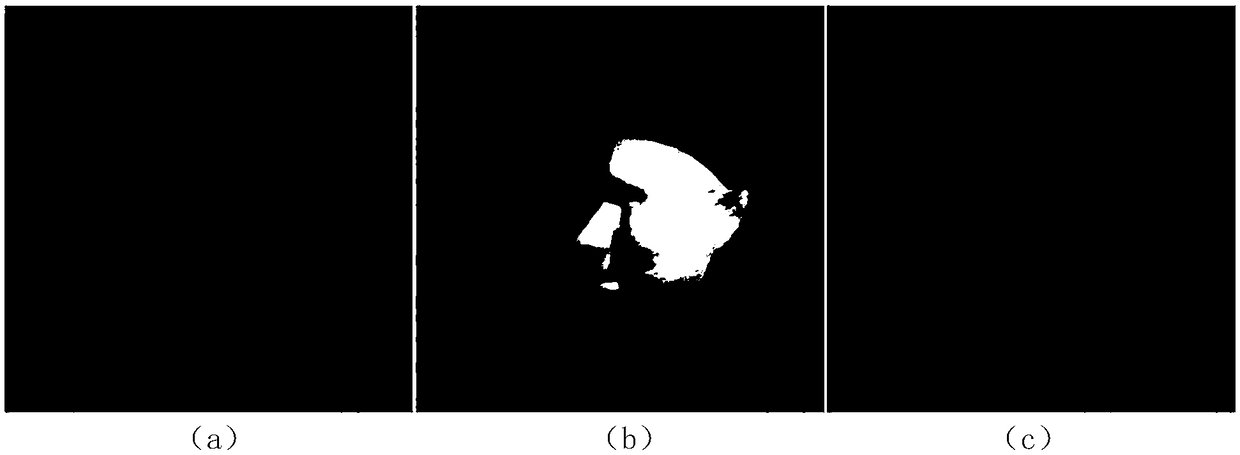

[0137] Embodiment 1. The inventor used this method to reconstruct a three-dimensional face model from a profile photograph of a man, as shown in Figure 2. The first image is the original face photograph. The second shows the reconstructed 3D face model rendered onto the original photograph. The third shows the 68 key points obtained by alignment together with their contours, covering the eyes, nose, mouth, and lower facial contour. The face in this photograph is turned at a large angle, the scene is poorly lit, and a large area of the face lies in shadow, all of which make reconstruction very difficult. The results show that the method still performs well under large-angle rotation and complex lighting. The high-precision face alignment result also indirectly confirms the accuracy of the reconstruction.
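The third panel overlays 68 key points obtained from the reconstruction. One common way to derive such points from a 3D face mesh is to pick 68 fixed vertex indices and project them into the image. The sketch below assumes a weak-perspective camera (rotation, uniform scale, 2D translation); the patent excerpt does not state which camera model it uses, and `landmark_idx` is a hypothetical set of vertex indices.

```python
import numpy as np


def rotation_matrix(pitch: float, yaw: float, roll: float) -> np.ndarray:
    """Build R = Rz(roll) @ Ry(yaw) @ Rx(pitch); angles are in radians."""
    cx, sx = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    cz, sz = np.cos(roll), np.sin(roll)
    rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return rz @ ry @ rx


def project_landmarks(
    vertices: np.ndarray,        # (N, 3) reconstructed face mesh vertices
    landmark_idx: np.ndarray,    # (K,) hypothetical vertex indices, K = 68 here
    scale: float,                # weak-perspective scale factor (assumption)
    rotation: np.ndarray,        # (3, 3) head rotation
    translation_2d: np.ndarray,  # (2,) image-plane offset
) -> np.ndarray:
    """Weak-perspective projection of selected mesh vertices to (K, 2) pixels."""
    pts = vertices[landmark_idx]          # select the key-point vertices
    rotated = pts @ rotation.T            # apply R to every point (row vectors)
    return scale * rotated[:, :2] + translation_2d  # drop depth, scale, shift
```

A large yaw angle, as in this profile photograph, simply enters through `rotation`; self-occluded points are still projected, which is why a full-model approach can place landmarks on the hidden side of the face.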

Embodiment 2

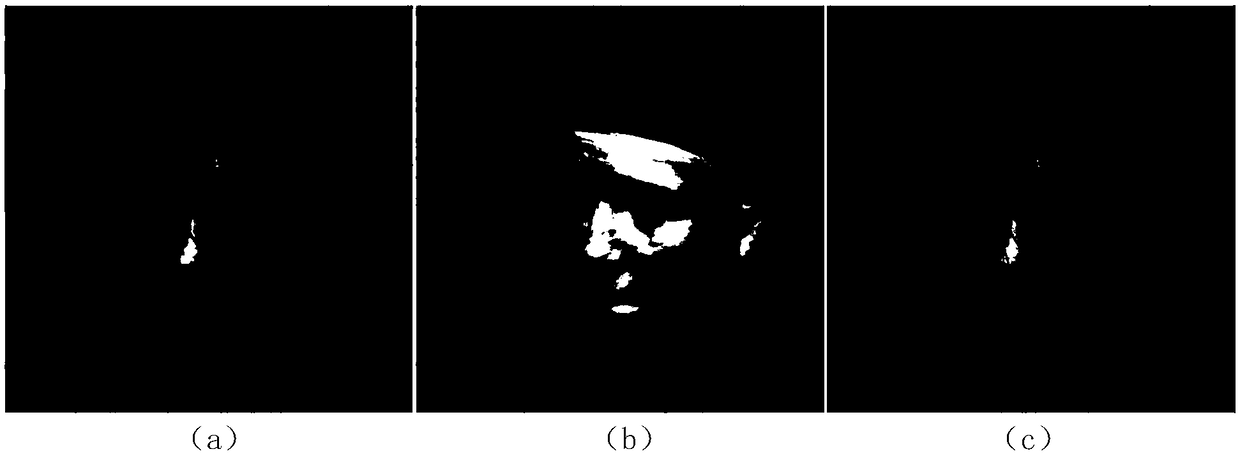

[0138] Embodiment 2. The inventor used this method to reconstruct a 3D face from a photograph of a woman whose face is heavily occluded. The panels have the same meaning as in Embodiment 1. Part of the face is covered by hair, and the occluded region has a complex shape. Even under this complex occlusion, the present invention still obtains an accurate reconstruction: the main facial regions, including the ears, nose, and mouth, are accurately located and the reconstruction error is small, which indicates that the weight-mask-based loss function design is effective.
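The embodiment credits a loss function based on a weight mask. A minimal sketch of the general idea, assuming the network outputs a per-pixel map of 3D coordinates and the mask assigns each pixel a weight by facial region, is a weighted mean squared error. The region labels and the weight values below are illustrative assumptions, not values taken from the patent.

```python
import numpy as np


def build_weight_mask(region_labels: np.ndarray, region_weights) -> np.ndarray:
    """Turn an integer region-label image (H, W) into per-pixel weights.

    Hypothetical labels: 0 = background, 1 = other face, 2 = eyes/nose/mouth,
    3 = key-point pixels. region_weights[i] is the weight for label i.
    """
    lut = np.asarray(region_weights, dtype=float)
    return lut[region_labels]


def weighted_mask_loss(pred: np.ndarray, target: np.ndarray,
                       weight_mask: np.ndarray) -> float:
    """Weighted MSE over (H, W, 3) coordinate maps.

    Each pixel's squared L2 error is multiplied by its mask weight, and the
    sum is normalized by the total weight so the scale does not depend on
    how many pixels fall in each region.
    """
    sq_err = np.sum((pred - target) ** 2, axis=-1)  # (H, W) per-pixel error
    return float(np.sum(weight_mask * sq_err) / np.sum(weight_mask))
```

With a zero weight on background pixels, errors in regions covered by hair or scenery contribute nothing, while heavily weighted key regions dominate the gradient; this is one plausible reason such a design tolerates occlusion.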

Embodiment 3

[0139] Embodiment 3. The inventor evaluated the processing pipeline of the present invention on a total of 3,300 face images from two public face datasets. The experimental results show that the algorithm still reconstructs aligned 3D face models accurately and efficiently under difficult conditions such as large-angle rotation, complex lighting, occlusion, blur, and makeup. Traditional methods are often not robust to these challenges, whereas this method is based on a convolutional neural network, and the model has strong expressive power and …
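The excerpt does not name the metric used on the 3,300 test images. For 68-point face alignment benchmarks, a frequently used measure is the normalized mean error (NME): the mean Euclidean landmark error divided by a face-size term, here assumed to be the square root of the ground-truth landmark bounding-box area, then averaged over images. The sketch below illustrates that assumed metric only.

```python
import numpy as np


def nme_bbox(pred: np.ndarray, gt: np.ndarray) -> float:
    """NME for one face: mean point-to-point error / sqrt(bbox w * h).

    pred, gt: (K, 2) predicted and ground-truth landmark coordinates.
    The bounding box is taken from the ground-truth landmarks (assumption).
    """
    err = np.linalg.norm(pred - gt, axis=1).mean()  # mean Euclidean error
    w, h = gt.max(axis=0) - gt.min(axis=0)          # bbox width and height
    return float(err / np.sqrt(w * h))


def dataset_nme(preds, gts) -> float:
    """Average the per-image NME over a dataset."""
    return float(np.mean([nme_bbox(p, g) for p, g in zip(preds, gts)]))
```

Normalizing by face size lets errors on small and large faces be compared on the same scale, which matters when a test set mixes close-up portraits with distant, low-resolution faces.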
