Rapid, high-precision workpiece pose estimation method and apparatus based on point cloud data

The invention relates to point cloud data and workpiece technology and is applied in the field of rapid, high-precision estimation of workpiece pose from point cloud data. It addresses three problems: existing approaches cannot meet the accuracy requirements of robot grasping and assembly, they require expensive computing equipment, and their pose estimation accuracy is low. The invention achieves short computation time, wide applicability, and improved accuracy.


Detailed Description of the Embodiments

[0062] In the following, the concept, specific structure and technical effects of the present invention are described clearly and completely with reference to the embodiments and the drawings, so that the objectives, solutions and effects of the present invention can be fully understood.

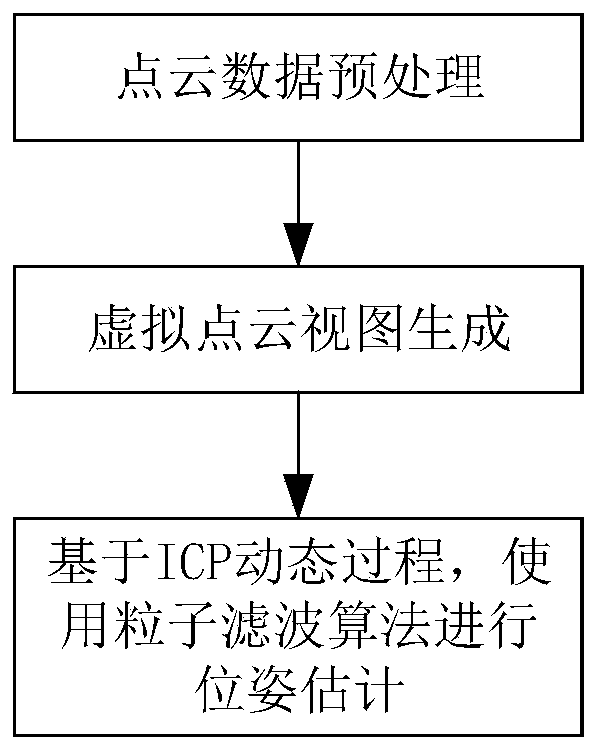

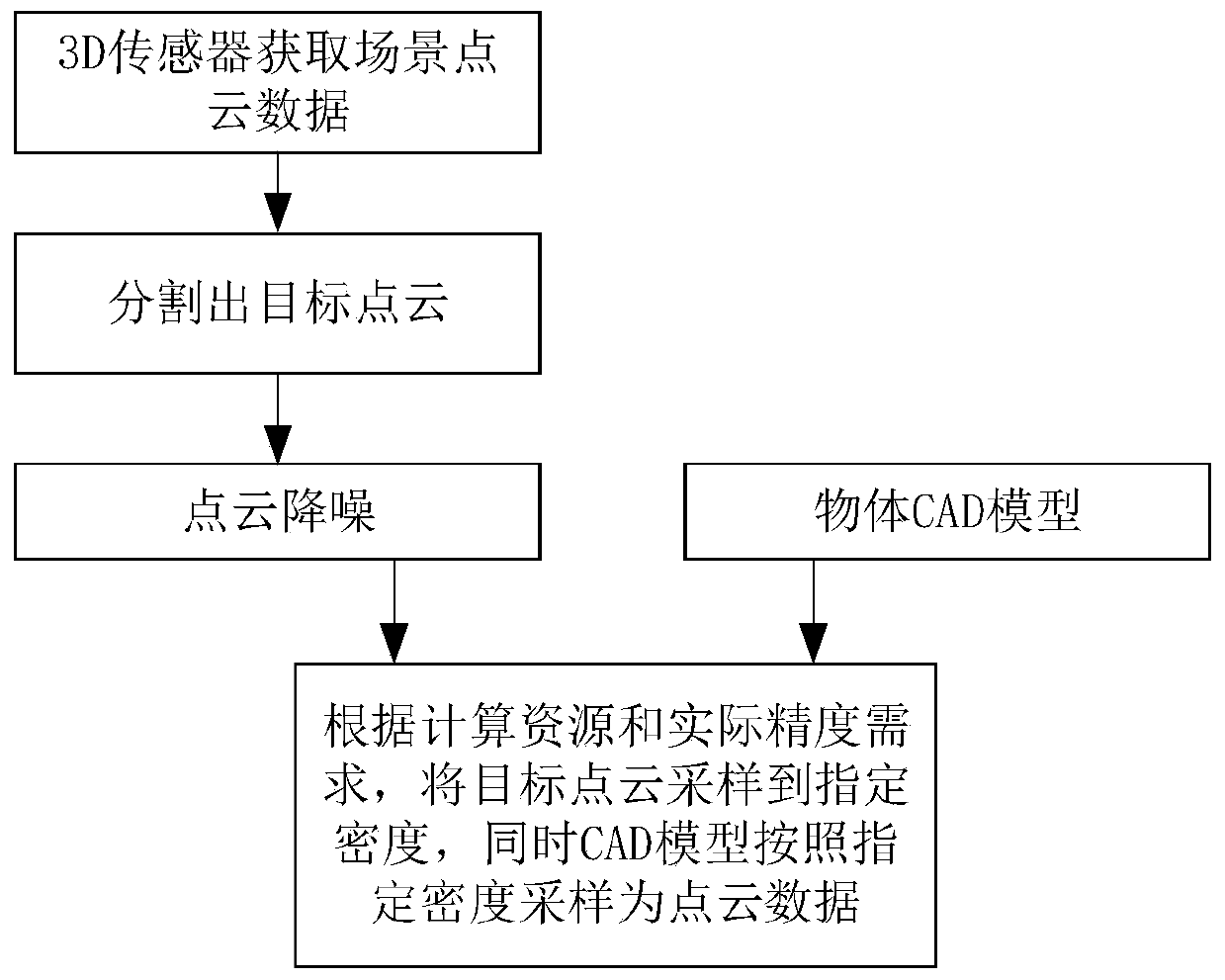

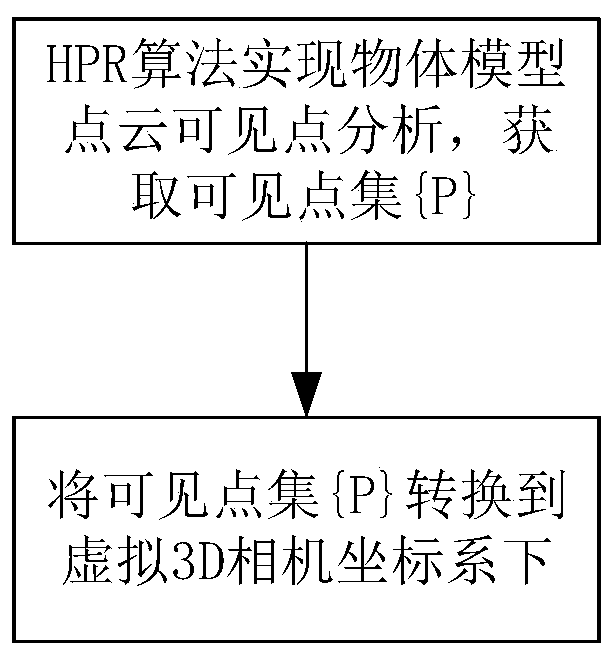

[0063] This disclosure describes a point-cloud-based method for estimating the pose of a workpiece. It is intended mainly to solve the workpiece pose estimation problem in automated assembly, in particular the assembly of small parts. As shown in Figure 1, the method is divided into three steps: point cloud data preprocessing, point cloud virtual view extraction, and pose estimation. Point cloud data preprocessing mainly segments the object point cloud acquired by the sensor to serve as the target point cloud, and converts the CAD model of the object into point cloud data, which is used to generate the point cloud views of a virtual 3D camera. Point cloud virtual ...
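The excerpt does not say how each step is implemented, so the following Python/NumPy sketch is only one plausible reading, built on our own assumptions: the CAD model is available as a triangle mesh and is converted to a point cloud by area-weighted surface sampling; the "virtual 3D camera" is modeled as a pinhole camera whose visible points are selected with a per-pixel z-buffer; and the pose is solved with the Kabsch (SVD) method from known point correspondences. The function names (`sample_mesh_surface`, `virtual_view`, `kabsch`), the cube stand-in model, and all camera parameters are hypothetical. The sensor-side segmentation step is not shown.

```python
# Hypothetical sketch: names, parameters and the cube model are illustrative
# assumptions, not taken from the patent.
import numpy as np


def sample_mesh_surface(vertices, faces, n_points, rng):
    """Turn a triangle mesh (e.g. a tessellated CAD model) into a point cloud
    by area-weighted uniform sampling over its triangles."""
    tri = vertices[faces]                                    # (F, 3, 3) triangle corners
    e1, e2 = tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0]
    areas = 0.5 * np.linalg.norm(np.cross(e1, e2), axis=1)
    idx = rng.choice(len(faces), size=n_points, p=areas / areas.sum())
    # Uniform barycentric coordinates; samples outside the triangle are folded back in.
    u, v = rng.random(n_points), rng.random(n_points)
    flip = u + v > 1.0
    u[flip], v[flip] = 1.0 - u[flip], 1.0 - v[flip]
    return tri[idx, 0] + u[:, None] * e1[idx] + v[:, None] * e2[idx]


def virtual_view(points, R_cam, t_cam, fx, fy, cx, cy, width, height):
    """Return the model points a virtual pinhole camera would see: project every
    point into the image and keep, per pixel, only the one nearest the camera."""
    pc = points @ R_cam.T + t_cam                            # model frame -> camera frame
    src = np.nonzero(pc[:, 2] > 1e-9)[0]                     # indices of points in front
    pc = pc[src]
    u = np.floor(fx * pc[:, 0] / pc[:, 2] + cx).astype(int)
    v = np.floor(fy * pc[:, 1] / pc[:, 2] + cy).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    src, depth, pixel = src[inside], pc[inside, 2], (v * width + u)[inside]
    if pixel.size == 0:
        return points[:0]
    order = np.lexsort((depth, pixel))                       # sort by pixel, then depth
    pixel = pixel[order]
    first = np.concatenate(([True], pixel[1:] != pixel[:-1]))  # nearest point per pixel
    return points[src[order][first]]


def kabsch(src, dst):
    """Least-squares rigid transform (R, t) with dst ~ src @ R.T + t, given
    one-to-one point correspondences (Kabsch / SVD method)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)                            # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))                   # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, cd - R @ cs


# Demo: a unit cube stands in for the CAD model (8 corners, 12 triangles).
rng = np.random.default_rng(0)
V = np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float)
F = np.array([[0, 1, 3], [0, 3, 2], [4, 5, 7], [4, 7, 6], [0, 1, 5], [0, 5, 4],
              [2, 3, 7], [2, 7, 6], [0, 2, 6], [0, 6, 4], [1, 3, 7], [1, 7, 5]])
model = sample_mesh_surface(V, F, 60000, rng)

# Virtual camera 5 units in front of the z = 0 face, looking along +z.
view = virtual_view(model, np.eye(3), np.array([-0.5, -0.5, 5.0]),
                    fx=100.0, fy=100.0, cx=20.0, cy=20.0, width=40, height=40)

# Synthetic "sensor" cloud: the view moved by a known pose, correspondences known.
a, b = 0.3, 0.2
Rz = np.array([[np.cos(a), -np.sin(a), 0], [np.sin(a), np.cos(a), 0], [0, 0, 1]])
Rx = np.array([[1, 0, 0], [0, np.cos(b), -np.sin(b)], [0, np.sin(b), np.cos(b)]])
R_true, t_true = Rz @ Rx, np.array([0.10, -0.20, 0.35])
R_est, t_est = kabsch(view, view @ R_true.T + t_true)
print(len(view), np.allclose(R_est, R_true), np.allclose(t_est, t_true))
```

In a real pipeline the correspondences between a virtual view and the segmented sensor cloud are not known in advance. One common option is to alternate nearest-neighbour matching with the `kabsch` step, as in ICP; the excerpt above does not state which matching or refinement strategy the patent actually uses.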
