Real 3D virtual simulation interaction method and system

A real three-dimensional virtual simulation interaction method and system, in the field of three-dimensional virtual interaction technology. It addresses problems such as knowledge points being presented in only a single form, the inability to provide a situational reading and learning experience, and a heavy performance burden on lecturers.

Examples

Embodiment 1

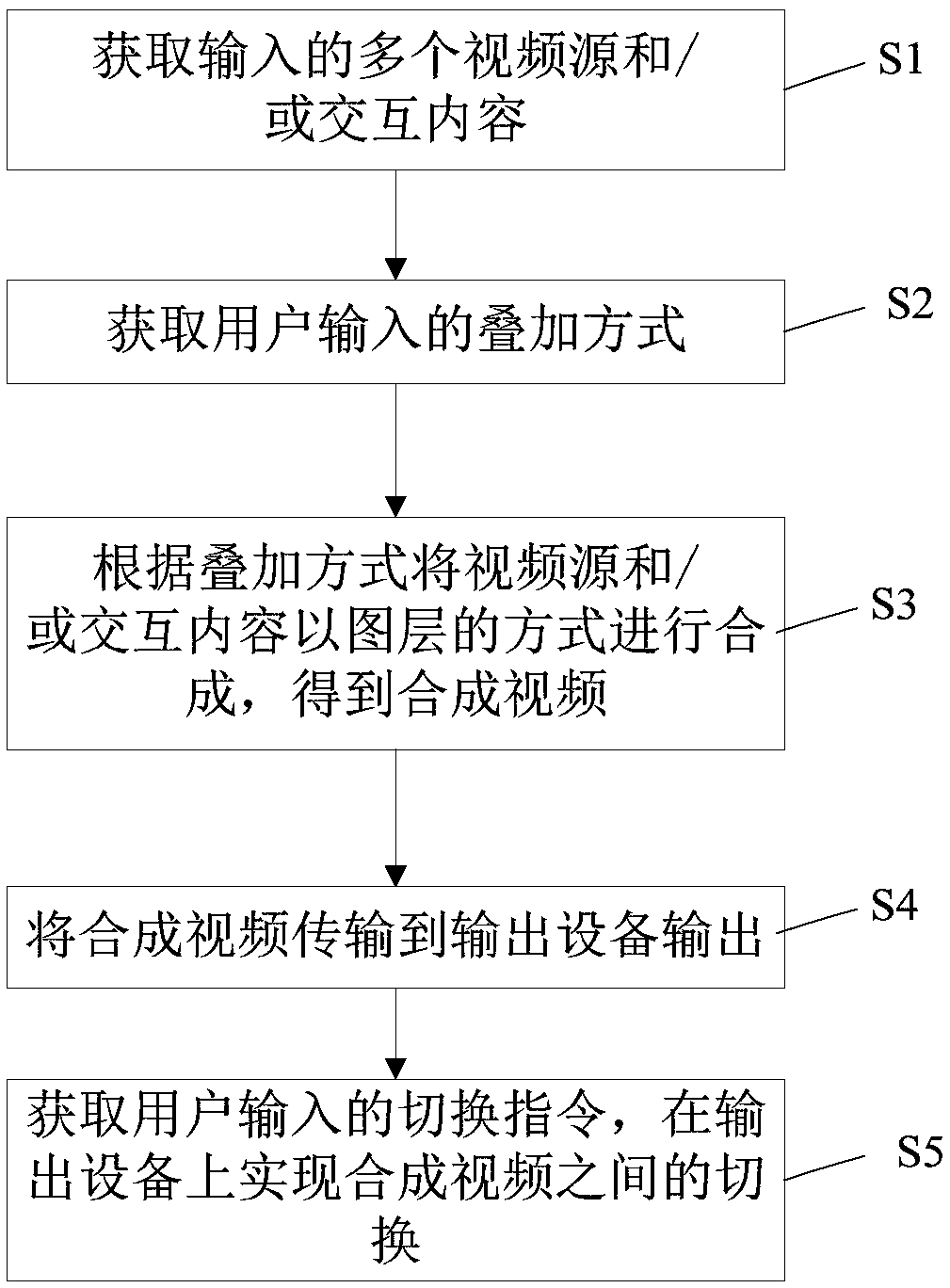

[0062] A real three-dimensional virtual simulation interaction method, see Figure 1, including the following steps:

[0063] S1: Obtain multiple input video sources and/or interactive content;

[0064] S2: Obtain the superimposition method input by the user;

[0065] Specifically, the superimposition method specifies which video sources and/or interactive content are to be superimposed, and the layer assigned to each.

[0066] S3: Synthesize the video sources and/or interactive content in layers according to the superimposition method to obtain a composite video;

[0067] Specifically, for example, suppose the data to be synthesized includes video source A, video source B, interactive content A, and interactive content B. If the superimposition method input by the user selects interactive content A and video source A, with interactive content A superimposed on video source A, then video source A is used as the bottom layer, and interactive cont...
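
The layered synthesis of steps S1 to S3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the names `OverlayEntry` and `composite`, the frame representation (a grid of pixels in which `None` marks a transparent pixel), and the assumption that all inputs share one resolution are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical frame type: rows of pixels, where None means "transparent".
Frame = List[List[Optional[str]]]


@dataclass(frozen=True)
class OverlayEntry:
    """One item of the user's superimposition method (assumed shape)."""
    name: str   # key of a video source or interactive content item
    layer: int  # 0 is the bottom layer; larger values are drawn on top


def composite(inputs: Dict[str, Frame], overlay: List[OverlayEntry]) -> Frame:
    """Paint the selected inputs bottom-to-top in layer order."""
    if not overlay:
        raise ValueError("the superimposition method selects no input")
    ordered = sorted(overlay, key=lambda entry: entry.layer)
    # Copy the bottom layer so the original input is left untouched.
    out = [row[:] for row in inputs[ordered[0].name]]
    for entry in ordered[1:]:
        for y, row in enumerate(inputs[entry.name]):
            for x, pixel in enumerate(row):
                if pixel is not None:  # opaque pixels cover the layers below
                    out[y][x] = pixel
    return out


# S1: the available inputs (tiny 2x2 placeholder frames).
inputs = {
    "video_source_A": [["a", "a"], ["a", "a"]],
    "video_source_B": [["b", "b"], ["b", "b"]],
    "interactive_content_A": [[None, "i"], [None, None]],
    "interactive_content_B": [["j", None], [None, None]],
}
# S2: the user selects interactive content A on top of video source A.
overlay = [
    OverlayEntry("interactive_content_A", layer=1),
    OverlayEntry("video_source_A", layer=0),
]
# S3: layered synthesis.
result = composite(inputs, overlay)
```

Sorting by layer index keeps the order in which the user lists the inputs independent of the order in which they are painted; inputs that the superimposition method does not name (here video source B and interactive content B) are simply ignored.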

Embodiment 2

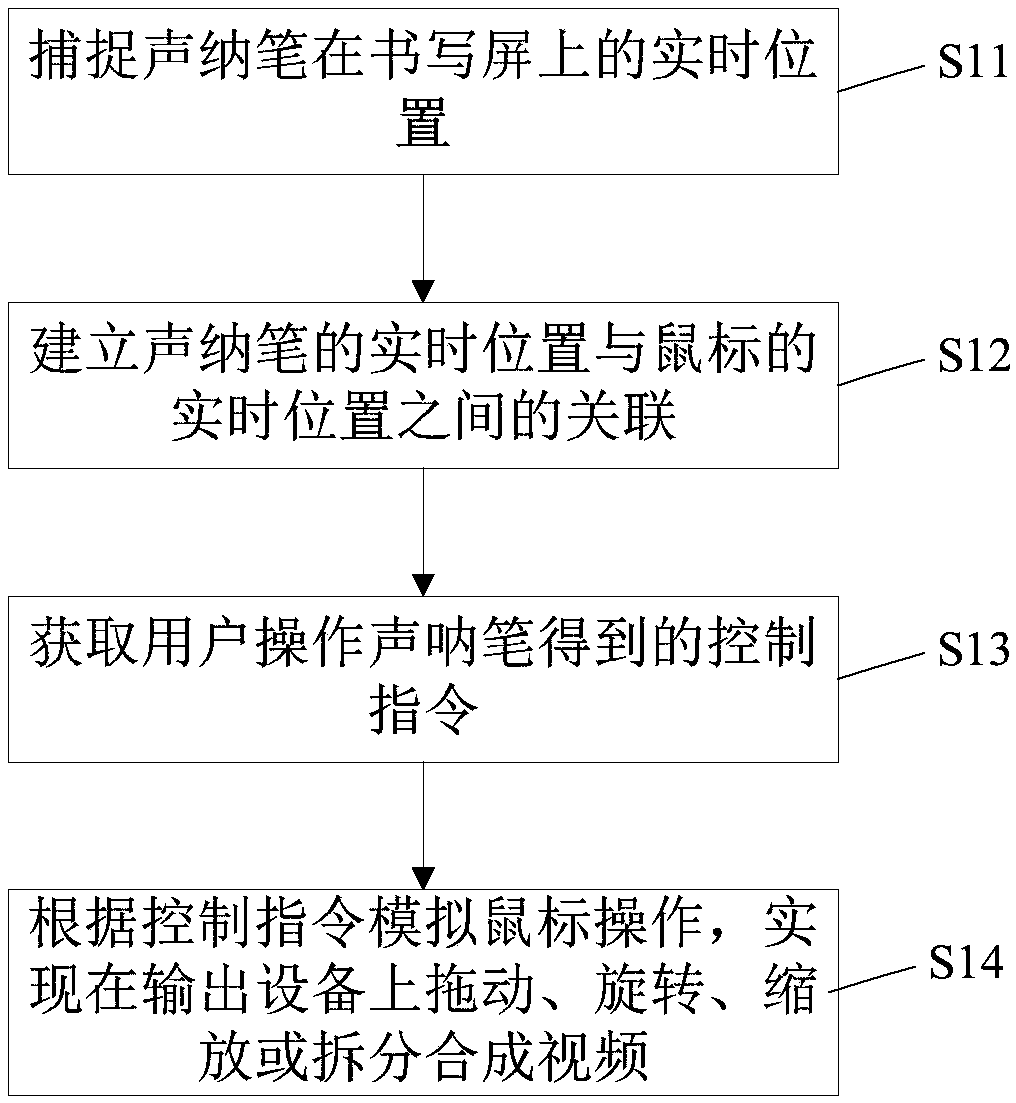

[0074] On the basis of Embodiment 1, Embodiment 2 adds the following interactive functions performed with a sonar pen.

[0075] The output device includes a writing screen. After switching between synthesized videos on the output device, the method further includes the following steps (see Figure 2):

[0076] S11: Capture the real-time position of the sonar pen on the writing screen;

[0077] Specifically, the sonar pen is an existing mature product.

[0078] S12: Establish an association between the real-time position of the sonar pen and the real-time position of the mouse;

[0079] Specifically, after the association is established, the user can directly simulate mouse actions by operating the sonar pen, such as dragging, clicking, and double-clicking.

[0080] S13: Obtain the control instruction generated by the user operating the sonar pen;

[0081] S14: Simulate mouse operations according to the control instruction to drag, rotate, scale or split the synthes...
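
Steps S11 to S14 can be illustrated with a short sketch. It is an assumption-laden example rather than the patented method: the linear scaling from writing-screen coordinates to desktop pixels, the names `Size`, `ViewState`, `pen_to_mouse` and `apply_instruction`, and the instruction parameters are all hypothetical. Splitting is left out because the visible text does not describe how it behaves.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class Size:
    width: float
    height: float


def pen_to_mouse(pen_xy: Tuple[float, float], screen: Size, desktop: Size) -> Tuple[int, int]:
    """S12 (assumed mapping): scale a pen position on the writing screen
    linearly to a mouse position in desktop pixels, clamped to the desktop."""
    px, py = pen_xy
    mx = px / screen.width * desktop.width
    my = py / screen.height * desktop.height
    mx = min(max(mx, 0), desktop.width - 1)
    my = min(max(my, 0), desktop.height - 1)
    return round(mx), round(my)


@dataclass(frozen=True)
class ViewState:
    """Hypothetical display state of the synthesized video."""
    x: float = 0.0
    y: float = 0.0
    angle_deg: float = 0.0
    scale: float = 1.0


def apply_instruction(view: ViewState, kind: str, **params: float) -> ViewState:
    """S14 (assumed dispatch): turn a control instruction into a new view state."""
    if kind == "drag":
        return replace(view, x=view.x + params["dx"], y=view.y + params["dy"])
    if kind == "rotate":
        return replace(view, angle_deg=(view.angle_deg + params["degrees"]) % 360)
    if kind == "scale":
        return replace(view, scale=view.scale * params["factor"])
    raise ValueError(f"unsupported control instruction: {kind}")


# S11/S12: a pen at the centre of a 2.0 x 1.0 writing screen (arbitrary units)
# maps to the centre of a 1920 x 1080 desktop.
mouse = pen_to_mouse((1.0, 0.5), Size(2.0, 1.0), Size(1920, 1080))

# S13/S14: apply a sequence of control instructions.
view = ViewState()
view = apply_instruction(view, "drag", dx=10, dy=-5)
view = apply_instruction(view, "rotate", degrees=90)
view = apply_instruction(view, "scale", factor=2)
```

Keeping the view state immutable and returning a new value for each instruction makes every simulated mouse operation easy to log or undo; a real system would instead forward the mapped coordinates to the operating system's pointer API.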

Embodiment 3

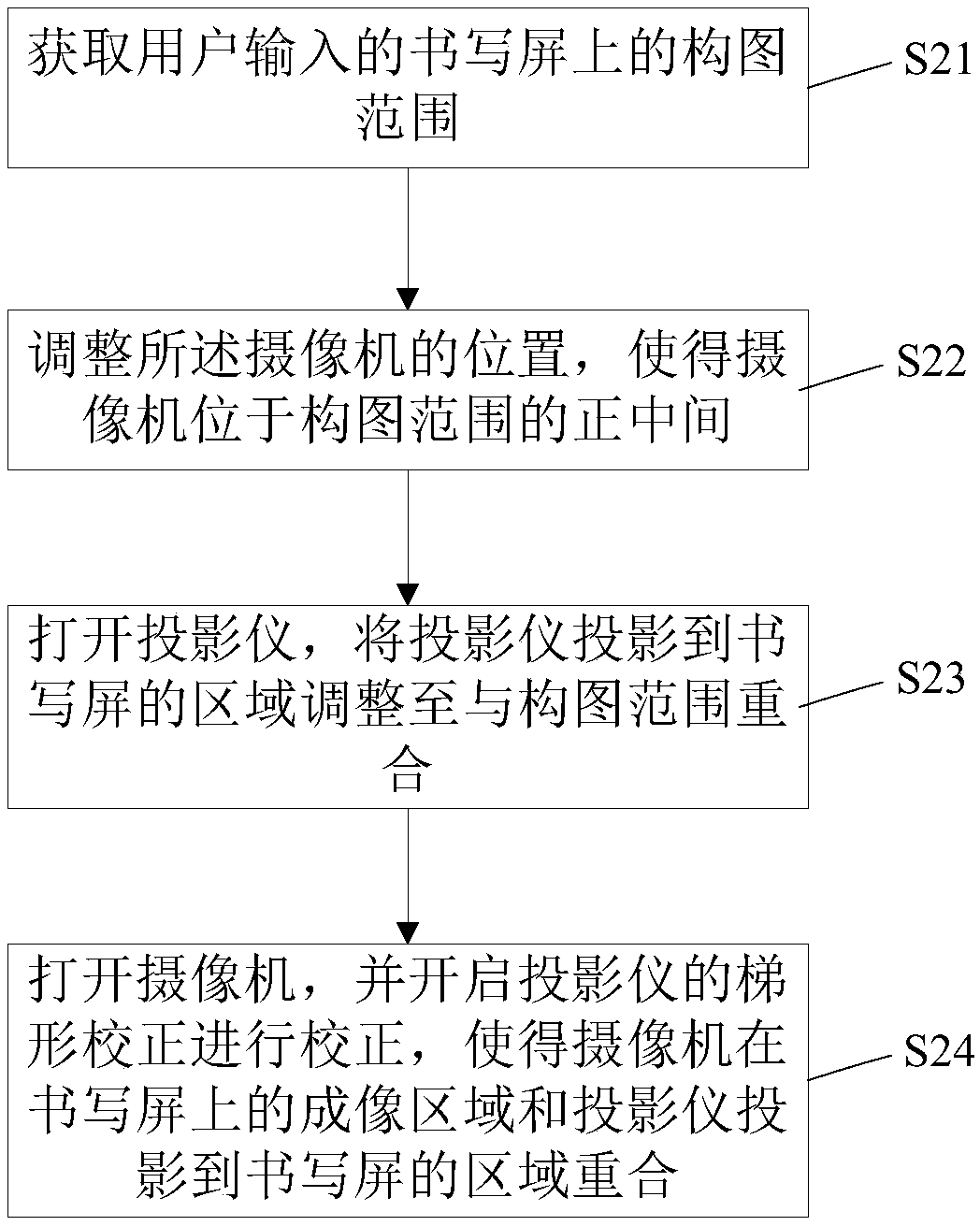

[0087] On the basis of the foregoing embodiments, Embodiment 3 adds the following content:

[0088] The output device also includes a projector and a video camera;

[0089] The writing screen is fixed in front of the wall; the projector is placed between the writing screen and the wall for rear projection; the camera is placed in front of the writing screen, with its lens facing the writing screen.

[0090] Specifically, the writing screen may be a green screen. The distance between the writing screen and the wall is preferably 1.1 m, the distance between the projector and the writing screen is preferably 0.3 m, and the distance between the lens aperture of the camera and the writing screen is preferably 3.3 m. Installing the projector for rear projection suits environments with a small audience and good ambient lighting.
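
The preferred distances above can be captured as a small configuration object, which also makes the implied distances explicit. The sketch below is only an illustration: the class name `StudioLayout`, its field names, and the assumption that the wall, projector, writing screen and camera lie along a single axis are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudioLayout:
    """Distances in metres; defaults are the preferred values from the text."""
    screen_to_wall_m: float = 1.1
    projector_to_screen_m: float = 0.3
    camera_to_screen_m: float = 3.3

    def __post_init__(self) -> None:
        # The projector sits between the writing screen and the wall,
        # so it must be closer to the screen than the wall is.
        if not 0 < self.projector_to_screen_m < self.screen_to_wall_m:
            raise ValueError("projector must fit between the screen and the wall")
        if self.camera_to_screen_m <= 0:
            raise ValueError("camera must be in front of the screen")

    @property
    def projector_to_wall_m(self) -> float:
        """Gap left behind the projector (assumes a single straight axis)."""
        return self.screen_to_wall_m - self.projector_to_screen_m

    @property
    def camera_to_wall_m(self) -> float:
        """Total depth of the setup from camera lens to wall."""
        return self.camera_to_screen_m + self.screen_to_wall_m


layout = StudioLayout()
print(f"projector to wall: {layout.projector_to_wall_m:.1f} m")
print(f"camera to wall:    {layout.camera_to_wall_m:.1f} m")
```

With the preferred values, the projector ends up about 0.8 m from the wall and the whole arrangement needs about 4.4 m of room depth.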

[0091] Further, see Figure 3, the transmission of the synthesized video to the output device output spe...
