Object contour extraction method based on mask-RCNN

A contour extraction technology, applied in biological neural network models, image data processing, instruments, etc., that can solve problems such as incomplete segmentation, inaccurate instance contour detection, and blurred images.


Examples

Embodiment 1

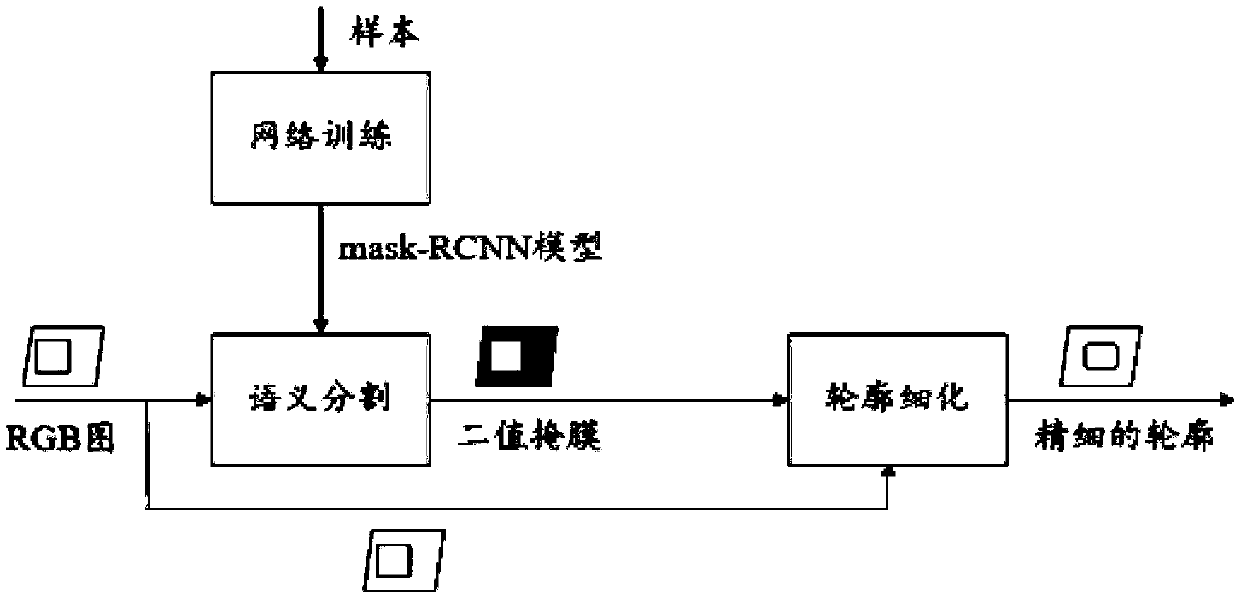

[0110] A preferred embodiment of the present invention provides a method for extracting object contours based on mask-RCNN. The process is as follows:

[0111] Copy the RGB image containing the object whose contour is to be extracted, obtaining two identical images, IM1 and IM2.
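The duplication step can be sketched as follows, assuming the RGB image is held as an H x W x 3 NumPy array. The helper name `duplicate_image` is hypothetical. The point of the sketch is that IM1 and IM2 are pixel-identical but independent in memory, so processing IM1 (which is fed to the segmentation network) cannot alter IM2. What IM2 is used for is not shown in this excerpt.

```python
import numpy as np


def duplicate_image(rgb):
    """Hypothetical helper: return two independent, pixel-identical
    copies (IM1, IM2) of an H x W x 3 RGB image."""
    rgb = np.asarray(rgb)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB array")
    # .copy() allocates new buffers, so later edits to one copy
    # never leak into the other or into the source image.
    im1 = rgb.copy()
    im2 = rgb.copy()
    return im1, im2


# Example with a random 4 x 6 RGB image.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(4, 6, 3), dtype=np.uint8)
IM1, IM2 = duplicate_image(image)
```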

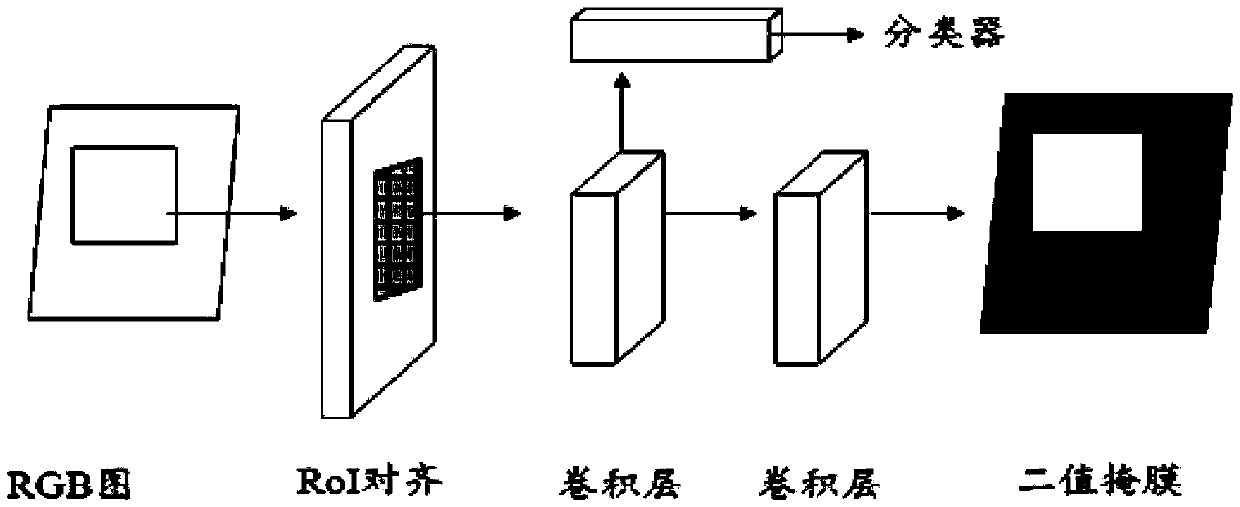

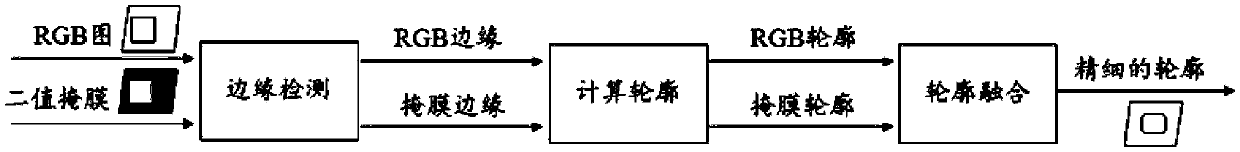

[0112] Step 1: Pre-train the mask-RCNN model on the obtained ImageNet training samples, then input IM1 into the resulting mask-RCNN model and perform semantic segmentation to obtain a binary mask image for each object in the scene. Suppose the mask image K1 of object A is currently obtained: K1 has the same size as IM1, the region where object A is located is marked 1, and all other regions of the mask image are marked 0. Because, in actual operation, the object A region of the mask image does not completely coincide with the object A region of the original RGB image and its edges are rough, further edge refinement operations are perfo...
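As a minimal sketch of how per-object binary masks such as K1 can be formed, assume the network returns one soft mask per detected instance with values in the 0 to 1 range (torchvision's Mask R-CNN, for example, returns soft masks of shape N x 1 x H x W that are typically thresholded at 0.5). The helpers `binarize_instance_masks` and `mask_boundary` are hypothetical, and the 0.5 threshold is an assumption. `mask_boundary` only marks the coarse edge pixels of K1 that a refinement step would act on; it is not the patent's edge refinement, whose details are truncated in this excerpt.

```python
import numpy as np


def binarize_instance_masks(soft_masks, threshold=0.5):
    """Hypothetical helper: turn per-instance soft masks of shape
    (N, 1, H, W) or (N, H, W), values in [0, 1], into binary uint8
    masks of shape (N, H, W): 1 in the object region, 0 elsewhere."""
    masks = np.asarray(soft_masks, dtype=np.float32)
    if masks.ndim == 4:
        masks = masks[:, 0]  # drop the singleton channel axis
    return (masks >= threshold).astype(np.uint8)


def mask_boundary(mask):
    """Hypothetical helper: mark object pixels (value 1) that have at
    least one background pixel among their 4-neighbours."""
    m = np.asarray(mask, dtype=bool)
    # Pad with background so object pixels on the image border count
    # as edge pixels.
    p = np.pad(m, 1, mode="constant", constant_values=False)
    # A pixel is interior if it and its up/down/left/right neighbours
    # are all object pixels.
    interior = (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
                & p[1:-1, :-2] & p[1:-1, 2:])
    return (m & ~interior).astype(np.uint8)


# Made-up soft output for a 5 x 5 image with one detected object A.
soft = np.zeros((1, 1, 5, 5), dtype=np.float32)
soft[0, 0, 1:4, 1:4] = 0.9  # confident 3 x 3 block
soft[0, 0, 0, 2] = 0.3      # weak response, removed by the threshold

K = binarize_instance_masks(soft)
K1 = K[0]                   # binary mask of object A, same size as IM1
edge = mask_boundary(K1)    # the 8 rim pixels of the 3 x 3 block
```

Thresholding keeps the mask the same size as the input image, matching the description of K1; the boundary map makes visible where the rough edges lie that the subsequent refinement is meant to correct.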
