A Sound Scene Recognition Method Based on Label Augmentation and Multispectrum Fusion

A sound scene recognition method using multi-spectrum fusion, applied in the field of scene recognition. It addresses the problem that existing approaches do not consider clustering to extract super-category labels, and aims to achieve fast training convergence, system robustness, and optimized performance.


Embodiment

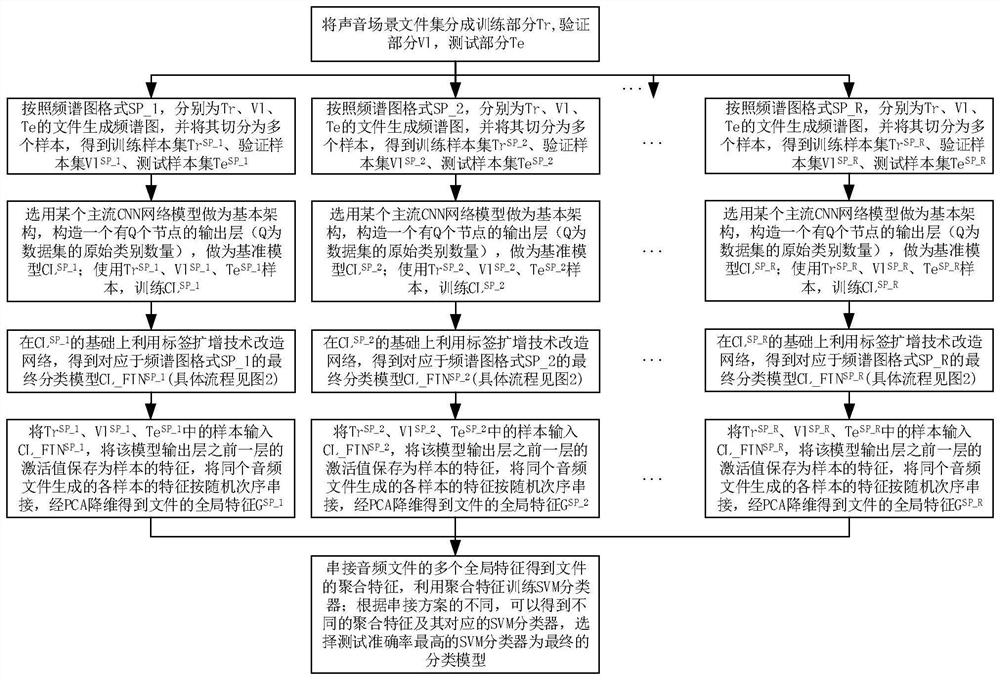

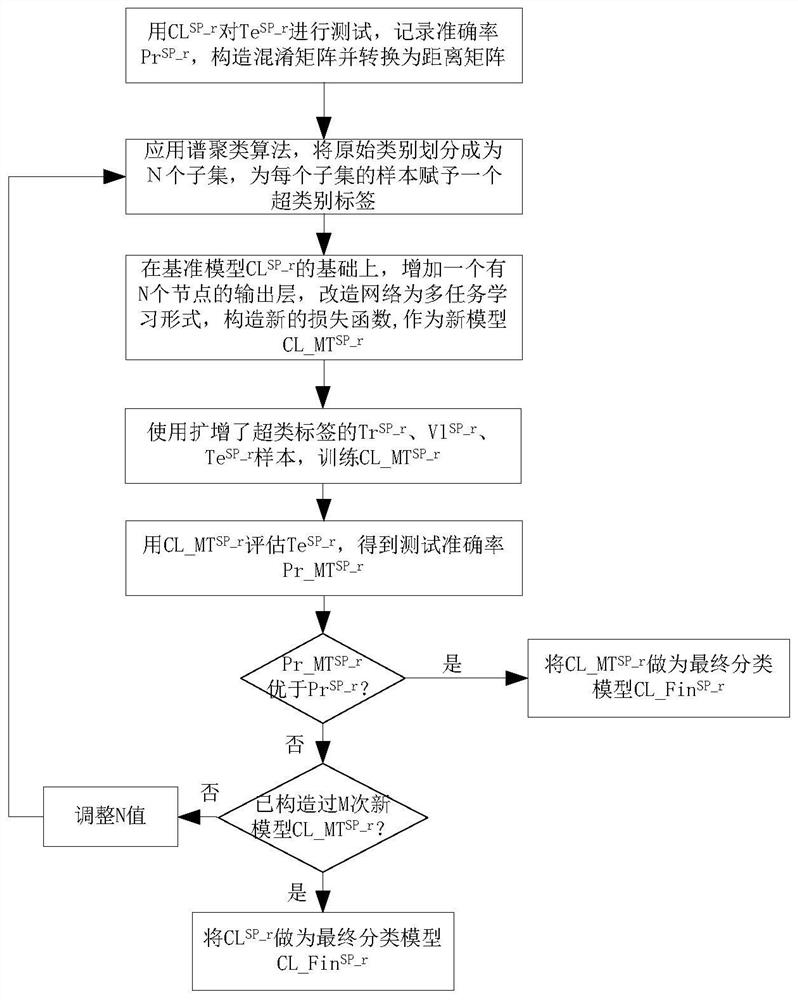

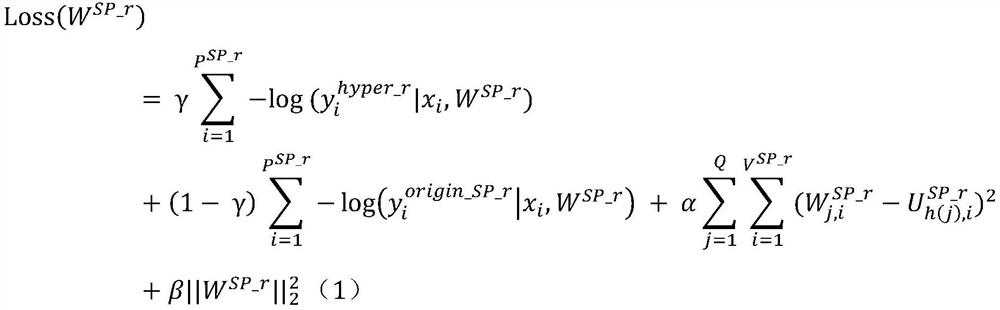

[0030] As shown in Figure 1, in this embodiment, a sound scene recognition method based on label augmentation and multi-spectrum fusion includes the following steps:

[0031] Step S1: The data set used in this embodiment consists of the Development file set and the Evaluation file set of the DCASE2017 sound scene recognition task. 90% of the Development file set is used as the training part Tr, the remaining 10% is used as the validation part V1, and the Evaluation file set is used as the test part Te. The audio files in each file set are 10 seconds long. Without loss of generality, this embodiment uses only two spectrogram formats to describe the implementation steps: the STFT spectrogram and the CQT spectrogram.
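The 90/10 partition of the Development file set in Step S1 can be sketched as follows. This is a minimal illustration, not the patent's procedure: the text does not say whether the split is random or stratified by scene class, so a seeded random shuffle is assumed here, and the function name `split_development_set` and the file names are hypothetical.

```python
import random


def split_development_set(files, train_fraction=0.9, seed=0):
    """Split a list of files into a training part Tr and a validation part V1.

    Assumption: a seeded random shuffle; the source does not specify
    how the 90% / 10% partition is chosen.
    """
    shuffled = list(files)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(train_fraction * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the Development file set.
development = [f"dev_{i:04d}.wav" for i in range(100)]
Tr, V1 = split_development_set(development)
print(len(Tr), len(V1))
```

Fixing the seed keeps Tr and V1 identical across runs, so results obtained with different spectrogram formats are compared on the same validation files.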

[0032] Step S2: Take the audio files from Tr one by one, obtain the STFT time-frequency feature values through operations such as framing, windowing, and short-time Fourier transform, and organize the time-fre...
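The framing, windowing, and short-time Fourier transform operations named in Step S2 can be illustrated with the sketch below. It rests on several assumptions: the source does not give the frame length, hop size, or window type, so the values used here (`frame_len=64`, `hop=32`, a Hann window) are placeholders, and the function names are hypothetical. A naive DFT from the standard library is used only to keep the example self-contained; a practical system would use an FFT routine.

```python
import cmath
import math


def hann_window(n):
    """Periodic Hann window of length n (window type is an assumption)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def stft_magnitude(signal, frame_len=64, hop=32):
    """Return a list of frames, each a list of magnitudes for bins 0..frame_len/2.

    Steps: framing (slice the signal), windowing (multiply by Hann),
    then a DFT of each windowed frame.
    """
    window = hann_window(frame_len)
    n_bins = frame_len // 2 + 1
    spectrogram = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        spectrum = []
        for k in range(n_bins):
            acc = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            spectrum.append(abs(acc))
        spectrogram.append(spectrum)
    return spectrogram


# Synthetic test tone: exactly 8 cycles per 64-sample frame.
tone = [math.sin(2 * math.pi * 8 * n / 64) for n in range(256)]
spec = stft_magnitude(tone)
peak_bin = spec[0].index(max(spec[0]))
print(len(spec), len(spec[0]), peak_bin)
```

Each inner list is one time frame, so the result is a time-by-frequency matrix of magnitudes for one audio file.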
