Voice interaction method and device, equipment and storage medium

A voice interaction technology, applied in the field of human-computer interaction, that addresses the problems of being unable to recognize the user's emotional state and unable to provide response services personalized to that state, and achieves the effect of improving effectiveness and accuracy.


Examples

Embodiment 1

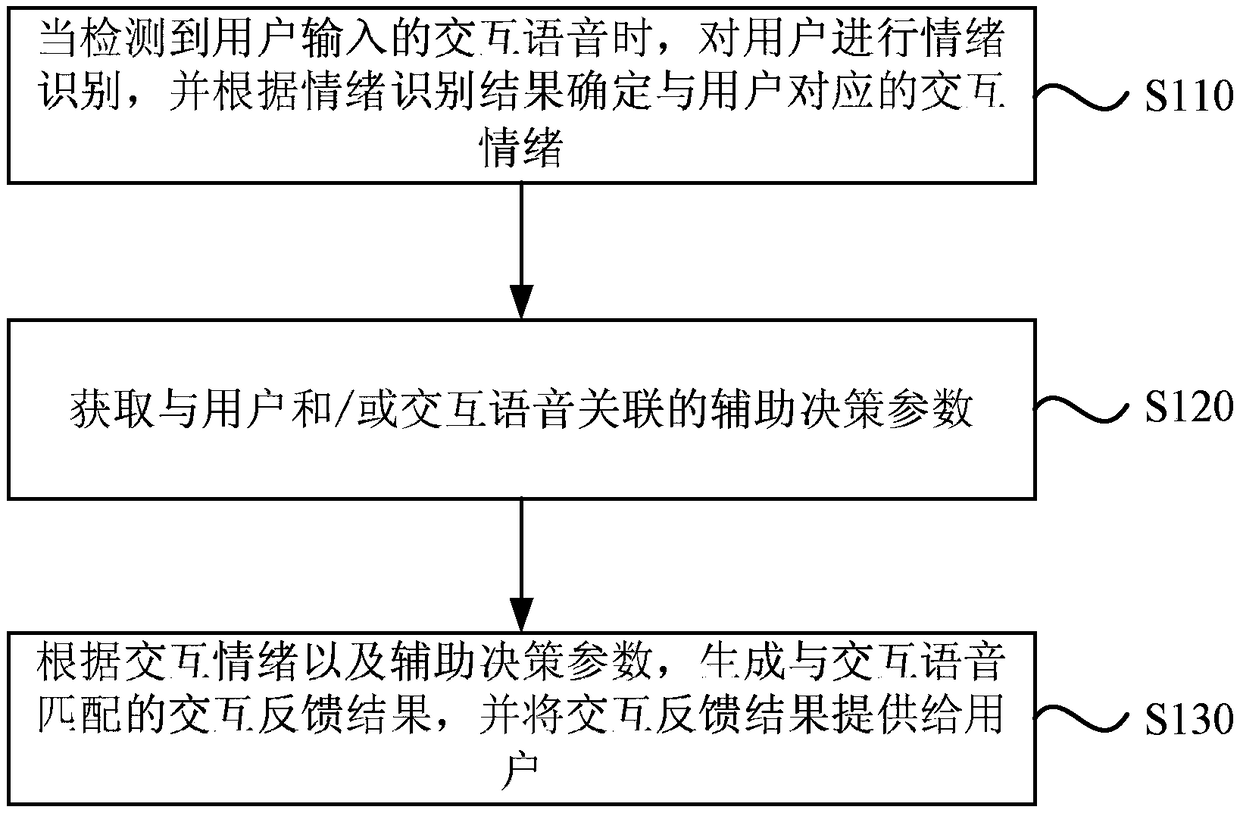

[0030] Figure 1a is a flowchart of a voice interaction method provided by Embodiment 1 of the present invention. This embodiment is applicable to recognizing emotions in interactive voice. The method can be executed by a voice interaction device, which can be implemented in hardware and/or software and can generally be integrated in computers, servers, and any terminal that includes a voice interaction function. As shown in Figure 1a, the method specifically includes the following steps:

[0031] S110. When an interaction voice input by the user is detected, perform emotion recognition on the user and determine the interaction emotion corresponding to the user according to the emotion recognition result.

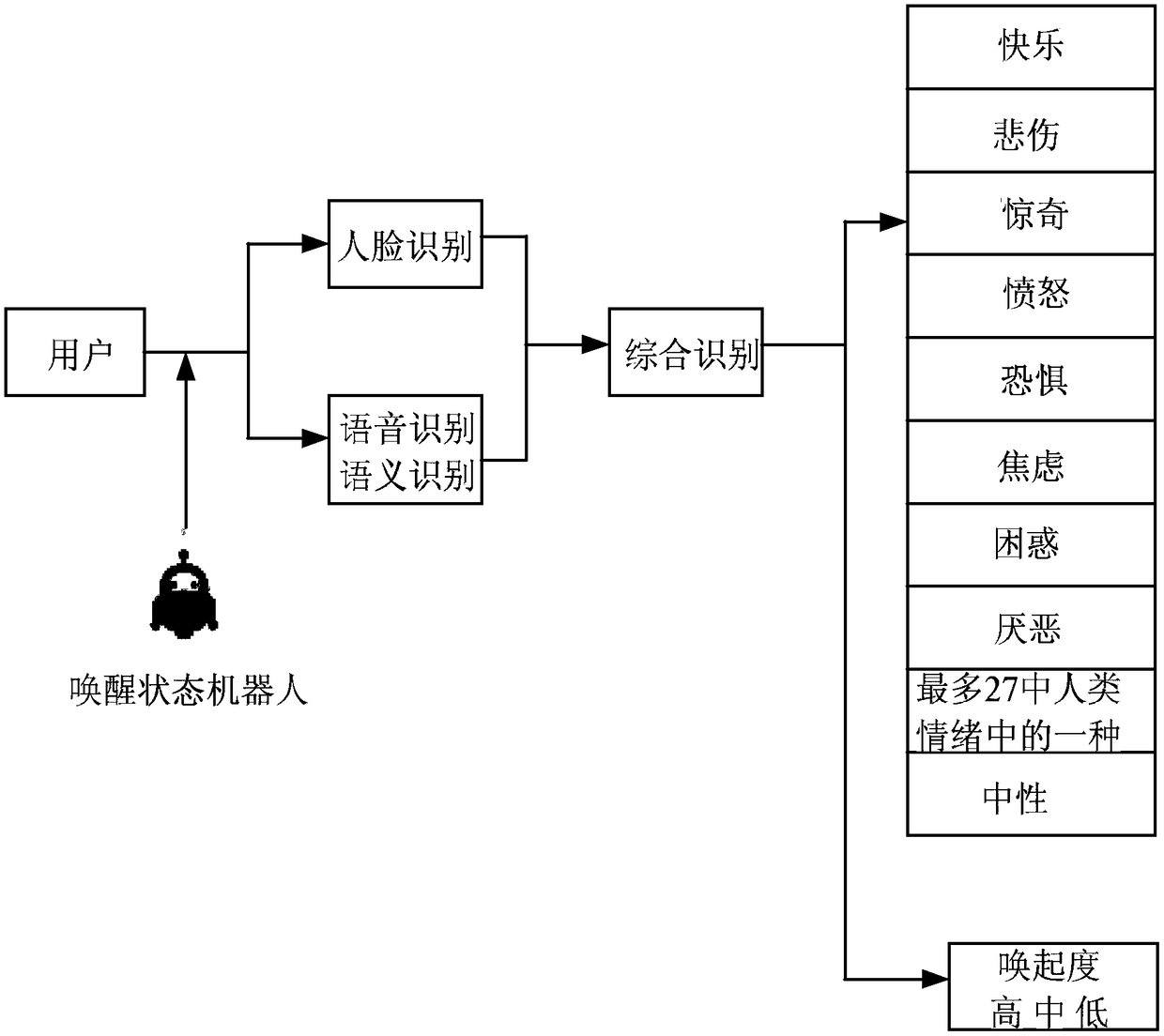

[0032] Here, the emotion can be one of the six classic psychological emotions (happiness, sadness, surprise, anger, fear, and disgust) or one of the 27 psychological emotions, which are: admiration, adoration, appreciation, entert...
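As a minimal sketch of how the six-emotion set named in [0032] might be represented in software, the Python snippet below defines an enumeration and a helper that maps a recognizer's raw label onto it. The names `BasicEmotion` and `to_interaction_emotion`, and the idea that the recognizer returns a text label, are assumptions made for illustration and are not taken from the patent. The 27-emotion set is omitted because the source list is truncated.

```python
from enum import Enum


class BasicEmotion(Enum):
    """The six classic emotions listed in paragraph [0032]."""
    HAPPINESS = "happiness"
    SADNESS = "sadness"
    SURPRISE = "surprise"
    ANGER = "anger"
    FEAR = "fear"
    DISGUST = "disgust"


def to_interaction_emotion(label: str) -> BasicEmotion:
    """Map a raw recognizer label (hypothetical format) onto the six-emotion set.

    Raises ValueError when the label is not one of the six emotions.
    """
    return BasicEmotion(label.strip().lower())
```

Restricting the result to a closed set means that an unexpected label fails immediately with a `ValueError` instead of propagating into later steps.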

Embodiment 2

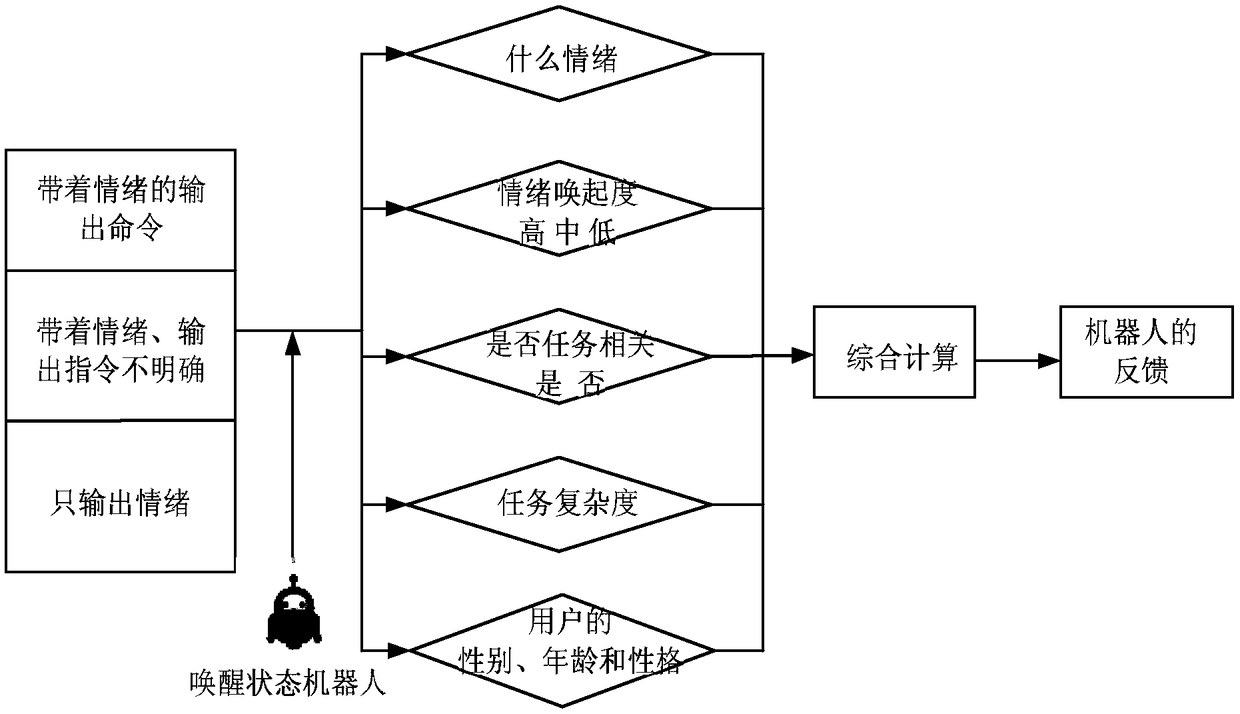

[0058] Figure 2 is a flowchart of a voice interaction method provided by Embodiment 2 of the present invention. As a further explanation of the above embodiment, and as shown in Figure 2, the method includes the following steps:

[0059] S210. When an interaction voice input by the user is detected, perform emotion recognition on the user, and determine an interaction emotion corresponding to the user according to an emotion recognition result.

[0060] S220. Acquire auxiliary decision-making parameters associated with the user and/or the interactive voice.

[0061] S230. Generate an interaction feedback result matching the interaction voice according to the interaction emotion and auxiliary decision parameters, and provide the interaction feedback result to the user.

[0062] S240. If a new interactive voice input by the user in response to the currently provided interaction feedback result is received, acquire historical machine feedback emotions and historical machine feedback patte...
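Steps S210 to S230 above can be read as a three-stage pipeline: recognize the emotion, gather auxiliary parameters, then generate feedback from both. The Python sketch below illustrates that control flow only. Everything in it is hypothetical: the function names, the use of a text string to stand in for the voice input, and the toy rules inside the stand-in functions are assumptions for illustration, since the source does not specify how each stage is implemented. S240 is not covered because its description is truncated.

```python
from typing import Callable, Dict

Params = Dict[str, str]


def handle_interaction(
    voice_text: str,
    recognize_emotion: Callable[[str], str],
    get_auxiliary_params: Callable[[str], Params],
    generate_feedback: Callable[[str, str, Params], str],
) -> str:
    """Run one interaction turn following the order of S210, S220, S230."""
    emotion = recognize_emotion(voice_text)            # S210: interaction emotion
    params = get_auxiliary_params(voice_text)          # S220: auxiliary parameters
    return generate_feedback(voice_text, emotion, params)  # S230: feedback result


# Toy stand-ins (assumptions, not the patent's recognizers or generators).
def toy_recognizer(voice_text: str) -> str:
    return "anger" if "!" in voice_text else "happiness"


def toy_params(voice_text: str) -> Params:
    return {"channel": "demo"}


def toy_feedback(voice_text: str, emotion: str, params: Params) -> str:
    tone = "calm" if emotion == "anger" else "cheerful"
    return f"[{tone}] reply via {params['channel']}"


reply = handle_interaction("Where is my order!", toy_recognizer, toy_params, toy_feedback)
```

Passing the three stages in as callables keeps the sketch independent of any particular recognizer or response generator.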

Embodiment 3

[0072] Figure 3a is a flowchart of a voice interaction method provided by Embodiment 3 of the present invention. As a further explanation of the above embodiments, and as shown in Figure 3a, the method includes the following steps:

[0073] S310. When the interactive voice input by the user is detected, acquire confidence levels for at least two preset emotions as emotion recognition results, based respectively on the face image, the emotional feature information in the interactive voice, and the semantic recognition result of the interactive voice.

[0074] S320. Across the at least two emotion recognition results obtained by using at least two emotion recognition methods, calculate the comprehensive confidence corresponding to each preset emotion from the confidences assigned to that same preset emotion, using a preset weighting algorithm; then, according to the comprehensive confidence calculation result, determine the interaction emotion corresp...
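The fusion described in S310 and S320 can be illustrated with a short Python sketch. The source says only that a "preset weighting algorithm" is used, so the weighted average below is an assumed stand-in, and choosing the emotion with the highest comprehensive confidence is likewise an assumption because the paragraph is truncated. The function names, method names ("face", "voice", "semantic"), weights, and confidence values are hypothetical.

```python
from typing import Dict


def fuse_confidences(
    results: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-method confidences into one score per preset emotion.

    results maps method name -> {emotion: confidence}.
    weights maps method name -> weight (assumed weighted-average scheme).
    """
    emotions = set().union(*(r.keys() for r in results.values()))
    total_weight = sum(weights[m] for m in results)
    if emotions and total_weight <= 0:
        raise ValueError("weights of the used methods must sum to a positive value")
    return {
        e: sum(weights[m] * results[m].get(e, 0.0) for m in results) / total_weight
        for e in emotions
    }


def pick_interaction_emotion(combined: Dict[str, float]) -> str:
    """Assumed rule: take the emotion with the highest comprehensive confidence."""
    return max(combined, key=combined.get)


# Illustrative inputs only; none of these numbers come from the patent.
results = {
    "face": {"anger": 0.6, "sadness": 0.4},
    "voice": {"anger": 0.2, "sadness": 0.8},
    "semantic": {"anger": 0.5, "sadness": 0.5},
}
weights = {"face": 0.5, "voice": 0.3, "semantic": 0.2}

combined = fuse_confidences(results, weights)
interaction_emotion = pick_interaction_emotion(combined)
```

In this toy input the face channel alone favors anger, but the voice channel's strong sadness score outweighs it once the three channels are combined.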
