Unmanned aerial vehicle autonomous positioning method based on visual SLAM (Simultaneous Localization and Mapping)

An unmanned aerial vehicle self-positioning technology, applied in the fields of image processing and computer vision, that addresses problems such as increased difficulty and a large amount of calculation, and achieves improved stability and reliability and good scalability.

Embodiment Construction

[0036] The functions and characteristics of the present invention are as follows:

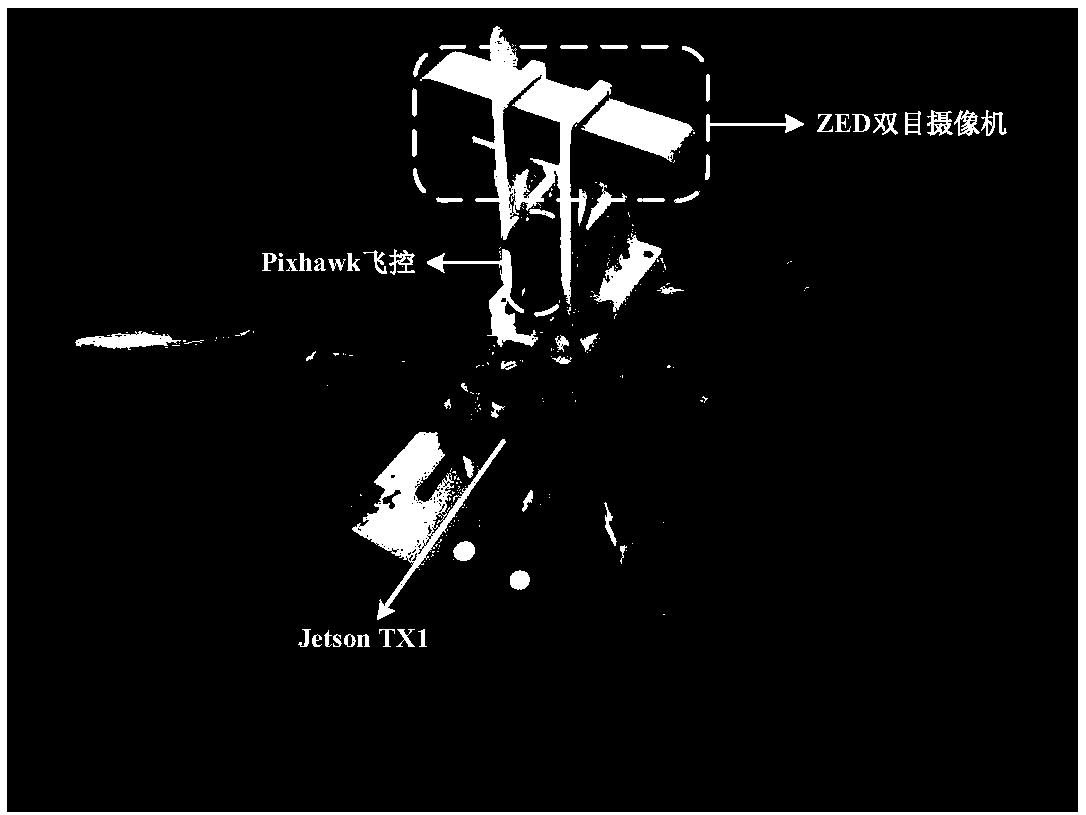

[0037] (1) The present invention has a binocular camera for collecting images in front of the drone, and an IMU for reading acceleration and angular velocity information;

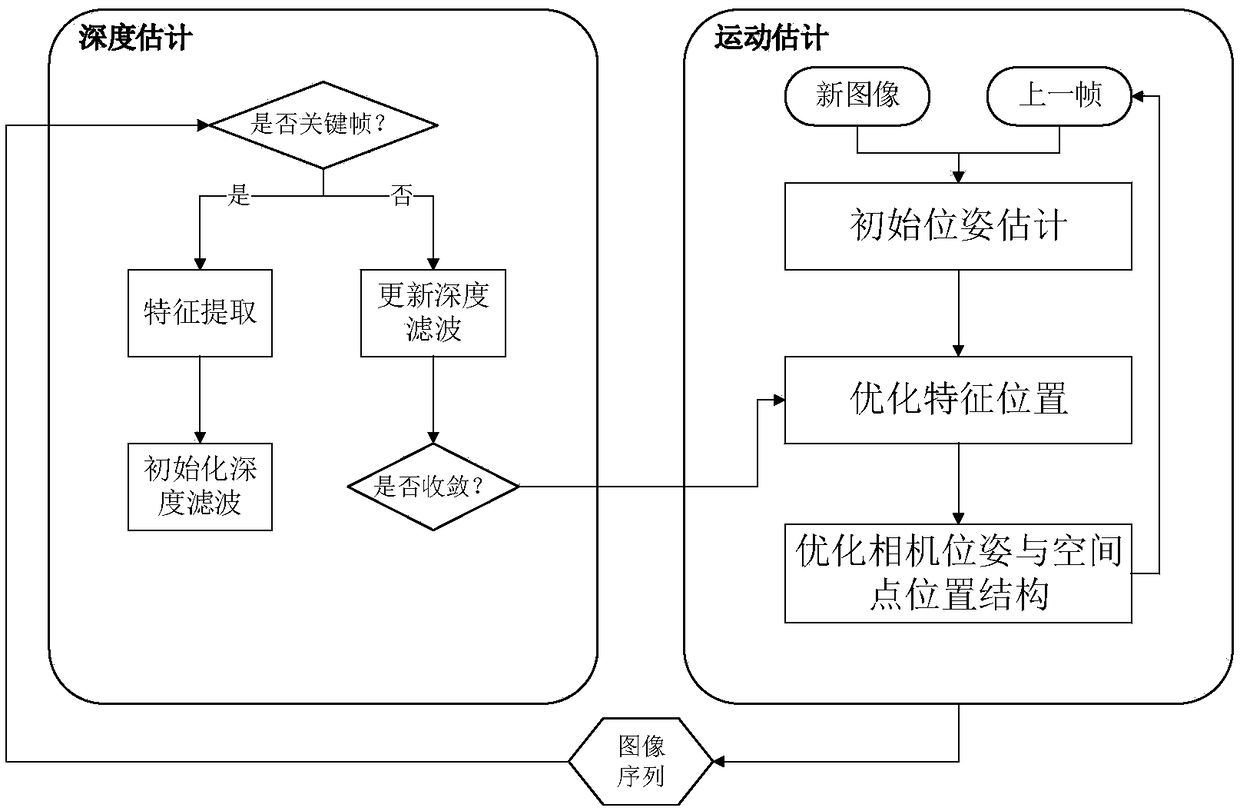

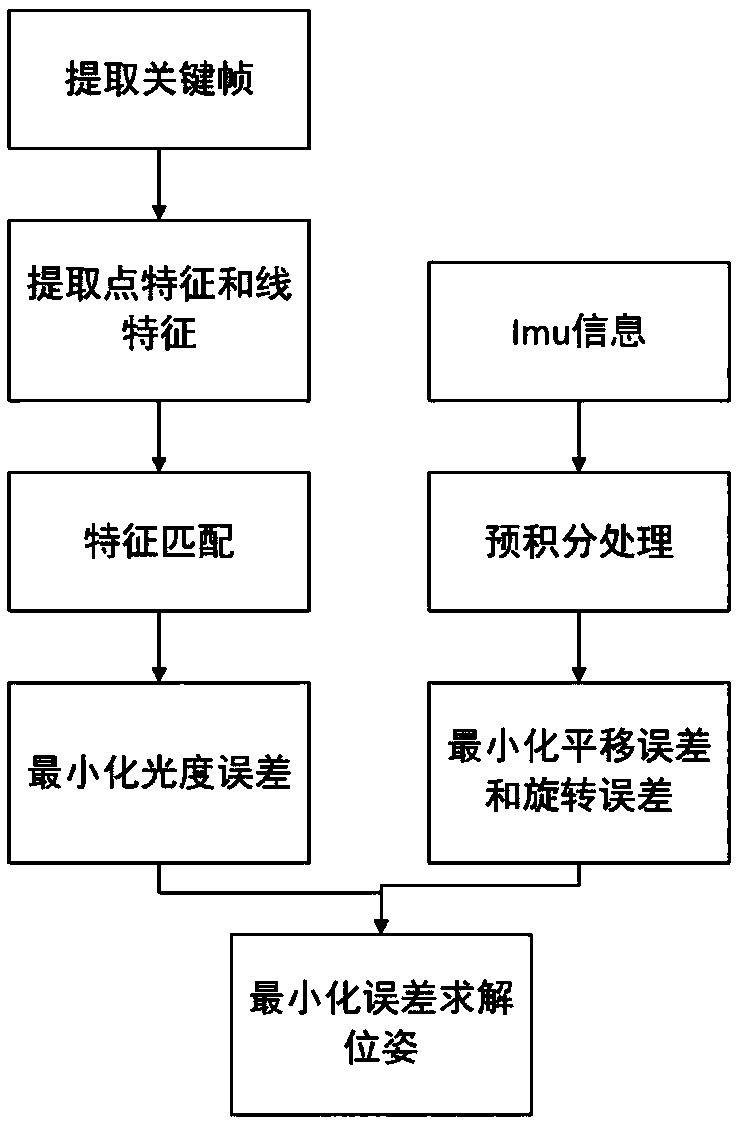

[0038] (2) The present invention processes the images collected by the camera using computer vision, visual SLAM, and related techniques: it screens out key frames through a selection mechanism, extracts features, and performs feature matching to obtain an initial estimate of the drone's pose. It then processes the IMU information, fuses the two, and finally obtains the precise position of the drone through a series of optimizations.
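The flow in (2) can be illustrated with a minimal Python sketch. This excerpt does not specify the key-frame mechanism or the fusion and optimization steps, so everything below is an assumption made for illustration: the `Frame` fields, the two key-frame thresholds, the constant-acceleration IMU prediction, and the fixed-weight blend in `fuse` (a simple complementary-style stand-in, not the optimization the patent refers to) are all hypothetical.

```python
from dataclasses import dataclass
import math


@dataclass
class Frame:
    index: int
    tracked_ratio: float  # assumed: fraction of last key frame's features still matched (0..1)
    position: tuple       # assumed: visual position estimate (x, y, z) in metres


def is_keyframe(frame, last_kf, min_tracked_ratio=0.6, min_translation=0.2):
    """Hypothetical criterion: promote a frame when tracking degrades or the camera has moved enough."""
    if last_kf is None:
        return True  # the first frame always starts the map
    moved = math.dist(frame.position, last_kf.position)
    return frame.tracked_ratio < min_tracked_ratio or moved > min_translation


def select_keyframes(frames):
    """Walk the frames in order and keep those that pass the criterion."""
    keyframes, last = [], None
    for frame in frames:
        if is_keyframe(frame, last):
            keyframes.append(frame)
            last = frame
    return keyframes


def imu_predict(position, velocity, accel, dt):
    """Constant-acceleration prediction; accel is assumed gravity-compensated and in the world frame."""
    new_pos = tuple(p + v * dt + 0.5 * a * dt * dt
                    for p, v, a in zip(position, velocity, accel))
    new_vel = tuple(v + a * dt for v, a in zip(velocity, accel))
    return new_pos, new_vel


def fuse(visual_pos, imu_pos, visual_weight=0.8):
    """Fixed-weight blend of the visual and IMU-predicted positions (illustrative stand-in only)."""
    return tuple(visual_weight * v + (1.0 - visual_weight) * i
                 for v, i in zip(visual_pos, imu_pos))


if __name__ == "__main__":
    frames = [
        Frame(0, 1.0, (0.00, 0.0, 0.0)),
        Frame(1, 0.9, (0.05, 0.0, 0.0)),
        Frame(2, 0.5, (0.10, 0.0, 0.0)),
        Frame(3, 0.9, (0.15, 0.0, 0.0)),
        Frame(4, 0.9, (0.50, 0.0, 0.0)),
    ]
    print([f.index for f in select_keyframes(frames)])
    predicted, _ = imu_predict((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), 0.5)
    print(fuse((1.0, 0.0, 0.0), predicted))
```

A practical system would replace the fixed blend with a filter or a joint optimization over visual and inertial residuals; the sketch only shows where each stage sits in the data flow.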

[0039] (3) A method combining the direct method and the feature point method is adopted, which draws on the advantages of both and greatly improves efficiency.
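For context, the two approaches named in (3) are usually distinguished by the residual they minimize over the camera pose. The forms below are the standard textbook ones, not the specific formulation of the present invention, and the symbols are assumptions introduced here: $T$ is the camera pose, $\pi$ the camera projection, $X_i$ a 3D point, $u_i$ its matched pixel observation in the current image, $p_i$ its pixel in the reference image, and $I_1$, $I_2$ the reference and current image intensities.

$$
E_{\text{feat}}(T) = \sum_i \left\| u_i - \pi(T X_i) \right\|^2
$$

$$
E_{\text{photo}}(T) = \sum_i \left( I_2\big(\pi(T X_i)\big) - I_1(p_i) \right)^2
$$

The feature point method needs descriptor extraction and matching to obtain $u_i$ but is robust to large motion; the direct method skips matching and works on raw intensities, which is cheaper per frame but relies on small inter-frame motion and stable brightness. A combined scheme can, for example, use one where the other is weak, although how the present invention splits the work is not stated in this excerpt.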

[0040] (4) When extracting features, the positioning method in the present invention divi...
