Planetary landing image and distance measurement fusion relative navigation method

A relative navigation technology for planetary landing, applied in the field of deep space exploration


Embodiment Construction

[0062] In order to better illustrate the purpose and advantages of the present invention, the content of the invention will be further described below in conjunction with the accompanying drawings and embodiments.

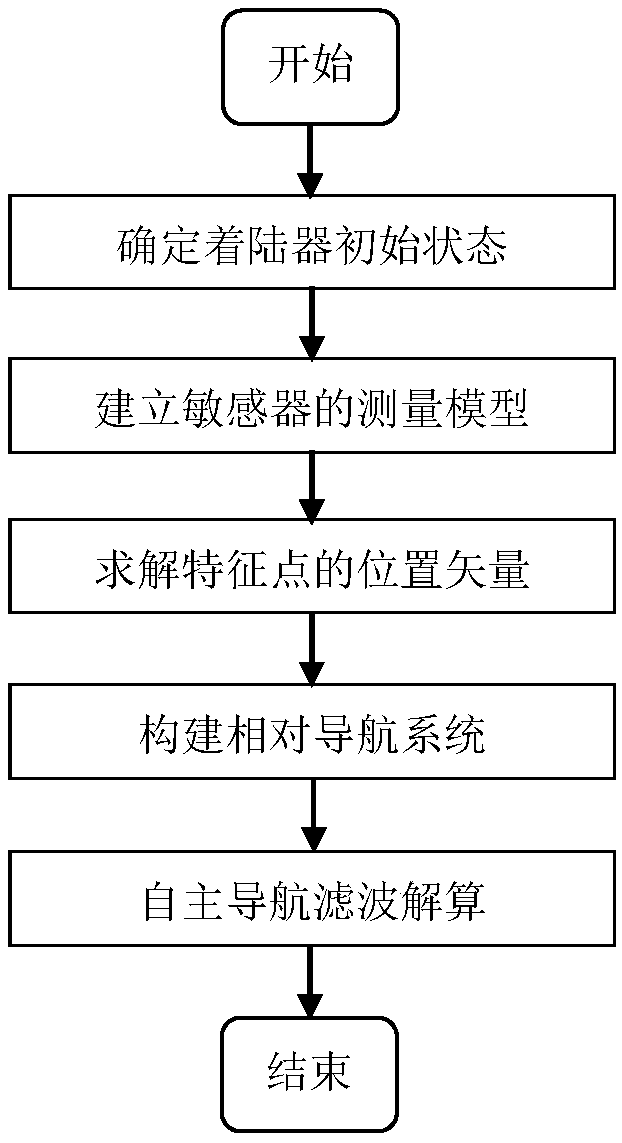

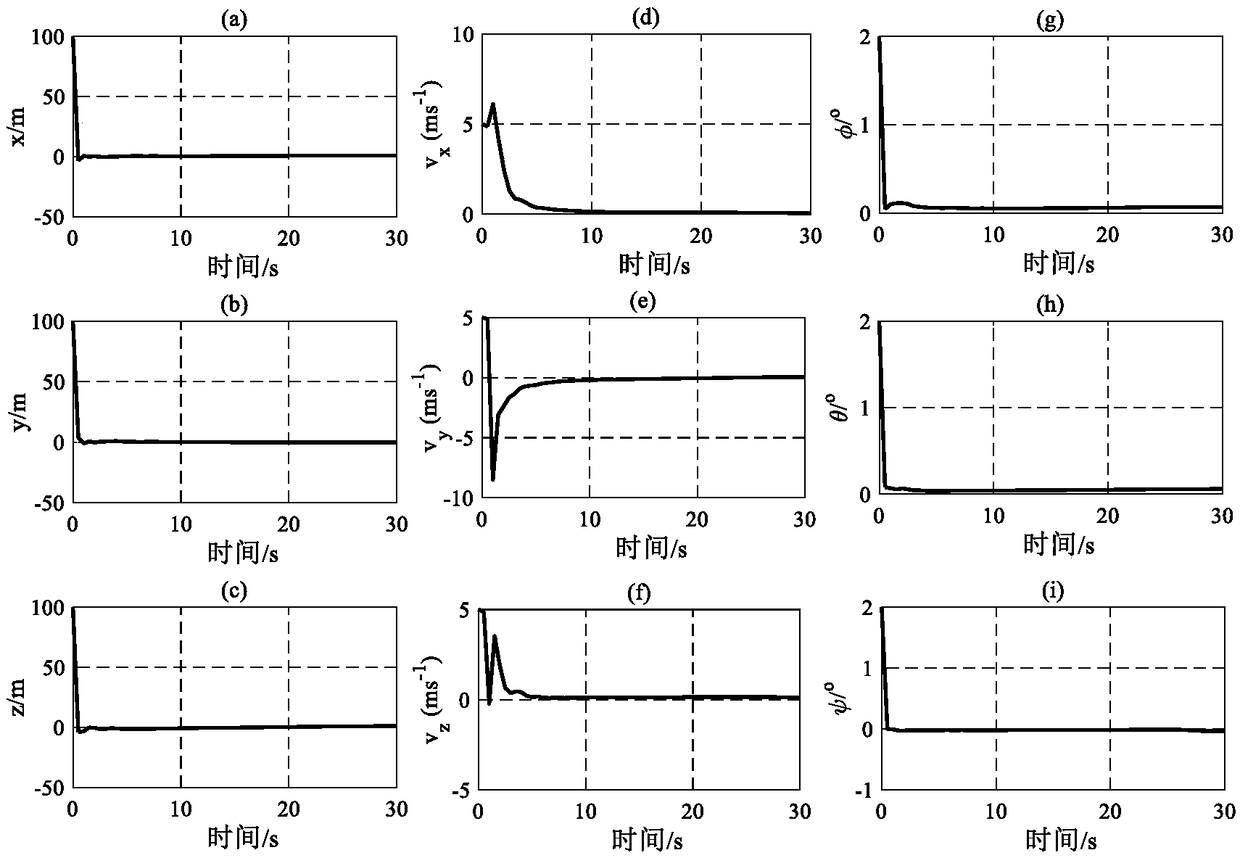

[0063] As shown in Figure 1, this example addresses relative optical navigation during the powered descent phase of a Mars landing. It combines the measurements of an optical camera and a three-beam rangefinder and uses an extended Kalman filter for the filtering calculation, to achieve high-precision navigation during powered descent. The specific implementation of this example is as follows:

[0064] Step 1: Establish the measurement models of the optical camera and the rangefinder

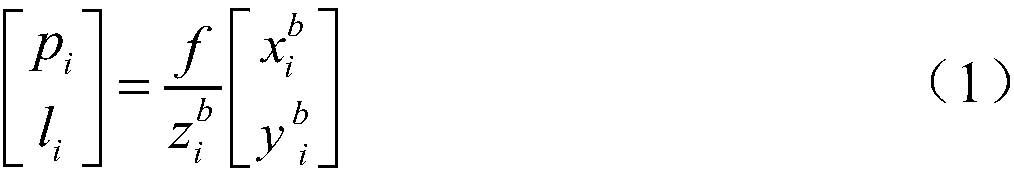

[0065] The measurement model of the optical camera is shown in formula (1).

[0066]

[0067] In the formula, $p_i$ and $l_i$ are the pixel coordinates of the i-th feature point on the image plane, f is the focal length of the camera, is the three-axis posit...
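Formula (1) is not reproduced in this text, so the following is only a minimal sketch of a camera measurement model of this kind, assuming the standard pinhole projection. The function name `project_feature`, the camera-frame convention (z-axis along the optical axis), and the absence of any sign flips or pixel offsets are assumptions for illustration, not the patent's stated formula.

```python
def project_feature(point_cam, f):
    """Project a 3-D feature point onto the image plane (pinhole model).

    point_cam: (x, y, z) position of the feature point expressed in the
               camera frame, with z measured along the optical axis
               (assumed convention, not taken from the patent text).
    f:         focal length of the camera, in the same units as the
               returned image-plane coordinates.
    Returns the image-plane coordinates (p, l) of the feature point.
    """
    x, y, z = point_cam
    if z <= 0:
        # A point on or behind the image plane cannot be observed.
        raise ValueError("feature point must be in front of the camera (z > 0)")
    p = f * x / z
    l = f * y / z
    return p, l


if __name__ == "__main__":
    # Hypothetical feature point 4 units in front of the camera.
    print(project_feature((1.0, 2.0, 4.0), 2.0))
```

In a filter such as the extended Kalman filter mentioned above, a function like this would play the role of the predicted measurement that is compared against the observed pixel coordinates of each feature point.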
