Complex background SAR vehicle target detection method based on CNN

A CNN-based method for detecting vehicle targets in SAR images with complex backgrounds. It addresses problems such as unrealistic target detection and a fixed input image size, and it facilitates engineering application, avoids vanishing gradients, and achieves a good detection effect.

Examples

Embodiment 1

[0028] The CNN-based complex background SAR vehicle target detection method of the present invention comprises the following steps:

[0029] S1, collecting pattern data and processing it to obtain a sample data set;

[0030] S2, fusing the ResNet (deep residual network) model with the Faster-RCNN framework to form a fusion framework, and retraining the fusion framework on the basis of pre-trained weights;

[0031] S3, using the retrained fusion framework to perform target detection and recognition on the pattern data.

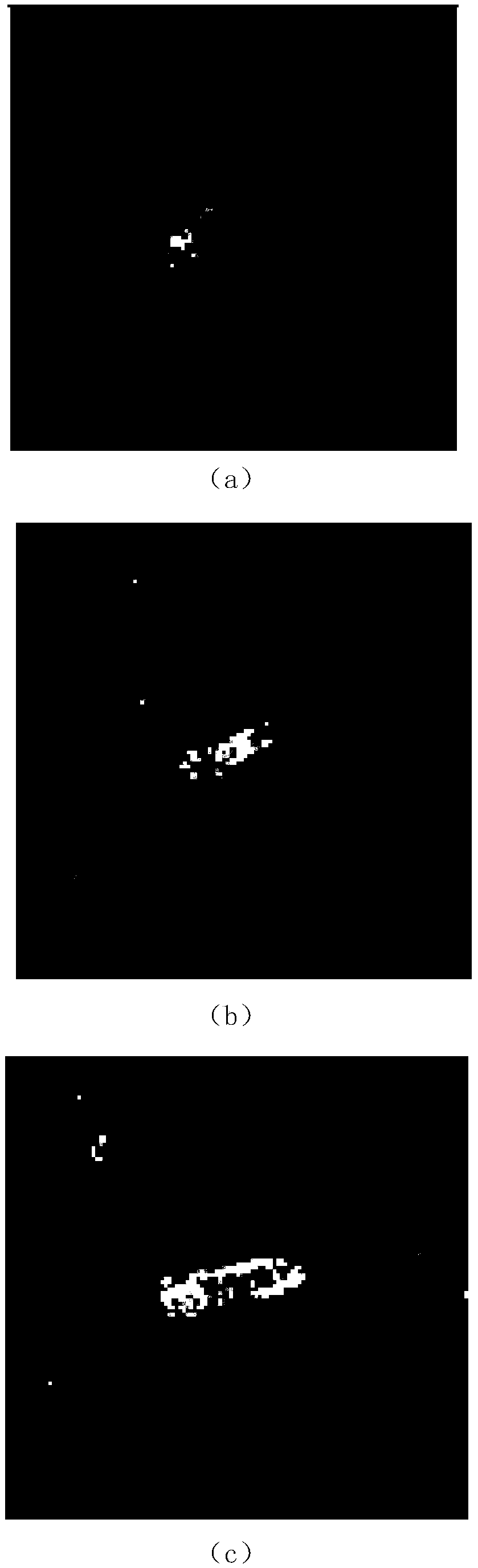

[0032] Specifically, in step S1 the same scene is imaged by an airborne X-band radar on different flights. After data preprocessing, the pattern data are sampled at a ground spacing of 0.3 m in both azimuth and range, and vehicle samples are manually extracted from the pattern data at a fixed size of 128×128 pixels, giving a total of 500 original sample slice datasets containing original samples 1 of various vehicles (trucks, buses and...
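The fixed-size slicing in step S1 can be illustrated with a minimal sketch. It assumes the scene is a 2-D array (here a plain list of lists) and that an operator has marked an approximate vehicle center; the function name `crop_chip` and the clamping to the scene border are hypothetical choices for illustration, not details taken from the patent.

```python
def crop_chip(image, center_row, center_col, size=128):
    """Cut a size x size chip around a manually marked center.

    Assumption: the window is shifted inward when the center is close to
    the border, so the chip always has the full fixed size.
    """
    rows, cols = len(image), len(image[0])
    if rows < size or cols < size:
        raise ValueError("image is smaller than the chip size")
    # Clamp the top-left corner so the chip stays inside the scene.
    top = min(max(center_row - size // 2, 0), rows - size)
    left = min(max(center_col - size // 2, 0), cols - size)
    return [row[left:left + size] for row in image[top:top + size]]


# Synthetic 200 x 300 "scene" whose pixel value encodes its position.
scene = [[r * 300 + c for c in range(300)] for r in range(200)]
chip = crop_chip(scene, center_row=10, center_col=290)
print(len(chip), len(chip[0]))  # 128 128
```

Clamping keeps every sample at exactly 128×128 pixels, which matches the fixed slice size stated above; padding the border instead would be an equally plausible choice.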

Embodiment 2

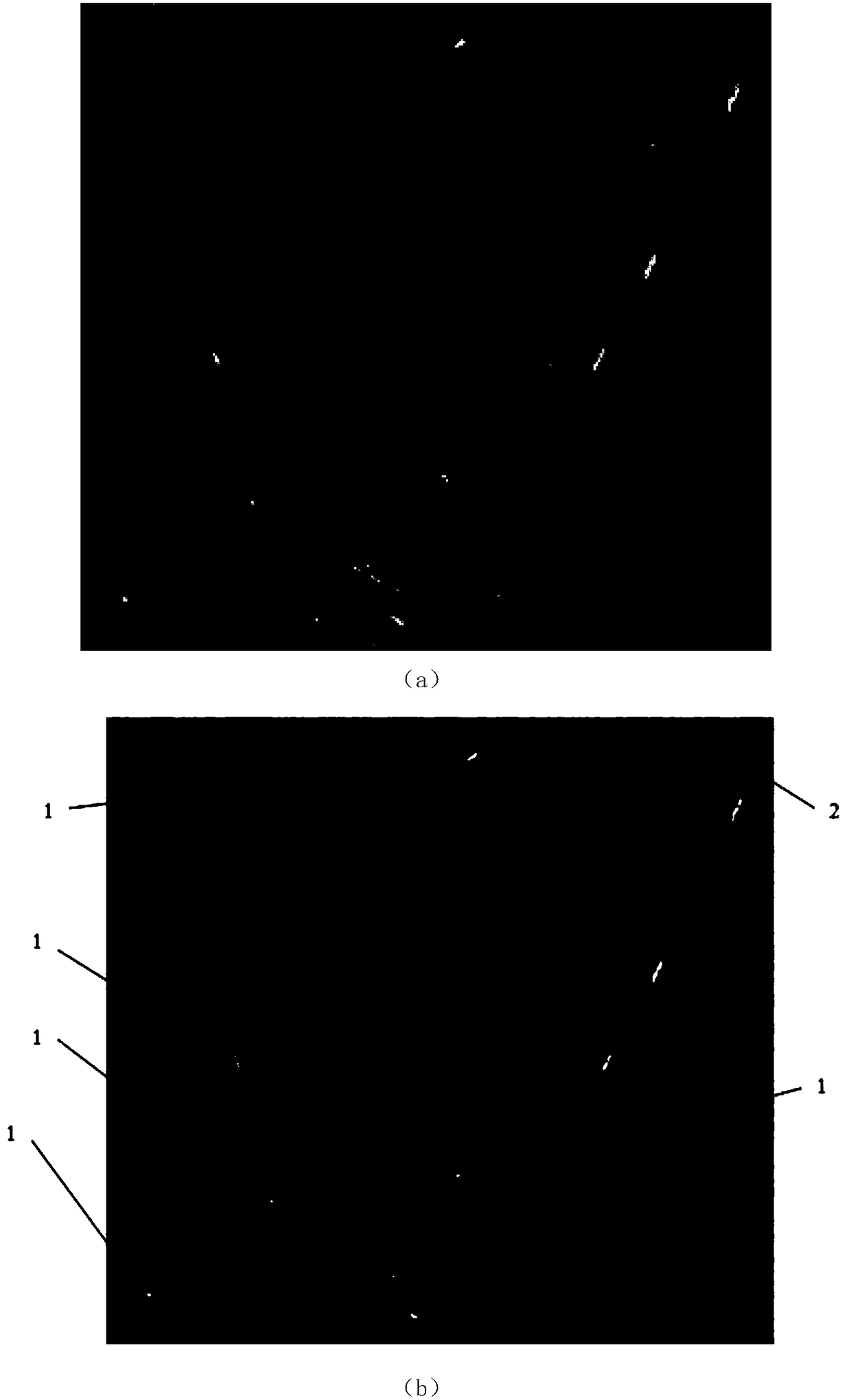

[0039] As shown in image 3, which is the framework diagram of Faster-RCNN, the pattern data in step S2 is processed through the Faster-RCNN framework.

[0040] Specifically, the Faster-RCNN (Faster Region CNN) framework mainly includes a feature extraction layer, a Region Proposal Network (RPN), an ROI pooling layer, and a classification recognition layer.

[0041] The feature extraction layer mainly consists of a number of convolutional layers, activation layers and pooling layers; it extracts feature maps from the pattern data as the input of the classification recognition layer. Depending on the depth of the network model, the feature extraction layer can be a ZF network model or a VGG network model: the VGG-16 network model includes 13 conv (convolutional) layers, 13 relu (activation) layers and 4 pooling layers, while the ZF network model includes 5 conv layers, 4 relu layers and 2 pooling layers, the size of the featur...
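As a small worked example of how the pooling layers determine the feature map size for the VGG-16 case: assuming every convolution is padded so that it preserves spatial size and every pooling layer halves it (the usual VGG-16 configuration, not something stated in the text above), four pooling layers give a downsampling factor of 16. The helper names are hypothetical.

```python
def downsampling_factor(num_pool_layers, pool_stride=2):
    """Total spatial reduction when only pooling layers shrink the map."""
    return pool_stride ** num_pool_layers


def feature_map_size(height, width, factor):
    """Feature map size for an input of height x width pixels."""
    return height // factor, width // factor


factor = downsampling_factor(num_pool_layers=4)
print(factor, feature_map_size(128, 128, factor))  # 16 (8, 8)
```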

Embodiment 3

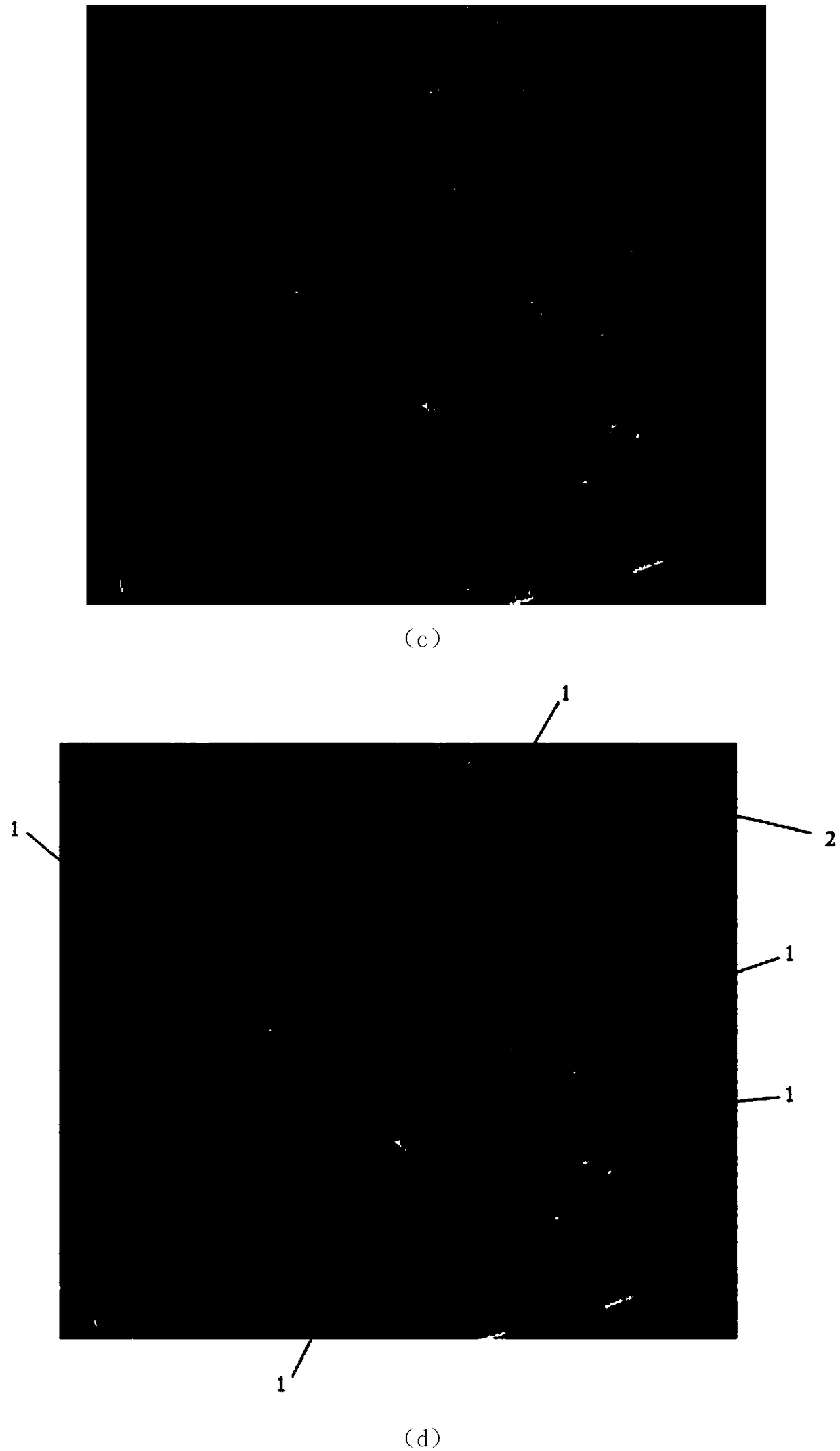

[0047] Preferably, in this embodiment, step S2 specifically fuses the ResNet-50 network model with the Faster-RCNN framework to form the fusion framework; the fusion process mainly includes:

[0048] The 50 layers of residual blocks are obtained from the ResNet-50 network model; the feature extraction layer of the fusion framework is set to 40 layers of residual blocks, and the size of the output feature map is kept at 1/16 of the pattern data;
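The 40-layer and 1/16 figures are consistent with the standard ResNet-50 layout if the extractor is taken to be conv1 plus the conv2_x, conv3_x and conv4_x stages. That mapping is an assumption made here for illustration; the text only gives the totals. A short sketch of the arithmetic:

```python
CONVS_PER_BOTTLENECK = 3  # 1x1, 3x3, 1x1 convolutions in each bottleneck block

# (stage name, bottleneck blocks, stride introduced by the stage)
# in the standard ResNet-50 layout.
STAGES = [
    ("conv2_x", 3, 1),
    ("conv3_x", 4, 2),
    ("conv4_x", 6, 2),
    ("conv5_x", 3, 2),
]


def backbone_summary(last_stage):
    """Return (weighted conv layers, total stride) up to last_stage."""
    layers = 1      # conv1: a single 7x7 convolution
    stride = 2 * 2  # conv1 has stride 2, followed by a stride-2 max pool
    for name, blocks, stage_stride in STAGES:
        layers += blocks * CONVS_PER_BOTTLENECK
        stride *= stage_stride
        if name == last_stage:
            return layers, stride
    raise ValueError(f"unknown stage: {last_stage}")


print(backbone_summary("conv4_x"))  # (40, 16)
```

Under the same assumption, running through conv5_x gives 49 convolutional layers at stride 32; the final fully connected layer brings the count to 50.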

[0049] The region proposal network (RPN) extracts candidate frames by sliding a window over the last-layer feature map supplied by the feature extraction layer, sets 9 anchor points (anchors) with different sizes and aspect ratios for each pixel in the feature map, and combines them with frame regression to obtain preliminary candidate frames of the pattern data.
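The anchor layout can be sketched as follows. The text specifies only that each feature map pixel gets 9 anchors of different sizes and aspect ratios; the three scales and three ratios below, the stride of 16, and the function name `make_anchors` are illustrative assumptions rather than values from the patent.

```python
import math


def make_anchors(feat_h, feat_w, stride=16,
                 scales=(32, 64, 128),     # hypothetical anchor side lengths in pixels
                 ratios=(0.5, 1.0, 2.0)):  # hypothetical height / width ratios
    """Return (x1, y1, x2, y2) anchors in input-image coordinates."""
    anchors = []
    for row in range(feat_h):
        for col in range(feat_w):
            # Center of this feature map pixel projected onto the input image.
            cx = (col + 0.5) * stride
            cy = (row + 0.5) * stride
            for scale in scales:
                for ratio in ratios:
                    # Keep the area at scale**2 while varying the shape.
                    w = scale / math.sqrt(ratio)
                    h = scale * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2,
                                    cx + w / 2, cy + h / 2))
    return anchors


anchors = make_anchors(8, 8)  # 8x8 feature map of a 128x128 slice at stride 16
print(len(anchors))  # 576
```

In the full RPN each anchor would then be scored and refined by frame regression; this sketch covers only the anchor placement step.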

[0050] The ROI pooling layer collects the input feature map and the candidate frame, extra...
