A moving object tracking method based on multi-feature fusion

A moving target tracking technology based on multi-feature fusion, applied in image data processing, instruments, and character and pattern recognition. It addresses problems such as tracking failure, features that cannot fully represent the target, and differences in tracking performance.


Examples

Embodiment 1

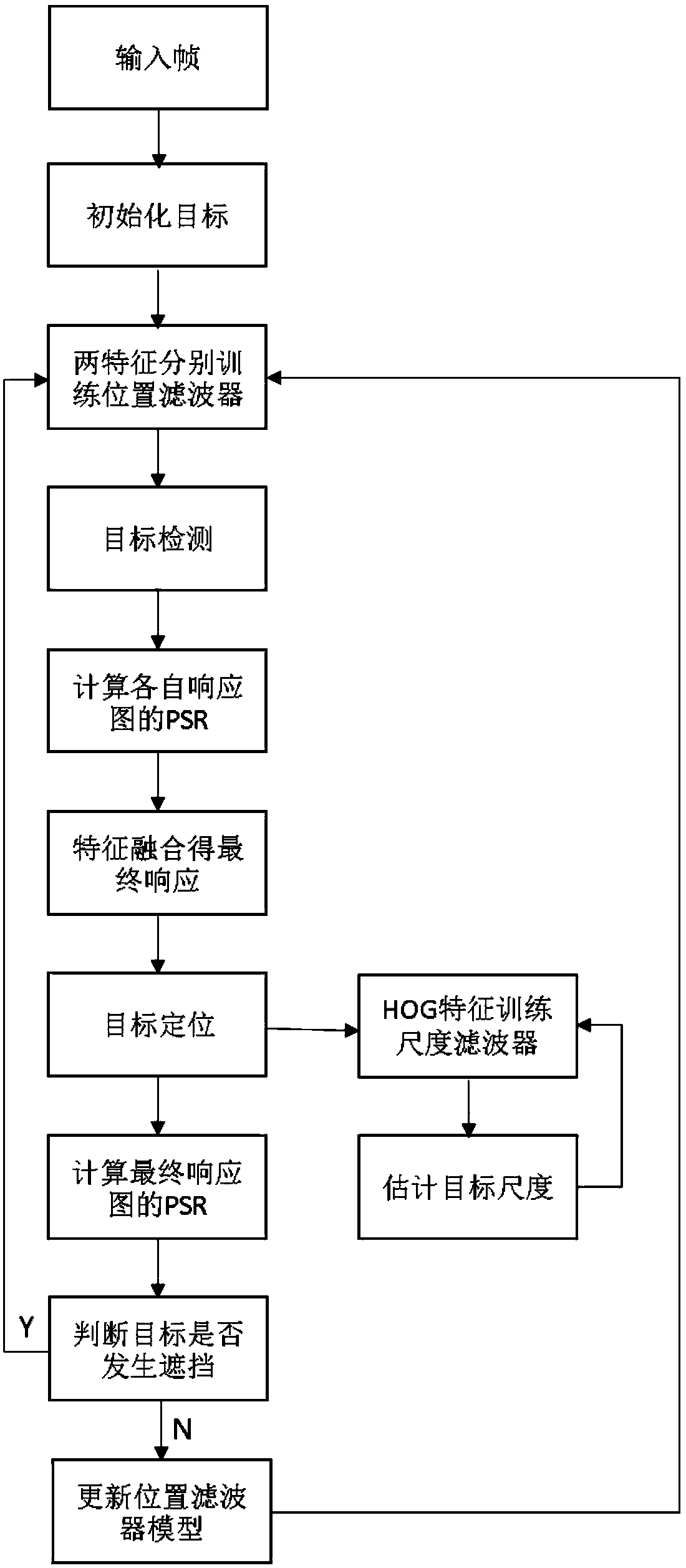

[0063] Embodiment 1: As shown in Figure 1, the specific steps of the moving target tracking method based on multi-feature fusion are as follows:

[0064] Step 1. Initialize the target and select the target area;

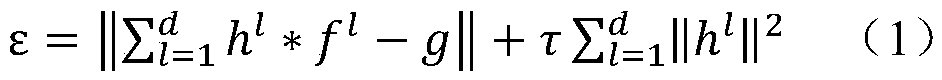

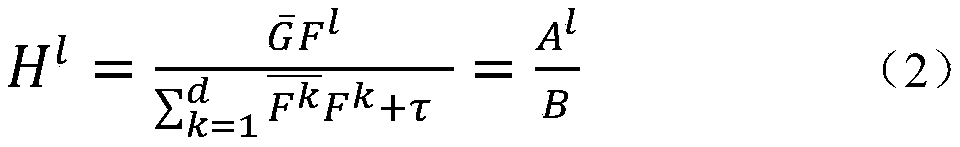

[0065] Step 2. Extract the Histogram of Oriented Gradient (HOG) feature of the target area as one training sample and the Color Name (CN) feature of the target area as another training sample, then train a separate position filter model on each of the two training samples;

[0066] Step 3. Extract the two kinds of features from the target area in the new frame to obtain two detection samples, and compute the correlation score between each detection sample and the corresponding position filter trained in the previous step; this yields a response map for each feature;

[0067] Step 4. Calculate the peak-to-sidelobe ratio (PSR) of the response map of each feature, and fuse the two feature...
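The text is truncated before it specifies the filter formulation or the fusion rule, so the following is only a minimal NumPy sketch of Steps 2 to 4 under stated assumptions. Each position filter is assumed to be a closed-form multi-channel discriminative correlation filter (DSST-style), random arrays stand in for real HOG and CN feature maps, the PSR is computed as (peak − sidelobe mean) / sidelobe std with an 11×11 exclusion window (as in MOSSE), and the two response maps are fused with weights proportional to their PSR. The helper names `gaussian_label`, `train_filter`, `detect`, `psr` and `fuse` are hypothetical.

```python
import numpy as np


def gaussian_label(h, w, sigma=2.0):
    """Desired correlation output: a 2-D Gaussian peaked at the patch centre."""
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = h // 2, w // 2
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))


def train_filter(feat, label, lam=1e-2):
    """Closed-form multi-channel correlation filter (assumed DSST-style).

    feat: (H, W, C) feature map of the training sample.
    Returns numerator A (H, W, C) and denominator B (H, W), with lam added to B.
    """
    F = np.fft.fft2(feat, axes=(0, 1))
    G = np.fft.fft2(label)
    A = np.conj(G)[..., None] * F             # per-channel numerator
    B = np.sum(np.conj(F) * F, axis=2).real   # spectral energy summed over channels
    return A, B + lam


def detect(A, B, feat):
    """Response map of a detection sample correlated with a trained filter."""
    Z = np.fft.fft2(feat, axes=(0, 1))
    return np.real(np.fft.ifft2(np.sum(np.conj(A) * Z, axis=2) / B))


def psr(response, exclude=5):
    """Peak-to-sidelobe ratio; the sidelobe is everything outside a
    (2*exclude+1) x (2*exclude+1) window around the peak."""
    py, px = np.unravel_index(np.argmax(response), response.shape)
    mask = np.ones(response.shape, dtype=bool)
    mask[max(py - exclude, 0):py + exclude + 1,
         max(px - exclude, 0):px + exclude + 1] = False
    side = response[mask]
    return (response[py, px] - side.mean()) / (side.std() + 1e-12)


def fuse(responses):
    """ASSUMPTION: weight each response map by its normalised PSR."""
    scores = np.array([psr(r) for r in responses])
    weights = scores / scores.sum()
    fused = sum(w * r for w, r in zip(weights, responses))
    return fused, weights


# Demo with hypothetical stand-ins for HOG and CN feature maps.
rng = np.random.default_rng(0)
H, W = 64, 64
label = gaussian_label(H, W)
hog_train = rng.random((H, W, 3))
cn_train = rng.random((H, W, 3))
A_hog, B_hog = train_filter(hog_train, label)   # Step 2: one filter per feature
A_cn, B_cn = train_filter(cn_train, label)

# New frame: target moved by (+3, -5) pixels (circular shift); CN sample is noisy.
shift = (3, -5)
hog_det = np.roll(hog_train, shift, axis=(0, 1))
cn_det = np.roll(cn_train, shift, axis=(0, 1)) + rng.normal(0.0, 0.3, (H, W, 3))

r_hog = detect(A_hog, B_hog, hog_det)           # Step 3: per-feature response maps
r_cn = detect(A_cn, B_cn, cn_det)
fused, weights = fuse([r_hog, r_cn])            # Step 4: PSR-weighted fusion
peak = np.unravel_index(np.argmax(fused), fused.shape)
dy, dx = int(peak[0]) - H // 2, int(peak[1]) - W // 2
print("weights:", weights.round(3), "displacement:", (dy, dx))
```

The offset of the fused peak from the patch centre gives the estimated target displacement, and the noisier feature receives the smaller weight because its sidelobe is less flat. The circular shift makes the Fourier-domain translation exact here; a practical tracker would also apply a cosine window to each sample and update the filter numerator and denominator online with a learning rate.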


© 2025 PatSnap. All rights reserved.