Multi-source image fusion and feature extraction algorithm based on deep learning

A feature extraction and deep learning technology, applied in computing, computer components, and character and pattern recognition, that addresses three problems: neglected feature learning and optimization, a limited scope of practical application, and the inability to represent the topology of 3D models. Its effects are reducing missing information, improving accuracy, and improving efficiency.

Examples

Embodiment 1

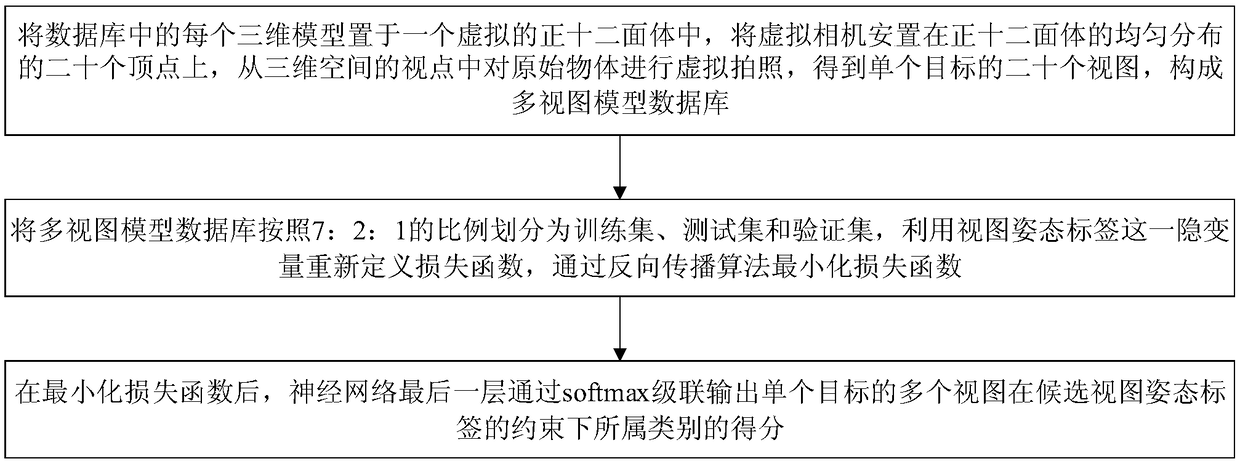

[0031] A multi-source image fusion and feature extraction algorithm based on deep learning. As shown in Figure 1, the method comprises the following steps:

[0032] 101: Place each 3D model in the database inside a virtual dodecahedron, and put a virtual camera on each of the dodecahedron's twenty evenly distributed vertices. Taking a virtual photo of the original object from each of these viewpoints in 3D space yields 20 views of a single target. The views of all models form a multi-view model database;

[0033] 102: Divide the multi-view model database into a training set, a test set, and a validation set in the ratio 7:2:1. Redefine the loss function by treating the view pose labels as latent (hidden) variables, and minimize it with the backpropagation algorithm;

[0034] 103: After the loss function has been minimized, the last layer of the neural network passes the multiple views of a single target through a softmax cascade. It outputs the score of each category under the constraint of the candidate view pose labels.
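The excerpt does not give the redefined loss function itself. The following is a minimal sketch of steps 102 and 103. It assumes a formulation similar in spirit to RotationNet, which also treats viewpoint labels as latent variables, and it should not be read as the patent's exact method. Several details are hypothetical:

- the `logits[i][j]` layout (class logits for view `i` under candidate pose `j`);
- the `candidates` list of admissible view-to-pose assignments;
- all function names.

During training, the assignment that best explains the ground-truth label is selected, and the loss is the negative log-likelihood under that assignment. At inference, each category is scored under its own best assignment.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of class logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]

def assignment_log_prob(logits, assignment, label):
    """Sum over views of log P(label | view i, candidate pose assignment[i]).

    logits[i][j] (hypothetical layout): class logits of view i under pose j.
    """
    return sum(log_softmax(logits[i][j])[label] for i, j in enumerate(assignment))

def latent_pose_loss(logits, label, candidates):
    """Training loss with view pose labels treated as latent variables (sketch).

    Picks the candidate assignment that best explains the ground-truth label,
    then returns (negative log-likelihood under it, that assignment).
    """
    best = max(candidates, key=lambda a: assignment_log_prob(logits, a, label))
    return -assignment_log_prob(logits, best, label), best

def category_scores(logits, candidates):
    """Score of each category under its best candidate pose assignment."""
    num_classes = len(logits[0][0])
    return [max(assignment_log_prob(logits, a, c) for a in candidates)
            for c in range(num_classes)]

def predict(logits, candidates):
    """Index of the highest-scoring category."""
    scores = category_scores(logits, candidates)
    return max(range(len(scores)), key=scores.__getitem__)

if __name__ == "__main__":
    # Toy example: 2 views, 2 candidate poses, 3 categories.
    logits = [[[0.0, 0.0, 0.0], [5.0, 0.0, 0.0]],
              [[5.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
    candidates = [(0, 1), (1, 0)]
    loss, best = latent_pose_loss(logits, 0, candidates)
    print(round(loss, 4), best, predict(logits, candidates))
```

In a real network, the selected assignment would be held fixed for the step while a deep learning framework backpropagates the loss into the network parameters. This sketch only performs the forward computation with plain lists.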

[0035...

Embodiment 2

[0039] The scheme of Embodiment 1 is described further below with specific examples and calculation formulas; see the following description for details:

[0040] 201: Each 3D model in the ModelNet40 [11] database is placed inside a virtual dodecahedron, and a virtual camera is placed on each of the dodecahedron's 20 vertices. Taking virtual photos of the original object from these 20 viewpoints, which are uniformly distributed in 3D space, yields twenty views of a single target;

[0041] Step 201 mainly includes the following:

[0042] A set of viewpoints, i.e., the positions from which the object is observed, is predefined. Let M be the number of predefined viewpoints; in this embodiment of the present invention, M = 20. The virtual camera is placed on the 20 vertices of the dodecahedron enclosing the target. The dodecahedron is the regular polyhedron with the largest number of vertices, and its viewpoints are completely evenly dis...
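Below is a minimal sketch of the M = 20 viewpoint set. It assumes the standard coordinates of a regular dodecahedron centred on the object and cameras aimed at the object centre. The function names and the `radius` parameter are illustrative and do not come from the source.

The sketch also checks numerically what "evenly distributed" means for these viewpoints. All 20 cameras lie at the same distance from the centre, and each one has exactly three nearest neighbours at the same spacing.

For reference, the five regular (Platonic) solids have 4, 8, 6, 20 and 12 vertices, so the dodecahedron does have the most.

```python
import math
from itertools import product

PHI = (1 + math.sqrt(5)) / 2  # golden ratio

def dodecahedron_vertices():
    """The 20 vertices of a regular dodecahedron centred at the origin:
    (±1, ±1, ±1), (0, ±1/φ, ±φ), (±1/φ, ±φ, 0), (±φ, 0, ±1/φ)."""
    verts = [tuple(float(c) for c in p) for p in product((-1, 1), repeat=3)]
    for a, b in product((-1, 1), repeat=2):
        verts += [(0.0, a / PHI, b * PHI),
                  (a / PHI, b * PHI, 0.0),
                  (a * PHI, 0.0, b / PHI)]
    return verts

def camera_poses(radius=2.0):
    """One virtual camera per vertex, scaled to `radius`, looking at the origin.

    Returns a list of (position, unit_view_direction) pairs.
    """
    poses = []
    for v in dodecahedron_vertices():
        norm = math.sqrt(sum(c * c for c in v))  # sqrt(3) for every vertex
        position = tuple(radius * c / norm for c in v)
        view_dir = tuple(-c / norm for c in v)   # points at the object centre
        poses.append((position, view_dir))
    return poses

def nearest_neighbour_profile(points, tol=1e-9):
    """For each point: (nearest-neighbour distance, neighbours at that distance)."""
    profile = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        profile.append((dists[0], sum(1 for d in dists if abs(d - dists[0]) < tol)))
    return profile

if __name__ == "__main__":
    poses = camera_poses()
    profile = nearest_neighbour_profile([pos for pos, _ in poses])
    print(len(poses), {count for _, count in profile})
```

A renderer also needs an up-vector to fix each camera's roll. That choice depends on the rendering setup and is not covered by the excerpt.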

Embodiment 3

[0076] The feasibility of the schemes in Embodiments 1 and 2 is verified below with concrete tests; see the following description for details:

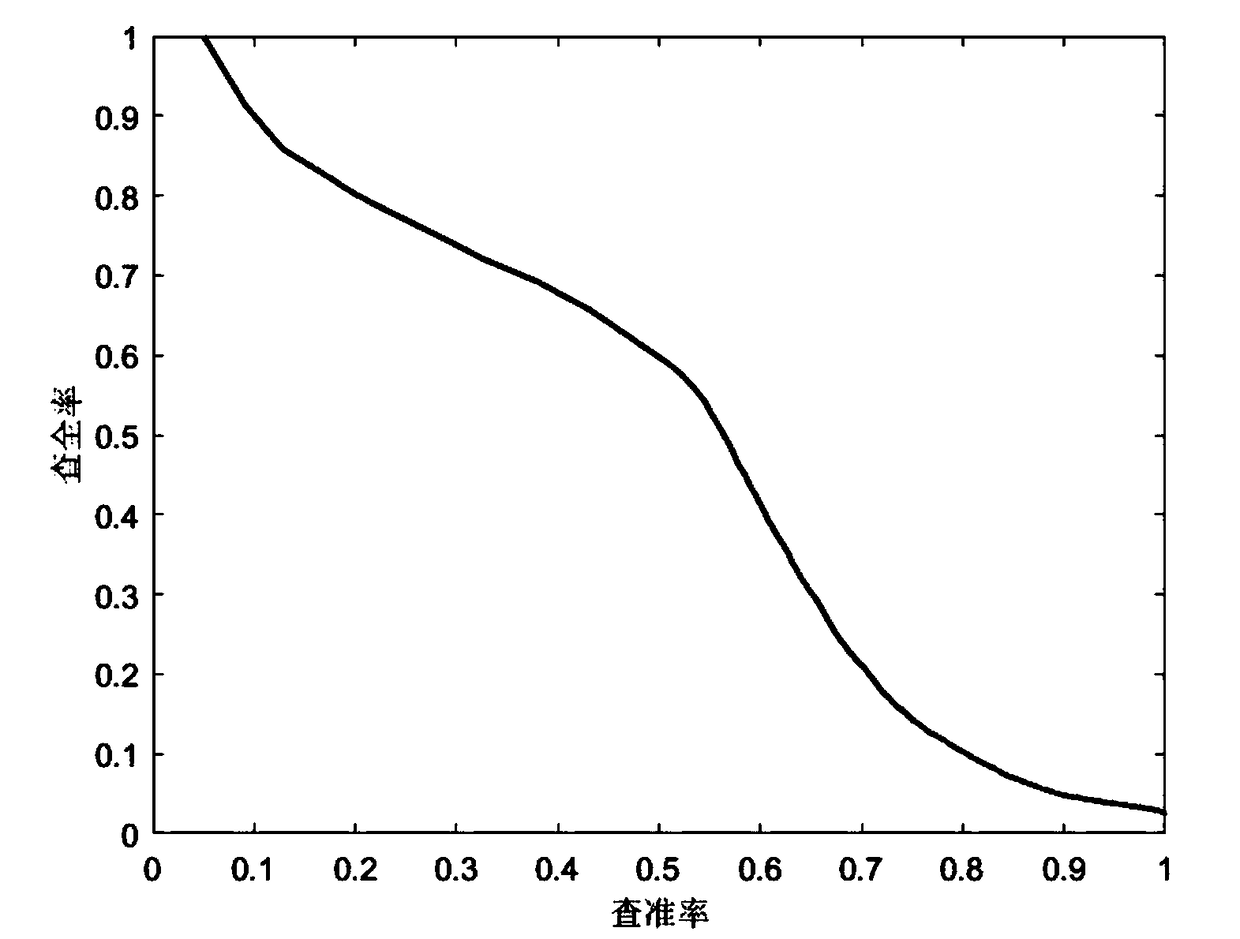

[0077] Figure 3 shows the feasibility verification of the scheme in this embodiment. Performance is measured with recall-precision curves, which plot recall (Recall) on the x-axis and precision (Precision) on the y-axis. The two quantities are obtained from the following formulas:

[0078] $$\mathrm{Recall} = \frac{N_z}{C_r}$$

[0079] where Recall is the recall rate, $N_z$ is the number of correctly retrieved targets, and $C_r$ is the number of all relevant targets.

[0080] $$\mathrm{Precision} = \frac{N_z}{C_{all}}$$

[0081] where Precision is the precision rate and $C_{all}$ is the number of all retrieved targets.
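Recall ($N_z / C_r$) and precision ($N_z / C_{all}$) as defined in [0078] to [0081] translate directly into code. This sketch rests on three assumptions, and the function names are hypothetical:

- The results for one query arrive as a ranked list of category labels, best match first.
- A retrieved model counts as correct when its category matches the query's. The excerpt does not state the relevance criterion.
- A zero denominator yields 0.0.

Sweeping the cut-off k produces the points of one recall-precision curve, with $C_{all} = k$.

```python
def recall(n_correct, n_relevant):
    """Recall = N_z / C_r (0.0 when there are no relevant targets)."""
    return n_correct / n_relevant if n_relevant else 0.0

def precision(n_correct, n_retrieved):
    """Precision = N_z / C_all (0.0 when nothing was retrieved)."""
    return n_correct / n_retrieved if n_retrieved else 0.0

def pr_points(ranked_labels, query_label, n_relevant):
    """(recall, precision) after each cut-off k of a ranked retrieval list.

    ranked_labels: category labels of the retrieved models, best match first.
    n_relevant:    C_r, the number of database models sharing query_label.
    """
    points, hits = [], 0
    for k, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1  # N_z among the top k results
        points.append((recall(hits, n_relevant), precision(hits, k)))  # C_all = k
    return points

if __name__ == "__main__":
    print(pr_points(["chair", "table", "chair", "chair"], "chair", 3))
```

Curves from many queries are typically averaged into one. The excerpt does not say how the curves in Figure 3 are aggregated.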

[0082] Generally speaking, the larger the area enclosed by the recall-precision curve and the coordinate axes, the better the algorithm performs. As can be seen from Figure 3, the area enclosed by th...
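The criterion in [0082] is that a larger enclosed area means better performance. It can be made concrete by integrating the recall-precision curve. This sketch uses the trapezoidal rule, which is one common choice and an assumption here. The excerpt does not say how the area in Figure 3 is computed, and interpolated variants such as average precision give different numbers. The function name is hypothetical.

```python
def pr_curve_area(points):
    """Area between a recall-precision curve and the recall axis (trapezoidal rule).

    points: (recall, precision) pairs; they are sorted by recall before integrating.
    """
    pts = sorted(points)
    return sum((r1 - r0) * (p0 + p1) / 2
               for (r0, p0), (r1, p1) in zip(pts, pts[1:]))

if __name__ == "__main__":
    perfect = [(0.0, 1.0), (1.0, 1.0)]                # ideal retrieval
    diagonal = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]   # precision falls linearly
    print(pr_curve_area(perfect), pr_curve_area(diagonal))
```

Curves covering different recall ranges are only comparable by area if they span the same range. Otherwise a curve that stops early is penalized regardless of its precision.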
