A method for predicting prison terms based on a multi-task artificial neural network

An artificial neural network prediction technique, applied to the field of sentence prediction based on a multi-task artificial neural network. It addresses problems such as large deviations in actual prediction results and the neglect of useful associations and relationships between pieces of information, and it achieves the effect of improving accuracy.

Examples

Embodiment 1

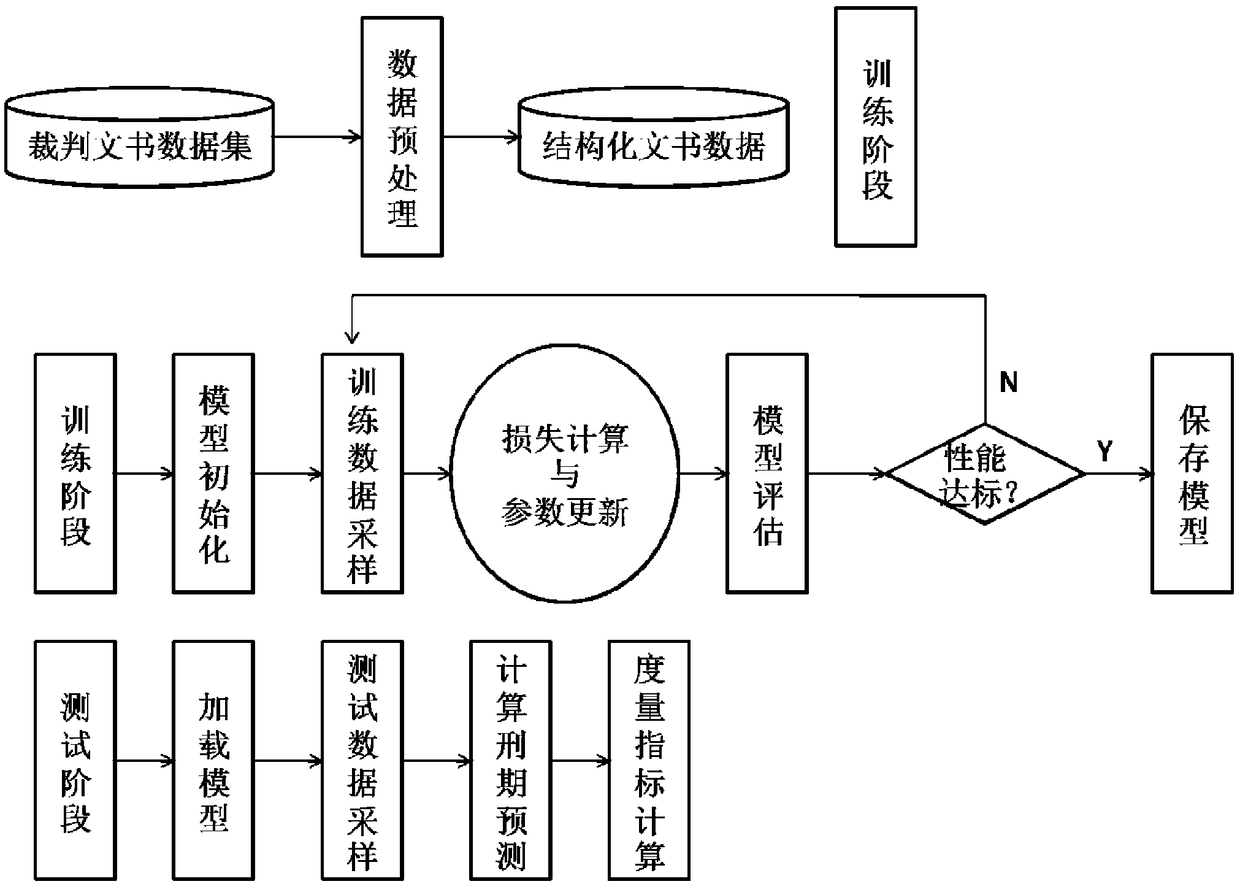

[0067] A method of sentence prediction based on a multi-task artificial neural network, as shown in Figure 1, comprising the following steps:

[0068] (1) Preprocessing the raw data:

[0069] Extract the required information, structure the data, and construct a structured data set;

[0070] (2) Training stage:

[0071] Randomly divide the structured data set into two parts at a ratio of 8:2. The larger part is shuffled and divided into N parts; each time, N-1 parts are used for training and 1 part for validation, and this is repeated N times (N-fold cross-validation) to evaluate the performance of the model. The smaller part is used as the test data set. Obtain the training data required for the current training stage, perform word segmentation and word vector mapping on the training data in turn, feed it into the model, and obtain the output;
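The data split described in [0071] can be sketched as follows. This is a minimal illustration and not the patent's implementation: the function name `split_and_folds`, the default of 5 folds, and the fixed random seed are assumptions made for the example, and integers stand in for the structured records.

```python
import random

def split_and_folds(records, n_folds=5, train_ratio=0.8, seed=0):
    """Split records 8:2, then cut the larger part into n_folds folds.

    Returns (rounds, test), where rounds is a list of (train, validation)
    pairs, one per cross-validation round.
    """
    rng = random.Random(seed)          # fixed seed: an assumption, for repeatability
    shuffled = list(records)
    rng.shuffle(shuffled)              # random 8:2 division

    cut = int(len(shuffled) * train_ratio)
    larger, test = shuffled[:cut], shuffled[cut:]

    rng.shuffle(larger)                # "disrupt" the larger part before folding
    folds = [larger[i::n_folds] for i in range(n_folds)]

    rounds = []
    for k in range(n_folds):
        validation = folds[k]          # 1 part for validation
        train = [r for j, fold in enumerate(folds) if j != k for r in fold]
        rounds.append((train, validation))
    return rounds, test

# Hypothetical data: 100 integer ids in place of structured case records.
rounds, test_set = split_and_folds(range(100), n_folds=5)
```

Each record in the larger part appears in exactly one validation fold, so every round trains on N-1 parts and validates on the remaining one, while the smaller part stays untouched until final testing.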

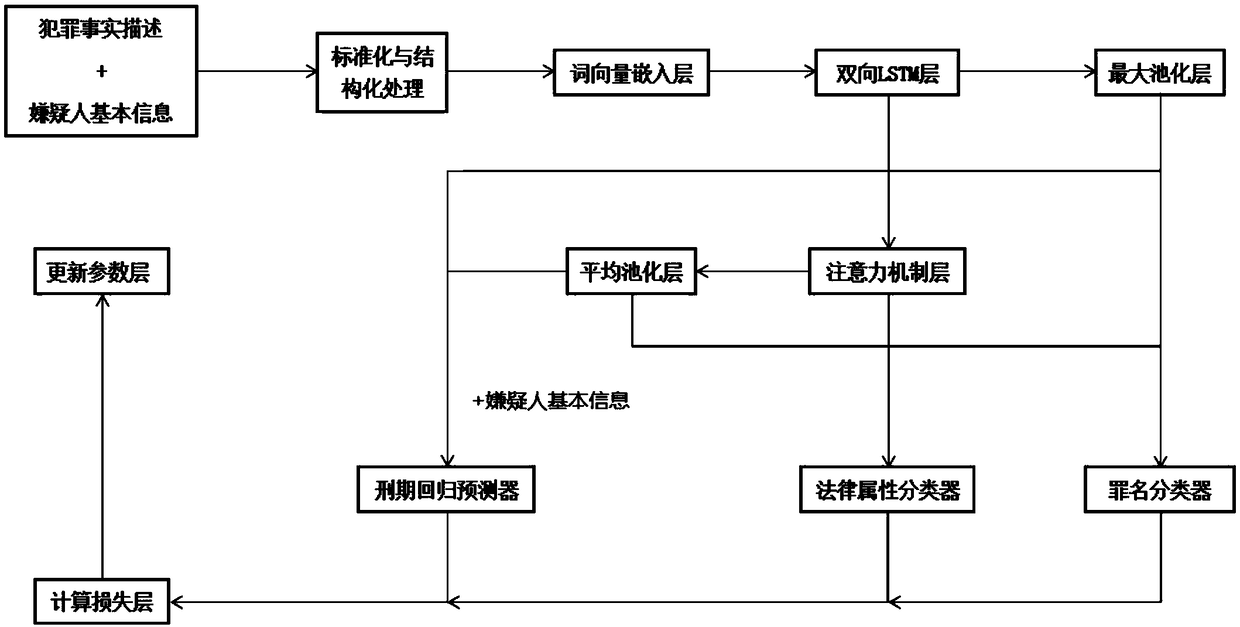

[0072] The model is shown in Figure 2. It includes a word vector embedding layer, a bidirectional LSTM layer, maximum ...

Embodiment 2

[0086] The sentence prediction method based on a multi-task artificial neural network according to Embodiment 1, with the following differences:

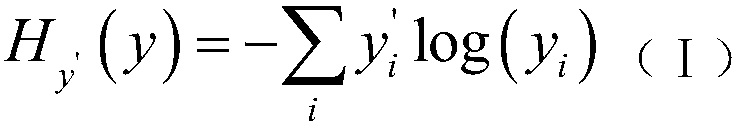

[0087] In step (2), for the classification tasks of predicting crimes and intermediate attributes, a classification loss in the form of cross-entropy is used to calculate the error between the output and the target; the cross-entropy is given by formula (I):

[0088] $$H_{y'}(y) = -\sum_{i} y'_i \log(y_i) \qquad \text{(I)}$$

[0089] In formula (I), $y'_i$ is the $i$-th value in the label, $y_i$ is the corresponding predicted component, and $H_{y'}(y)$ is the cross-entropy; the smaller the cross-entropy, the more accurate the classification;
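As a small worked illustration of the cross-entropy described for the classification tasks, the sketch below assumes the standard form, the negative sum over $i$ of $y'_i \log(y_i)$, with a one-hot label. The function name `cross_entropy`, the `eps` guard against `log(0)`, and the example probabilities are assumptions for this example and are not taken from the patent.

```python
import math

def cross_entropy(label, predicted, eps=1e-12):
    """Cross-entropy between a label vector and a predicted distribution.

    label[i] plays the role of y'_i and predicted[i] the role of y_i.
    eps is a numerical guard (an assumption) so that log(0) is never taken.
    """
    if len(label) != len(predicted):
        raise ValueError("label and predicted must have the same length")
    return -sum(t * math.log(max(p, eps)) for t, p in zip(label, predicted))

# Hypothetical three-class example with a one-hot label on class 1.
label = [0.0, 1.0, 0.0]
confident = cross_entropy(label, [0.05, 0.90, 0.05])
unsure = cross_entropy(label, [0.30, 0.40, 0.30])
```

The prediction that puts more probability on the correct class gives the smaller value, which matches the statement that a smaller cross-entropy means a more accurate classification.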

[0090] In step (2), for the sentence regression task, the mean square error is used: the mean square error between the target and the actual sentence is calculated, as given by formula (II):

[0091]

[0092] In formula (II), $y'_i$ is the $i$-th value in...
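The mean square error used for the sentence regression task can be illustrated with a short sketch. It assumes the usual definition, the average of the squared differences between actual and predicted values. The function name `mean_square_error`, the choice of months as the unit, and the sample values are hypothetical and are not taken from the patent.

```python
def mean_square_error(actual, predicted):
    """Average squared difference between actual and predicted sentences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical sentence lengths in months (the unit is an assumption).
actual_months = [12.0, 36.0, 6.0]
predicted_months = [10.0, 40.0, 6.0]
mse = mean_square_error(actual_months, predicted_months)
```

Because the differences are squared, a prediction that is off by 4 months contributes four times as much to the error as one that is off by 2 months.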
