Image collaborative segmentation method based on saliency map fusion

An image collaborative segmentation technology in the field of image processing. It addresses problems such as missing foreground objects and the inability to segment foreground objects accurately.

Examples

Embodiment 1

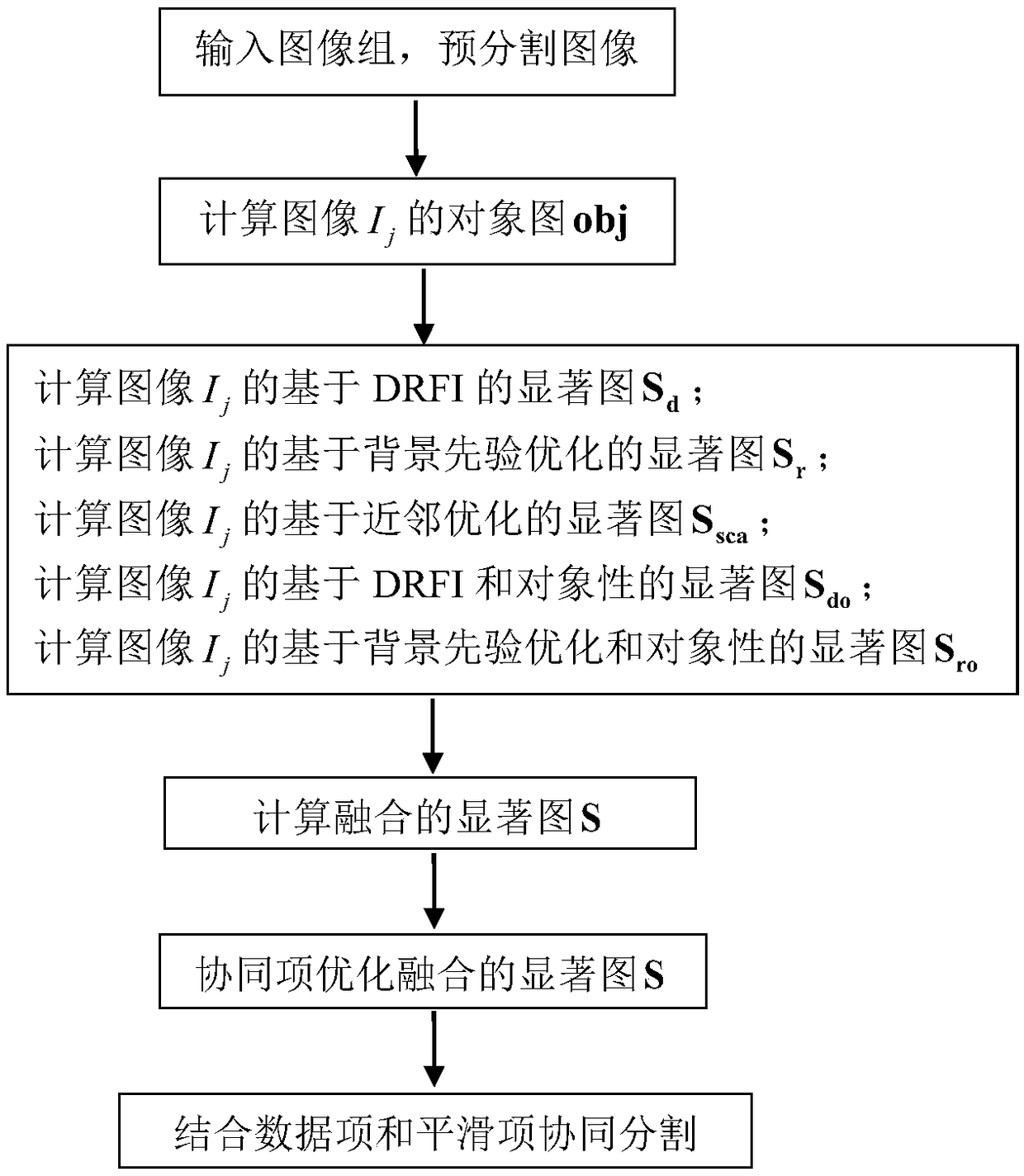

[0152] This embodiment provides an image collaborative segmentation method based on saliency map fusion. The specific steps are as follows:

[0153] In the first step, the input image group is pre-segmented:

[0154] Input the image group $I = \{I_1, I_2, \ldots, I_{sum}\}$. Pre-segment each image $I_j$, $j = 1, 2, \ldots, sum$, with the SLIC method to obtain the superpixels $sp = \{sp_i, i = 1, 2, \ldots, n\}$, where $n$ is given by formula (1):

[0155]

[0156] In formula (1), row and col are the numbers of rows and columns of image $I_j$. This completes the input of the 026Airshows image group from the iCoseg database and the pre-segmentation of its images.
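The SLIC pre-segmentation of the first step can be sketched as below. This is a minimal, simplified SLIC written with NumPy, not the patent's implementation: it clusters pixels in joint (intensity or color, y, x) space, lets each cluster compete only for pixels in a 2S x 2S window around its center, and uses the SLIC distance $D = \sqrt{d_c^2 + (d_s/S)^2 m^2}$. It assumes raw channel values instead of CIELAB and omits SLIC's seed perturbation and connectivity post-processing. Formula (1) is not reproduced in the source, so `n` is simply passed in by the caller; the function name `slic_superpixels` and its `compactness` and `iterations` parameters are hypothetical. In practice, scikit-image's `skimage.segmentation.slic` (with `n_segments` and `compactness`) is a full implementation.

```python
import numpy as np


def slic_superpixels(image, n, compactness=10.0, iterations=10):
    """Simplified SLIC pre-segmentation (sketch).

    Clusters pixels in joint (intensity/color, y, x) space. Each cluster only
    competes for pixels inside a 2S x 2S window around its center, where S is
    the seed grid interval. Omits SLIC's gradient-based seed perturbation,
    CIELAB conversion and final connectivity-enforcement pass.
    """
    img = np.asarray(image, dtype=float)
    if img.ndim == 2:
        img = img[:, :, None]  # treat a grayscale image as one channel
    rows, cols, _ = img.shape

    # Grid interval S chosen so that roughly n seeds tile the image.
    S = max(1, min(int(np.sqrt(rows * cols / n)), rows, cols))
    centers = np.array([
        np.concatenate([img[y, x], [y, x]])
        for y in range(S // 2, rows, S)
        for x in range(S // 2, cols, S)
    ])

    yy, xx = np.mgrid[0:rows, 0:cols]
    labels = -np.ones((rows, cols), dtype=int)
    for _ in range(iterations):
        dist = np.full((rows, cols), np.inf)
        # Assignment step: local search around each cluster center.
        for k, c in enumerate(centers):
            cy, cx = int(round(c[-2])), int(round(c[-1]))
            y0, y1 = max(cy - S, 0), min(cy + S + 1, rows)
            x0, x1 = max(cx - S, 0), min(cx + S + 1, cols)
            dc2 = ((img[y0:y1, x0:x1] - c[:-2]) ** 2).sum(axis=2)
            ds2 = (yy[y0:y1, x0:x1] - c[-2]) ** 2 + (xx[y0:y1, x0:x1] - c[-1]) ** 2
            # SLIC distance D = sqrt(dc^2 + (ds / S)^2 * m^2), m = compactness.
            d = np.sqrt(dc2 + ds2 * (compactness / S) ** 2)
            win_dist = dist[y0:y1, x0:x1]  # views into dist / labels
            win_lab = labels[y0:y1, x0:x1]
            closer = d < win_dist
            win_dist[closer] = d[closer]
            win_lab[closer] = k
        # Update step: move each center to the mean of its assigned pixels.
        for k in range(len(centers)):
            mask = labels == k
            if mask.any():
                centers[k, :-2] = img[mask].mean(axis=0)
                centers[k, -2] = yy[mask].mean()
                centers[k, -1] = xx[mask].mean()

    # Relabel to consecutive superpixel ids 0..n'-1.
    _, ids = np.unique(labels.ravel(), return_inverse=True)
    return ids.reshape(rows, cols)
```

For example, on a 20 x 20 image whose left half is 0 and right half is 255, `slic_superpixels(img, n=8)` places a 3 x 3 seed grid (S = 7), and because the intensity term dominates across the edge, no superpixel straddles the two halves.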

[0157] The second step is to compute the objectness map obj of image $I_j$:

[0158] For the image $I_j$ from the first step, the objectness measure proposed in "Measuring the objectness of image windows" is applied to output a set of bounding boxes for image $I_j$, together with the probability that each bounding box contains a foreground object of the image, and then the proba...
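Paragraph [0158] is truncated before it states how the box probabilities are turned into the map obj. One common aggregation, assumed here and not confirmed by the source, is to add each box's probability to every pixel the box covers and then scale the result to [0, 1]. The sketch below does that, and also shows (again as an assumption) how a per-pixel map could be averaged over the superpixels from the first step. The function names `objectness_map` and `superpixel_mean` and the `(x0, y0, x1, y1)` inclusive box format are hypothetical.

```python
import numpy as np


def objectness_map(shape, boxes, scores):
    """Accumulate scored windows into a per-pixel objectness map (assumed scheme).

    boxes  -- iterable of (x0, y0, x1, y1), inclusive pixel coordinates
    scores -- probability that each box contains a foreground object
    Returns a map scaled to [0, 1] by dividing by its maximum.
    """
    rows, cols = shape
    obj = np.zeros((rows, cols), dtype=float)
    for (x0, y0, x1, y1), p in zip(boxes, scores):
        # Clip to the image so negative indices do not wrap around.
        x0, y0 = max(x0, 0), max(y0, 0)
        x1, y1 = min(x1, cols - 1), min(y1, rows - 1)
        if x0 <= x1 and y0 <= y1:
            obj[y0:y1 + 1, x0:x1 + 1] += p
    peak = obj.max()
    return obj / peak if peak > 0 else obj


def superpixel_mean(values, labels):
    """Average a per-pixel map over each superpixel id in `labels`."""
    flat = labels.ravel()
    sums = np.bincount(flat, weights=values.ravel())
    counts = np.bincount(flat)
    return sums / np.maximum(counts, 1)
```

With two boxes `(0, 0, 1, 1)` scored 0.5 and `(1, 1, 3, 3)` scored 0.25 on a 4 x 4 image, pixel (1, 1) is covered by both and becomes the maximum, 1.0, while pixels covered by only one box get 2/3 or 1/3. Normalizing by the maximum keeps obj comparable across images with different numbers of boxes.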