A 3D scene reconstruction method based on deep learning

This technology combines 3D scene reconstruction with deep learning and is applied in 3D modeling, image analysis, image enhancement, and related fields. It addresses the problem that scale information cannot be effectively estimated with a monocular camera, and it achieves outdoor 3D reconstruction.

Examples

Embodiment 1

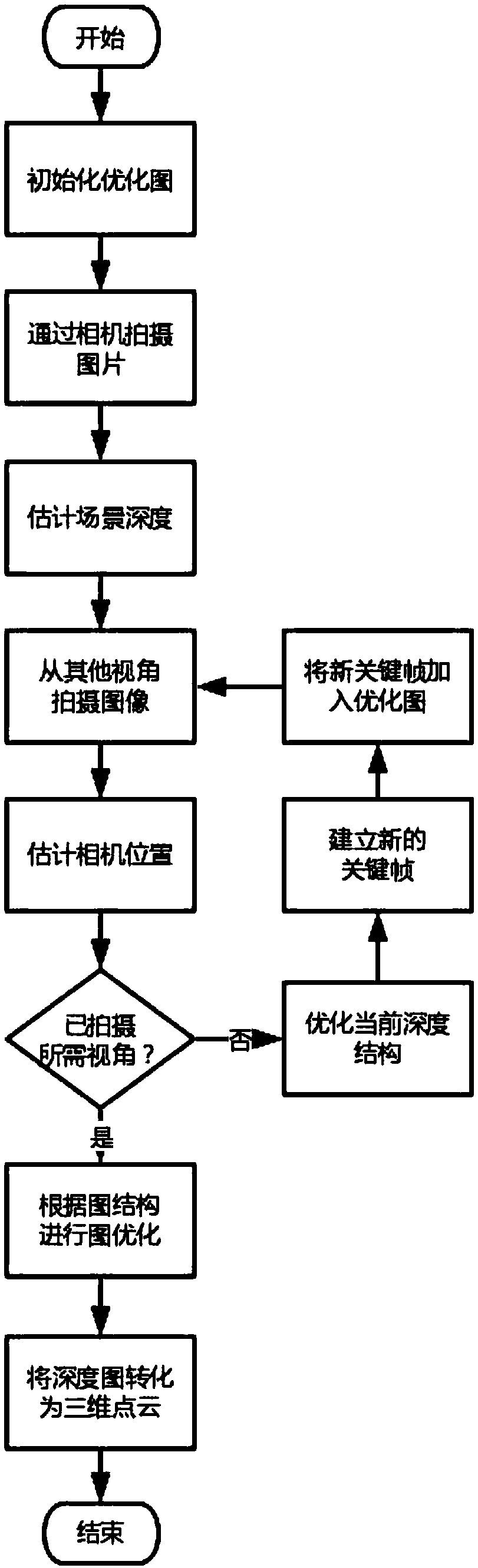

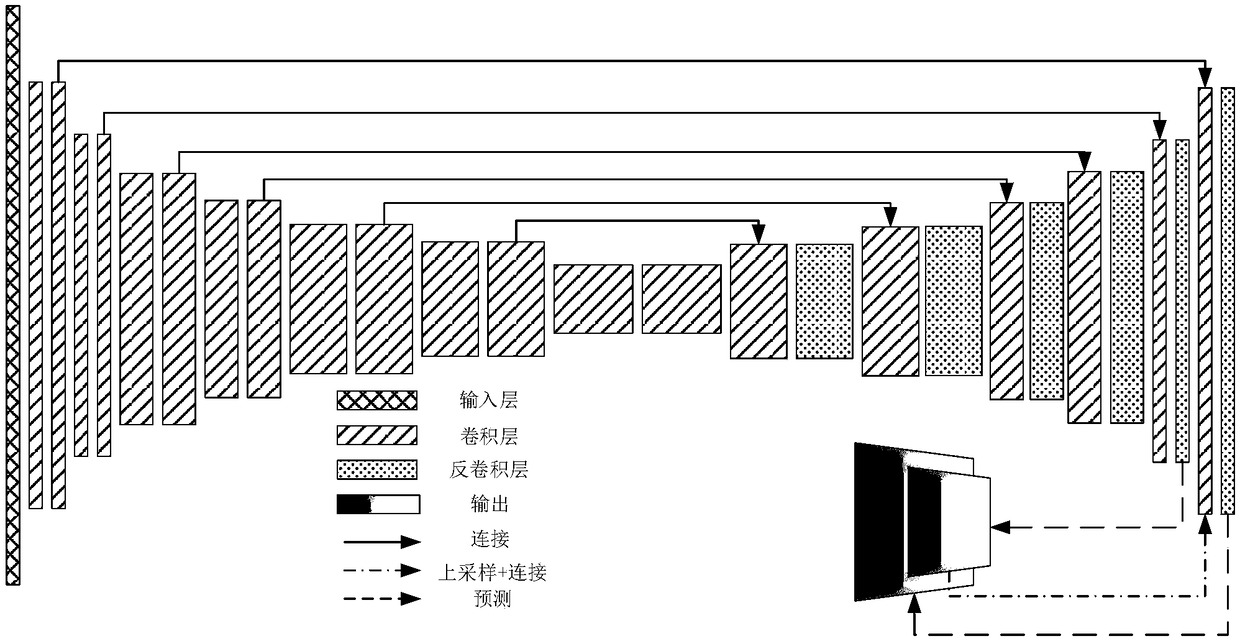

[0064] The neural network used in Embodiment 1 comprises 28 layers; its structure is shown in Figure 2. The implementation steps of this embodiment are shown in Figure 1. As can be seen from Figure 1, the method of the present invention comprises the following steps:

[0065] Step A: Initialize the optimization graph;

[0066] Specifically, in this embodiment, the graph optimization tool g2o is initialized, and the solver and optimization algorithm to be used are selected;
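As an illustration only, the idea behind Step A can be sketched with a toy one-dimensional graph optimizer in plain Python. This is not the g2o API, and the text does not state which solver or algorithm is selected. The sketch assumes a Gauss-Newton algorithm (one of the algorithms g2o provides) with a dense linear solver, and every name in it (`PoseGraph1D`, `add_vertex`, `add_edge`, `optimize`, `solve_linear`) is hypothetical.

```python
# Toy stand-in for a graph optimizer. NOT the g2o API; all names are hypothetical.
# Assumption: Gauss-Newton iterations with a dense linear solver.

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


class PoseGraph1D:
    """Vertices are scalar positions; edges are measured offsets x_j - x_i."""

    def __init__(self):
        self.x = []         # current vertex estimates
        self.fixed = set()  # vertices held constant (anchor the solution)
        self.edges = []     # (i, j, measurement, weight)

    def add_vertex(self, estimate, fixed=False):
        self.x.append(float(estimate))
        if fixed:
            self.fixed.add(len(self.x) - 1)
        return len(self.x) - 1

    def add_edge(self, i, j, measurement, weight=1.0):
        self.edges.append((i, j, float(measurement), float(weight)))

    def optimize(self, iterations=5):
        free = [k for k in range(len(self.x)) if k not in self.fixed]
        idx = {k: n for n, k in enumerate(free)}
        for _ in range(iterations):
            n = len(free)
            H = [[0.0] * n for _ in range(n)]  # approximate Hessian J^T W J
            g = [0.0] * n                      # gradient J^T W r
            for i, j, z, w in self.edges:
                r = (self.x[j] - self.x[i]) - z      # residual of this edge
                jac = ((i, -1.0), (j, 1.0))          # dr/dx_i, dr/dx_j
                for a, ja in jac:
                    if a not in idx:
                        continue
                    g[idx[a]] += w * ja * r
                    for b, jb in jac:
                        if b in idx:
                            H[idx[a]][idx[b]] += w * ja * jb
            dx = solve_linear(H, [-v for v in g])    # Gauss-Newton step
            for k in free:
                self.x[k] += dx[idx[k]]
        return self.x


# Usage: three positions, the first fixed at the origin.
graph = PoseGraph1D()
v0 = graph.add_vertex(0.0, fixed=True)
v1 = graph.add_vertex(0.8)   # rough initial guess
v2 = graph.add_vertex(2.3)   # rough initial guess
graph.add_edge(v0, v1, 1.0)
graph.add_edge(v1, v2, 1.0)
graph.add_edge(v0, v2, 2.0)
result = graph.optimize()
```

In this sketch the "initialization" amounts to creating the empty graph and fixing the algorithm and linear solver before any vertices or edges are added. A real g2o setup operates on camera poses and 3D points rather than scalars.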

[0067] Step B: Capture a color image;

[0068] Specifically, in this embodiment, the image is captured by a color camera;

[0069] The scene is photographed with a color camera, and the scene structure in the photo should be as clear as possible. The resulting image is transferred to the program through the USB port;

[0070] Step C: Estimate scene depth;

[0071] Specifically in this embodiment, the depth structure of the scene is estimated throu...
