Compression and acceleration method based on a deep neural network model

A deep neural network model compression technology, applied in the field of neural networks, that addresses the problem of reducing the accuracy loss of compressed neural networks and achieves the effect of improving model accuracy.


Embodiment Construction

[0055] The invention will be further described in detail below in conjunction with the drawings and embodiments of the invention.

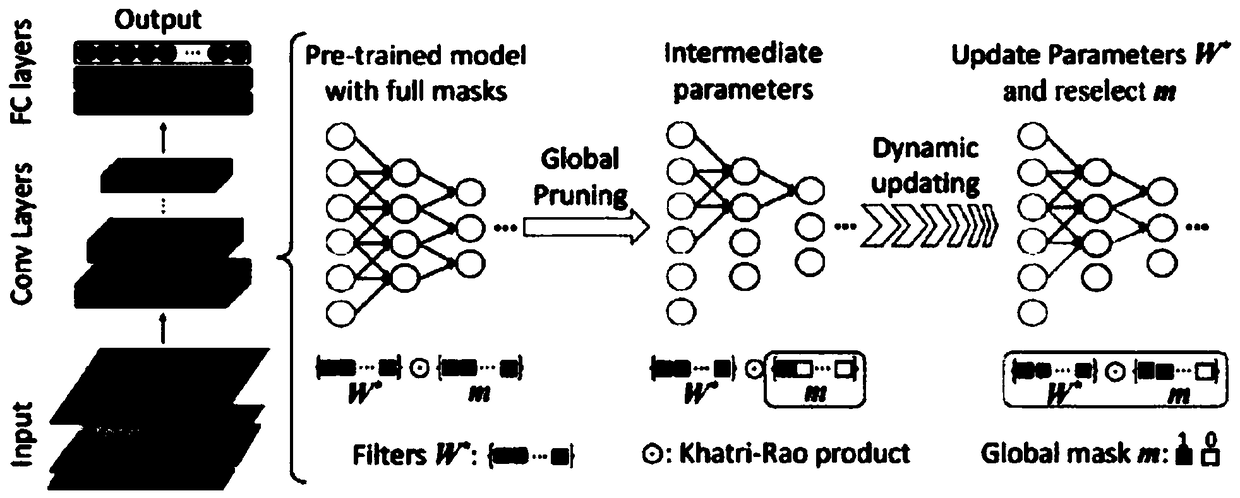

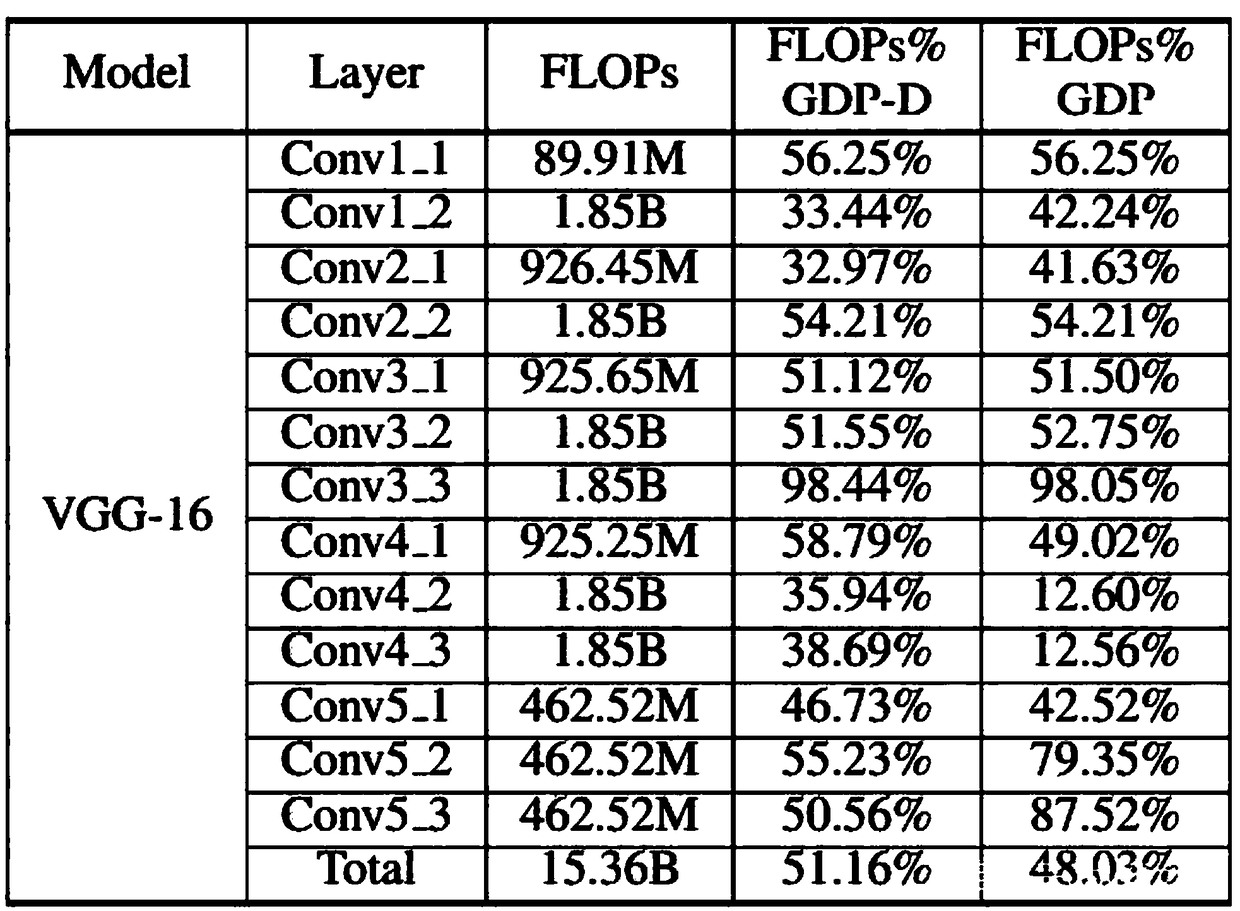

[0056] Neural network models in general contain a large amount of information redundancy, leaving room for reduction both in the number of parameters and in the representation precision of those parameters. Building on world-leading research results in neural network model compression, the global and dynamic pruning and acceleration method (DGP) prunes redundant filters to solve the two problems above. It greatly speeds up the pruned network while reducing the network's accuracy loss, and it reduces the computation and size of the network model several-fold while keeping the algorithm's accuracy essentially unchanged.
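Why pruning filters cuts computation "several times" can be checked with simple arithmetic. Removing a filter from one convolution layer removes one of its output channels and also one input channel of the next layer. An interior layer therefore shrinks roughly by the square of the keep ratio. The sketch below is illustrative only: the three layer shapes and the 50% keep ratio are hypothetical, biases are ignored, and stride and padding are folded into the given output sizes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvLayer:
    k: int      # square kernel size
    c_in: int   # input channels
    c_out: int  # output channels, i.e. number of filters
    h_out: int  # output feature-map height
    w_out: int  # output feature-map width

    def params(self) -> int:
        # Weight count only (bias ignored).
        return self.k * self.k * self.c_in * self.c_out

    def macs(self) -> int:
        # Each output element needs k*k*c_in multiply-accumulates.
        return self.params() * self.h_out * self.w_out


def prune_chain(layers: List[ConvLayer], keep_ratio: float) -> List[ConvLayer]:
    """Keep `keep_ratio` of the filters in every layer of a plain conv chain.

    Dropping filters in layer i also drops the matching input channels of
    layer i+1, so interior layers shrink by roughly keep_ratio ** 2.
    """
    pruned = []
    prev_out = None
    for layer in layers:
        c_in = layer.c_in if prev_out is None else prev_out
        c_out = max(1, round(layer.c_out * keep_ratio))
        pruned.append(ConvLayer(layer.k, c_in, c_out, layer.h_out, layer.w_out))
        prev_out = c_out
    return pruned


# Hypothetical three-layer chain (3x3 kernels, shapes chosen for illustration).
net = [ConvLayer(3, 3, 64, 32, 32), ConvLayer(3, 64, 128, 16, 16), ConvLayer(3, 128, 256, 8, 8)]
small = prune_chain(net, keep_ratio=0.5)
param_ratio = sum(l.params() for l in net) / sum(l.params() for l in small)
mac_ratio = sum(l.macs() for l in net) / sum(l.macs() for l in small)
print(f"params: {param_ratio:.2f}x smaller, MACs: {mac_ratio:.2f}x fewer")
# → params: 3.98x smaller, MACs: 3.83x fewer
```

Keeping half the filters gives close to a 4x reduction rather than 2x, because both channel dimensions of each interior layer shrink. The first layer does not reach the full factor: its 3 image input channels are never pruned.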

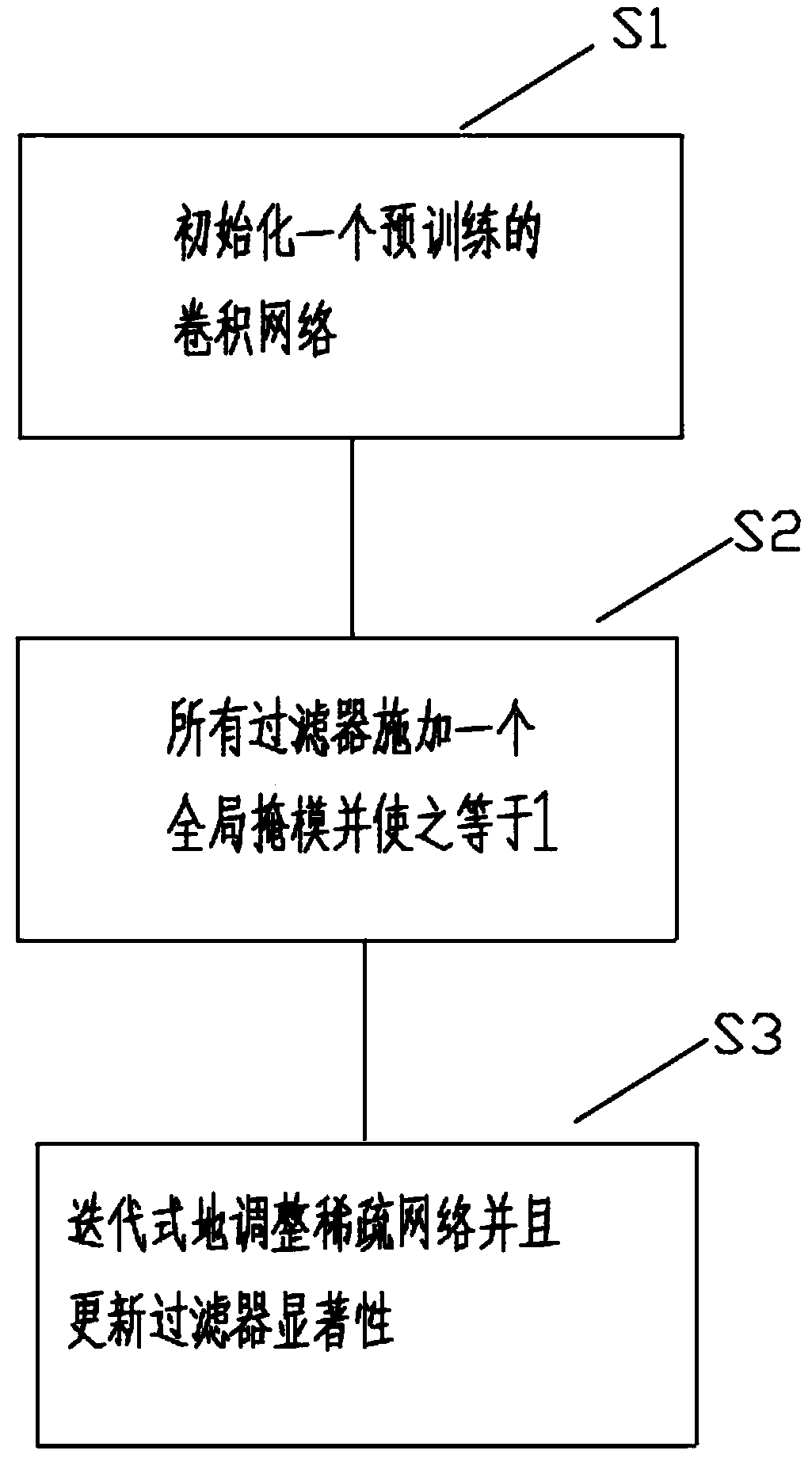

[0057] The present invention evaluates the weights of each filter globally across all network layers, then dynamically and iteratively prunes and adjusts the network ac...
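The global ranking step can be sketched as follows. Paragraph [0057] is truncated, so this example does not reproduce the DGP scoring rule or its pruning schedule. Several pieces are hypothetical:

- the filter score (mean absolute weight, so filters from layers with different kernel sizes are comparable);
- the dict-of-lists model representation;
- the ratio schedule;
- the `fine_tune` callback.

The example shows one plausible reading of "global and dynamic." All filters from all layers are ranked together, not per layer. The masks are recomputed from the current weights in every round, so a filter pruned earlier can come back if fine-tuning changes its weights.

```python
from typing import Callable, Dict, List

Filter = List[float]             # flattened weights of one filter
Model = Dict[str, List[Filter]]  # hypothetical: layer name -> filters
Masks = Dict[str, List[bool]]    # True = filter kept


def filter_score(f: Filter) -> float:
    # Assumed criterion: mean absolute weight (size-normalised L1 norm).
    return sum(abs(w) for w in f) / len(f)


def global_masks(model: Model, prune_ratio: float) -> Masks:
    """Rank every filter of every layer together and prune the lowest `prune_ratio`."""
    scored = sorted(
        (filter_score(f), name, i)
        for name, filters in model.items()
        for i, f in enumerate(filters)
    )
    n_prune = int(len(scored) * prune_ratio)
    masks = {name: [True] * len(filters) for name, filters in model.items()}
    for _, name, i in scored[:n_prune]:
        masks[name][i] = False
    # Never empty a layer completely: revive its highest-scoring filter.
    for name, filters in model.items():
        if not any(masks[name]):
            best = max(range(len(filters)), key=lambda j: filter_score(filters[j]))
            masks[name][best] = True
    return masks


def iterative_prune(
    model: Model,
    schedule: List[float],
    fine_tune: Callable[[Model, Masks], None],
) -> Masks:
    """Raise the prune ratio step by step, re-ranking globally each round."""
    masks: Masks = {}
    for ratio in schedule:
        # Recomputed from current weights, so earlier pruning decisions can be undone.
        masks = global_masks(model, ratio)
        fine_tune(model, masks)  # hypothetical training step supplied by the caller
    return masks


model = {
    "conv1": [[0.9, -0.8], [0.01, 0.02], [0.5, 0.4]],
    "conv2": [[0.05, 0.0, 0.05, 0.0], [0.7, -0.6, 0.8, 0.1]],
}
print(global_masks(model, prune_ratio=0.4))
# → {'conv1': [True, False, True], 'conv2': [False, True]}
```

Here the two globally weakest filters come from different layers, and they are pruned together. A per-layer rule would instead remove a fixed share from each layer. The layer guard can leave fewer than `n_prune` filters pruned, which trades exact ratio control for a network that stays connected.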
