Robot multi-camera visual-inertial real-time positioning method and device

A multi-camera vision and real-time positioning technology, applied in the field of robot navigation, can solve the problems of blurred imaging, incompetence of pure visual positioning methods, and inconspicuous visual features, and achieve the effect of improving positioning accuracy.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0042] Below, the technical solution of the present invention will be further described in conjunction with the accompanying drawings and specific embodiments:

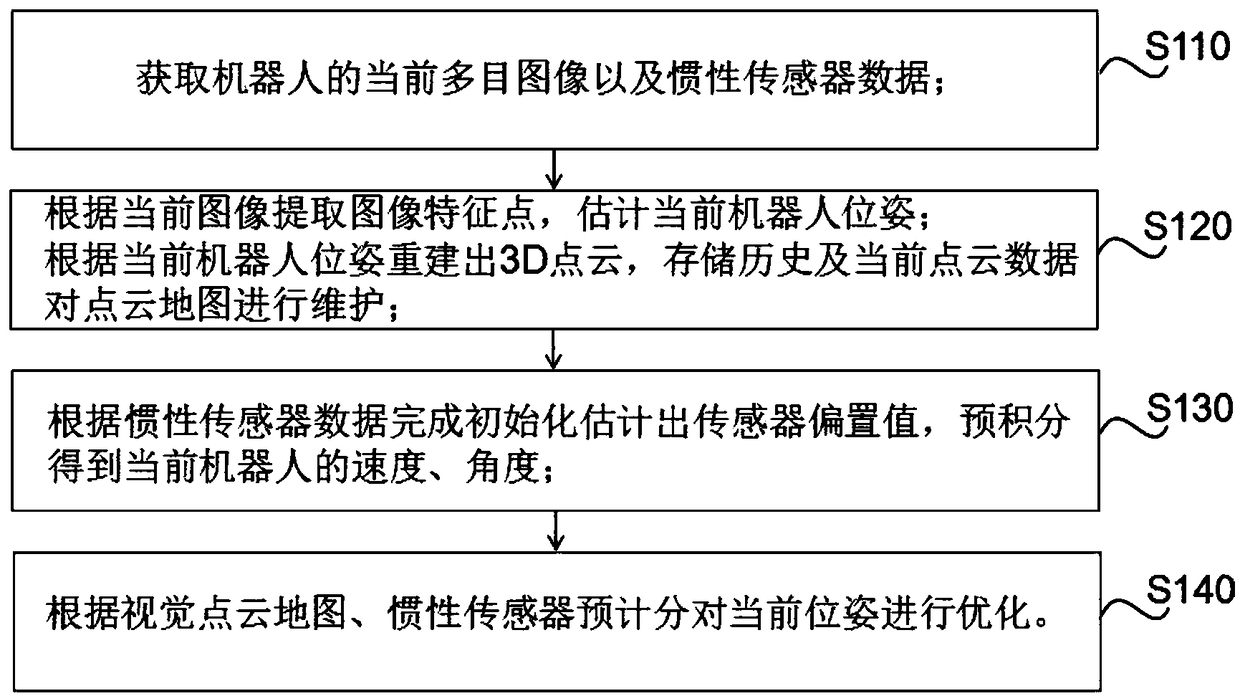

[0043] figure 1 It is a schematic flow chart of the multi-camera visual-inertial real-time positioning method for a robot of the present invention, and the present invention discloses a multi-camera visual-inertial real-time positioning method for a robot, comprising the following steps:

[0044] Obtain the current multi-eye image and inertial sensor data of the robot;

[0045] Extract image feature points according to the current image, estimate the current robot pose; reconstruct a 3D point cloud according to the current robot pose, store historical and current point cloud data to maintain the visual point cloud map;

[0046] Complete the initialization and estimate the sensor bias value according to the inertial sensor data, and pre-integrate to obtain the current speed and angle of the robot;

[0047] Optimize t...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com