An asynchronous on-line calibration method for multi-sensor fusion

A multi-sensor fusion calibration method, applied in the field of asynchronous online calibration for multi-sensor fusion, that addresses the problems of time synchronization and a complex calibration process.

Embodiment Construction

[0072] The present invention will be further described below in conjunction with the accompanying drawings.

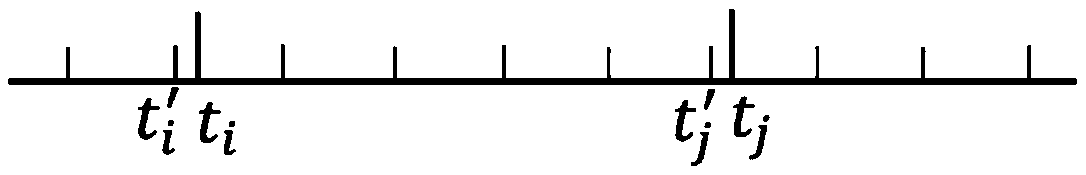

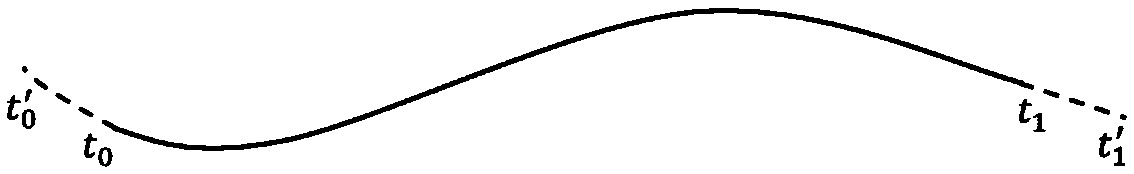

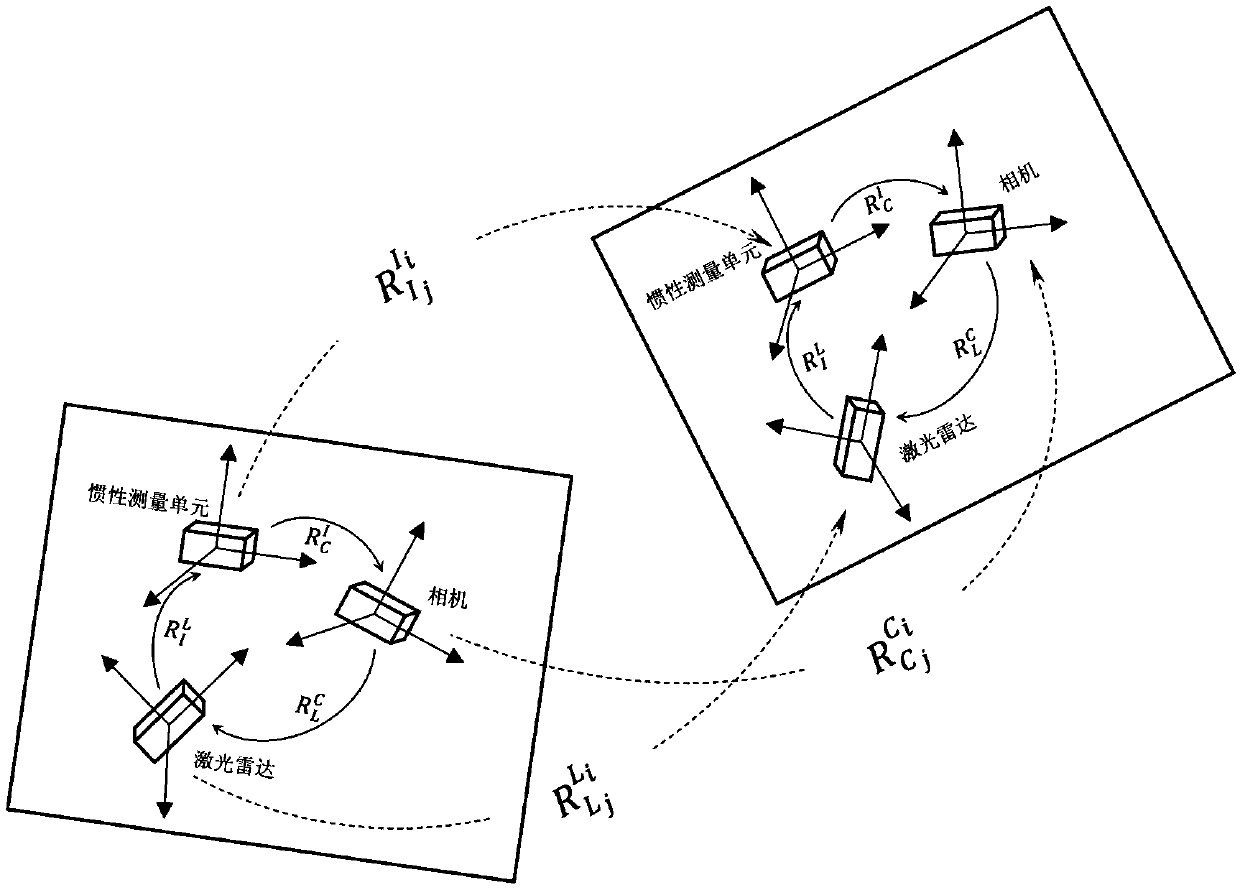

[0073] Referring to Figures 1 to 4, an asynchronous online calibration method for multi-sensor fusion comprises the following steps:

[0075] 1) Calculate the rotation of the camera

[0076] Let P be a point in the camera coordinate system with coordinates $[x\ y\ z]^T$. Let $p_1$ and $p_2$ be the projections of P in images $F_i$ and $F_j$, and let $C_i$ and $C_j$ be the camera coordinate systems at time i and time j, respectively. Using the pinhole camera model, the following expressions hold up to scale:

[0077] $p_1 = KP$

[0078] $p_2 = K(RP + t)$ (1.1)

[0079] where K is the intrinsic matrix of the camera, and R and t are the rotation matrix and translation vector from $C_i$ to $C_j$. Rearranging (1.1) gives the epipolar constraint:

[0080]
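
For orientation, the textbook form of the epipolar constraint can be derived from (1.1) as sketched here. This is the standard result and is not necessarily the exact notation of the patent's own equation. The normalized coordinates $x_1$, $x_2$ and the cross-product matrix $[t]_\times$ are symbols introduced for this sketch, and $\simeq$ denotes equality up to scale.

$$
\begin{aligned}
x_1 &= K^{-1} p_1 \simeq P, \qquad x_2 = K^{-1} p_2 \simeq R x_1 + t \\
[t]_\times x_2 &\simeq [t]_\times R\, x_1 \qquad (\text{since } [t]_\times t = 0) \\
0 &= x_2^T [t]_\times R\, x_1 \qquad (\text{since } x_2^T [t]_\times x_2 = 0) \\
0 &= p_2^T K^{-T} [t]_\times R\, K^{-1} p_1
\end{aligned}
$$

In this form, $E = [t]_\times R$ is usually called the essential matrix and $F = K^{-T} E K^{-1}$ the fundamental matrix.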

[0081]...
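
The relations in step 1) can be checked numerically. The following is a minimal sketch in plain Python, not the patent's implementation: it projects a point with the pinhole model of (1.1) and evaluates the standard epipolar residual $p_2^T K^{-T} [t]_\times R K^{-1} p_1$, which should vanish for a consistent R and t. All function names and the numeric values of K, R, t and P are hypothetical, and K is assumed to have zero skew.

```python
import math

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def skew(t):
    """Cross-product matrix [t]_x, so that matvec(skew(t), v) equals t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]

def inv_intrinsics(K):
    """Invert a pinhole intrinsic matrix, assuming zero skew."""
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    return [[1.0 / fx, 0.0, -cx / fx], [0.0, 1.0 / fy, -cy / fy], [0.0, 0.0, 1.0]]

def rot_y(angle):
    """Rotation by `angle` radians about the y axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def epipolar_residual(K, R, t, P):
    """Project P with (1.1), then return p2^T K^-T [t]_x R K^-1 p1."""
    p1 = matvec(K, P)                                # p1 = K P (up to scale)
    RP = matvec(R, P)
    p2 = matvec(K, [a + b for a, b in zip(RP, t)])   # p2 = K (R P + t)
    K_inv = inv_intrinsics(K)
    E = matmul(skew(t), R)                           # essential matrix
    F = matmul(matmul(transpose(K_inv), E), K_inv)   # fundamental matrix
    return sum(a * b for a, b in zip(p2, matvec(F, p1)))

# Hypothetical example values, chosen only for illustration.
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
R = rot_y(0.1)
t = [0.5, 0.0, 0.1]
P = [0.3, -0.2, 4.0]
residual = epipolar_residual(K, R, t, P)
print(abs(residual) < 1e-9)
```

The homogeneous scale is left in `p1` and `p2` on purpose: the constraint is bilinear, so any nonzero scaling of either projection leaves a zero residual at zero.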
