A vision-based robot navigation method for agricultural and forestry parks

A vision-based navigation method for robots operating in agricultural and forestry parks. It addresses two problems: GPS cannot position the robot accurately, and the robot cannot turn around accurately between rows. The method achieves correct navigation, eliminates constraints, and simplifies the navigation algorithm.

Embodiment Construction

[0021] Embodiments of the present invention are described below with reference to the drawings, in which like parts are denoted by like reference numerals. Where no conflict arises, the following embodiments and the technical features in them can be combined with each other. The invention is described below using an orchard as an example, but it applies equally to forest areas.

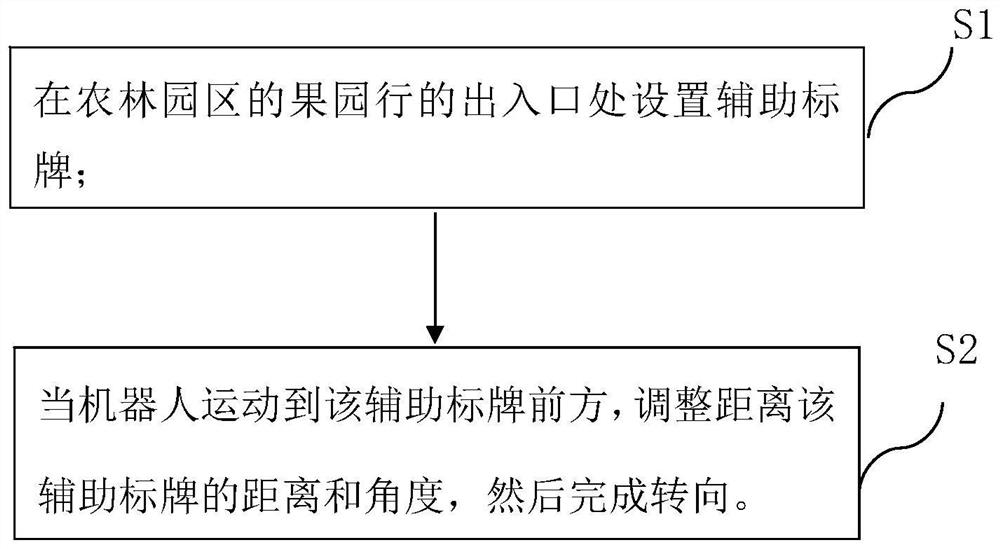

[0022] Figure 1 shows an embodiment of the method of the present invention. The method includes: S1, setting auxiliary signs at the entrances and exits of the orchard rows in the agricultural and forestry park; S2, when the robot moves in front of an auxiliary sign, adjusting its distance and angle relative to the sign, and then completing the turn.
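The adjustment in step S2 can be illustrated with a minimal sketch. It assumes the vision system already reports the robot's distance and bearing to the auxiliary sign; the source does not describe how these are measured or which control law is used. The names `SignObservation` and `alignment_command`, the proportional gains, the target distance, and the tolerances are all hypothetical values chosen for illustration, not part of the described method.

```python
import math
from dataclasses import dataclass


@dataclass
class SignObservation:
    """Hypothetical vision output: pose of the auxiliary sign relative to the robot."""
    distance_m: float  # range from the robot to the sign, in metres
    angle_rad: float   # bearing of the sign relative to the robot heading (0 = facing it)


def alignment_command(
    obs: SignObservation,
    target_distance_m: float = 1.0,             # assumed stand-off distance before turning
    dist_tol_m: float = 0.05,                   # assumed distance tolerance
    angle_tol_rad: float = math.radians(2.0),   # assumed angle tolerance
    k_lin: float = 0.5,                         # assumed proportional gain, linear velocity
    k_ang: float = 1.0,                         # assumed proportional gain, angular velocity
):
    """Return (linear_velocity, angular_velocity, aligned).

    A simple proportional controller: drive the distance error and the
    bearing error toward zero. When both are within tolerance, the robot
    stops and reports that it is ready to start the turn.
    """
    dist_err = obs.distance_m - target_distance_m
    ang_err = obs.angle_rad
    aligned = abs(dist_err) <= dist_tol_m and abs(ang_err) <= angle_tol_rad
    if aligned:
        return 0.0, 0.0, True
    return k_lin * dist_err, k_ang * ang_err, False


if __name__ == "__main__":
    # Robot is 2 m from the sign and 0.1 rad off-axis: keep adjusting.
    print(alignment_command(SignObservation(distance_m=2.0, angle_rad=0.1)))
    # Robot is at the target distance and facing the sign: ready to turn.
    print(alignment_command(SignObservation(distance_m=1.0, angle_rad=0.0)))
```

In this sketch the turn itself would only be triggered once `aligned` is true, which mirrors the order given in S2: adjust distance and angle first, then turn.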

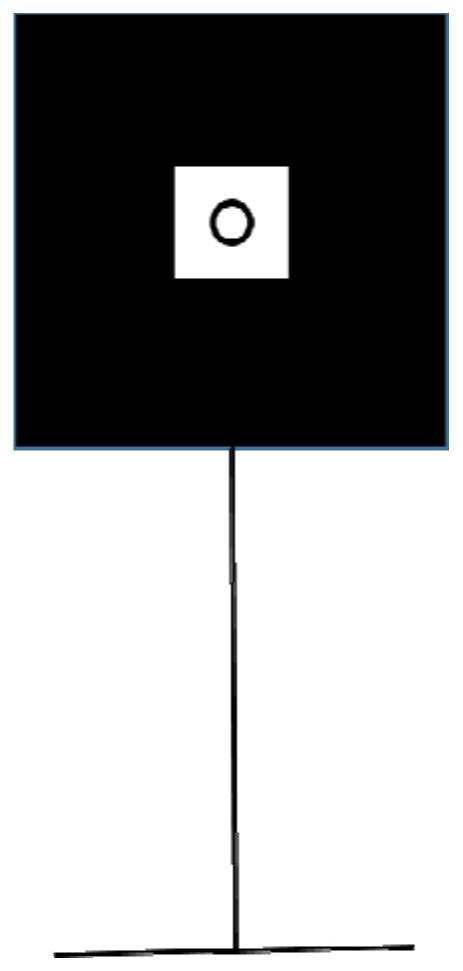

[0023] In one embodiment, the auxiliary sign can have a special design, as shown in Figures 2 to 5. The auxiliary sign adopts a graphic with...
