A large-screen interaction system based on laser radar positioning

A lidar-based interactive system technology, applied in the field of human-computer interaction. It addresses the problems that lidar output cannot be applied directly, that the volume of lidar output data is large, and that the data decoding algorithm is complex. It achieves real-time, accurate collection of interaction information, strong reusability, and efficient, accurate positioning information.

Examples

Embodiment 1

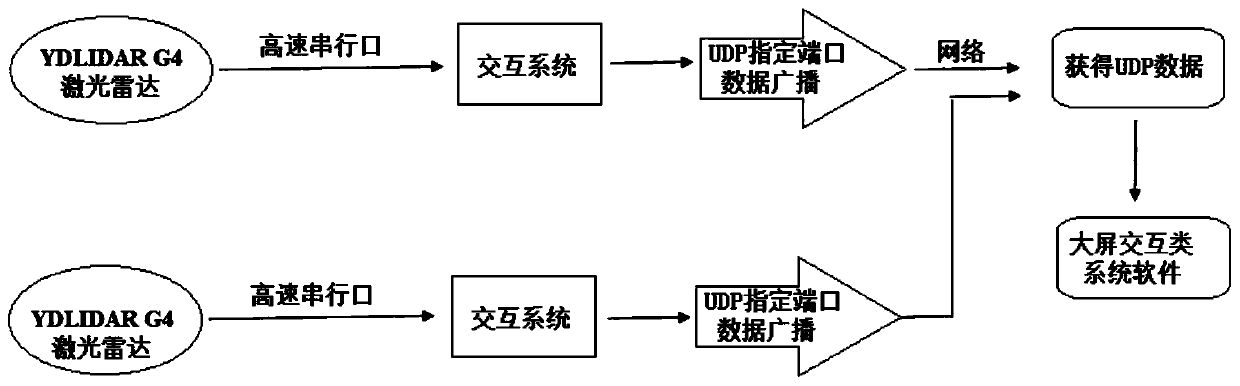

[0064] A large-screen interactive system based on lidar positioning, as shown in Figure 1, uses a YDLIDAR G4 lidar to collect data. The interactive system includes the following modules:

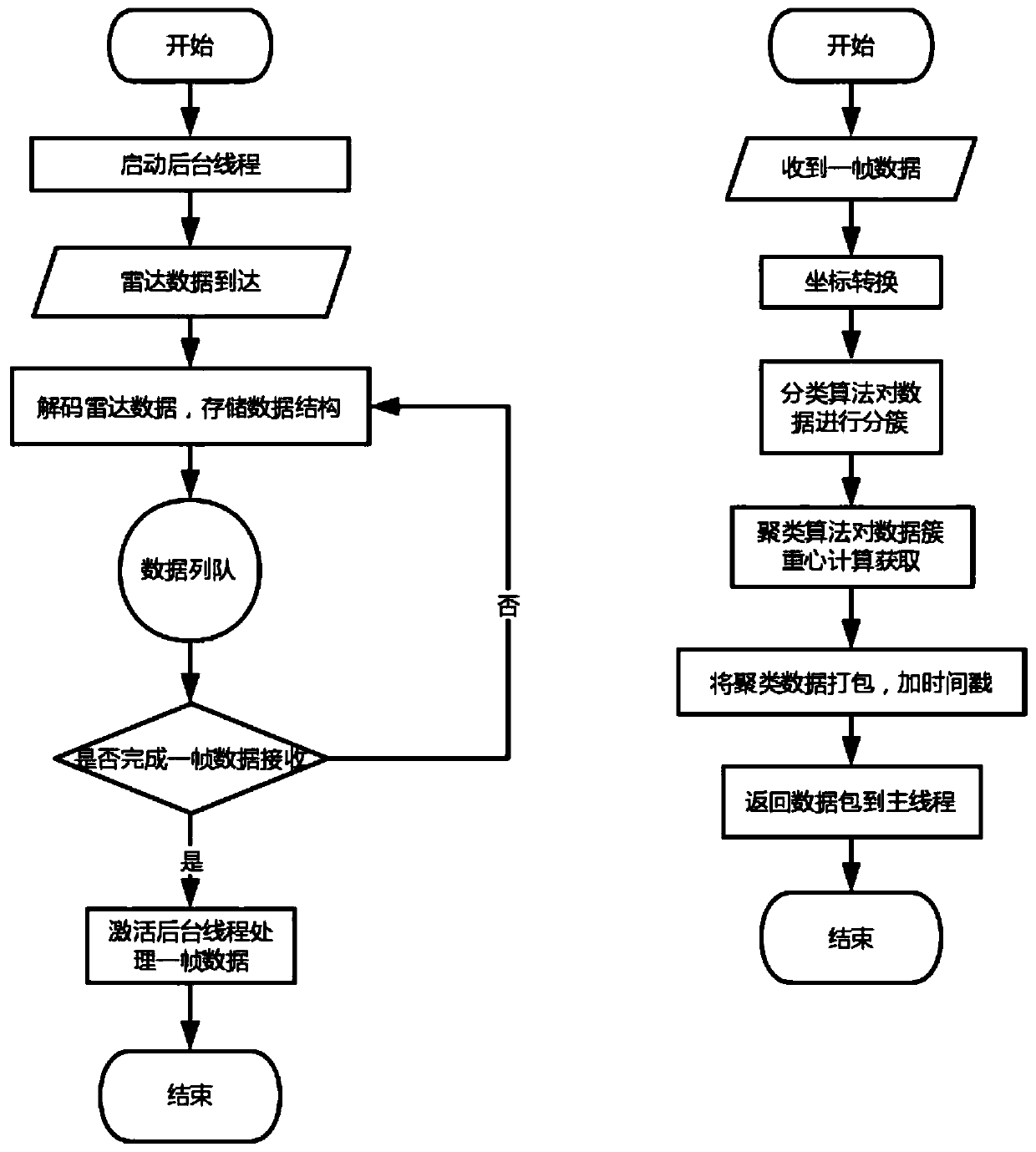

[0065] (1) The data acquisition and processing module collects and processes the lidar serial port data, then obtains effective coordinates by classifying the data, clustering it, and computing the result. The specific process, shown in Figure 2, is as follows:

[0066] S11. Start a background thread that obtains the radar serial port data in real time and decodes the data as soon as it arrives;

[0067] S12. Verify and parse the obtained radar serial port data to obtain scanning coordinates (x, y):

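[0068] Angle analysis: $\mathrm{Angle}_i = (\mathrm{AngleS} - \mathrm{AngleE}) \times (i - 1) + \mathrm{Angle}_1$

[0069] Distance analysis: $\mathrm{Distance}_i = \big((\mathtt{Dis\_q2\_LL} \ll 8) + \mathtt{Dis\_q2\_HH}\big) / 4$

[0070] $x = \mathrm{Distance}_i \cdot \cos(\pi \cdot \mathrm{Angle}_i / 180)$

[0071] $y = \mathrm{Distance}_i \cdot \sin(\pi \cdot \mathrm{Angle}_i$...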
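The distance decoding and the polar-to-Cartesian step above can be sketched in Python as follows. This is a minimal illustration, not the patent's implementation: the function names are hypothetical, the byte order follows paragraph [0069] exactly as printed, and the y term is assumed to mirror the x term of [0070] because the source text is cut off. Angle interpolation is left out.

```python
import math
from typing import Tuple


def decode_distance(dis_ll: int, dis_hh: int) -> float:
    """Distance as printed in [0069]: ((Dis_q2_LL << 8) + Dis_q2_HH) / 4."""
    return ((dis_ll << 8) + dis_hh) / 4


def to_cartesian(distance: float, angle_deg: float) -> Tuple[float, float]:
    """Convert a (distance, angle in degrees) sample to (x, y).

    Assumption: y uses sin with the same argument that [0070] uses for cos.
    """
    theta = math.pi * angle_deg / 180
    return distance * math.cos(theta), distance * math.sin(theta)


if __name__ == "__main__":
    d = decode_distance(0x0F, 0xA0)   # (0x0F << 8) + 0xA0 = 4000, then / 4
    print(d, to_cartesian(d, 90.0))
```

Keeping the decode and the conversion as separate pure functions makes each easy to check against a captured serial frame before wiring it into the background thread of step S11.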

Embodiment 2

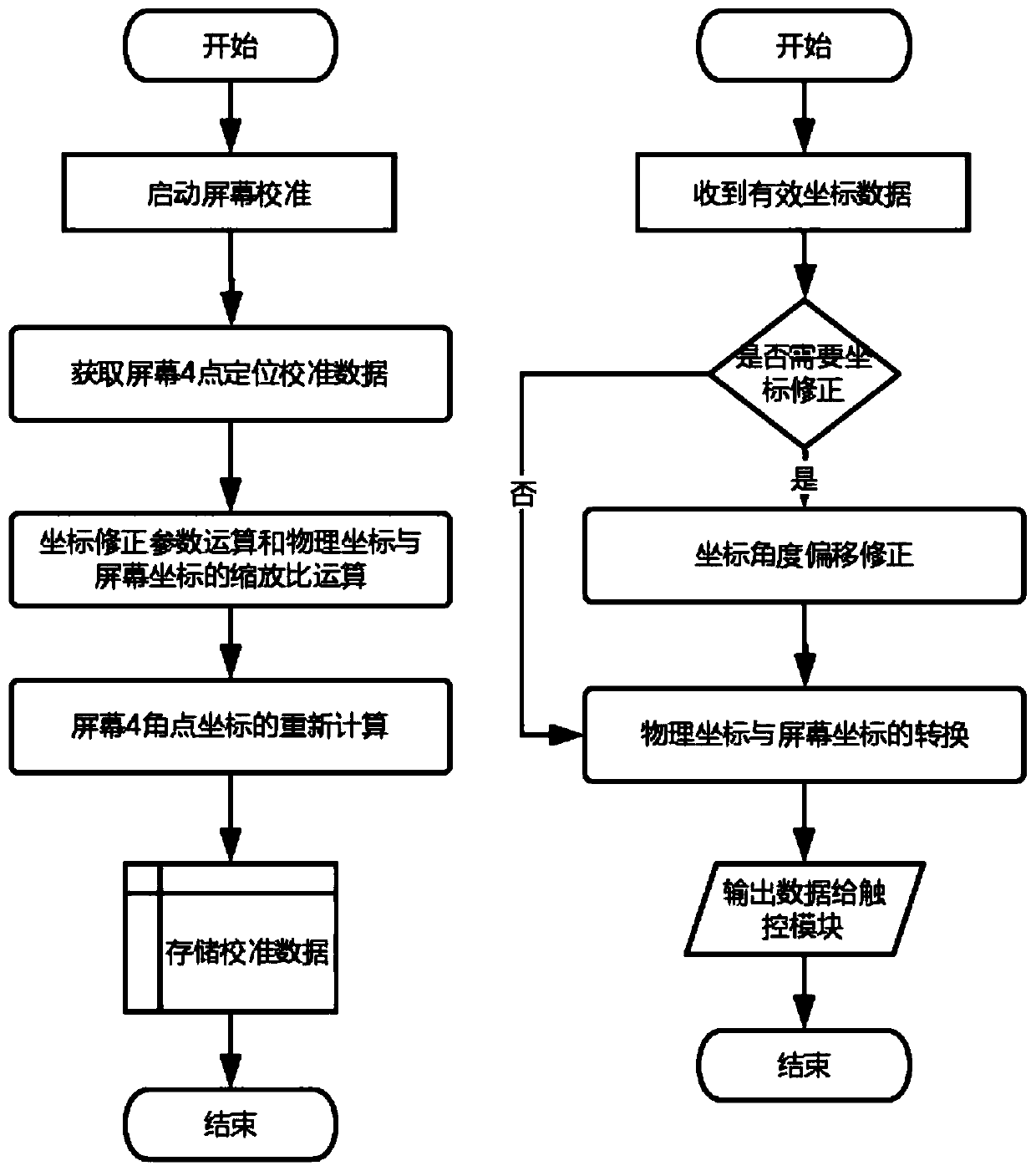

[0101] The scheme of Embodiment 2 is basically the same as that of Embodiment 1; the only difference is the specific operation process of the coordinate conversion module, which is as follows, as shown in Figure 3:

[0102] S21. Through the mapping relationship, the effective coordinates $(x_3, y_3)$ are converted to screen coordinates $(x_4, y_4)$;

[0103] Start the screen calibration. According to the screen resolution of the system in use, obtain calibration data for the coordinates of the four corners of the screen, $(X_0, Y_0)$, $(X_0, Y_1)$, $(X_1, Y_0)$, $(X_1, Y_1)$, then calculate the four-corner coordinate offset correction parameters and the mapping relationship between physical coordinates and screen coordinates. The software obtains the screen resolution, uses the ratio of screen coordinates to physical coordinates to recalculate the offset parameters for the four corner coordinates of the screen, and uses the effective coordinate data of the four corners of the screen to obtain calibrat...
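One way to picture the mapping from physical coordinates to screen coordinates is a per-axis linear scale built from the calibrated corners. The sketch below is an assumption-laden illustration: it treats the four corners as an axis-aligned rectangle (which the corner pattern $(X_0, Y_0)$ to $(X_1, Y_1)$ suggests), ignores any further offset correction the patent may apply, and uses the hypothetical name `make_screen_mapper`.

```python
def make_screen_mapper(x0, y0, x1, y1, width, height):
    """Return a function mapping physical (x, y) to screen pixel coordinates.

    (x0, y0) and (x1, y1) are the calibrated physical coordinates of two
    opposite screen corners; width and height are the screen resolution.
    """
    if x1 == x0 or y1 == y0:
        raise ValueError("calibration corners must span a non-zero area")

    def to_screen(x, y):
        # Offset by the origin corner, then scale by pixels per physical unit.
        sx = (x - x0) * width / (x1 - x0)
        sy = (y - y0) * height / (y1 - y0)
        return sx, sy

    return to_screen


if __name__ == "__main__":
    # Hypothetical calibration: the screen spans x in [-500, 500], y in [100, 700].
    to_screen = make_screen_mapper(-500, 100, 500, 700, 1920, 1080)
    print(to_screen(0, 400))  # centre of the physical area -> (960.0, 540.0)
```

Because the mapper is rebuilt from the corner data and the resolution, recalibrating for a different display only means calling `make_screen_mapper` again with new values.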

Embodiment 3

[0118] The scheme of Embodiment 3 is basically the same as that of Embodiment 2; the only difference is the specific operation process of the touch module, which is as follows, as shown in Figure 4:

[0119] S31. Determine whether the screen coordinates obtained by the coordinate conversion module support multi-touch. When they do, run the multi-touch data algorithm; when they do not, run the single-touch data algorithm. The data algorithm analysis yields touch data for single touch and multi-touch;

[0120] Touch recognition: double-buffer the screen coordinate data structure, use two frames of data to calculate the distance between coordinate points, and compare the timestamps of the data packets to determine whether the touch is consistent and valid. Then track the touch data and assign a touch tracking ID that marks each screen coordinate packet.

[0121] S32. Then determine whether the tou...
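The two-frame comparison described in [0120] can be sketched as a small tracker that keeps the previous frame, matches each new point to the nearest previous touch, and issues a new ID when nothing is close enough or the frames are too far apart in time. This is an illustrative sketch only: the class name, the greedy nearest-neighbour matching, and both thresholds are assumptions, not values from the patent.

```python
import math
from itertools import count


class TouchTracker:
    """Assign stable IDs to touch points by comparing two consecutive frames."""

    def __init__(self, max_jump=50.0, max_gap_s=0.1):
        self.max_jump = max_jump    # hypothetical: max pixels a touch may move per frame
        self.max_gap_s = max_gap_s  # hypothetical: max seconds between matching frames
        self._prev = {}             # previous frame buffer: {touch_id: (x, y)}
        self._prev_ts = None
        self._ids = count(1)

    def update(self, points, timestamp):
        """Return {touch_id: (x, y)} for the current frame."""
        stale = self._prev_ts is None or timestamp - self._prev_ts > self.max_gap_s
        candidates = {} if stale else dict(self._prev)
        current = {}
        for p in points:
            # Greedy match: nearest unclaimed touch from the previous frame.
            best = min(candidates, key=lambda i: math.dist(candidates[i], p), default=None)
            if best is not None and math.dist(candidates[best], p) <= self.max_jump:
                current[best] = p
                del candidates[best]
            else:
                current[next(self._ids)] = p
        # Swap buffers: the current frame becomes the previous one.
        self._prev, self._prev_ts = current, timestamp
        return current


if __name__ == "__main__":
    tracker = TouchTracker()
    print(tracker.update([(100, 100), (400, 400)], 0.00))
    print(tracker.update([(405, 398), (103, 101)], 0.02))
```

Greedy matching is the simplest choice and can mis-assign IDs when two touches cross paths; a real multi-touch path might need a global assignment step instead.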
