A fatigue detection method based on deep learning face posture estimation

A face pose estimation and fatigue detection technology, applied in the fields of computing, computer components, and instruments. It addresses the inability to accurately identify and judge the driver's state, and thereby reduces the probability of accidents. The design is ingenious and strongly resistant to interference.


Embodiment Construction

[0025] The following will clearly and completely describe the technical solutions in the embodiments of the present invention with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only some, not all, embodiments of the present invention.

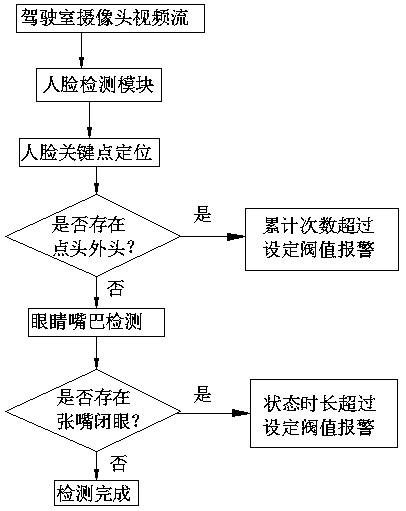

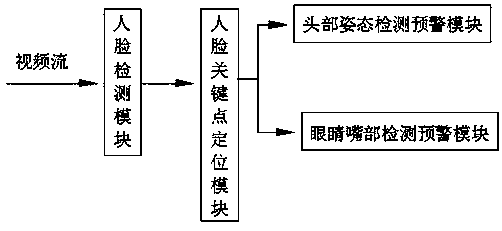

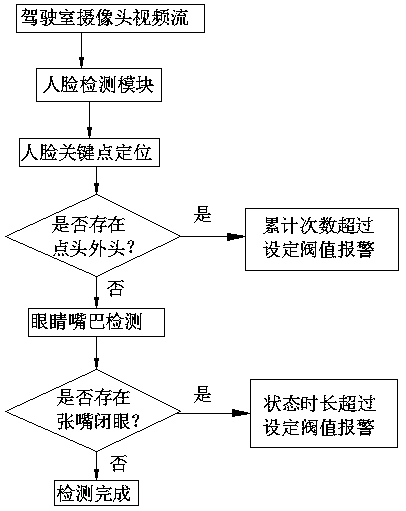

[0026] Referring to Figures 1-2, a fatigue detection method based on deep learning face pose estimation comprises the following steps:

[0027] S1. Obtaining the video stream from the cab camera: first, a video of the driver's driving state is collected by the on-board camera in the cab, and the face region is detected using a HOG extraction algorithm and marked with a rectangular bounding box. This step is performed by the face recognition module, which uses the on-board camera installed in front of the driver to collect the driving-state video, uses a HOG-based face detector to examine each frame of the video stream, and identifies the area ...
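As an illustration of the HOG (histogram of oriented gradients) features that such a face detector relies on, the following minimal Python sketch computes an unsigned gradient-orientation histogram for a single grayscale cell. It is not taken from the patent: the function name `cell_hog`, the 9-bin layout, and the 8x8 cell size are assumptions chosen for illustration. A full detector would also normalize histograms over blocks and score sliding windows with a trained classifier, which is omitted here.

```python
import math

def cell_hog(cell, n_bins=9):
    """Unsigned orientation histogram for one grayscale cell.

    `cell` is a list of equal-length rows of pixel intensities.
    Hypothetical helper for illustration only, not the patent's code.
    """
    height, width = len(cell), len(cell[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins  # unsigned gradients span 0..180 degrees

    # Central differences on interior pixels only (borders are skipped).
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Fold the direction into [0, 180) so opposite gradients share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            index = int(angle // bin_width) % n_bins
            hist[index] += magnitude  # each pixel votes with its gradient magnitude
    return hist

# Example: an 8x8 cell with a vertical edge (dark left half, bright right half).
vertical_edge = [[0, 0, 0, 0, 255, 255, 255, 255] for _ in range(8)]
hist_vertical = cell_hog(vertical_edge)

# Example: the same edge rotated by 90 degrees (dark top half, bright bottom half).
horizontal_edge = [[0] * 8 for _ in range(4)] + [[255] * 8 for _ in range(4)]
hist_horizontal = cell_hog(horizontal_edge)
```

In this sketch the vertical edge puts all of its gradient energy into the first bin (horizontal gradient direction), while the horizontal edge puts it into the bin containing 90 degrees. Differences in orientation patterns of this kind are what a HOG-based detector uses to separate face regions from background.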
