A Vision-Based Perception Method for External State of Spatial Cellular Robot

A state-perception robotics technology, applicable to manipulators, program-controlled manipulators, manufacturing tools, and similar fields. It addresses problems such as method failure and large image changes in the target area, reduces depth uncertainty, and achieves a wide range of applicability.


Embodiment 1

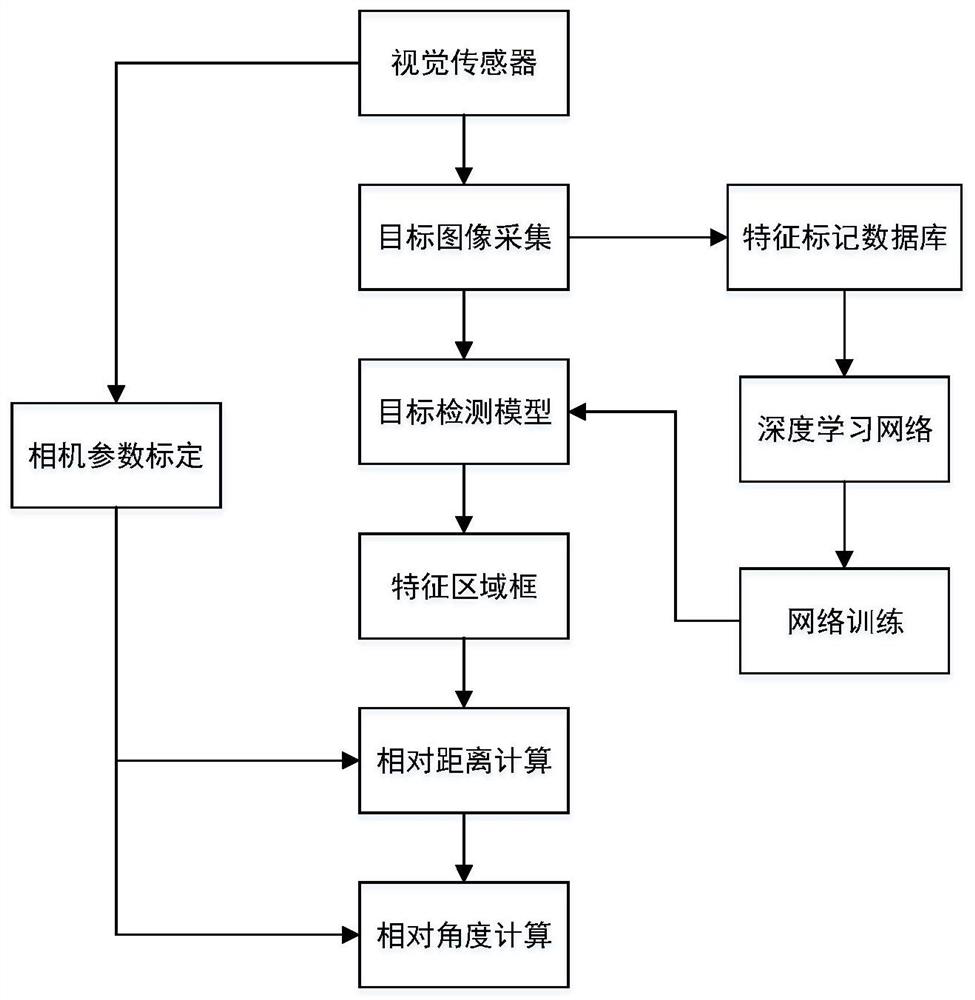

[0044] Embodiment 1: As shown in Figures 1-4, the vision-based method for perceiving the external state of a space cell robot in this embodiment comprises the following specific steps:

[0045] (1) Monocular camera calibration of the space cell robot

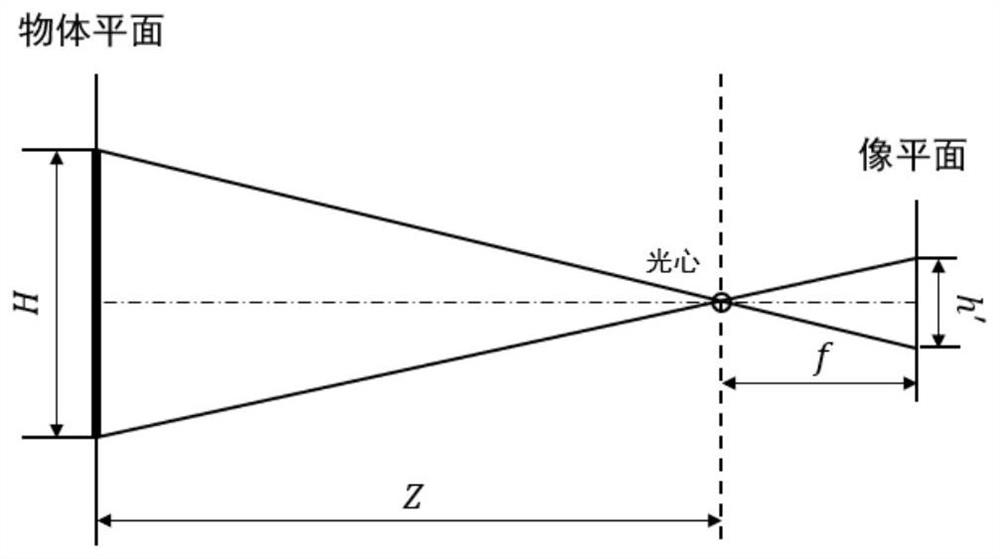

[0046] Based on the pinhole camera model, the intrinsic and extrinsic parameter model of the camera is derived, as shown in formula (1):

[0047]

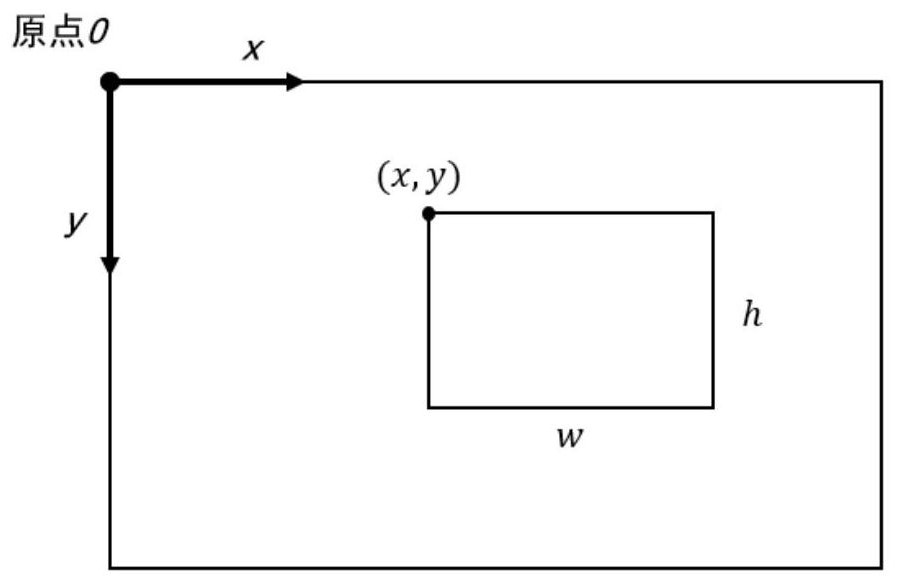

[0048] where [X, Y, Z]^T are the coordinates of point P in the world coordinate system, [u, v]^T are the coordinates of P in the pixel coordinate system, α and β are the magnification factors from the pixel coordinate system to the image plane coordinate system along the u-axis and v-axis respectively, [c_x, c_y]^T is the translation vector from the pixel coordinate system to the image plane coordinate system, f is the focal length of the space cell robot's USB camera, and f_x = αf and f_y = βf are respectively the magnification factors from the pixel coordinate system to the world coordinate system along the x...
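To make the roles of these parameters concrete, the following is a minimal sketch of the standard pinhole projection that this kind of intrinsic and extrinsic model describes. It is not taken from the patent: the function name `project_point`, the rotation `R`, the translation `t`, and all numeric values are illustrative assumptions, and lens distortion is ignored.

```python
def project_point(p_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with an ideal pinhole model.

    R (3x3 nested list) and t (length-3 list) are assumed extrinsics mapping
    world coordinates into the camera frame; fx, fy, cx, cy are the intrinsics.
    """
    # Extrinsic step: world frame -> camera frame, P_c = R * P_w + t
    xc, yc, zc = (
        sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point must lie in front of the camera (Z_c > 0)")
    # Intrinsic step: perspective division, then scaling and principal-point shift
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    return u, v


# Hypothetical values, chosen only for illustration
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
NO_SHIFT = [0.0, 0.0, 0.0]
fx, fy, cx, cy = 800.0, 800.0, 320.0, 240.0

u, v = project_point((0.1, -0.05, 2.0), IDENTITY, NO_SHIFT, fx, fy, cx, cy)
print(u, v)
```

With identity extrinsics, a point on the optical axis projects to the principal point [c_x, c_y]^T, which is a quick sanity check for any set of calibrated parameters.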

Embodiment 2

[0073] Embodiment 2: As shown in Figures 1-4, in the simulation verification of the vision-based external state perception method for a space cell robot in this embodiment, the robot's USB camera is calibrated with Zhang Zhengyou's calibration method using a 6×9 checkerboard calibration board. The checkerboard is photographed from different angles, and 20 pictures are selected for corner point extraction. The calibration results are shown in the following table:

[0074] Table 1 Calibration results of camera parameters

[0075]
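Zhang's method relies on a planar target whose corner positions are known in the board's own coordinate frame, and a calibration result is commonly judged by its reprojection error. The sketch below illustrates those two pieces only. It is an assumption-laden example, not the patent's implementation: it treats 6×9 as the corner grid, uses a hypothetical 25 mm square size, and the helper names are invented for illustration. The source does not state which software performed the calibration.

```python
import math


def checkerboard_points(cols, rows, square_size):
    """Return the 3D corner coordinates of a planar board, all with Z = 0.

    Corners are listed row by row in the board's own coordinate frame.
    """
    return [
        (c * square_size, r * square_size, 0.0)
        for r in range(rows)
        for c in range(cols)
    ]


def mean_reprojection_error(observed, projected):
    """Mean Euclidean pixel distance between detected and reprojected corners."""
    if not observed or len(observed) != len(projected):
        raise ValueError("need two non-empty point lists of equal length")
    total = sum(
        math.hypot(uo - up, vo - vp)
        for (uo, vo), (up, vp) in zip(observed, projected)
    )
    return total / len(observed)


# Hypothetical board: 9 x 6 corner grid with 25 mm squares (assumed values)
board = checkerboard_points(cols=9, rows=6, square_size=0.025)
print(len(board), board[0], board[-1])
```

In a full pipeline, the same board coordinates would be paired with the corners detected in each of the 20 selected pictures, and the error would be computed by reprojecting the board points with the estimated parameters.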

[0076] 200 images of the space cell robot taken from different angles are selected to build a space cell robot image database. The images in the database are then annotated with feature regions, labeling the parts to be identified. The data containing the feature label frame includes the image information of each pict...
