Image caption generation method based on multi-attention generative adversarial network

An attention-based network technology, applied in biological neural network models, image communication, neural learning methods, etc., that addresses problems such as the failure to capture global information

Embodiment Construction

[0080] The accompanying drawings are for illustrative purposes only and should not be construed as limiting the patent.

[0081] The present invention will be further elaborated below in conjunction with the accompanying drawings and embodiments.

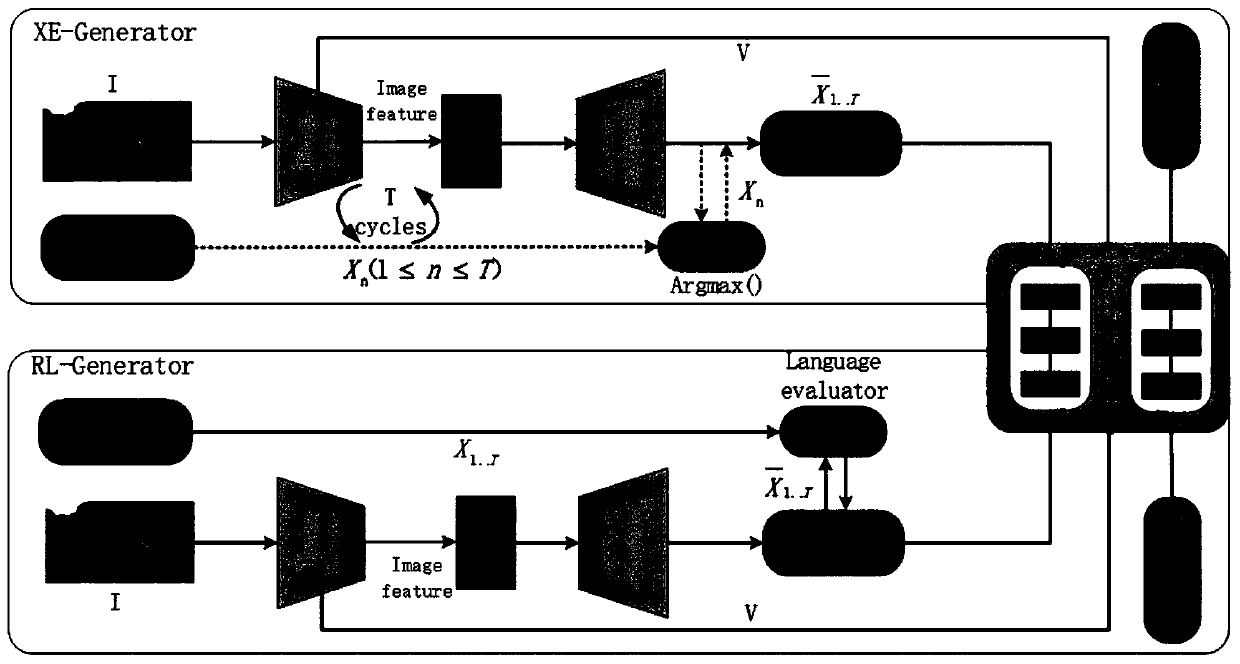

[0082] Figure 1 is a schematic diagram of the multi-attention generative adversarial network architecture. As shown in Figure 1, the multi-attention generative adversarial network includes two multi-attention generators (XE-Generator and RL-Generator) and one multi-attention discriminator. The cross-entropy generator (XE-Generator) and the reinforcement learning generator (RL-Generator) are multi-attention generators with the same structure but different training strategies; both training strategies are applied to the proposed multi-attention generator structure.
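To illustrate how two generators with the same structure can differ only in training strategy, the following is a minimal sketch and not the patent's implementation. It assumes the XE-Generator is trained with a teacher-forced cross-entropy loss and the RL-Generator with a REINFORCE-style policy-gradient loss. The function names `xe_loss` and `rl_loss`, the `baseline` parameter, and the scalar `reward` (which might, for example, come from the discriminator) are hypothetical and are not confirmed by this excerpt.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of vocabulary logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def xe_loss(step_logits, target_ids):
    """Cross-entropy objective (assumed for the XE-Generator):
    mean negative log-likelihood of the ground-truth caption tokens."""
    nll = 0.0
    for logits, target in zip(step_logits, target_ids):
        nll -= math.log(softmax(logits)[target])
    return nll / len(target_ids)


def rl_loss(step_logits, sampled_ids, reward, baseline=0.0):
    """REINFORCE-style surrogate objective (assumed for the RL-Generator):
    -(reward - baseline) * log-probability of the sampled caption.
    The source of `reward` is an assumption, not stated in the text."""
    log_prob = sum(
        math.log(softmax(logits)[token])
        for logits, token in zip(step_logits, sampled_ids)
    )
    return -(reward - baseline) * log_prob


# Toy example: the same generator outputs (two time steps, three-word
# vocabulary) scored under each of the two training strategies.
step_logits = [[2.0, 0.5, 0.1], [0.2, 1.5, 0.3]]
xe = xe_loss(step_logits, target_ids=[0, 1])
rl = rl_loss(step_logits, sampled_ids=[0, 2], reward=0.8, baseline=0.5)
```

In this sketch both losses consume the same per-step logits, which mirrors the statement that the two generators share one structure; only the target (ground-truth tokens versus a reward-weighted sampled caption) changes.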

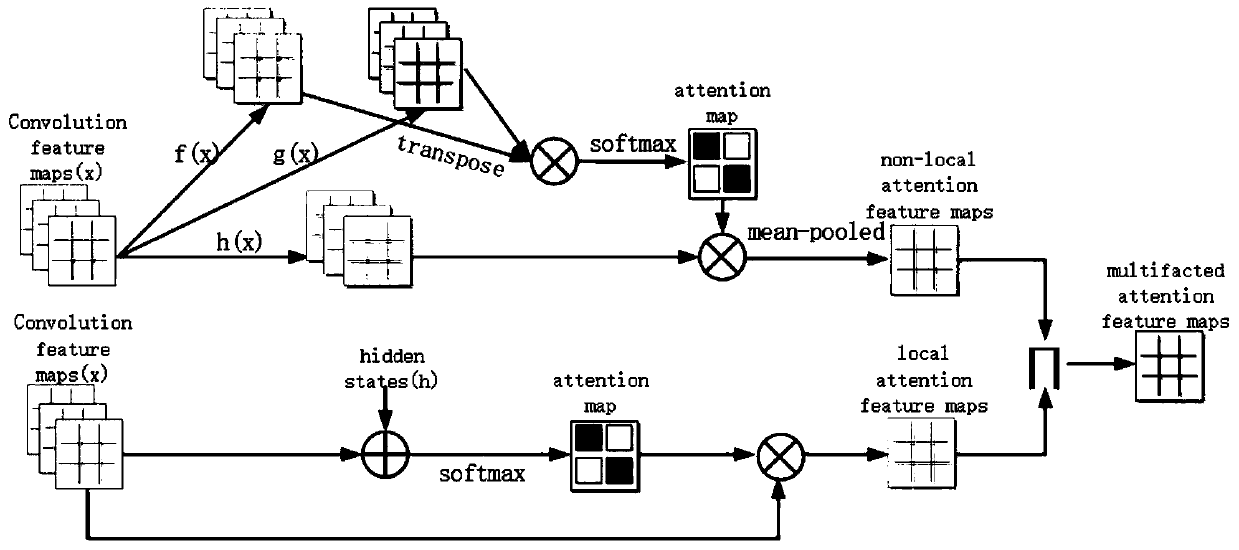

[0083] Figure 2 is a schematic diagram of the multi-attention mechanism network structure. As shown in Figure 2, the top of the figure ...
