Real-time gesture recognition method and system

A real-time gesture recognition method and system, applied in the field of human-computer interaction. It addresses the problems that gesture recognition accuracy is low, that similar gestures are poorly distinguished, and that recognition takes a long time to process, thereby improving the accuracy, speed and robustness of recognition.


Examples

Embodiment 1

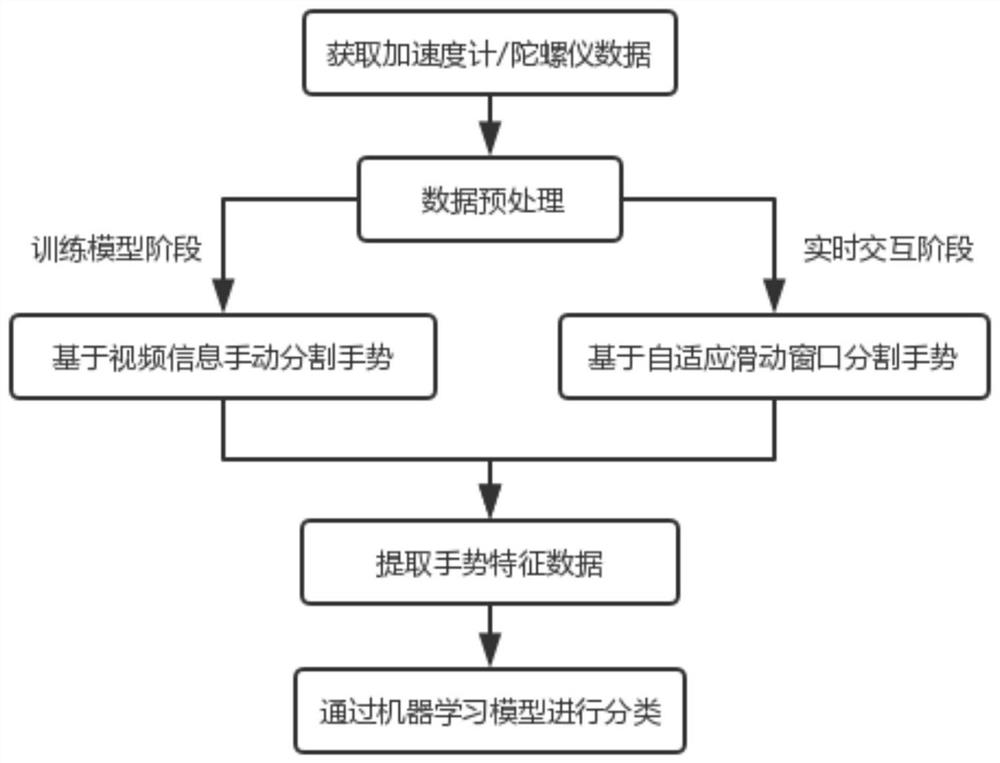

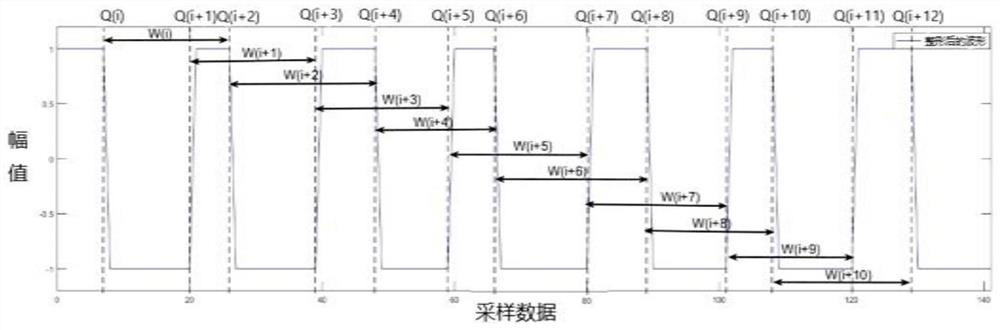

[0053] Referring to Figures 1 to 3, a real-time gesture recognition method mainly includes the following steps:

[0054] 1) Establish a gesture classification model and store it on the server side.

[0055] The main steps of building a gesture classification model are as follows:

[0056] 1.1) Obtain training sample data, the main steps are as follows:

[0057] 1.1.1) Use the motion sensors of the smart terminal to collect motion sensor data from n testers, recorded as the data set $B = [B_1, B_2, \ldots, B_h, \ldots, B_m]$, where $B_h$ represents one group of motion sensor data.

[0058] The motion sensors mainly include a three-axis acceleration sensor and a three-axis gyroscope sensor. The motion sensor data mainly includes three-axis acceleration data, three-axis gyroscope data and motion time.
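The layout of the data set B described above can be sketched in Python as follows. This is only an illustration: the names `MotionSample`, `make_record` and the field names are hypothetical, the ordering of values in each row is an assumption, and the source does not state units or sampling rate.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Assumed row order: (t, ax, ay, az, gx, gy, gz). The source does not fix one.
Row = Tuple[float, float, float, float, float, float, float]


@dataclass(frozen=True)
class MotionSample:
    """One reading from the smart terminal (field names are illustrative)."""
    t: float   # motion time; unit not stated in the source
    ax: float  # three-axis acceleration data
    ay: float
    az: float
    gx: float  # three-axis gyroscope data
    gy: float
    gz: float


def make_record(rows: Sequence[Row]) -> List[MotionSample]:
    """Build one group B_h, assumed here to be a time-ordered list of samples."""
    return [MotionSample(*row) for row in rows]


# The data set B = [B_1, ..., B_m] is then simply a list of such groups.
B = [make_record([(0.0, 0.1, 0.2, 9.8, 0.01, 0.02, 0.03)])]
```

Keeping each group as a list of immutable samples makes it straightforward to attach a gesture label to a whole group later, which is how the labelled training data is used in the steps that follow.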

[0059] 1.1.2) The smart terminal sends the collected motion sensor data to the server via Bluetooth. The server side is a mobile phone or a computer with data storage and processing functio...

Embodiment 2

[0103] The system based on the real-time gesture recognition method mainly includes a smart terminal, a camera and a server.

[0104] The smart terminal has a motion sensor.

[0105] The smart terminal is mainly a smart bracelet, a smart watch or a smart glove.

[0106] The motion sensor collects the motion sensor data of the user in real time and uploads it to the server.

[0107] While gesture sensor data is being collected, the camera records video of the gesture and uploads it to the server.

[0108] Gesture classification models are stored on the server side.

[0109] The server labels the gesture data according to the video information and the motion sensor data.

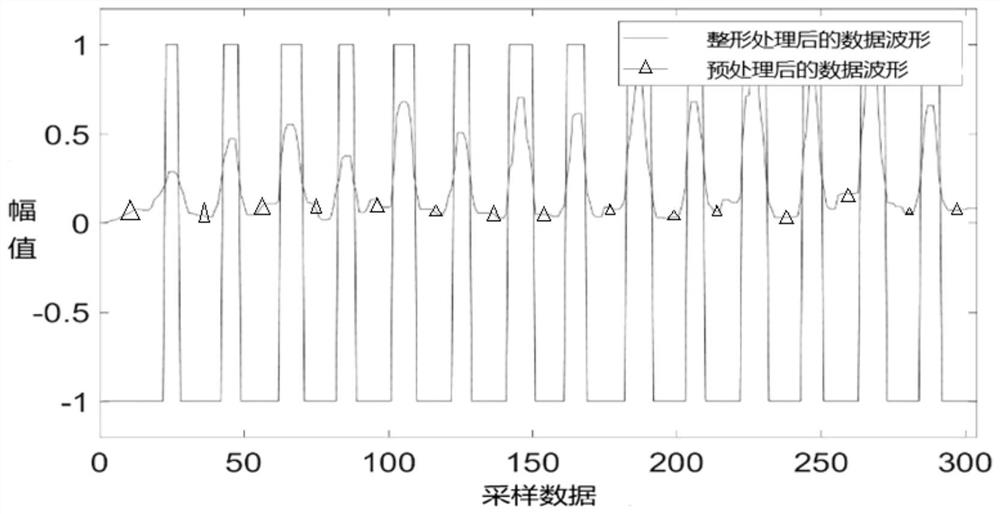

[0110] The server side preprocesses the motion sensor data to obtain the resultant acceleration data a.

[0111] The resultant acceleration a is as follows:

[0112] $a = \sqrt{x^2 + y^2 + z^2}$

[0113] In the formula, x, y and z are the data of the three axes of the three-axis acceleration sensor, respectively.
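As a minimal sketch of this preprocessing step, the snippet below takes the resultant acceleration to be the Euclidean norm of the three axis readings x, y and z, which is the usual definition and is assumed here. The function names `resultant_acceleration` and `resultant_series` are hypothetical and not taken from the patent.

```python
import math
from typing import Iterable, List, Tuple


def resultant_acceleration(x: float, y: float, z: float) -> float:
    """Combine the three axis readings into one magnitude (Euclidean norm, assumed)."""
    return math.sqrt(x * x + y * y + z * z)


def resultant_series(samples: Iterable[Tuple[float, float, float]]) -> List[float]:
    """Apply the same computation to every (x, y, z) reading in a recording."""
    return [resultant_acceleration(x, y, z) for x, y, z in samples]


# Example: two accelerometer readings reduced to their magnitudes.
magnitudes = resultant_series([(3.0, 4.0, 0.0), (1.0, 2.0, 2.0)])
```

Reducing each reading to a single magnitude removes the dependence on how the terminal is oriented on the wrist or hand, at the cost of discarding direction information.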

[0114] ...
