Self-calibration method and device for structured light 3D depth camera

A self-calibration technique for structured-light depth cameras, applicable to image processing, computer vision and artificial intelligence. It addresses problems such as reduced precision, optical-axis offset and optical-axis distortion, and achieves real-time self-correction and improved robustness.

Embodiment Construction

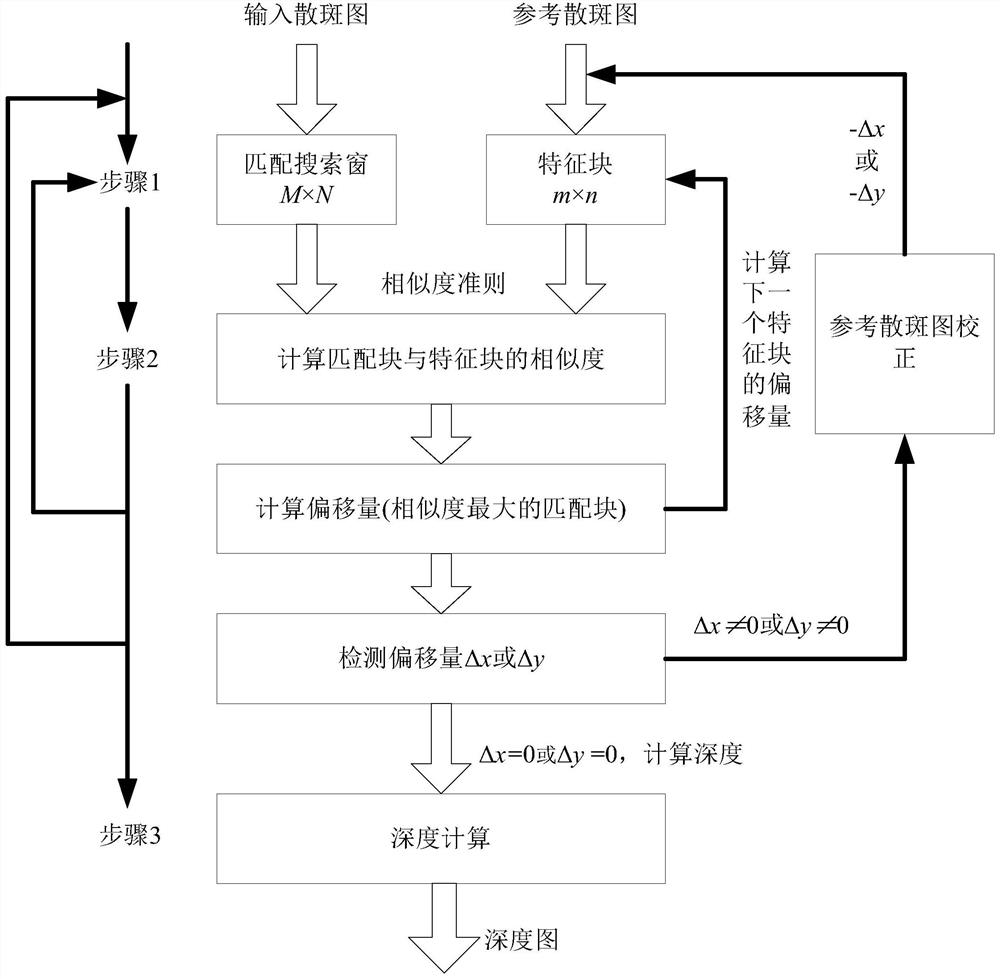

[0057] The self-calibration method and device of the present invention are described in further detail below with reference to Figures 1-8.

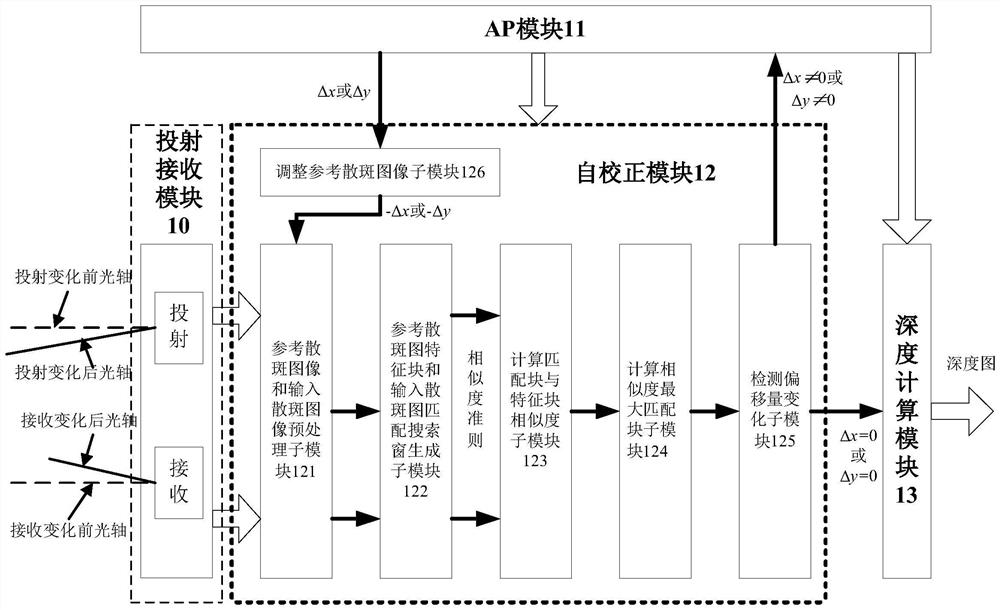

[0058] Figure 1 is a structural block diagram of a self-calibration device for a smartphone structured-light depth camera according to an embodiment of the present invention. As shown in Figure 1, the self-calibration device includes a projection and receiving module 10, an AP module 11, a self-calibration module 12 and a depth calculation module 13.

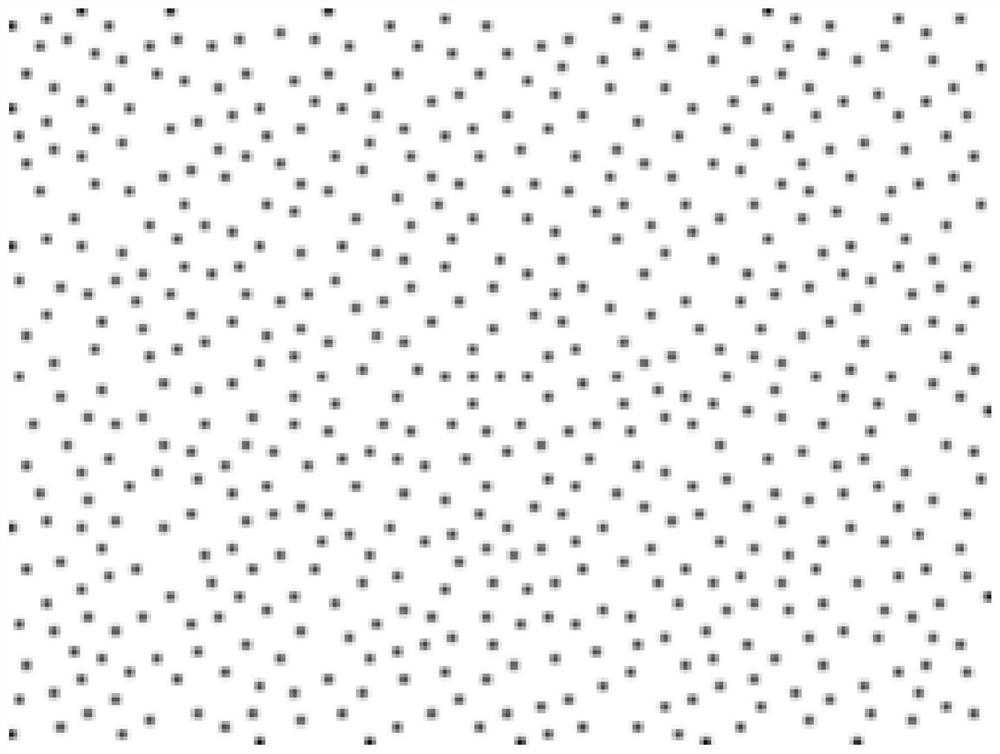

[0059] The projection and receiving module 10 is configured to receive two images: an input speckle image for depth calculation, which is projected by an infrared laser speckle projector and captured by an image receiving sensor, and a reference speckle image.
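The data handed on by module 10 can be pictured as a pair of images. The following is a minimal sketch only: the names `SpeckleImage`, `SpecklePair` and `receive_speckle_pair`, the 8-bit row-major pixel layout, and the requirement that both images share one resolution are all illustrative assumptions and are not taken from the patent text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeckleImage:
    """Grayscale speckle image; 8-bit row-major storage is an assumed layout."""
    width: int
    height: int
    pixels: bytes

    def __post_init__(self) -> None:
        # Reject buffers that cannot correspond to the declared dimensions.
        if self.width <= 0 or self.height <= 0:
            raise ValueError("image dimensions must be positive")
        if len(self.pixels) != self.width * self.height:
            raise ValueError("pixel buffer does not match width * height")


@dataclass(frozen=True)
class SpecklePair:
    """The two images module 10 receives: captured input and reference."""
    input_image: SpeckleImage
    reference_image: SpeckleImage


def receive_speckle_pair(input_image: SpeckleImage,
                         reference_image: SpeckleImage) -> SpecklePair:
    """Hypothetical stand-in for projection and receiving module 10.

    Assumption (not stated in the text): both images have the same size.
    """
    in_size = (input_image.width, input_image.height)
    ref_size = (reference_image.width, reference_image.height)
    if in_size != ref_size:
        raise ValueError("input and reference speckle images differ in size")
    return SpecklePair(input_image, reference_image)
```

In this sketch the pair is immutable, so downstream stages (here, the self-calibration and depth calculation modules) would produce new images rather than modify the received ones; that choice is likewise an assumption made for illustration.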

[0060] The speckle pattern projected by the infrared laser speckle projector can be generated by a vertical-cavity surface-emitting laser (VCSEL) combined with a collimating lens and a diffractive optical element (DOE), or a semiconductor laser LD...
