Neural network operation optimization and data processing method, device, and storage medium

A neural network operation optimization technology, applied in the computer field, that addresses problems such as low computing efficiency and the resulting obstacles to the popularization and application of multi-core computing devices, and achieves the effect of improving computing efficiency.

Examples

Embodiment 1

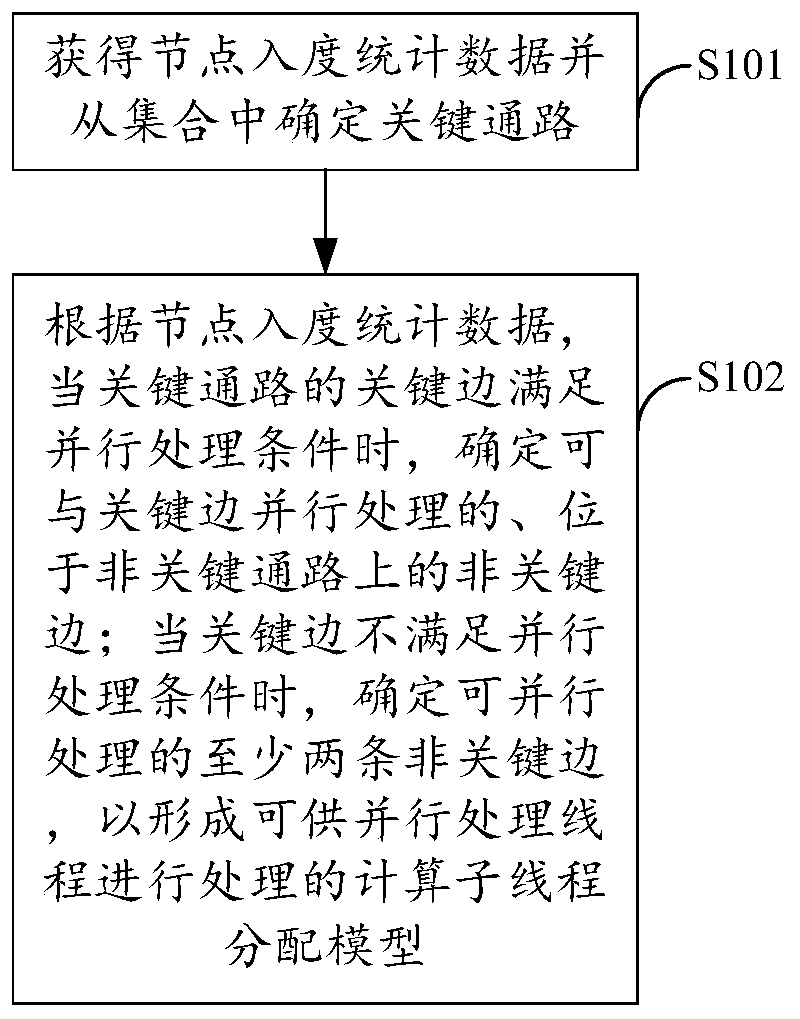

[0036] Figure 1 shows the implementation flow of the neural network operation optimization method provided by the first embodiment of the present invention. For ease of description, only the parts related to this embodiment are shown, detailed as follows:

[0037] The forward graph of the neural network corresponds to a set of at least two paths between the input and the output. Each path uses a feature map as a node and an operator (calculator) as an edge, and each operator corresponds to at least one network layer.
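The graph model described above can be sketched as follows. This is only an illustration under stated assumptions: the node names, operator names, and the helper functions `build_adjacency` and `enumerate_paths` are hypothetical and do not come from the patent text. Feature maps are nodes, operators are edges, and the set of paths is obtained by enumerating every route from the input to the output.

```python
from collections import defaultdict

# Hypothetical Inception-style block (names are assumptions for illustration).
# Each edge is (source feature map, destination feature map, operator name).
EDGES = [
    ("input", "b1", "conv1x1_a"),
    ("input", "b2", "conv1x1_b"),
    ("b2", "b3", "conv3x3"),
    ("b1", "output", "conv1x1_c"),
    ("b3", "output", "conv1x1_d"),
]

def build_adjacency(edges):
    """Map each feature map to its outgoing (next feature map, operator) pairs."""
    adj = defaultdict(list)
    for src, dst, op in edges:
        adj[src].append((dst, op))
    return adj

def enumerate_paths(adj, node, target):
    """Yield every path from node to target as a list of operator names."""
    if node == target:
        yield []
        return
    for nxt, op in adj.get(node, []):
        for rest in enumerate_paths(adj, nxt, target):
            yield [op] + rest

paths = list(enumerate_paths(build_adjacency(EDGES), "input", "output"))
for p in paths:
    print(" -> ".join(p))
```

With this toy graph the set contains two paths, which satisfies the "at least two paths" condition of the multi-branch structure.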

[0038] In this embodiment, the neural network is similar to Inception-Net: the operator connection structure between its input and output consists of multiple paths, forming a multi-branch structure. The more complex the operator combination in this type of neural network, the higher the network's accuracy, and accordingly, parallel acceleratio...

Embodiment 2

[0051] On the basis of Embodiment 1, this embodiment further provides the following content:

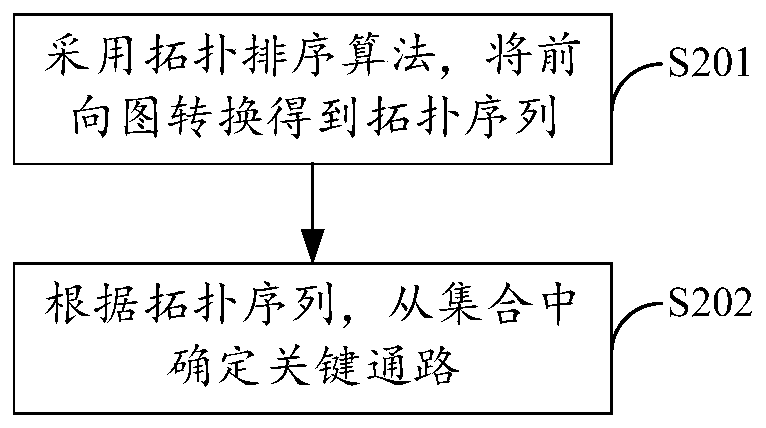

[0052] As shown in Figure 2, in this embodiment, step S101 may mainly include:

[0053] In step S201, a topological sorting algorithm is used to convert the forward graph to obtain a topological sequence.

[0054] In this embodiment, the topological sorting algorithm performs a topological sort on the forward graph, arranging all of its nodes into a linear sequence that satisfies the topological order: for every directed edge (u, v) in the graph, u appears before v in the sequence.
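As a minimal sketch of such a sort, the following uses Kahn's algorithm, one common topological sorting method; the patent text does not say which algorithm is used, so this choice is an assumption. The function name `topological_order` and the example graph are hypothetical.

```python
from collections import deque

def topological_order(nodes, edges):
    """Return nodes in an order where u precedes v for every edge (u, v)."""
    indegree = {n: 0 for n in nodes}
    successors = {n: [] for n in nodes}
    for u, v in edges:
        successors[u].append(v)
        indegree[v] += 1
    # Start from nodes with no incoming edges (here, the network input).
    ready = deque(n for n in nodes if indegree[n] == 0)
    order = []
    while ready:
        u = ready.popleft()
        order.append(u)
        for v in successors[u]:
            indegree[v] -= 1
            if indegree[v] == 0:
                ready.append(v)
    if len(order) != len(nodes):
        raise ValueError("graph has a cycle; no topological order exists")
    return order

# Hypothetical forward graph: feature maps as nodes, operators as edges.
NODES = ["input", "b1", "b2", "b3", "output"]
EDGES = [("input", "b1"), ("input", "b2"), ("b2", "b3"),
         ("b1", "output"), ("b3", "output")]
order = topological_order(NODES, EDGES)
print(order)
```

Because a forward graph is acyclic, the cycle check should never fire in practice; it is kept only as a guard against malformed input.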

[0055] In step S202, the critical path is determined from the set according to the topological sequence.

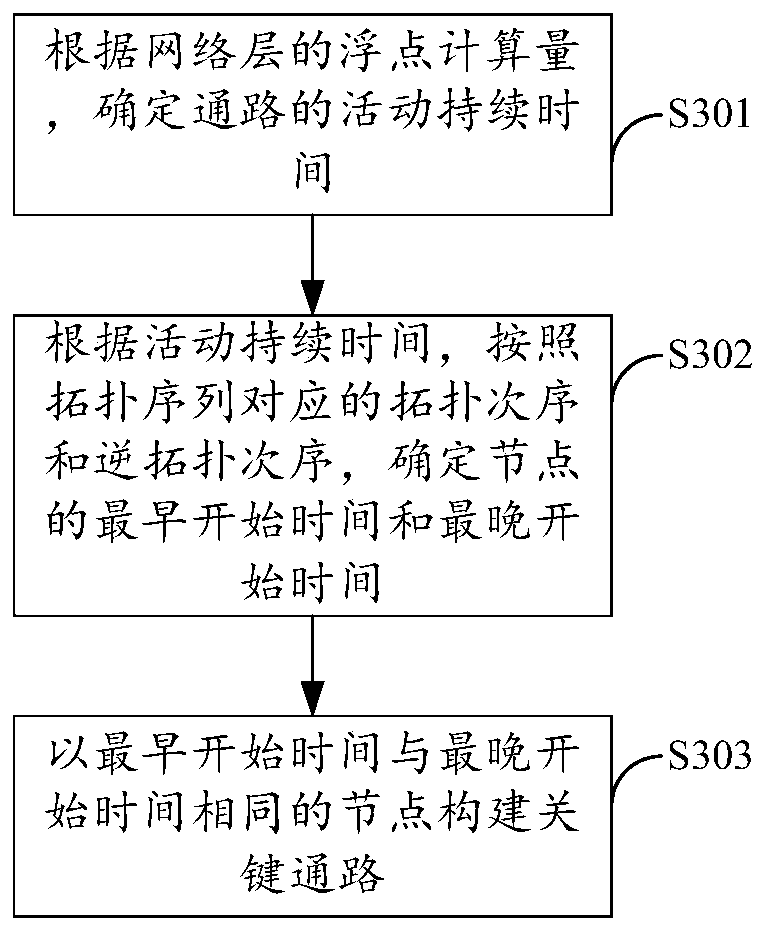

[0056] In this embodiment, step S202 may include the flow shown in Figure 3:

[0057] In step S301, the activity duration of each path is determined according to the number of floating-point operations of its network layers.

[0058] In this embodiment, network layers such ...
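One way to picture steps S202 and S301 together is the sketch below, which uses a floating-point operation count as each operator's duration and takes the longest input-to-output path as the critical path. Everything specific here is an assumption for illustration: the `conv_flops` formula (one multiply-add counted as two operations), the layer shapes, and the function names are not taken from the patent. The topological order comes from the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

def conv_flops(k, c_in, c_out, h_out, w_out):
    """Approximate FLOPs of a k x k convolution (multiply-add counted as 2)."""
    return 2 * k * k * c_in * c_out * h_out * w_out

# Hypothetical forward graph: (source, destination) -> operator FLOPs,
# used here as a stand-in for the activity duration.
DURATION = {
    ("input", "b1"): conv_flops(1, 64, 32, 28, 28),
    ("input", "b2"): conv_flops(1, 64, 16, 28, 28),
    ("b2", "b3"): conv_flops(3, 16, 32, 28, 28),
    ("b1", "output"): conv_flops(1, 32, 32, 28, 28),
    ("b3", "output"): conv_flops(1, 32, 32, 28, 28),
}

def critical_path(duration, source, sink):
    """Return (total duration, node list) of the longest source-to-sink path."""
    preds = {}
    for u, v in duration:
        preds.setdefault(v, set()).add(u)
        preds.setdefault(u, set())
    best = {source: (0, [source])}
    # Visit nodes in topological order so every predecessor is final first.
    for v in TopologicalSorter(preds).static_order():
        for u in preds[v]:
            if u in best:
                cand = best[u][0] + duration[(u, v)]
                if v not in best or cand > best[v][0]:
                    best[v] = (cand, best[u][1] + [v])
    return best[sink]

total, path = critical_path(DURATION, "input", "output")
print(total, path)
```

In this toy graph the branch containing the 3x3 convolution dominates, so it becomes the critical path; a real implementation might use measured latencies rather than FLOP counts.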

Embodiment 3

[0064] On the basis of Embodiment 1 or 2, this embodiment further provides the following content:

[0065] In this embodiment, the number of parallel processing threads is preset as N, and N is a natural number greater than 1. Then, step S102 is specifically:

[0066] When the real-time in-degree of the node at the start of the critical edge is zero, determine the non-critical edges that can be processed in parallel with the critical edge and that lie on at most N-1 non-critical paths. When the real-time in-degree of the node at the start of the critical edge is not zero, determine the non-critical edges that can be processed in parallel and that lie on at least two and at most N non-critical paths. The real-time in-degree is obtained by tracking changes in the in-degree statistics of each node.

[0067] The parallelism of N threads can be regarded as a sliding window on the calculation sub-queue. Whenever a certain thread completes the current calculation sub-tas...
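The in-degree rule above can be approximated with the following round-based simulation. It is a simplification, not the patented method: real threads and the sliding window are replaced by rounds of at most N operators, and the function name `schedule_rounds`, the example graph, and the choice of critical edges are all hypothetical. An operator becomes ready when the real-time in-degree of its start node drops to zero; a ready critical edge takes a slot first, leaving at most N-1 slots for non-critical edges.

```python
def schedule_rounds(edges, critical, n_threads):
    """Group operators into rounds of at most n_threads, critical edge first.

    edges: list of (src, dst) operator edges.
    critical: set of edges lying on the critical path.
    """
    indegree = {}
    for u, v in edges:
        indegree.setdefault(u, 0)
        indegree[v] = indegree.get(v, 0) + 1
    pending = list(edges)
    rounds = []
    while pending:
        # Ready means the start node has no unfinished incoming operators.
        ready = [e for e in pending if indegree[e[0]] == 0]
        if not ready:
            raise ValueError("no runnable operator; graph may contain a cycle")
        # Stable sort: critical edges first, non-critical edges fill the rest.
        ready.sort(key=lambda e: e not in critical)
        batch = ready[:n_threads]
        for e in batch:
            pending.remove(e)
            indegree[e[1]] -= 1  # real-time in-degree update
        rounds.append(batch)
    return rounds

EDGES = [("input", "b1"), ("input", "b2"), ("b2", "b3"),
         ("b1", "output"), ("b3", "output")]
CRITICAL = {("input", "b2"), ("b2", "b3"), ("b3", "output")}
rounds = schedule_rounds(EDGES, CRITICAL, n_threads=2)
for i, batch in enumerate(rounds, 1):
    print(i, batch)
```

With N = 2, each of the first two rounds pairs the critical edge with one non-critical edge, and the last round runs the remaining critical edge alone. A true sliding window would instead start the next ready operator as soon as any single thread finishes.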
