Virtual try-on method and device based on posture guidance

A posture-guided virtual try-on technology in the field of virtual try-on. It can solve problems such as mismatch between the input and output spaces, inability to guarantee, and stiff collar parts covering the human body, and it achieves the effect of maintaining color and texture characteristics.

Examples

Embodiment 1

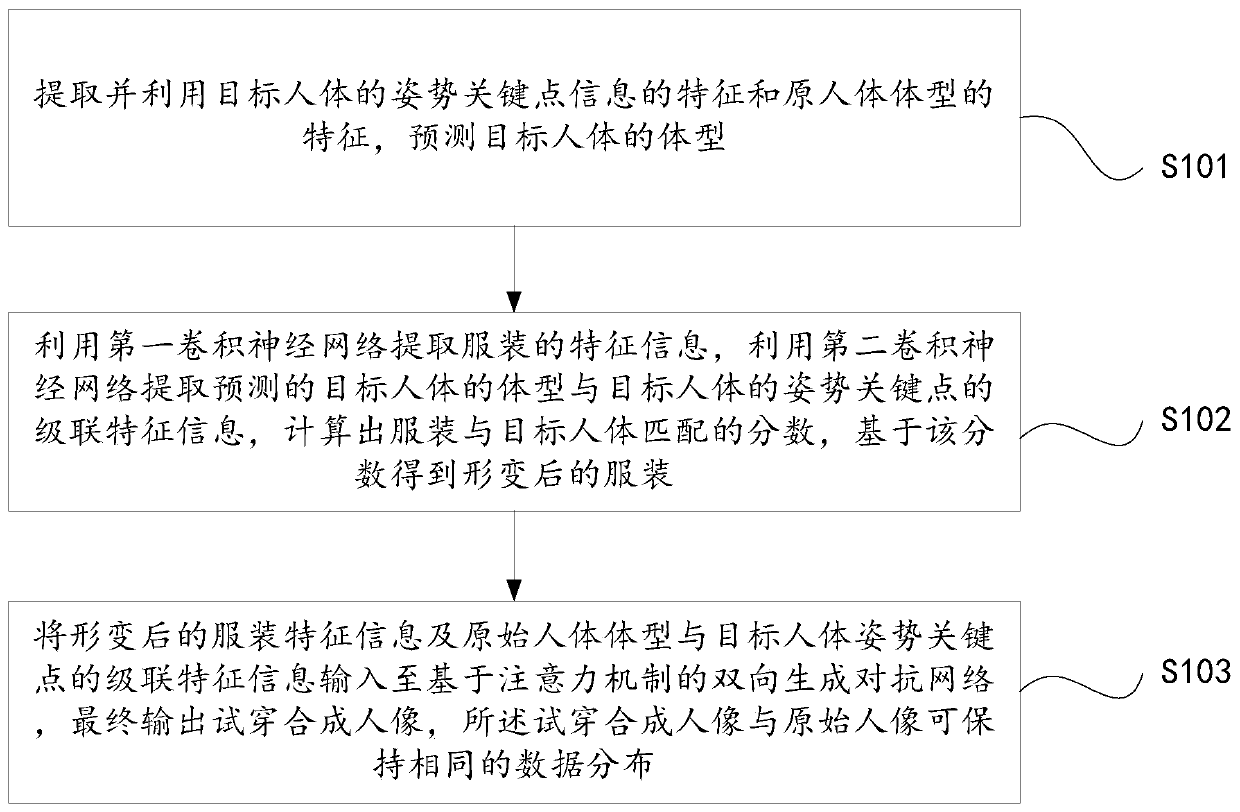

[0037] As shown in Figure 1, the posture-guided virtual try-on method of this embodiment includes:

[0038] S101: Extract features from the pose key point information of the target human body and from the original body shape, and use them to predict the body shape of the target human body.

[0039] In a specific implementation, predicting the body shape of the target human body in step S101 includes:

[0040] S1011: Use the target body pose key point information and the original body shape information to construct a target body shape prediction network:

[0041]

[0042] Here, the network output represents the target body shape aligned with the target human pose key points $P_B$; $S_A$ is a mask representing the original body shape; and $\Theta_p$ denotes the network parameters.
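The formula of paragraph [0041] did not survive extraction. Based only on the definitions given, it plausibly has the form below, where $\hat{S}_B$ (the predicted target body shape) and $G$ (the prediction network) are hypothetical symbols introduced here, and $\oplus$ denotes the channel-wise concatenation ("cascade") of the two inputs mentioned in paragraph [0043]:

$$\hat{S}_B = G\left(S_A \oplus P_B;\ \Theta_p\right)$$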

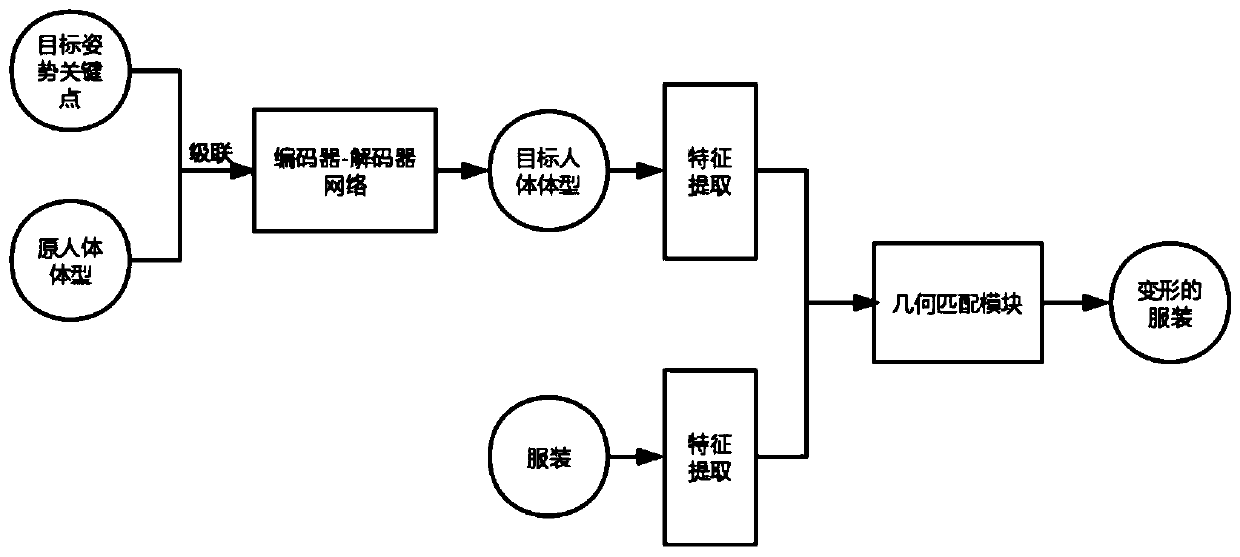

[0043] In this embodiment, the target human body shape prediction network is built with an encoder-decoder structure and takes the concatenation of $S_A$ and $P_B$ as input. Specifically, a...
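The text does not say how the pose key points $P_B$ are encoded before they are concatenated with $S_A$. A common choice in pose-guided image generation is one Gaussian heatmap per key point; assuming that encoding, the sketch below builds the encoder-decoder input by stacking the original body-shape mask and the heatmaps as channels. `keypoint_heatmap`, `build_shape_network_input`, and `sigma` are hypothetical names, and plain nested lists stand in for tensors so the example has no dependencies.

```python
import math
from typing import List, Optional, Tuple

Grid = List[List[float]]  # one H x W channel


def keypoint_heatmap(
    point: Optional[Tuple[int, int]], height: int, width: int, sigma: float = 2.0
) -> Grid:
    """Render one pose key point (row, col) as a Gaussian heatmap (assumed encoding).

    A missing key point (None) yields an all-zero channel.
    """
    if point is None:
        return [[0.0] * width for _ in range(height)]
    r0, c0 = point
    return [
        [math.exp(-((r - r0) ** 2 + (c - c0) ** 2) / (2 * sigma ** 2)) for c in range(width)]
        for r in range(height)
    ]


def build_shape_network_input(
    source_mask: Grid, target_keypoints: List[Optional[Tuple[int, int]]], sigma: float = 2.0
) -> List[Grid]:
    """Concatenate S_A (1 channel) with P_B (one heatmap per key point) along the channel axis."""
    height, width = len(source_mask), len(source_mask[0])
    channels = [[row[:] for row in source_mask]]  # channel 0: copy of the original body-shape mask S_A
    for point in target_keypoints:  # channels 1..K: target pose heatmaps P_B
        channels.append(keypoint_heatmap(point, height, width, sigma))
    return channels


# 8 x 8 toy mask: a vertical band standing in for the original body shape S_A.
mask = [[1.0 if 2 <= c <= 5 else 0.0 for c in range(8)] for _ in range(8)]
# Three target key points; the second one is missing (e.g. occluded).
x = build_shape_network_input(mask, [(1, 4), None, (6, 3)])
print(len(x), len(x[0]), len(x[0][0]))  # 4 8 8
```

The resulting channel stack is what an encoder-decoder would consume; in a real framework the same step is a single channel-axis concatenation of two tensors.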

Embodiment 2

[0097] The posture-guided virtual try-on device of this embodiment includes:

[0098] (1) A target human body shape prediction module, which extracts features from the pose key point information of the target human body and from the original body shape and uses them to predict the body shape of the target human body;

[0099] Specifically, the target human body shape prediction module uses an encoder-decoder structure to construct the target human body shape prediction network.

[0100] (2) A clothing and target human body matching module, which uses a first convolutional neural network to extract feature information from the clothing, uses a second convolutional neural network to extract feature information from the concatenation of the predicted target body shape and the target human pose key points, calculates the matching score between the clothing and the target human body, and obtains the deformed cl...
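The excerpt does not specify how the matching score is computed. One established option in try-on work (for example, the correlation layer in CP-VTON's geometric matching module) is to L2-normalize the feature vector at every location of both feature maps and take dot products between all pairs of locations. The sketch below assumes that formulation; feature maps are flattened to lists of per-location vectors, and `matching_scores` and `best_match` are hypothetical helpers. `best_match` (a per-location argmax) is only a crude stand-in for the step, cut off in the text, that turns the scores into deformed clothing.

```python
import math
from typing import List

Vector = List[float]  # feature vector at one spatial location


def l2_normalize(v: Vector, eps: float = 1e-8) -> Vector:
    """Scale a feature vector to unit length (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]


def matching_scores(cloth_feats: List[Vector], body_feats: List[Vector]) -> List[List[float]]:
    """scores[i][j] = cosine similarity between clothing location i and body location j.

    cloth_feats stands in for the first CNN's output on the clothing image;
    body_feats stands in for the second CNN's output on the concatenated
    predicted body shape + pose key point input.
    """
    cloth = [l2_normalize(v) for v in cloth_feats]
    body = [l2_normalize(v) for v in body_feats]
    return [[sum(a * b for a, b in zip(c, p)) for p in body] for c in cloth]


def best_match(scores: List[List[float]]) -> List[int]:
    """For each clothing location, the index of the highest-scoring body location."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


# Toy 2-D features: two clothing locations, three body locations.
cloth = [[1.0, 0.0], [0.0, 1.0]]
body = [[0.0, 2.0], [3.0, 0.0], [1.0, 1.0]]
scores = matching_scores(cloth, body)
print(best_match(scores))  # [1, 0]
```

Normalizing before the dot product makes the score depend on feature direction rather than magnitude, so strong activations on one side cannot dominate the match by scale alone.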

Embodiment 3

[0108] This embodiment provides a computer-readable storage medium storing a computer program that, when executed by a processor, implements the steps of the posture-guided virtual try-on method shown in Figure 1.

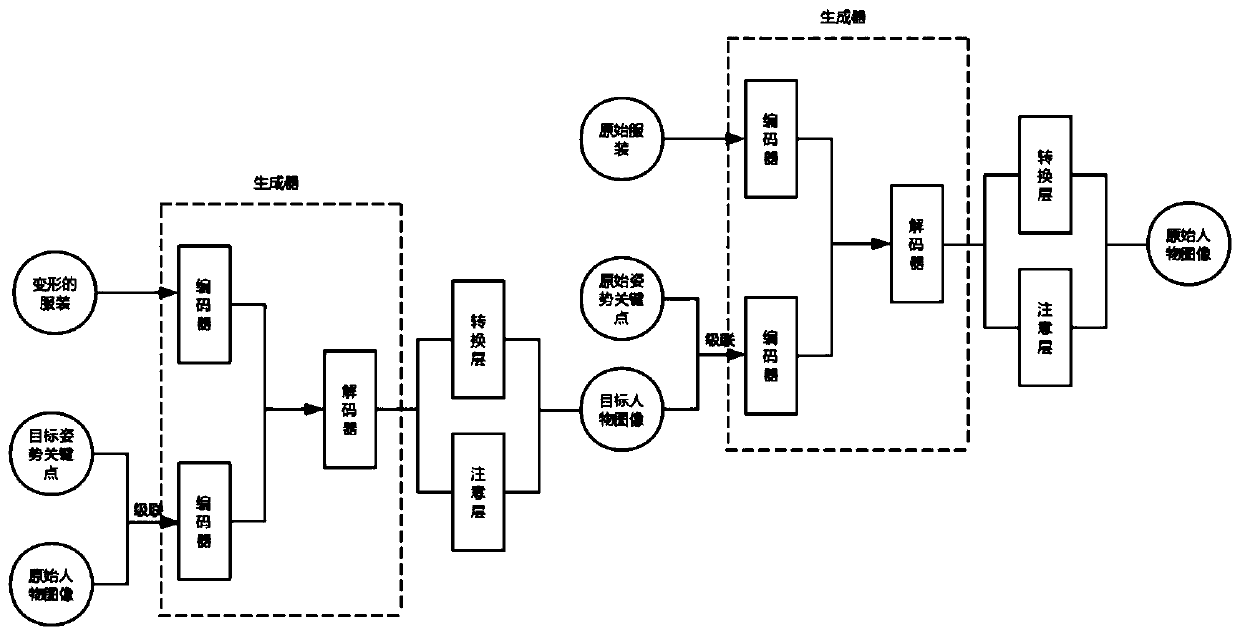

[0109] This embodiment addresses the online virtual try-on task based on 2D images. To generate more realistic try-on images, this embodiment uses the first convolutional neural network to extract feature information from the clothing and the second convolutional neural network to extract feature information from the concatenation of the predicted target body shape and the target human pose key points, calculates the matching score between the clothing and the target body, and obtains the deformed clothing based on that score. The concatenated feature information of the human pose key points is input into the bidirectional generative adversarial network based on the attention mechani...
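Paragraph [0109] is cut off before it describes the attention mechanism, so the following is not the patent's bidirectional GAN. It sketches one common way attention enters try-on generators: a per-pixel weight, squashed by a sigmoid, decides where the output copies the deformed clothing and where it keeps the generated person image. Copying pixels directly from the deformed clothing is one way such a design can preserve clothing color and texture. `attention_compose` and the toy images are hypothetical, and nested lists stand in for tensors.

```python
import math
from typing import List

Pixel = List[float]        # RGB values in [0, 1]
Image = List[List[Pixel]]  # H x W x 3
Map = List[List[float]]    # H x W


def sigmoid(x: float) -> float:
    """Numerically stable logistic function (no overflow for large |x|)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def attention_compose(warped_cloth: Image, rendered_person: Image, attention_logits: Map) -> Image:
    """Per-pixel blend: weight a copies the deformed clothing, 1 - a keeps the generated person."""
    out: Image = []
    for r, logit_row in enumerate(attention_logits):
        out_row: List[Pixel] = []
        for c, logit in enumerate(logit_row):
            a = sigmoid(logit)  # attention weight in [0, 1]
            out_row.append([a * wc + (1.0 - a) * rp
                            for wc, rp in zip(warped_cloth[r][c], rendered_person[r][c])])
        out.append(out_row)
    return out


# 1 x 2 toy image: red deformed clothing, blue generated person.
cloth = [[[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]]
person = [[[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]]
result = attention_compose(cloth, person, [[0.0, 20.0]])
print(result[0][0])  # [0.5, 0.0, 0.5]
```

A logit of 0 gives an even blend, while a large positive logit makes the pixel essentially a copy of the deformed clothing; in a trained network these logits would be predicted rather than hand-set.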
