Relative navigation method for augmented reality with eye movement interaction

This augmented reality relative navigation technology is applied in navigation, user/computer interaction input/output, and surveying and navigation. It addresses problems such as insecurity, the lack of a visual interaction process, and insufficient consideration of the user's cognition and conversion capabilities. Its effects are a higher guidance success rate, reduced distraction, and an improved navigation experience.

Embodiment Construction

[0052] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and embodiments. The specific embodiments described here serve only to explain the present invention, not to limit it.

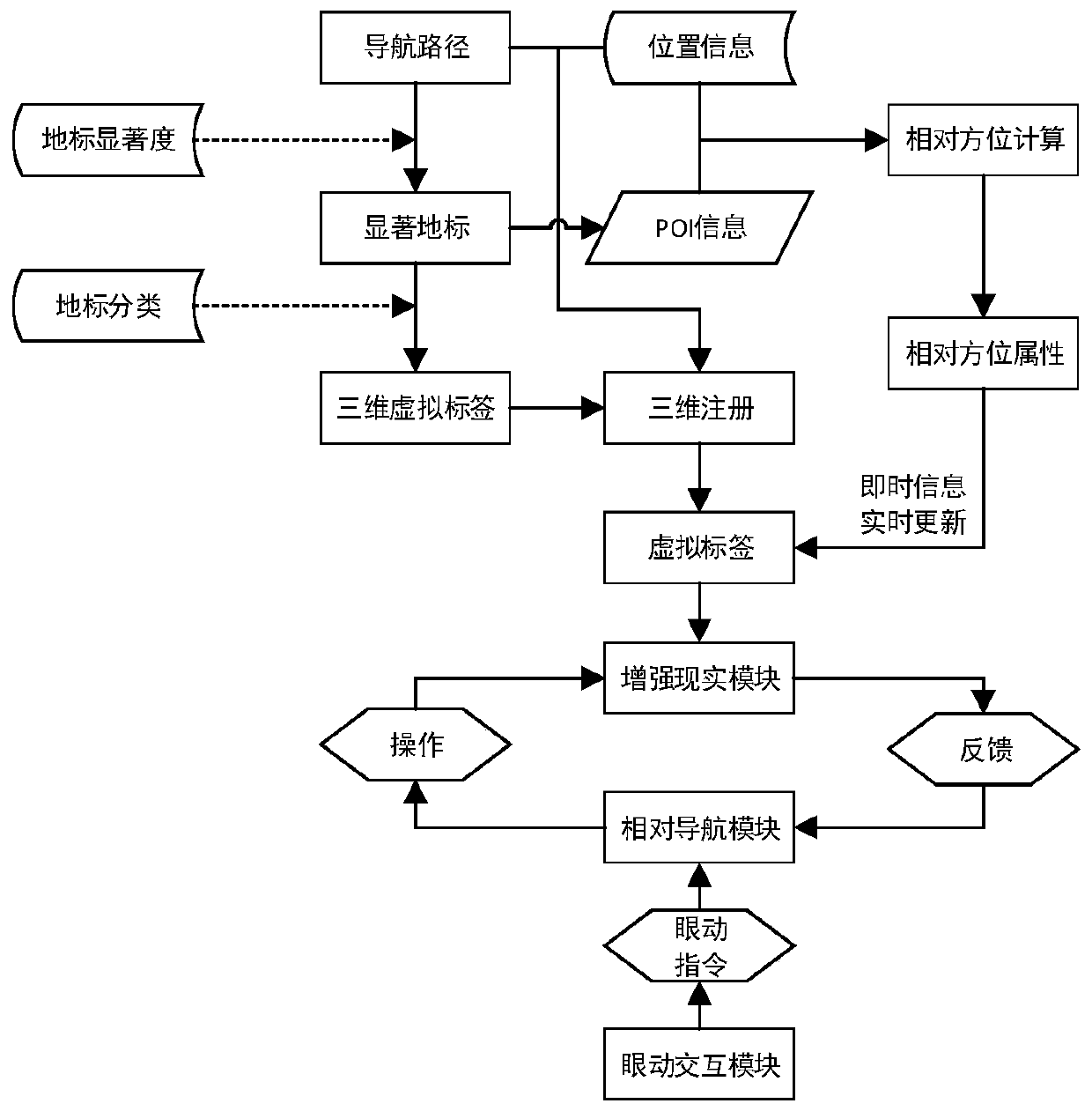

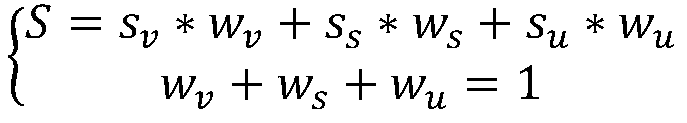

[0053] The present invention mainly realizes dynamic projection and visual dynamic relative guidance of virtual labels for prominent landmarks, based on augmented reality and a relative relationship model, and realizes intelligent human-computer visual interaction between a wearable eye tracker and a navigation system. Specifically, it includes the generation of virtual labels for prominent landmarks and the calculation of real-time relative relationship attributes; based on augmented reality technology, virtual labels of prominent landmarks (including relative relationship attribute information) and ...
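The text visible here does not define the relative relationship model, so the following is only an illustrative sketch of what "real-time relative relationship attributes" for a landmark label could look like. It assumes, hypothetically, that the attributes are the distance from the user to the landmark and the landmark's direction relative to the user's heading. The names `RelativeAttributes` and `relative_attributes`, the four-way direction labels, and the 45° sector boundaries are all assumptions for illustration, not taken from the patent.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


@dataclass
class RelativeAttributes:
    """Hypothetical attribute set attached to a landmark's virtual label."""
    distance_m: float            # great-circle distance from user to landmark
    relative_bearing_deg: float  # in [-180, 180); positive means to the right
    direction: str               # coarse label: ahead / right / behind / left


def relative_attributes(user_lat, user_lon, heading_deg, lm_lat, lm_lon):
    """Compute distance and heading-relative direction of a landmark.

    Coordinates are in decimal degrees; heading_deg is the user's facing
    direction, measured clockwise from north.
    """
    phi1, phi2 = math.radians(user_lat), math.radians(lm_lat)
    dphi = phi2 - phi1
    dlmb = math.radians(lm_lon - user_lon)

    # Haversine distance.
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial bearing from user to landmark, clockwise from north.
    y = math.sin(dlmb) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    bearing = math.degrees(math.atan2(y, x)) % 360.0

    # Bearing relative to the user's heading, wrapped into [-180, 180).
    rel = (bearing - heading_deg + 180.0) % 360.0 - 180.0

    # Assumed 90-degree sectors centred on each direction.
    if abs(rel) <= 45.0:
        direction = "ahead"
    elif 45.0 < rel < 135.0:
        direction = "right"
    elif -135.0 < rel < -45.0:
        direction = "left"
    else:
        direction = "behind"

    return RelativeAttributes(distance, rel, direction)
```

Recomputing these attributes whenever the user's position or heading changes would keep a projected label's relative information current. How the invention actually updates and renders the labels is not shown in the text available here.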
